Sage Updated Install (5/1/2025)

The installation is far easier than before and no longer requires compiling from the Visual Studio x64 command prompt.

Install CUDA 12.8

Uninstall any old Triton and install Triton version 3.3. AN OLD VERSION WILL CAUSE FAILURE.

pip install triton-windows

Install Sage Attention

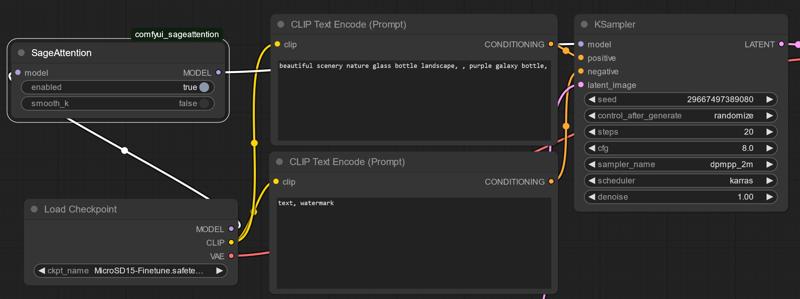

pip install sageattention

You can use the launch command below; however, I prefer the old Sage Attention node by blepping, so I can use cross attention and switch to Sage Attention when needed.

--use-sage-attention

To use the sage attention node simply copy it to your "custom_nodes" folder.

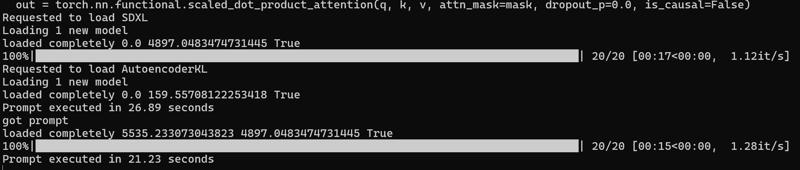
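Before launching ComfyUI, a quick sanity check can confirm that the packages from the steps above are actually present in the active VENV. This is a minimal sketch and not part of the original guide: it assumes the pip distribution names used above (triton-windows and sageattention; on Linux the Triton package is named triton instead), treats 3.3 as the minimum Triton version, and the helper names are made up for illustration.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of a pip package, or None."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def version_tuple(version):
    """Turn '3.3.0.post19' into (3, 3, 0); stops at the first non-numeric part."""
    parts = []
    for piece in version.split("."):
        if not piece.isdigit():
            break
        parts.append(int(piece))
    return tuple(parts)


def meets_minimum(version, minimum=(3, 3)):
    """True if a version is present and not older than the minimum."""
    return version is not None and version_tuple(version) >= minimum


# Report what is installed in the Python environment running this script.
for name in ("triton-windows", "sageattention"):
    print(name, "->", installed_version(name) or "not installed")

triton_version = installed_version("triton-windows")
print("Triton OK" if meets_minimum(triton_version) else "Triton missing or older than 3.3")
```

Run it with the same Python that launches ComfyUI, otherwise it reports on the wrong environment.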

Ampere cards and newer (30xx, 40xx, and 50xx) will see it/s improvements, usually 5% or greater.

Blackwell series (50xx) cards will likely see the most benefit.
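If you are not sure which generation your card belongs to, the CUDA compute capability tells you. The sketch below is an illustration under my own assumptions: the mapping (8.x for Ampere, 8.9 for Ada/40xx, 12.x for consumer Blackwell/50xx) is not from the guide above, the function name is hypothetical, and detection only runs if PyTorch with CUDA is installed.

```python
def gpu_generation(capability):
    """Map a CUDA compute capability (major, minor) to a rough generation label."""
    major, minor = capability
    if major >= 12:
        return "Blackwell (50xx)"
    if major > 8:
        return "newer than Ampere"
    if (major, minor) == (8, 9):
        return "Ada (40xx)"
    if major == 8:
        return "Ampere (30xx)"
    return "pre-Ampere"


# PyTorch is optional here; without it we only have the lookup function.
try:
    import torch
except ImportError:
    torch = None

if torch is not None and torch.cuda.is_available():
    cap = torch.cuda.get_device_capability(0)
    print(torch.cuda.get_device_name(0), cap, "->", gpu_generation(cap))
else:
    print("PyTorch with CUDA not available; cannot detect the GPU here.")
```

Anything reported as pre-Ampere falls outside the cards this guide expects to benefit.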

Deprecated Below

If you are on Windows, you will need to install Triton for Windows. I have attached a test Python file; run it to make sure Triton is working.

Triton requires that you launch the x64 Native Tools Command Prompt for Visual Studio rather than PowerShell or cmd. On Windows you will need to download the wheel file from the Triton downloads and install it locally. Linux users can just use the pip install command. (Don't forget to activate your VENV.)

pip install triton-3.0.0-cp310-cp310-win_amd64.whl

Windows users also need to manually copy vcruntime140.dll and vcruntime140_1.dll to the same folder as python.exe, in the Scripts folder of your VENV. (Both DLLs are part of the https://aka.ms/vs/17/release/vc_redist.x64.exe distribution.)
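The DLL copy can also be scripted. This is a minimal sketch rather than a required step: it assumes the redistributable has placed both DLLs in the Windows System32 folder, and the helper name is hypothetical. Run it with the VENV's own python.exe so the files land next to it.

```python
import os
import shutil
import sys
from pathlib import Path

RUNTIME_DLLS = ("vcruntime140.dll", "vcruntime140_1.dll")


def copy_runtime_dlls(source_dir, target_dir, names=RUNTIME_DLLS):
    """Copy each DLL found in source_dir into target_dir; return the names copied."""
    source_dir, target_dir = Path(source_dir), Path(target_dir)
    copied = []
    for name in names:
        src = source_dir / name
        if src.is_file():
            shutil.copy2(src, target_dir / name)
            copied.append(name)
    return copied


if __name__ == "__main__" and sys.platform == "win32":
    # Assumption: the DLLs live in System32 under the Windows root folder.
    system_dir = Path(os.environ.get("SystemRoot", r"C:\Windows")) / "System32"
    # The folder holding the running python.exe (Scripts, inside an active VENV).
    venv_scripts = Path(sys.executable).parent
    print("Copied:", copy_runtime_dlls(system_dir, venv_scripts))
```

If the printed list is missing a file, install the redistributable first and run the script again.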

Both Linux and Windows users - install Sage via:

pip install sageattention

Copy the Sage Plugin to the custom_nodes folder of Comfy (Not a subfolder)

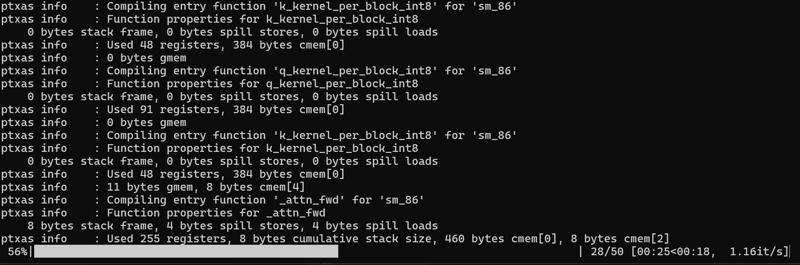

If you did everything right, Sage will run some code the first time you go to generate an image in PONY/SDXL, writing the files for 8-bit attention. This is a one-time process and only takes a minute or so.

Troubleshooting

Windows/Linux

Did you test Triton before launch?

Did you remember to connect the Sage plugin?

Linux Users

Did you use

pip install triton sageattention

Windows Users

Did you launch via the x64 VC command line and activate your VENV?

Did you copy the .dll files from the Windows system folder to the Scripts folder of your VENV (next to python.exe)?

BIG BOLD - WINDOWS USERS: FORGETTING AND LAUNCHING VIA CMD INSTEAD OF THE X64 PROMPT, EVEN AFTER THE C++ HAS BEEN GENERATED, MAY CAUSE SAGE NOT TO FUNCTION CORRECTLY

Seed to Seed image comparison XFORMERS vs SAGE

On this seed, the seed-to-seed difference was a line in the background and very minor differences in the bottles, perceptible only when directly comparing the two images.

While I am at it, did you know I am working on a finetune that can generate FLUX-sized images out of SD 1.5? (18 seconds on SD 1.5 vs 22 seconds on SDXL for a 1024x1024 image on my RTX 3050.)

Quick note: while I did get it working on my RTX 3050, Sage will only fully benefit cards with native 8-bit support. I knew this before installing, but I wanted the option to test 8-bit attention, and I did see a small benefit, as noted.

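The creator of the plugin mentions it does not work with SD 1.5. That seemed odd to me, because I had the embed dim as 768 and the number of heads as 16, which I took to give a head dim of 64. Checking the arithmetic, 768 / 16 is actually 48; a head dim of 64 would need 12 heads. (Either way, his plugin worked and mine did not.)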
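The head size quoted above is just the embed dim divided by the number of heads, so it is easy to check. A minimal sketch: the set of supported head sizes below is my own assumption for illustration and is not something the plugin author states, so check the SageAttention README for the real list.

```python
def head_dim(embed_dim, num_heads):
    """Per-head dimension; the embed dim must split evenly across the heads."""
    if embed_dim % num_heads != 0:
        raise ValueError(f"{embed_dim} is not divisible by {num_heads} heads")
    return embed_dim // num_heads


# Assumption for illustration only: head sizes the attention kernel accepts.
ASSUMED_SUPPORTED_HEAD_DIMS = {64, 96, 128}

for heads in (12, 16):
    dim = head_dim(768, heads)
    verdict = "in" if dim in ASSUMED_SUPPORTED_HEAD_DIMS else "not in"
    print(f"768 / {heads} heads = {dim} ({verdict} the assumed supported set)")
```

With an embed dim of 768, only the 12-head split gives 64 per head; 16 heads give 48.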

Tyler mentioned that this was a must-have plugin for running the LLM video generation software locally, which is how I came to know of Sage.

Attribution

Comfyui_sageattention is by blepping

Triton Test is copied from Triton