Understanding Concept Geometry: From Theory to Better Prompts

Paper here: https://arxiv.org/html/2410.19750v1

UPDATE 10/31:

Further testing indicates this works best for LoRA-less output at high CFG (7.5-9.5). It tends to make those CFG values actually usable, even on models that are less suited for high CFG, like Illustrious XL.

More updates to come.

Author's Note

This article was developed with assistance from Claude AI to help organize and articulate these concepts. The MIT paper is published research (an arXiv preprint at the time of writing), but my applications of it to Stable Diffusion prompt engineering are still experimental and need further testing. My observations about different syntax patterns (:: vs :) and prompt structures are based on initial results, and I'll be updating this article soon with practical image examples to demonstrate these techniques in action. I encourage community members to test these methods and share their findings as we explore these concepts together.

Also, most of my testing has been conducted on the Illustrious XL model, both because it is new and because it currently seems to need a fairly specific prompt structure. I found that this concept-based geometric approach produced better results than the conventional "quality tags first" structure (perfect quality, best quality, masterpiece, etc.) that's commonly recommended. This suggests that working with the model's natural concept organization might be more effective than traditional prompt patterns.

More image examples and practical demonstrations coming soon.

Introduction

Recently, I've been diving deep into a fascinating MIT research paper titled "The Geometry of Concepts: Sparse Autoencoder Feature Structure." While studying this paper and experimenting with prompts, I've discovered some interesting connections that have significantly improved my prompt engineering. Before I share these insights, let's establish some fundamental concepts that will help everything make more sense.

Understanding the Basics

### Latent Space: The Universe of Concepts

Think of latent space as an enormous multidimensional universe where every possible image exists somewhere. When you use Stable Diffusion, you're essentially:

1. Converting your prompt into coordinates in this space

2. Finding a location that matches your description

3. Moving through this space during the diffusion process to create your image

It's like having an infinite art gallery where every possible artwork exists - your prompt is just giving directions to find the one you want.

### How Stable Diffusion Understands Prompts

When you write a prompt, Stable Diffusion:

1. Breaks it into tokens (words and concepts it understands)

2. Maps these tokens to points in latent space

3. Uses these points as guides for generating your image

Understanding this process is crucial because it explains why prompt structure and weights matter so much - you're essentially giving the AI more precise coordinates in this concept space.
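To make the three steps above concrete, here is a minimal Python sketch. It is a toy under loud assumptions: the real pipeline uses a learned tokenizer and a learned text encoder (CLIP in Stable Diffusion), while `tokenize`, `embed`, and `encode_prompt` below are hypothetical stand-ins that split on commas and spaces and hash each token into a few numbers. It only shows the shape of the idea, that text becomes coordinates.

```python
import hashlib


def tokenize(prompt: str) -> list[str]:
    """Toy tokenizer: lowercase, then split on commas and whitespace."""
    return [tok for tok in prompt.lower().replace(",", " ").split() if tok]


def embed(token: str, dims: int = 4) -> list[float]:
    """Toy embedding: hash the token into `dims` floats in [-1, 1).

    A real text encoder learns these vectors; hashing is only a placeholder.
    """
    digest = hashlib.sha256(token.encode("utf-8")).digest()
    return [digest[i] / 128.0 - 1.0 for i in range(dims)]


def encode_prompt(prompt: str) -> list[tuple[str, list[float]]]:
    """Pair every token with its (toy) coordinates."""
    return [(tok, embed(tok)) for tok in tokenize(prompt)]


for token, vector in encode_prompt("watercolor portrait, castle"):
    print(token, [round(x, 2) for x in vector])
```

The same token always lands on the same coordinates, which is the property that makes prompt wording and order worth experimenting with.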

The MIT Paper's Key Findings

The researchers studied the concepts (features) that sparse autoencoders extract from a large language model and found structure at three distinct scales:

1. Crystal Scale (Small; the paper's "atomic" scale): Concepts form geometric patterns like parallelograms

2. Brain Scale (Medium): Features cluster into functional "lobes" like a brain

3. Galaxy Scale (Large): The overall cloud of features is not evenly spread out; its shape follows a power law

Let me explore each and show how they apply to prompting.

Important note for all AI model usage

These concepts should apply across model families, since text, image, video, and music models all represent what they learn in some kind of latent space. Based on current research, organizing concepts this way seems to be the most efficient way for a model to structure its weights and the relationships between concepts, so any model operating in latent space would likely follow similar patterns.

This is somewhat of a heady conclusion/speculation on my part, but as a general rule of thumb in science, especially where energy and efficiency are concerned:

When systems are not given rigid guidelines by humans for how to organize themselves, they tend to organize in whatever way lets them maintain their current state most efficiently, whether that's the way gases distribute across a container, reactions between different chemicals, the way that star systems distribute their planets, etc.

I believe that this concept geometry finding is just another example of that idea.

Organizing concepts into regular geometric shapes would let a model traverse its latent space toward the desired output with the least energy and time.

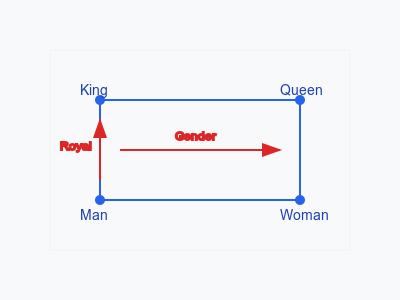

### 1. Crystal Structure: How Concepts Combine

Imagine concepts as points in space. The researchers found that related concepts often form geometric patterns.

Parallelogram Structures

- Concepts form four-point patterns where relationships between points are preserved

- These structures maintain consistent "semantic distances"

- Quality of these relationships improves when removing "distractor" dimensions

Function Vectors

- The difference between two concepts creates a "function vector"

- These vectors can be applied to other concepts

- Multiple vectors can combine to create new concepts

Key Discovery: Distractor Features

- Some dimensions (like word length in text) can mask these relationships

The classic example is (man, woman, king, queen), forming a parallelogram where:

- The difference between "man" and "woman" represents the concept of "gender"

- The difference between "man" and "king" represents the concept of "royalty"
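The parallelogram can be checked with a few lines of arithmetic. This is a sketch with hand-picked 2-D toy vectors (an assumption purely for illustration; real features live in thousands of dimensions and only approximately line up this neatly). The names `vectors`, `sub`, `add`, and `nearest` are hypothetical.

```python
# Hand-picked toy coordinates: axis 0 stands for "gender", axis 1 for "royalty".
vectors = {
    "man": (0.0, 0.0),
    "woman": (1.0, 0.0),
    "king": (0.0, 1.0),
    "queen": (1.0, 1.0),
}


def sub(a, b):
    """Component-wise a - b."""
    return tuple(x - y for x, y in zip(a, b))


def add(a, b):
    """Component-wise a + b."""
    return tuple(x + y for x, y in zip(a, b))


def nearest(point):
    """Name of the stored concept closest to `point` (squared distance)."""
    return min(
        vectors,
        key=lambda name: sum((p - q) ** 2 for p, q in zip(point, vectors[name])),
    )


gender = sub(vectors["woman"], vectors["man"])    # function vector for "gender"
royalty = sub(vectors["king"], vectors["man"])    # function vector for "royalty"

# Applying the "gender" vector to "king" should land on the fourth corner.
print(nearest(add(vectors["king"], gender)))  # queen

# Opposite sides of a parallelogram are equal vectors.
print(sub(vectors["queen"], vectors["king"]) == gender)  # True
```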

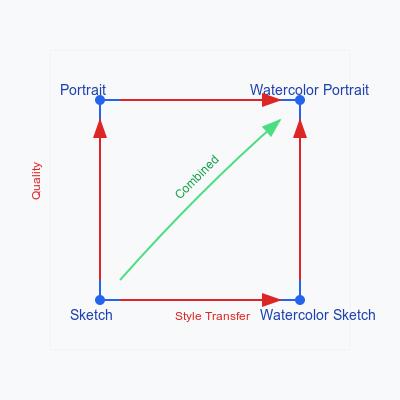

How This Applies to Stable Diffusion

In art generation, I've observed similar patterns:

portrait + watercolor_style = watercolor_portrait

This explains why, in my findings, certain prompt structures worked well:

masterpiece portrait::1.4, [artist] style::1.2

The double-colon syntax (::) seems to create stronger geometric relationships between concepts, which might explain why I've found it often produces better results than single-colon syntax (:) for core concepts.

### 2. Brain-Like Organization: Functional Groups

The research found that features cluster into specialized "lobes" that handle specific types of content. This is similar to how a human brain has different regions for different functions.

At this intermediate scale, the researchers discovered:

Functional Lobes

- Features cluster into specialized regions

- Each region handles specific types of content

- Strong spatial modularity similar to brain regions

Co-occurrence Patterns

- Features that fire together cluster together

- These clusters show strong statistical significance

- Different clustering metrics reveal different functional relationships

Cross-Lobe Interactions

- Lobes communicate in structured ways

- Information flows between functional groups

- Patterns mirror neural network architecture
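"Features that fire together cluster together" can be sketched in a few lines of Python. Everything here is an assumption for illustration: the feature names and the documents they fire on are made up, Jaccard similarity is just one standard co-occurrence measure, and the greedy grouping is a deliberately simple stand-in for a proper clustering algorithm.

```python
# Hypothetical data: which document ids each feature fires on.
fires_on = {
    "math_a": {0, 1, 2},
    "math_b": {0, 1, 3},
    "code_a": {4, 5, 6},
    "code_b": {4, 5, 7},
}


def jaccard(a: set, b: set) -> float:
    """Shared documents divided by all documents either feature fires on."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def group_features(fires_on: dict, threshold: float = 0.4) -> list[list[str]]:
    """Greedy grouping: join the first group containing a similar-enough member."""
    groups: list[list[str]] = []
    for name, docs in fires_on.items():
        for group in groups:
            if any(jaccard(docs, fires_on[m]) >= threshold for m in group):
                group.append(name)
                break
        else:
            groups.append([name])  # no similar group found, start a new "lobe"
    return groups


print(group_features(fires_on))  # [['math_a', 'math_b'], ['code_a', 'code_b']]
```

Swapping `jaccard` for a different similarity measure can change which features end up together, which is the point of the "different clustering metrics" bullet above.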

Application to Prompting

This finding suggests we should group related concepts together in prompts. Here's the structure I've found most effective:

# Artistic/Style Group

(masterpiece::1.4), (digital art::1.3), (artist style::1.2)

# Physical/Subject Group

(character details:1.1), (pose:1.0)

# Environment Group

(background elements:0.9), (lighting:0.8)

# Technical Group

(detailed:0.8), (high quality:0.8)

### 3. Galaxy-Scale Structure: Hierarchical Organization

The research found that feature importance follows a power-law distribution, with some features being dramatically more important than others.

To elaborate slightly, at this largest scale, the research revealed:

Power Law Distribution

- Feature importance follows a mathematical power law

- Creates a "fractal cucumber" shape of concept relationships in high-dimensional space

- Middle layers show steepest power law slopes

Layer Dependencies

- Different layers show different organizational patterns

- Middle layers act as information bottlenecks

- Pattern steepness varies systematically across layers

Clustering Patterns

- Strong hierarchical organization

- Systematic variation across model layers

- Complex interaction between 3+ scales
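A power law shows up as a straight line on a log-log plot, so "steepness" is just the slope of that line. The sketch below fits that slope with ordinary least squares in plain Python. The input values are synthetic (generated to follow an exact power law), not numbers from the paper, and `power_law_slope` is a hypothetical helper name.

```python
import math


def power_law_slope(values: list[float]) -> float:
    """Least-squares slope of log(value) against log(rank), ranks starting at 1.

    Needs at least two positive values.
    """
    ordered = sorted(values, reverse=True)
    xs = [math.log(rank) for rank in range(1, len(ordered) + 1)]
    ys = [math.log(v) for v in ordered]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    return covariance / variance


# Synthetic spectrum: value = 100 / rank^1.5, so the fitted slope should be -1.5.
synthetic = [100.0 / rank ** 1.5 for rank in range(1, 51)]
print(round(power_law_slope(synthetic), 3))  # -1.5
```

A more negative slope means importance is concentrated in fewer directions, which is what "steepest slope" refers to.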

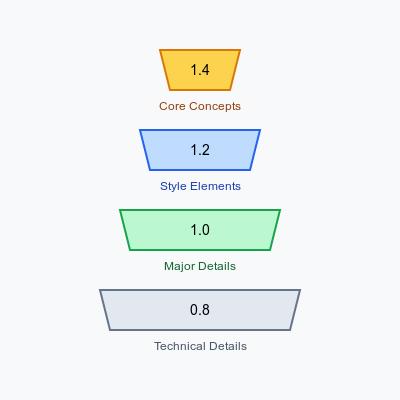

Prompting Implementation

This (speculatively) suggests using decreasing weights for less important elements:

(Again, speculation. I find the reverse order of this weighting works better in some cases)

# Primary Elements (1.3-1.4)

masterpiece portrait::1.4

# Style Elements (1.1-1.2)

artist style::1.2

# Details (0.8-1.0)

(specific details:0.9)

My Discoveries About Syntax

More than anything, I believe the parenthetical grouping of similar concepts and types of concepts, in proper order, is the most important factor in getting what you really want out of any given prompt.

However, through extensive testing, I've found that mixing syntax types can be additionally effective:

1. Double-colon (::) for primary elements:

masterpiece portrait::1.4, artist style::1.2

2. Single-colon (:) for details:

(specific details:1.0), (background elements:0.9)

This mixed approach seems to leverage different types of concept relationships:

- :: for strong geometric relationships (crystal scale)

- : for smoother blending of details (brain scale)
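When comparing the two syntaxes it helps to see exactly which text each weight is attached to. Here is a small Python sketch that pulls a mixed prompt apart as written. It makes no claim about how any particular UI interprets `::` or `:`; that depends on the tool. `split_top_level` and `parse_segment` are hypothetical helper names.

```python
import re

# Matches "text::1.4", "text:1.1", "(text::1.1)" and "(text:1.1)".
WEIGHTED = re.compile(
    r"^\(?\s*(?P<text>.*?)\s*(?P<sep>::|:)\s*(?P<weight>\d+(?:\.\d+)?)\s*\)?$"
)


def split_top_level(prompt: str) -> list[str]:
    """Split on commas that are not inside parentheses."""
    parts, current, depth = [], [], 0
    for ch in prompt:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth = max(depth - 1, 0)
        if ch == "," and depth == 0:
            parts.append("".join(current).strip())
            current = []
        else:
            current.append(ch)
    parts.append("".join(current).strip())
    return [p for p in parts if p]


def parse_segment(segment: str):
    """Return (text, separator, weight); unweighted text gets (text, None, 1.0)."""
    match = WEIGHTED.match(segment)
    if match is None:
        return segment.strip("() "), None, 1.0
    return match["text"], match["sep"], float(match["weight"])


prompt = "masterpiece portrait::1.4, (blue eyes, confident smile:1.0), castle"
for segment in split_top_level(prompt):
    print(parse_segment(segment))
```

Running this over two prompt variants makes it easy to confirm they differ only in the separator, which keeps a `::` vs `:` comparison fair.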

Examples

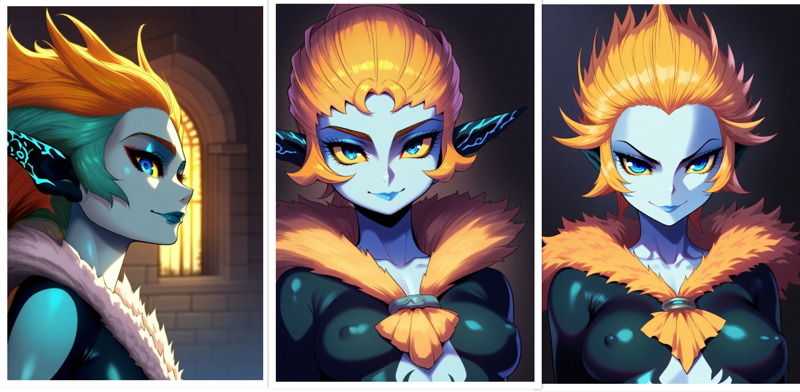

Grouping/no grouping + colons

First, with parentheses grouping of concepts:

masterpiece portrait of Princess Midna, Yoshitaka Amano style, ethereal fantasy art, (elegant feminine figure), (blue eyes, confident smile), (form-fitting blue textured bodysuit), (regal fur trim), (majestic castle background), (dramatic outdoor lighting), (highly detailed, perfect lighting)

Second, with no parentheses grouping but with double colons:

masterpiece portrait of Princess Midna, Yoshitaka Amano style, ethereal fantasy art, elegant feminine figure::1.1, blue eyes, confident smile::1.1, form-fitting blue textured bodysuit::1.1, regal fur trim::1.1, majestic castle background::1.1, dramatic outdoor lighting::1.1, highly detailed, perfect lighting::1.1

Third, with no parentheses grouping and with single colons:

masterpiece portrait of Princess Midna, Yoshitaka Amano style, ethereal fantasy art, elegant feminine figure:1.1, blue eyes, confident smile:1.1, form-fitting blue textured bodysuit:1.1, regal fur trim:1.1, majestic castle background:1.1, dramatic outdoor lighting:1.1, highly detailed, perfect lighting:1.1

Here, the first probably adheres to the prompt the most in terms of the castle background, but the second arguably has the best detail, styling, and composition of the three images.

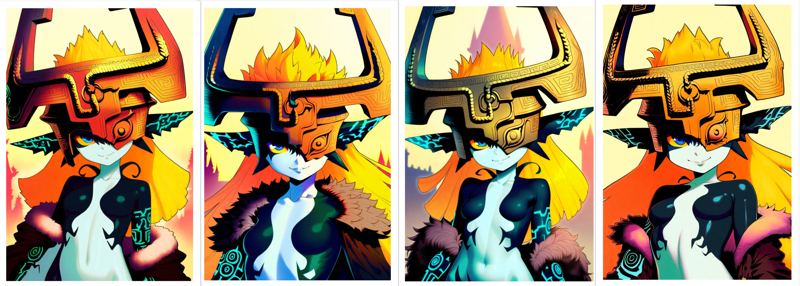

Double vs Single Colon

First with double colons, second with single colons.

Notice that overall detail, prompt adherence, styling, composition, and shading are better, and artifacts are fewer, in the images from the first prompt:

masterpiece portrait of Princess Midna::1.4, Yoshitaka Amano style::1.2, ethereal fantasy art::1.1, (elegant feminine figure::1.1), (blue eyes, confident smile::1.0), (form-fitting blue textured bodysuit::1.0), (regal fur trim::0.9), (majestic castle background::0.9), (dramatic outdoor lighting::0.8), (highly detailed, perfect lighting::0.8)

masterpiece portrait of Princess Midna:1.4, Yoshitaka Amano style:1.2, ethereal fantasy art:1.1, (elegant feminine figure:1.1), (blue eyes, confident smile:1.0), (form-fitting blue textured bodysuit:1.0), (regal fur trim:0.9), (majestic castle background:0.9), (dramatic outdoor lighting:0.8), (highly detailed, perfect lighting:0.8)

Colon syntax mixing + order of mixing:

In order: double colon only, single colon only, double first then single, single first then double.

In my opinion, number three (double colon then single) looks the best with regard to adherence, style, and details.

Prompts 1-4:

masterpiece::1.4, masterpiece portrait of Princess Midna, Yoshitaka Amano style, ethereal fantasy art, (elegant feminine figure::1.1), (blue eyes, confident smile::1.1), (form-fitting blue textured bodysuit::1.1), (regal fur trim::1.1), (majestic castle background::1.1), (dramatic outdoor lighting::1.1), (highly detailed, perfect lighting::1.1)

masterpiece:1.4, masterpiece portrait of Princess Midna, Yoshitaka Amano style, ethereal fantasy art, (elegant feminine figure:1.1), (blue eyes, confident smile:1.1), (form-fitting blue textured bodysuit:1.1), (regal fur trim:1.1), (majestic castle background:1.1), (dramatic outdoor lighting:1.1), (highly detailed, perfect lighting:1.1)

masterpiece::1.4, masterpiece portrait of Princess Midna, Yoshitaka Amano style, ethereal fantasy art, (elegant feminine figure:1.1), (blue eyes, confident smile:1.1), (form-fitting blue textured bodysuit:1.1), (regal fur trim:1.1), (majestic castle background:1.1), (dramatic outdoor lighting:1.1), (highly detailed, perfect lighting:1.1)

masterpiece:1.4, masterpiece portrait of Princess Midna, Yoshitaka Amano style, ethereal fantasy art, (elegant feminine figure::1.1), (blue eyes, confident smile::1.1), (form-fitting blue textured bodysuit::1.1), (regal fur trim::1.1), (majestic castle background::1.1), (dramatic outdoor lighting::1.1), (highly detailed, perfect lighting::1.1)

This suggests that a combination of parentheses grouping of related concepts, in the correct order, with the mixed double+single colon syntax, is the best route for both adherence and overall picture quality.

Weight Guidelines I've Developed

Based on the paper's hierarchical findings and my testing:

1. Core Concepts: 1.3-1.4

- Main subject

- Quality markers

- Primary style

2. Major Attributes: 1.1-1.2

- Artist style

- Key features

- Important details

3. Supporting Elements: 0.8-1.0

- Background

- Lighting

- Technical details

(Again, speculation. I find the reverse order of this weighting works better in some cases)
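The three tiers above can be turned into a small helper that spreads weights across a tier's range. This is only a sketch of the guideline as listed: the tier names, the even spacing, and the `reverse` switch (for the cases where the reverse order works better) are assumptions added for illustration.

```python
# Weight ranges copied from the guidelines above; the keys are hypothetical labels.
TIERS = {
    "core": (1.3, 1.4),
    "major": (1.1, 1.2),
    "supporting": (0.8, 1.0),
}


def tier_weights(tier: str, count: int, reverse: bool = False) -> list[float]:
    """Spread `count` weights evenly from the top of the tier's range to the bottom.

    With reverse=True the order is flipped, so weights increase instead.
    """
    low, high = TIERS[tier]
    if count == 1:
        return [high]
    step = (high - low) / (count - 1)
    weights = [round(high - i * step, 2) for i in range(count)]
    return weights[::-1] if reverse else weights


print(tier_weights("supporting", 3))                # [1.0, 0.9, 0.8]
print(tier_weights("supporting", 3, reverse=True))  # [0.8, 0.9, 1.0]
```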

Practical Templates

Here's the template that has worked best in my testing so far:

# Core Concept (Crystal Structure)

masterpiece portrait of [subject]::1.4,

[artist] style::1.2,

[art style] art::1.1,

# Details (Functional Groups)

(physical description:1.1),

(facial features, expression:1.0),

(clothing details:1.0),

(accessories:0.9),

(background setting:0.9),

(lighting style:0.8),

(technical details:0.8)

Example Implementation

masterpiece portrait of ethereal sorceress::1.4,

Alphonse Mucha style::1.2,

art nouveau fantasy::1.1,

(slender ethereal figure:1.1),

(silver eyes, serene expression:1.0),

(flowing iridescent robes:1.0),

(crystal staff:0.9),

(magical library background:0.9),

(mystical lighting:0.8),

(highly detailed, magical effects:0.8)

Advanced Applications

For more complex images, I've developed these specialized templates:

Character Portrait Template

masterpiece portrait::1.4,

[artist] style::1.2,

[art style] art::1.1,

(physical description:1.1),

(facial features, expression:1.0),

(clothing details:1.0),

(pose, gesture:0.9),

(background:0.9),

(lighting:0.8),

(technical details:0.8)

Landscape Template

masterpiece landscape::1.4,

[artist] style::1.2,

[mood] atmosphere::1.1,

(terrain features:1.1),

(weather conditions:1.0),

(environmental details:1.0),

(time of day:0.9),

(lighting effects:0.8),

(technical quality:0.8)
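If you reuse these templates a lot, filling them in by hand gets tedious. Below is a minimal Python sketch that renders the landscape template from a list of slots. The slot names (`artist`, `mood`, `terrain`, and so on) and the `render` helper are hypothetical; they simply mirror the bracketed and descriptive placeholders above, with `::` for the first three lines and parenthesized `:` for the rest.

```python
# (text with slots, syntax, weight), in the same order as the landscape template.
LANDSCAPE_TEMPLATE = [
    ("masterpiece landscape", "::", 1.4),
    ("{artist} style", "::", 1.2),
    ("{mood} atmosphere", "::", 1.1),
    ("{terrain}", ":", 1.1),
    ("{weather}", ":", 1.0),
    ("{details}", ":", 1.0),
    ("{time_of_day}", ":", 0.9),
    ("{lighting}", ":", 0.8),
    ("{quality}", ":", 0.8),
]


def render(template, **slots) -> str:
    """Fill each slot and apply the double-colon or parenthesized single-colon form."""
    lines = []
    for text, syntax, weight in template:
        filled = text.format(**slots)
        if syntax == "::":
            lines.append(f"{filled}::{weight}")
        else:
            lines.append(f"({filled}:{weight})")
    return ",\n".join(lines)


prompt = render(
    LANDSCAPE_TEMPLATE,
    artist="Alphonse Mucha",
    mood="serene",
    terrain="rolling hills",
    weather="light fog",
    details="wildflowers, winding river",
    time_of_day="golden hour",
    lighting="soft backlighting",
    quality="highly detailed",
)
print(prompt)
```

Keeping the weights in one list also makes it easy to try the reversed ordering by editing a single place.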

Troubleshooting Common Issues (speculative!)

Based on my testing, here are solutions to common problems:

1. Style Not Strong Enough

- Increase style weight to 1.3-1.4

- Move style terms earlier in prompt

- Group style terms together

2. Lost Detail

- Increase detail weights to 1.0-1.1

- Add specific detail descriptors

- Group related details together

3. Inconsistent Results

- Use more specific core concepts

- Maintain consistent weight hierarchy

- Group related concepts together

Conclusion

Understanding how Stable Diffusion organizes concepts in its latent space has transformed how I write prompts. By aligning our instructions with the model's natural organization of concepts, we can achieve more consistent and higher-quality results.

The key principles I've found most valuable are:

- Group related concepts together

- Use appropriate weights for different elements

- Mix syntax types strategically

- Maintain hierarchical structure

I encourage you to experiment with these templates and principles. Every model and style might need slight adjustments, but these fundamental concepts should provide a strong foundation for developing your own effective prompts.

---

This article is based on research from "The Geometry of Concepts: Sparse Autoencoder Feature Structure" (MIT, 2024) and my personal experimentation with Stable Diffusion prompting. Feel free to share your results and experiences with these techniques.
