This is a quick and simple follow-up to my previous post about UMAP on pooled text encoder embeddings for an Illustrious model (I use my finesse merge).

The interactive link I'll share today:

Uses a hopefully better dataset for artist names

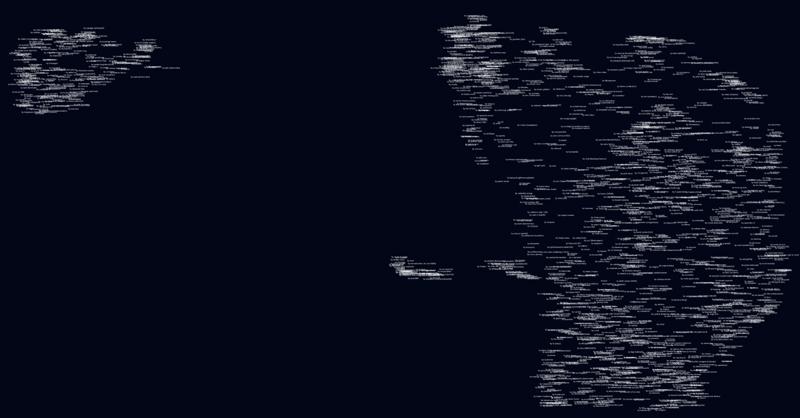

Allows switching between the UMAP projection of the pooled text embeddings and the visual embeddings from Nomic

Here's the link for you to play around with:

https://satisfying-trusted-pruner.glitch.me/artist-emb-images-2x.html

Updated version 2, with search and copy text: https://satisfying-trusted-pruner.glitch.me/artists-emb-2048-v2.html

WARNING: This includes very, very NSFW images and subject matter influenced by the artist tags. You are advised not to view this link unless you fully understand what you might see if you ask a model to generate whatever relates to random Danbooru artists.

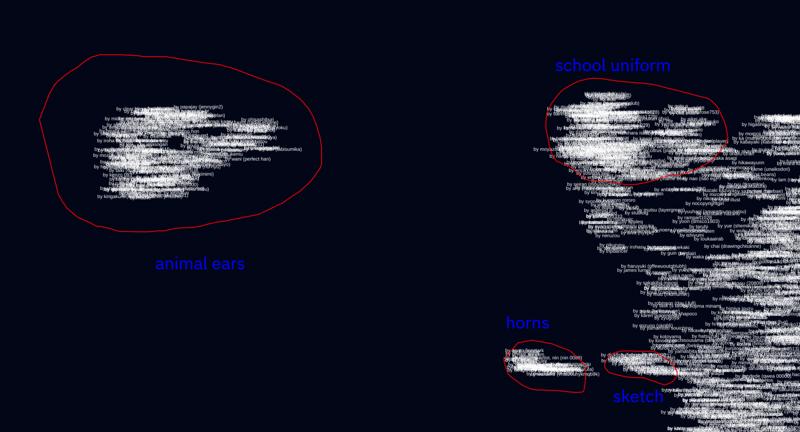

Even with a better dataset for artist names, I still don't believe the clustering of the pooled text embeddings is very informative as is. The vision embeddings from Nomic, on the other hand, obviously are.

We can switch between the two embedding types with the Switch Emb button.

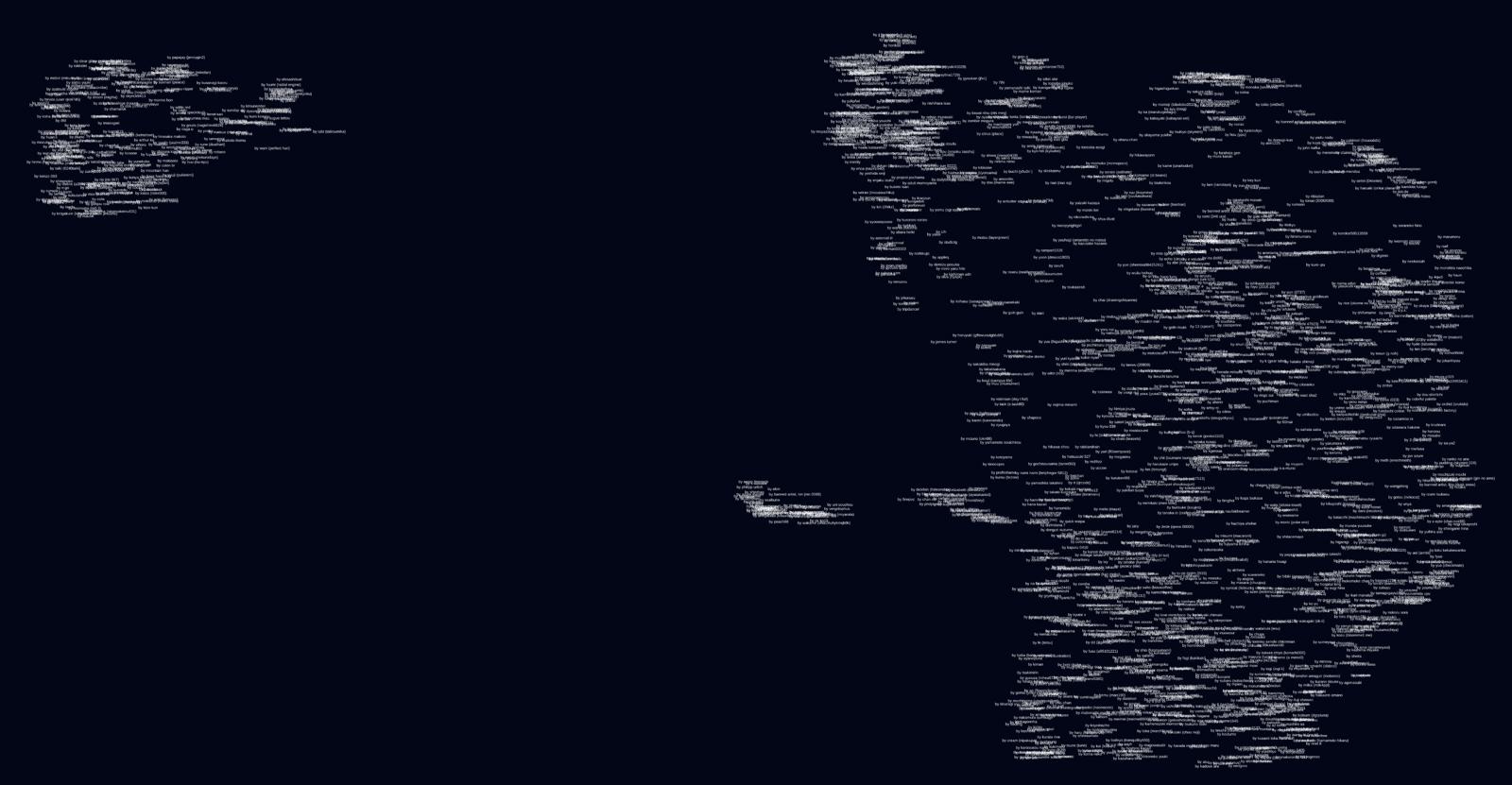

Pooled Text Encoder Embeddings

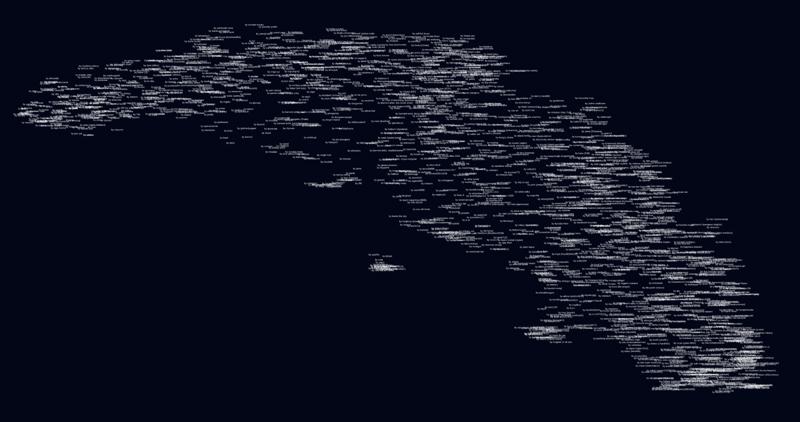

Nomic Vision Embeddings
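If you want to put a rough number on how much the two views agree, one option is to compare each artist's nearest neighbours in the two embedding spaces. This is not part of the linked page and not how the plots were made; it is only a minimal sketch, assuming you have both embedding sets as plain lists of vectors in the same artist order. The function names (`cosine`, `top_k_neighbors`, `neighbor_overlap`) and the toy vectors are made up for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k_neighbors(vectors, k):
    """For each row, return the set of indices of its k most similar other rows."""
    neighbors = []
    for i, v in enumerate(vectors):
        scored = [(cosine(v, w), j) for j, w in enumerate(vectors) if j != i]
        # Highest similarity first; break ties by index so results are deterministic.
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        neighbors.append({j for _, j in scored[:k]})
    return neighbors


def neighbor_overlap(text_emb, vision_emb, k=5):
    """Mean fraction of k-nearest neighbours shared between the two spaces (0.0 to 1.0)."""
    if len(text_emb) != len(vision_emb):
        raise ValueError("both embedding lists must cover the same artists, in the same order")
    if not 1 <= k < len(text_emb):
        raise ValueError("k must be at least 1 and smaller than the number of artists")
    text_nn = top_k_neighbors(text_emb, k)
    vision_nn = top_k_neighbors(vision_emb, k)
    shared = sum(len(t & v) for t, v in zip(text_nn, vision_nn))
    return shared / (k * len(text_nn))


# Toy data: four hypothetical "artists" with 2-D embeddings.
toy_text = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
toy_vision = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]]

same_space = neighbor_overlap(toy_text, toy_text, k=1)
different_space = neighbor_overlap(toy_text, toy_vision, k=1)
```

A score near 1 means an artist's neighbours in the text space are mostly the same artists that sit near them in the vision space; a score near 0 means the two spaces group artists very differently. The pure-Python loops are quadratic in the number of artists, so for a few thousand names you would likely want a vectorised version instead.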

Just wanted to share, have fun!