(The cover image was generated with my LORA, text included.)

Download the model at F-22 Raptor V1

As the title suggests, I'm going to walk you through how I conduct quality control after training, so I can release the best epoch instead of rolling the dice and hoping it's a good model. If you also train and fine-tune your own models and LORAs, I believe this is a viable way to test them, especially for Flux Dev LORAs.

First, let me tell you about the training process and provide a bit of history:

I have a local dual-GPU setup (two RTX 3090s), one for training and one for testing, which lets me start testing as soon as the first epoch is saved.

I have been training models for almost two years now, starting almost as soon as DreamBooth was released and I could train with cloud computing. Back then, however, it was mostly a hobby for me. For the past year or so, I have been training LORAs and fine-tuning models like SDXL and Pony for commissions, but until recently, I had not released any models on Civit. I was planning to wait for the release of SD 3 to start, but after the disastrous launch of SD 3.0, I put that plan on hold. Then Flux came out and shocked all of us, so I decided to start working with Flux.

No matter what I tried, I couldn't get Flux LORA training working on my local setup until I came across Dr. Furkan's YouTube channel, which fully explained how to fix the issues I had and get everything running. Afterwards, I subscribed to his Patreon, where he shares his experiments along with all of his configs; this was really useful and saved me a lot of unnecessary and boring trial and error. Using his configs for my GPU's VRAM, I managed to start training quality LORAs and even doing DreamBooth fine-tuning.

(I am in no way associated with Dr. Furkan, and this is not a plug for his Patreon; I simply like to give credit where credit is due.)

Full screenshot of my parameters:

The only change I made to his config was enabling bucket resolution (bucketing), based on my past experience with SDXL: a dataset with a variety of resolutions and aspect ratios helps a lot with the flexibility of the model. He also suggested using a rare token for the initial prompt, but my past tests with SDXL indicate that an already existing token works much better, especially when its class matches the same class in the base model.
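Bucketing groups images by aspect ratio so that each one is trained at a resolution close to its own shape instead of being cropped to a square. The sketch below is a simplified illustration, not the trainer's actual implementation: it assumes a pixel budget of 1024x1024 and bucket sides that are multiples of 64, and the function names are hypothetical.

```python
import math

def make_buckets(max_area=1024 * 1024, step=64, min_side=256, max_side=2048):
    """Enumerate (width, height) buckets whose area stays within max_area."""
    buckets = []
    for width in range(min_side, max_side + 1, step):
        # Largest height (a multiple of step) that keeps the area within budget.
        height = min(max_side, (max_area // width) // step * step)
        if height >= min_side:
            buckets.append((width, height))
    return buckets

def assign_bucket(img_w, img_h, buckets):
    """Pick the bucket whose aspect ratio is closest to the image's (log scale)."""
    target = math.log(img_w / img_h)
    return min(buckets, key=lambda b: abs(math.log(b[0] / b[1]) - target))

buckets = make_buckets()
print(assign_bucket(1080, 1920, buckets))  # a 9:16 portrait image
```

A 9:16 portrait image lands in the 768x1344 bucket rather than being squashed or cropped to 1024x1024, which is the flexibility benefit described above.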

For this particular model, I also wanted to test whether I could train a more flexible, higher quality LORA using just the default Flux text encoders and the initial prompt, which in my case was "F-22 Raptor," instead of automatic or manual captioning. Captioning was the biggest issue for me when I started training Flux LORAs; no matter how I captioned the images, the results were not acceptable. In most cases, my results show that you are better off without captions, at least for character and concept LORAs. I have not tested styles yet.

If you look closely at the screenshot, you can see that I set it to save every 5 epochs, and that's the first step of quality control. In my experience, no matter the config, you can never know in advance which epoch or step count will give you the desired results, because that depends on your dataset. So saving as many checkpoints as you can and testing them is the best strategy.

In my case, I have 150 images at a resolution of 1024 in various aspect ratios, plus different styles and forms (e.g., an F-22 poster, an F-22 as a toy, an F-22 in the style of a drawing, and much more). I set the max epoch at 200, which translates to a maximum of 30,000 steps. Because I have a second GPU, I was able to test the epochs before the maximum epoch was reached, and just as an experiment, I let the model run until 100 epochs.
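The 30,000-step figure follows from simple arithmetic. The sketch below assumes a batch size of 1 and one repeat per image (my assumption, since that is what makes 150 × 200 = 30,000 work out), and lists the epoch and global step at which each checkpoint is saved when saving every 5 epochs.

```python
import math

def steps_per_epoch(num_images, repeats=1, batch_size=1):
    """Optimizer steps needed to see every image (times repeats) once."""
    return math.ceil(num_images * repeats / batch_size)

def total_steps(num_images, epochs, repeats=1, batch_size=1):
    return steps_per_epoch(num_images, repeats, batch_size) * epochs

def checkpoint_schedule(num_images, max_epoch, save_every, repeats=1, batch_size=1):
    """(epoch, global_step) for every saved checkpoint."""
    per_epoch = steps_per_epoch(num_images, repeats, batch_size)
    return [(e, e * per_epoch) for e in range(save_every, max_epoch + 1, save_every)]

print(total_steps(150, 200))                  # planned maximum
print(checkpoint_schedule(150, 100, 5)[:3])   # first three saves of the 100-epoch run
```

Under these assumptions, Epoch 15 corresponds to step 2,250, and the 100-epoch run produces 20 checkpoints.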

Quality Standards and Expectations:

Style Flexibility: Ensuring that the concept can be generated in many styles.

Aspect Ratio Flexibility: Making sure that the subject can be generated in any aspect ratio (AR).

Generation Flexibility: Ensuring that the LORA can generate a variety of results by mixing and matching humans and the subject. For example, one could have "one F-22 Raptor in the background, a photograph capturing an American Air Force pilot in front of a F-22 Raptor holding a pilot visor helmet in his left hand and showing thumbs up with his right hand, taken with a 22mm lens on a Sony Alpha 7 IV camera." This checks if the model is capable of that flexibility.

Balanced Training: Avoiding both overtraining and undertraining so the model can generate flexible images while showing a perfect resemblance to the subject.

Prompt Following: Testing whether the model follows the prompt, or whether, for example, it is overtrained and ignores everything else, trying to give you only the subject no matter what.

Overall Ease of Use: Ensuring that the user can simply plug it in, write a simple prompt, and expect good results.

Quality Control:

First and foremost, dataset preparation: I manually collect and inspect the highest quality images, compile them into a folder, resize them, and make sure I have a good variety of images so the final model is flexible.
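As a rough aid for the resizing and variety checks, here is a hypothetical helper that computes a resize target with the long side at 1024 pixels (keeping the aspect ratio) and tallies how many images are landscape, portrait, or square. It only works on (width, height) pairs; actually loading and resizing the files would be done with an image library of your choice.

```python
from collections import Counter

def resize_target(width, height, long_side=1024):
    """Scale so the longer side equals long_side, keeping the aspect ratio.

    Note: this also upscales images smaller than long_side.
    """
    scale = long_side / max(width, height)
    return round(width * scale), round(height * scale)

def orientation(width, height, tolerance=0.05):
    """Classify a size as square, landscape, or portrait."""
    ratio = width / height
    if abs(ratio - 1) <= tolerance:
        return "square"
    return "landscape" if ratio > 1 else "portrait"

def audit(sizes):
    """Count orientations of the resized dataset to spot missing variety."""
    return Counter(orientation(*resize_target(w, h)) for w, h in sizes)

print(audit([(4000, 3000), (1080, 1920), (2048, 2048), (1600, 900)]))
```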

Next is setting or finding the best parameters. In my case, with Flux, I used Dr. Furkan's configurations.

I run the training and wait until at least 25% of the epochs are saved. In my case, with a maximum of 200 epochs, I started testing the first 50 epochs (10 saved LORAs, one every 5 epochs) on my second RTX 3090.
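The checkpoint names used later in this article follow the pattern `F-22_Raptor(V1)-000005`, with the epoch zero-padded to six digits. Assuming that pattern, a small hypothetical helper can list which saves exist once a given fraction of training is done (here 25% of 200 epochs, saved every 5 epochs).

```python
def saved_checkpoints(name, max_epoch, save_every, fraction):
    """Checkpoint names saved by the time `fraction` of max_epoch has run."""
    reached = int(max_epoch * fraction)
    return [f"{name}-{epoch:06d}" for epoch in range(save_every, reached + 1, save_every)]

ready = saved_checkpoints("F-22_Raptor(V1)", max_epoch=200, save_every=5, fraction=0.25)
print(len(ready), ready[0], ready[-1])
```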

After the initial test, I decide whether the model has trained enough or whether I should let it run longer. If it has trained enough, I halt the training.

I run long batches of tests with all the standards in mind.

Here’s what that looks like:

For the initial test after 50 epochs, I compared the LORAs saved every 5 epochs to judge their quality against the standards. I used the simple prompt {F-22 Raptor} with 25 steps, the Euler sampler with the Simple schedule, and the X/Y/Z plot script in Forge UI, with the Prompt S/R parameter swapping in each LORA. Here are the results, using seed 2278699223 (the last image is without any LORA).
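Prompt S/R (search/replace) in the X/Y/Z plot script treats the first value in its comma-separated list as the search string, then renders one image per value with that string replaced; quotes let a value contain commas. The sketch below mimics that behavior in plain Python to show how one prompt fans out into one image per checkpoint. It is an illustration, not Forge's code, and the helper names are hypothetical. It uses Forge's `<lora:name:weight>` prompt syntax, and the empty final value reproduces the "no LORA" column.

```python
import csv

def sr_values(name, epochs, weight=1, include_baseline=True):
    """Build a quoted, comma-separated Prompt S/R value list for checkpoints."""
    tags = [f"<lora:{name}-{e:06d}:{weight}>" for e in epochs]
    if include_baseline:
        tags.append("")  # empty replacement removes the LoRA entirely
    return ",".join(f'"{t}"' for t in tags)

def prompt_sr(prompt, values_csv):
    """Expand a prompt into one variant per Prompt S/R value."""
    values = next(csv.reader([values_csv], skipinitialspace=True))
    search = values[0]  # the first value is what gets searched for
    if search not in prompt:
        raise ValueError(f"search string {search!r} not found in prompt")
    return [prompt.replace(search, value) for value in values]

values = sr_values("F-22_Raptor(V1)", range(5, 55, 5))
for p in prompt_sr("F-22 Raptor, <lora:F-22_Raptor(V1)-000005:1>", values):
    print(p)
```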

From the first result, you can see near-perfect resemblance from as early as Epoch 5. By Epoch 20, the model no longer follows the prompt correctly, which suggests the ideal epoch lies between 5 and 20. I ran another test using the prompt {F-22, F-22 Raptor, in the style of a poster, large text that says "F-22 Raptor"}; every parameter was the same as before except the size: 768x1365 instead of 1024x1024. This round tested the flexibility standards and confirmed which epoch was the best save.

As you can see here, Epoch 15 produced an almost perfect batch that met our standards. It generated perfect text in 3 out of 5 images, maintained the 9:16 aspect ratio, and showed near-perfect stylization. Prompt following was excellent, and there were no signs of overtraining or undertraining, making it the best LORA in the batch.
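The test sizes in this article appear to fix the shorter side and derive the longer one from the target aspect ratio: 768 × 16/9 ≈ 1365 for 9:16, and 896 × 4/3 ≈ 1195 for 3:4 (the 896x1195 size used later), both close to the one-megapixel area of 1024x1024. That reading is my inference from the numbers; a minimal hypothetical helper for it:

```python
def size_for_ratio(short_side, ratio_w, ratio_h):
    """Return (width, height) for ratio_w:ratio_h, keeping short_side fixed."""
    long_side = round(short_side * max(ratio_w, ratio_h) / min(ratio_w, ratio_h))
    if ratio_w >= ratio_h:
        return long_side, short_side  # landscape (or square)
    return short_side, long_side      # portrait

print(size_for_ratio(768, 9, 16))
print(size_for_ratio(896, 3, 4))
```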

So far, we have confirmation that Epoch 15 is the best in the batch. I ran another generation, changing the prompt only slightly to see how much a minimal prompt improvement could help. The new prompt was {F-22, F-22 Raptor, in the style of a poster, (large text that says "F-22 Raptor":2.0)}. To my delight, it achieved a perfect score of 5/5.
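The `(text:2.0)` part of that prompt uses the A1111-style attention syntax, where the number is a weight applied to that span of text. As a simplified sketch (it handles only explicit, non-nested `(text:weight)` groups, unlike the full parser in the UI, and the function name is hypothetical), this splits a prompt into weighted chunks:

```python
import re

# Matches a non-nested "(text:weight)" group, e.g. (large text:2.0)
WEIGHTED = re.compile(r"\(([^()]+):\s*([0-9]*\.?[0-9]+)\)")

def split_weights(prompt):
    """Return (text, weight) chunks; text outside groups gets weight 1.0."""
    chunks, pos = [], 0
    for match in WEIGHTED.finditer(prompt):
        if match.start() > pos:
            chunks.append((prompt[pos:match.start()], 1.0))
        chunks.append((match.group(1), float(match.group(2))))
        pos = match.end()
    if pos < len(prompt):
        chunks.append((prompt[pos:], 1.0))
    return chunks

print(split_weights('F-22, F-22 Raptor, in the style of a poster, (large text that says "F-22 Raptor":2.0)'))
```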

At this point, we have met all of our standards, or at least most of them. However, to rule out errors in our experiment, we are going to run a full test with several different prompts, each in isolation, using two completely different seeds across all checkpoints up to Epoch 100. Here are the results.

Final Testing:

Remember, the last image in each grid was generated without any LORA.

These tests are conducted solely to verify our assessment of the LORA's quality. To do that, we need to check the results across all the generations to see whether Epoch 15, on average, comes out on top.

Here is the raw metadata for each run:

Prompt: {F-22 Raptor, <lora:F-22_Raptor(V1)-000005:1>}

Steps: 25

Sampler: Euler

Schedule Type: Simple

CFG Scale: 1

Distilled CFG Scale: 3.5

Seed: 10000000000000000000

Size: 1024x1024

Model Hash: 3f97fdc57a

Model: flux1-dev

Style Selector Enabled: True

Style Selector Randomize: False

Style Selector Style: base

Lora Hashes: "F-22_Raptor(V1)-000005: e5bf104b600f"

Hardware Info: "RTX 3090 24GB, i9-13900K, 128GB RAM"

Time Taken: 1 min. 22.8 sec.

Script: X/Y/Z plot

X Type: Prompt S/R

X Values: ""lora:F-22_Raptor(V1)-000005:1",..."

Prompt: {F-22 Raptor, <lora:F-22_Raptor(V1)-000005:1>}

Steps: 25

Sampler: Euler

Schedule Type: Simple

CFG Scale: 1

Distilled CFG Scale: 3.5

Seed: 9999999999999999999

Size: 1024x1024

Model Hash: 3f97fdc57a

Model: flux1-dev

Style Selector Enabled: True

Style Selector Randomize: False

Style Selector Style: base

Lora Hashes: "F-22_Raptor(V1)-000005: e5bf104b600f"

Hardware Info: "RTX 3090 24GB, i9-13900K, 128GB RAM"

Time Taken: 51.9 sec.

Script: X/Y/Z plot

X Type: Seed

X Values: "9999999999999999999,555555555555555555"

Fixed X Values: "9999999999999999999, 555555555555555555"

Y Type: Prompt S/R

Y Values: ""lora:F-22_Raptor(V1)-000005:1","lora:F-22_Raptor(V1)-000010:1","lora:F-22_Raptor(V1)-000015:1","lora:F-22_Raptor(V1)-000020:1","lora:F-22_Raptor(V1)-000025:1","lora:F-22_Raptor(V1)-000030:1","lora:F-22_Raptor(V1)-000035:1","lora:F-22_Raptor(V1)-000040:1","lora:F-22_Raptor(V1)-000045:1","lora:F-22_Raptor(V1)-000050:1","lora:F-22_Raptor(V1)-000055:1","lora:F-22_Raptor(V1)-000060:1","lora:F-22_Raptor(V1)-000065:1","lora:F-22_Raptor(V1)-000070:1","lora:F-22_Raptor(V1)-000075:1","lora:F-22_Raptor(V1)-000080:1","lora:F-22_Raptor(V1)-000085:1","lora:F-22_Raptor(V1)-000090:1","lora:F-22_Raptor(V1)-000095:1","lora:F-22_Raptor(V1)-000100:1","

Version: f2.0.1v1.10.1-previous-597-gecd4d28e

Module 1: ae

Module 2: t5xxl_fp16

Module 3: clip_l

Prompt: {F-22 Raptor, <lora:F-22_Raptor(V1)-000005:1>}

Steps: 25

Sampler: Euler

Schedule Type: Simple

CFG Scale: 1

Distilled CFG Scale: 3.5

Seed: 9999999999999999999

Size: 1365x768

Model Hash: 3f97fdc57a

Model: flux1-dev

Style Selector Enabled: True

Style Selector Randomize: False

Style Selector Style: base

Lora Hashes: "F-22_Raptor(V1)-000005: e5bf104b600f"

Hardware Info: "RTX 3090 24GB, i9-13900K, 128GB RAM"

Time Taken: 52.9 sec.

Script: X/Y/Z plot

X Type: Seed

X Values: "9999999999999999999,555555555555555555"

Fixed X Values: "9999999999999999999, 555555555555555555"

Y Type: Prompt S/R

Y Values: ""lora:F-22_Raptor(V1)-000005:1","lora:F-22_Raptor(V1)-000010:1","lora:F-22_Raptor(V1)-000015:1","lora:F-22_Raptor(V1)-000020:1","lora:F-22_Raptor(V1)-000025:1","lora:F-22_Raptor(V1)-000030:1","lora:F-22_Raptor(V1)-000035:1","lora:F-22_Raptor(V1)-000040:1","lora:F-22_Raptor(V1)-000045:1","lora:F-22_Raptor(V1)-000050:1","lora:F-22_Raptor(V1)-000055:1","lora:F-22_Raptor(V1)-000060:1","lora:F-22_Raptor(V1)-000065:1","lora:F-22_Raptor(V1)-000070:1","lora:F-22_Raptor(V1)-000075:1","lora:F-22_Raptor(V1)-000080:1","lora:F-22_Raptor(V1)-000085:1","lora:F-22_Raptor(V1)-000090:1","lora:F-22_Raptor(V1)-000095:1","lora:F-22_Raptor(V1)-000100:1","

Version: f2.0.1v1.10.1-previous-597-gecd4d28e

Module 1: ae

Module 2: t5xxl_fp16

Module 3: clip_l

Prompt: {F-22 Raptor, in the style of a poster, (large text that says "F-22 Raptor":2.0), <lora:F-22_Raptor(V1)-000005:1>}

Steps: 25

Sampler: Euler

Schedule Type: Simple

CFG Scale: 1

Distilled CFG Scale: 3.5

Seed: 9999999999999999999

Size: 768x1365

Model Hash: 3f97fdc57a

Model: flux1-dev

Style Selector Enabled: True

Style Selector Randomize: False

Style Selector Style: base

Lora Hashes: "F-22_Raptor(V1)-000005: e5bf104b600f"

Hardware Info: "RTX 3090 24GB, i9-13900K, 128GB RAM"

Time Taken: 54.0 sec.

Script: X/Y/Z plot

X Type: Seed

X Values: "9999999999999999999,555555555555555555"

Fixed X Values: "9999999999999999999, 555555555555555555"

Y Type: Prompt S/R

Y Values: ""lora:F-22_Raptor(V1)-000005:1","lora:F-22_Raptor(V1)-000010:1","lora:F-22_Raptor(V1)-000015:1","lora:F-22_Raptor(V1)-000020:1","lora:F-22_Raptor(V1)-000025:1","lora:F-22_Raptor(V1)-000030:1","lora:F-22_Raptor(V1)-000035:1","lora:F-22_Raptor(V1)-000040:1","lora:F-22_Raptor(V1)-000045:1","lora:F-22_Raptor(V1)-000050:1","lora:F-22_Raptor(V1)-000055:1","lora:F-22_Raptor(V1)-000060:1","lora:F-22_Raptor(V1)-000065:1","lora:F-22_Raptor(V1)-000070:1","lora:F-22_Raptor(V1)-000075:1","lora:F-22_Raptor(V1)-000080:1","lora:F-22_Raptor(V1)-000085:1","lora:F-22_Raptor(V1)-000090:1","lora:F-22_Raptor(V1)-000095:1","lora:F-22_Raptor(V1)-000100:1","

Version: f2.0.1v1.10.1-previous-597-gecd4d28e

Module 1: ae

Module 2: t5xxl_fp16

Module 3: clip_l

Prompt: {one F-22 Raptor in the background, a photograph capturing an American Air Force pilot in front of a F-22 Raptor holding a pilot visor helmet in his left hand and showing thumbs up with his right hand, taken with a 22mm lens on a Sony Alpha 7 IV camera, <lora:F-22_Raptor(V1)-000005:1>}

Steps: 25

Sampler: Euler

Schedule Type: Simple

CFG Scale: 1

Distilled CFG Scale: 3.5

Seed: 9999999999999999999

Size: 896x1195

Model Hash: 3f97fdc57a

Model: flux1-dev

Style Selector Enabled: True

Style Selector Randomize: False

Style Selector Style: base

Lora Hashes: "F-22_Raptor(V1)-000005: e5bf104b600f"

Hardware Info: "RTX 3090 24GB, i9-13900K, 128GB RAM"

Time Taken: 56.0 sec.

Script: X/Y/Z plot

X Type: Seed

X Values: "9999999999999999999,555555555555555555"

Fixed X Values: "9999999999999999999, 555555555555555555"

Y Type: Prompt S/R

Y Values: ""lora:F-22_Raptor(V1)-000005:1","lora:F-22_Raptor(V1)-000010:1","lora:F-22_Raptor(V1)-000015:1","lora:F-22_Raptor(V1)-000020:1","lora:F-22_Raptor(V1)-000025:1","lora:F-22_Raptor(V1)-000030:1","lora:F-22_Raptor(V1)-000035:1","lora:F-22_Raptor(V1)-000040:1","lora:F-22_Raptor(V1)-000045:1","lora:F-22_Raptor(V1)-000050:1","lora:F-22_Raptor(V1)-000055:1","lora:F-22_Raptor(V1)-000060:1","lora:F-22_Raptor(V1)-000065:1","lora:F-22_Raptor(V1)-000070:1","lora:F-22_Raptor(V1)-000075:1","lora:F-22_Raptor(V1)-000080:1","lora:F-22_Raptor(V1)-000085:1","lora:F-22_Raptor(V1)-000090:1","lora:F-22_Raptor(V1)-000095:1","lora:F-22_Raptor(V1)-000100:1","

Version: f2.0.1v1.10.1-previous-597-gecd4d28e

Module 1: ae

Module 2: t5xxl_fp16

Module 3: clip_l

The last test checked whether the LORA can be mixed with a style LORA without any conflicts, using the Fritz Zuber-Buhler [FLUX] 240 style LORA. Epoch 15 still came out without any conflicts.

Prompt: {a oil painting of an F-22 Raptor taking off the runway with full afterburners on, in the style of Fritz Zuber-Buhler, <lora:F-22_Raptor(V1)-000005:1> <lora:style_of_Fritz_Zuber-Buhler_FLUX_240:1>}

Steps: 25

Sampler: Euler

Schedule Type: Simple

CFG Scale: 1

Distilled CFG Scale: 3.5

Seed: 9999999999999999999

Size: 1195x896

Model Hash: 3f97fdc57a

Model: flux1-dev

Style Selector Enabled: True

Style Selector Randomize: False

Style Selector Style: base

Lora Hashes: "F-22_Raptor(V1)-000005: e5bf104b600f, style_of_Fritz_Zuber-Buhler_FLUX_240: 41c638fa425e"

Hardware Info: "RTX 3090 24GB, i9-13900K, 128GB RAM"

Time Taken: 56.5 sec.

Script: X/Y/Z plot

X Type: Seed

X Values: "9999999999999999999,555555555555555555"

Fixed X Values: "9999999999999999999, 555555555555555555"

Y Type: Prompt S/R

Y Values: ""lora:F-22_Raptor(V1)-000005:1","lora:F-22_Raptor(V1)-000010:1","lora:F-22_Raptor(V1)-000015:1","lora:F-22_Raptor(V1)-000020:1","lora:F-22_Raptor(V1)-000025:1","lora:F-22_Raptor(V1)-000030:1","lora:F-22_Raptor(V1)-000035:1","lora:F-22_Raptor(V1)-000040:1","lora:F-22_Raptor(V1)-000045:1","lora:F-22_Raptor(V1)-000050:1","lora:F-22_Raptor(V1)-000055:1","lora:F-22_Raptor(V1)-000060:1","lora:F-22_Raptor(V1)-000065:1","lora:F-22_Raptor(V1)-000070:1","lora:F-22_Raptor(V1)-000075:1","lora:F-22_Raptor(V1)-000080:1","lora:F-22_Raptor(V1)-000085:1","lora:F-22_Raptor(V1)-000090:1","lora:F-22_Raptor(V1)-000095:1","lora:F-22_Raptor(V1)-000100:1","

Version: f2.0.1v1.10.1-previous-597-gecd4d28e

Module 1: ae

Module 2: t5xxl_fp16

Module 3: clip_l

Summary of the Tests According to the Order of the XYZ Results:

(The eye score is my own visual evaluation of the Epoch 15 results compared with the other generations in each grid.)

Aspect Ratio + Resolution Test:

Came out perfectly, unlike many of the higher-epoch LORAs.

Eye test: 8/10

Came out perfectly, unlike many of the higher-epoch LORAs.

Eye test: 9/10

Came out perfectly, unlike many of the higher-epoch LORAs.

Eye test: 9/10

Stylization + Text Test:

Came out okay, but still better than most of the other LORAs.

Eye test: 7/10

Specific Prompt + Subject Off Focus Test:

Came out okay; it did not fully follow the prompt, but it was better than any checkpoint above 50 epochs.

Eye test: 7/10

Style LORA Conflict Test:

Came out beautifully and did not have any conflicts with the style LORA.

Eye test: 10/10

Overall Average Eye Score: 8.33
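The overall score is the plain mean of the six eye-test scores above, in the order listed. A quick check:

```python
from statistics import mean

# Eye-test scores for Epoch 15, in the order of the XYZ results above.
eye_scores = [
    ("Aspect Ratio + Resolution (1st grid)", 8),
    ("Aspect Ratio + Resolution (2nd grid)", 9),
    ("Aspect Ratio + Resolution (3rd grid)", 9),
    ("Stylization + Text", 7),
    ("Specific Prompt + Subject Off Focus", 7),
    ("Style LORA Conflict", 10),
]

average = mean(score for _, score in eye_scores)
print(f"Overall average eye score: {average:.2f}")  # Overall average eye score: 8.33
```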

This makes Epoch 15 the official release model.

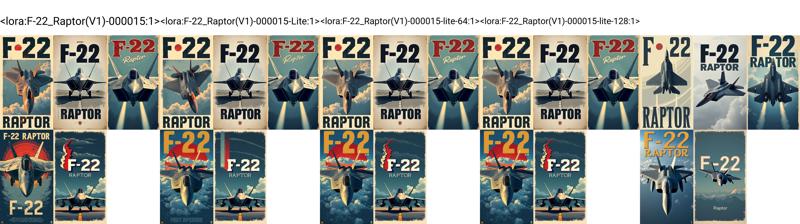

Now, because the model is over 1.6GB, I will release two versions: Full and Lite. For the Lite version, I have to resize the LORA. I have already resized it with three different LORA ranks (32, 64, 128), significantly lowering the size, to almost 1/10th of the original. There is a small loss in generation quality, but it is barely noticeable. Here is an XYZ grid comparing them.
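Why resizing shrinks the file so much: for each adapted weight matrix of shape d_out × d_in, a LORA stores two low-rank factors totalling r × (d_in + d_out) parameters, so file size grows linearly with the rank r. Resizing to a lower rank is commonly done by a truncated SVD of each layer's update, which discards the smallest components and explains the slight quality loss. The sketch below uses made-up layer shapes (hypothetical, not Flux's real architecture) purely to show the proportionality.

```python
def lora_params(layer_shapes, rank):
    """Total LoRA parameters: each (d_out, d_in) layer gets B (d_out x r) and A (r x d_in)."""
    return sum(rank * (d_in + d_out) for d_out, d_in in layer_shapes)

def lora_megabytes(layer_shapes, rank, bytes_per_param=2):
    """Approximate file size, assuming 16-bit weights and ignoring metadata."""
    return lora_params(layer_shapes, rank) * bytes_per_param / 1e6

# Hypothetical model: 100 square 3072x3072 projections (illustrative only).
shapes = [(3072, 3072)] * 100
for r in (32, 64, 128):
    print(f"rank {r}: ~{lora_megabytes(shapes, r):.1f} MB")
```

Doubling the rank doubles the size, so each step down from 128 to 64 to 32 halves the file.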

Prompt: {F-22, F-22 Raptor, in the style of a poster, (large text that says "F-22 Raptor":2.0), <lora:F-22_Raptor(V1)-000015:1>}

Steps: 30

Sampler: Euler

Schedule Type: Simple

CFG Scale: 1

Distilled CFG Scale: 3.5

Seed: 2278699223

Size: 768x1365

Model Hash: 3f97fdc57a

Model: flux1-dev

Style Selector Enabled: True

Style Selector Randomize: False

Style Selector Style: base

Lora Hashes: "F-22_Raptor(V1)-000015: a4cf554de612"

Hardware Info: "RTX 3090 24GB, i9-13900K, 128GB RAM"

Time Taken: 1 min. 3.1 sec.

Script: X/Y/Z plot

X Type: Prompt S/R

X Values: ""lora:F-22_Raptor(V1)-000015:1","lora:F-22_Raptor(V1)-000015-Lite:1","lora:F-22_Raptor(V1)-000015-lite-64:1","lora:F-22_Raptor(V1)-000015-lite-128:1","

Version: f2.0.1v1.10.1-previous-597-gecd4d28e

Module 1: ae

Module 2: t5xxl_fp16

Module 3: clip_l

Either way, I will be releasing both the Full and the Lite versions to make the model easier to access for end users.

For any inquiries or if you would like to commission me to train models, you can reach me at:

Discord Username: theaiking.2024

Email: [email protected]