Through my experience sourcing information and data, I've found an exorbitant number of images that should simply be tagged... bad hands. Essentially, the majority of AI-generated images have shit hands, completely omitted hands, or color bleeds that make them useless for conceptualizing anything.

Let's use a few small examples to show how to spot bad data.

https://safebooru.org/index.php?page=post&s=view&id=4462284

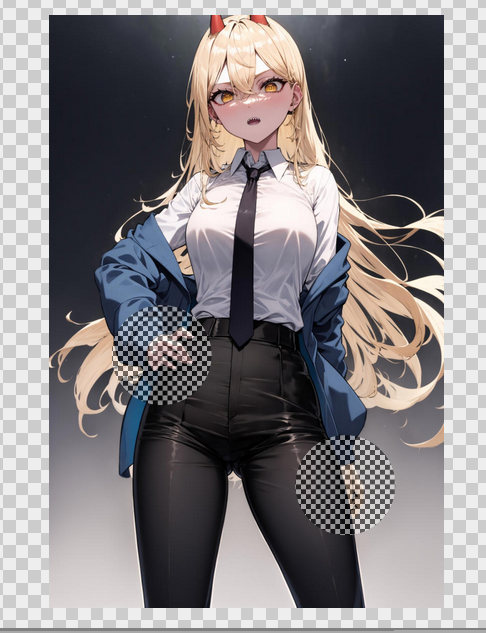

So here's a fair image, really not that bad.

However, there are faults. You may not notice them at first glance, but they exist. For example, look at the collar. It appears to belong to a button-up shirt, yes? Well, where are the buttons? The tie is covering every single button. This means you'll be teaching the AI the concept of a tie covering the buttons of a collared button-up shirt, while that shirt is under an overshirt/coat/etc.

Okay, not the end of the world; Flux can figure that out. I would train with this. Here's why:

It fits a consistent paradigm and shape.

The background angle could depict the bottom of a stairwell, so you can treat the background as one.

You can bulk train it for clothing styles without heavily impacting the background.

You can teach it the individual character.

Let's try another.

https://safebooru.org/index.php?page=post&s=view&id=4878731

Alright, this one is pretty obvious in comparison. If you look at the character on the right, one of the two hands appears to be... bleeding two fingers together. The open shirt below it is nearly the same color, and you'll find the entire thing is slightly off color-wise. The style of the sky doesn't fit, the trees appear blurry when nothing justifies the blur, and so on.

Okay, yeah, I would still train with this one. Here's the reasoning:

There are two associated characters.

Testing the tags for martial-arts-style gloves will give you a good idea of which pair of gloves is more stable for teaching the AI what they are.

The style is smooth and clean.

The image is aesthetically pleasing in its own right.

Next, let's look at some bad hands and how the image can still be used to train the AI, even with the bad hands staying WITHIN the dataset.

https://safebooru.org/index.php?page=post&s=view&id=4462251

Right, the eye fixates on the face, the body, the clothing, and so on. Then, if you start paying closer attention to the hands, you start seeing... the flaws: genuine anatomical problems that are actually present in the image.

Clearly her hands and arms are in the correct pose, but the fingers... they are associated with a different pose. The fingernails are completely disjointed and incorrect.

Alright, let's salvage this one with a really simple step.

I've trained Simulacrum to identify censorship. The "censored" tag is attached directly to anime, so it procs (triggers) the majority of anime styles and shunts the genitals, the mouth, the face, and any penises into a censored form.

I, however, taught it to censor... HANDS!!!

So for my model (and it's really not hard to teach), you can quite literally just... tag censored hands, then take an image like this and inpaint the hands away.

As you've seen, Simulacrum v38 can in fact repair hands, and can even identify them simply using the "no hands" tag.
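The inpainting step needs a mask over the hands. Here is a minimal sketch, assuming you already have hand bounding boxes in pixel coordinates (drawn by hand or taken from a detector). The function name `hand_mask` and its `padding` parameter are hypothetical, not part of Simulacrum or any particular inpainting tool, and the convention that 255 marks the pixels to repaint is an assumption to check against whatever tool you use.

```python
from typing import List, Tuple

# (x0, y0, x1, y1) in pixels; x1 and y1 are exclusive.
Box = Tuple[int, int, int, int]


def hand_mask(width: int, height: int, boxes: List[Box], padding: int = 8) -> List[List[int]]:
    """Build a binary inpainting mask: 255 inside the padded hand boxes, 0 elsewhere.

    Hypothetical helper; assumes the inpainting tool repaints white (255) pixels.
    """
    mask = [[0] * width for _ in range(height)]
    for x0, y0, x1, y1 in boxes:
        # Grow each box a little so the repaint covers wrists and fingertips,
        # then clamp it to the image bounds.
        left = max(0, x0 - padding)
        top = max(0, y0 - padding)
        right = min(width, x1 + padding)
        bottom = min(height, y1 + padding)
        for y in range(top, bottom):
            for x in range(left, right):
                mask[y][x] = 255
    return mask
```

To hand it to an inpainting tool, you could flatten the rows into a Pillow `"L"` image with `Image.new("L", (width, height))` and `putdata`. The padding is there because a tight box around malformed fingers tends to leave the broken edges just outside the repainted area.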

Using a more complex tagging process, you can actually use YOLO to detect bad hands in AI-generated images and repair them automatically, but no matter what you do, you need to guarantee those images are tagged "ai generated". Without the "ai generated" tag, they may hurt your model, potentially corrupting large numbers of good hands.

Simulacrum's anime portion is facing this issue now, which is why my focus has shifted to making sure the hands themselves are solid.
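The "ai generated" requirement is easy to enforce mechanically. A minimal sketch, assuming a hand detector (for example a YOLO model trained on hands) has already run and its output has been reduced to simple label/confidence pairs. `Detection`, the `bad_hand` class name, and both functions are hypothetical; they are not the API of YOLO or any library. The second function is a pre-training audit that catches any bad-hands caption missing the "ai generated" tag.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Detection:
    """Hypothetical stand-in for one detector result."""
    label: str         # assumed class name, e.g. "bad_hand"
    confidence: float  # detector score in [0, 1]


def tag_ai_image(tags: List[str], detections: Iterable[Detection],
                 threshold: float = 0.5) -> List[str]:
    """Tag an image that is already known to be AI-generated.

    "ai generated" is always guaranteed; "bad hands" is added only when a
    bad-hand detection clears the confidence threshold.
    """
    out = list(tags)
    if "ai generated" not in out:
        out.append("ai generated")
    flagged = any(d.label == "bad_hand" and d.confidence >= threshold for d in detections)
    if flagged and "bad hands" not in out:
        out.append("bad hands")
    return out


def missing_ai_tag(captions: Iterable[List[str]]) -> List[int]:
    """Indices of captions tagged "bad hands" but not "ai generated"; fix these before training."""
    return [i for i, tags in enumerate(captions)
            if "bad hands" in tags and "ai generated" not in tags]
```

Running `missing_ai_tag` over the whole dataset right before a training run is cheap, and it is the check that keeps untagged bad hands from leaking into the good-hands signal.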

I'll be introducing roughly 15,000 good hand images from the HaGRID dataset, converted to anime and 3D (5k identical images per set), as targeted inpainting and timestep training data. That way the system can be used both to safely inpaint hands and to proc hands for specific, strong reassertion.
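The numbers work out to three sets of the same 5,000 source images, one set per style. A minimal manifest sketch, assuming the three sets are the original photos plus the anime and 3D conversions (an assumption based on the arithmetic, not something the plan states). The folder-per-style layout, the file stems, and the captions are hypothetical placeholders, not Simulacrum's real tags.

```python
from typing import Dict, List, Sequence


def build_hand_manifest(source_ids: Sequence[str],
                        styles: Sequence[str]) -> List[Dict[str, str]]:
    """One record per (style, source image): the same poses repeated in every style."""
    manifest: List[Dict[str, str]] = []
    for style in styles:
        for sid in source_ids:
            manifest.append({
                "image": f"{style}/{sid}.png",  # hypothetical folder-per-style layout
                "caption": f"{style}, hands",   # placeholder caption, not the real tags
            })
    return manifest


ids = [f"hagrid_{i:05d}" for i in range(5000)]                 # hypothetical file stems
manifest = build_hand_manifest(ids, ["photo", "anime", "3d"])  # assumed style sets
print(len(manifest))  # 15000
```

Because every pose is identical across the sets, the style tag is the only thing that changes between the copies of a given image.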

I will solve this hand problem.

Next, we have the coloration and style issue.

Normally, coloration and style aren't that big of a deal, but in large-scale training you end up with a... kind of bleed between compounding color problems.

There are quite a few terms for this, but I prefer to call it "the blind teaching the deaf how to see", since it's a metaphor that has existed for many years and it's directly related to the way I think. People like to coin these sorts of analyses as their own, but these behaviors show up throughout recorded human history, so I'll just use the old adage.

So let's look for some blind teaching the deaf how to see, so the deaf can learn... what they already know, in a worse way.

https://safebooru.org/index.php?page=post&s=view&id=5333410

Look at this absolutely beautiful image. The landscape, the smoke, the beast. Truly a fantastic and awe-inspiring image. Highly artistic and full of detail.

How can this HURT Simulacrum? That's what I ask myself every single time I see something like this. I rely heavily on my own ability to aesthetically assess and identify certain traits based on the information I've already seen. From what I've seen, most people can't do this, since there's a large disconnect between this part of the brain and the logical part, but I digress.

It can, if the colors are defined in the tags, if the system around it doesn't understand the basics, or if it teaches bad indications of things that aren't directly visible.

The "mountainous horizon" tag is iffy; there is no visible horizon.

This is also not good: there is an indication that foreground clouds and background clouds will bleed into each other. This image is no good for training Simulacrum in its current state; I would need to condition Simulacrum to accept multi-layered clouds, many types of scale, many colors of scale, unique horizon views of mountains, and so on.

Essentially, we want to teach neither T5 nor CLIP_L any of these colorations. We do NOT want our current colors to be impacted, as they are already very, very good. We cannot teach this to CLIP_L, so the image must be omitted from any CLIP_L training until the beast itself is trained alongside a pack of similar beasts.
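One way to encode this is to route each image per training target. A minimal sketch, assuming your trainer can take a separate image list for the steps that update CLIP_L. The `exclude_from_clip_l` flag, the `route` function, and the color-tag list are all hypothetical; they are not options of Flux or of any specific trainer. The image is not thrown away: it stays in the general set.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple

# Hypothetical coloration tags that neither text encoder should learn from this image.
COLOR_TAGS: Set[str] = {"red sky", "orange smoke", "purple clouds"}


@dataclass
class Record:
    image: str
    tags: List[str]
    exclude_from_clip_l: bool = False  # hypothetical flag, set by hand during review


def route(records: List[Record]) -> Tuple[List[Record], List[Record]]:
    """Return (clip_l_set, general_set).

    Color tags are stripped from every caption. Flagged images stay in the
    general set but are kept out of the set used for CLIP_L training.
    """
    clip_l_set: List[Record] = []
    general_set: List[Record] = []
    for r in records:
        cleaned = Record(r.image, [t for t in r.tags if t not in COLOR_TAGS],
                         r.exclude_from_clip_l)
        general_set.append(cleaned)
        if not cleaned.exclude_from_clip_l:
            clip_l_set.append(cleaned)
    return clip_l_set, general_set
```

For example, `route([Record("beast.png", ["beast", "smoke", "red sky"], exclude_from_clip_l=True), Record("girl.png", ["1girl", "tie"])])` keeps only `girl.png` in the CLIP_L set, while both images (minus `"red sky"`) remain in the general set. Flipping the flag later, once similar beasts are in the dataset, is a one-line change.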

Simply put, you can ALWAYS find a reason not to train on something if you look hard enough. Even the most beautiful artwork on the face of the earth will require prior conditioning to generate similar information.

Which is why I feed Simulacrum EVERYTHING.

ENTIRELY contrary to the lessons of earlier SD1.5 and SDXL training, Flux can actually handle things like this. Flux handles everything if you teach it carefully: using the correct words, the correct phrases, and the correct detailing, with careful interpretation of how the various objects in the image interact.

One well-tagged image often means more than 10,000 badly tagged images.

I thought long and hard about this policy before implementing it. T5 and CLIP_L WILL identify things based on their tags, so MORE images with bad hands tagged "ai generated" will simply strengthen that tag. However, those images must also be tagged with bad, incorrect, deformed hands. Essentially, positive reinforcement IS our negative prompt, because T5 knows the difference.

This is the primary reason why I don't teach the T5. I don't know how yet, even after all this testing.
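The bookkeeping behind "positive reinforcement IS our negative prompt" can be sketched like this, assuming captions are plain tag lists: count how often each defect tag appears on images that also carry "ai generated", and only put tags with enough examples behind them into the negative prompt. The defect tag names, the `min_count` threshold, and both function names are hypothetical.

```python
from collections import Counter
from typing import Iterable, List

# Hypothetical defect tags; substitute whatever your captions actually use.
DEFECT_TAGS = ("bad hands", "incorrect hands", "deformed hands")


def defect_support(captions: Iterable[List[str]]) -> Counter:
    """Count each defect tag, but only on images also tagged "ai generated"."""
    counts: Counter = Counter()
    for tags in captions:
        if "ai generated" in tags:
            counts.update(t for t in tags if t in DEFECT_TAGS)
    return counts


def negative_prompt(counts: Counter, min_count: int = 100) -> str:
    """Join the defect tags that have at least `min_count` training examples."""
    kept = [t for t in DEFECT_TAGS if counts[t] >= min_count]
    return ", ".join(kept)
```

The threshold reflects the point above: a tag seen only a handful of times hasn't been reinforced enough to be worth steering away from.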

CLIP_L, however, is an idiot. A lot of the time it's more akin to a foolish naysayer than a helper, which is why you need to teach it anatomical differences.