I have been playing with Griptape this weekend. For those of you unfamiliar with it, Griptape can call on any LLM. Yes, any: be it ChatGPT, Gemini or, in my case, a personal Llama running on my own PC. That means you can build agents and tons of other tools with it.

This article is not about Griptape, though. I just figured, let's combine it with Flux and see what we get, and the result was... poor, actually: the Flux images had artifacts and patterns in them.

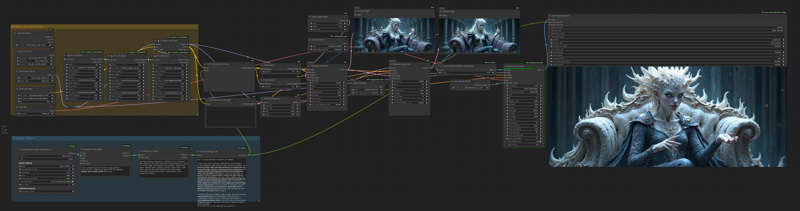

Then I remembered a video by Olivio Sarikas in which he applied a few (QUITE a few) tweaks to improve the results of Flux images. I figured, hey, why not combine things and get an LLM-powered prompt booster together with amazing results? And voilà, my workflow came to fruition. And since we already use a LoRA (the Turbo Flux LoRA), WHY STOP AT 1?

So here is a little explanation:

- The Griptape part: not really necessary. You can bypass those group nodes if you do not want to use them or are unable to (running ComfyUI with Flux and a personal LLM at the same time kind of bites into your resources).

But if you are interested and have Llama installed, play with it. Those who have an API key for one of the big models (yes, you do need one for ChatGPT, Claude, etc.) can ease up on their own resources, but then it bites into your wallet. But OK, I said this wasn't about Griptape, so you can find tons more on their GitHub and even in videos on YT.
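Griptape's own API is not shown here. The snippet below is only a plain-Python sketch of the prompt-booster idea, assuming any LLM (a local Llama, ChatGPT, Claude, ...) can be wrapped as a function that takes a string and returns a string. `boost_prompt`, `BOOSTER_INSTRUCTIONS` and `fake_llm` are hypothetical names for illustration, not Griptape classes or the author's node settings.

```python
from typing import Callable

# Hypothetical: any LLM backend wrapped as "prompt in, reply out".
LLM = Callable[[str], str]

# Hypothetical instruction text, not taken from the author's Griptape nodes.
BOOSTER_INSTRUCTIONS = (
    "Rewrite the following idea as a detailed image prompt for Flux. "
    "Describe subject, setting, lighting and style in one paragraph.\n\nIdea: "
)


def boost_prompt(idea: str, llm: LLM) -> str:
    """Expand a short idea into a detailed positive prompt using any LLM."""
    idea = idea.strip()
    if not idea:
        raise ValueError("idea must not be empty")
    reply = llm(BOOSTER_INSTRUCTIONS + idea).strip()
    # Fall back to the raw idea if the model returns nothing useful.
    return reply or idea


def fake_llm(prompt: str) -> str:
    # Offline stand-in for a real model so the sketch runs anywhere.
    idea = prompt.split("Idea: ", 1)[1]
    return f"A highly detailed photograph of {idea}, golden hour lighting, 35mm film look"


if __name__ == "__main__":
    print(boost_prompt("a viking god in a snowy forest", fake_llm))
```

Swapping `fake_llm` for a function that calls your local Llama or a paid API is the whole point: the booster does not care which model sits behind it.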

- The yellow group is one we are pretty much familiar with. Right now it is connected to the GGUF UNet models, but you can connect it to the upper nodes instead if you use the Marduk Flux models that are in the regular models folder. Then, of course, there are some LoRA stackers and the Apply LoRA Stack node.

Notice how the first LoRA is Flux1-Turbo-Alpha. We will use it in both KSamplers, but with a little twist, thanks to our good friend Olivio.
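To make the stack layout concrete, here is a rough Python model of a LoRA stack as an ordered list of (name, model strength, clip strength) entries, with Flux1-Turbo-Alpha in the first slot. Only the Turbo LoRA name comes from this post; `some_detail_lora`, `unused_slot`, every strength value and the `active_loras` helper are hypothetical placeholders, not the actual stacker node's internals.

```python
from typing import NamedTuple


class LoraEntry(NamedTuple):
    name: str              # LoRA file name as picked in the stacker node
    model_strength: float  # how strongly it patches the diffusion model
    clip_strength: float   # how strongly it patches the text encoder


TURBO = "Flux1-Turbo-Alpha"

# Hypothetical stack: only Flux1-Turbo-Alpha is from the post; the other
# entries and all strength values are placeholders to experiment with.
lora_stack = [
    LoraEntry(TURBO, 1.0, 1.0),
    LoraEntry("some_detail_lora", 0.5, 0.5),
    LoraEntry("unused_slot", 0.0, 0.0),
]


def active_loras(stack: list[LoraEntry]) -> list[str]:
    """Names of entries with a non-zero strength, in stack order."""
    return [e.name for e in stack if e.model_strength != 0 or e.clip_strength != 0]


if __name__ == "__main__":
    print(active_loras(lora_stack))
```

The zero-strength filter is just a convenience in this sketch for spotting which slots actually do something.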

Now, the easiest thing to do is to watch the video; otherwise you are stuck with my TL;DR explanation (I should have mentioned this earlier, but hey, hindsight is 20/20 and all).

But yeah, those who say A have to say B. The CLIP Text Encode node might look a bit weird, but if you do not use the Griptape nodes you can convert its text input back to a widget and use it as a regular positive prompt.

The first KSampler: at first glance nothing unusual. Empty latent, noise seed, empty negative prompt.

The positive prompt goes through a FluxGuidance node set to 3.5, and of course the model comes in from the LoRA applicator (mainly for the Flux Turbo LoRA, but feel free to play with other LoRAs as well). 8 steps, going from step zero to step eight. So far so good. But those who loaded the workflow and are attentive enough will ask: what about the other FluxGuidance node and the ModelSamplingFlux node? Those are for the second KSampler, be patient.
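The first pass boils down to a handful of settings. The dataclass below just records the values stated above (empty latent, empty negative, FluxGuidance 3.5, steps 0 to 8, no ModelSamplingFlux) in one place. `PassSettings` and its field names are hypothetical and do not correspond to ComfyUI node inputs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PassSettings:
    latent_source: str       # "empty" for a fresh latent
    flux_guidance: float     # value on the FluxGuidance node
    start_step: int
    end_step: int
    negative_prompt: str = ""               # empty negative, as in the post
    uses_model_sampling_flux: bool = False  # only the second pass uses it

    @property
    def steps(self) -> int:
        return self.end_step - self.start_step


# First KSampler: Turbo-LoRA model, fast but rough.
first_pass = PassSettings(
    latent_source="empty",
    flux_guidance=3.5,
    start_step=0,
    end_step=8,
)

if __name__ == "__main__":
    print(first_pass, first_pass.steps)
```

Sampler name, scheduler and latent size are not given in the post, so they are left out rather than guessed.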

And to those who asked "Only 8 steps?": yeah, that is what the Turbo LoRA is for. SPEED. But speed comes at a price: poor images with a lot of artifacts and a pattern that is, alas, a Flux weirdness. So in comes KSampler 2. Its input is not an empty latent but the first KSampler's result, which you can see in the first preview. It gets a separate seed generator and the same negative, BUT the model comes through the ModelSamplingFlux node and the guidance is 7.5. This is what cleans that first bad image up really well AND adds more detail.
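The data flow between the two KSamplers can be sketched with a stand-in sampler that only records what it receives. This is not ComfyUI code: `TwoPassRecorder`, `two_pass` and the string labels for models and latents are hypothetical. The sketch only shows the wiring described above: pass 2 refines pass 1's latent, uses its own seed, routes the same LoRA-patched model through ModelSamplingFlux, and raises guidance from 3.5 to 7.5.

```python
from dataclasses import dataclass, field


@dataclass
class SamplerCall:
    model: str
    guidance: float
    latent: str
    seed: int


@dataclass
class TwoPassRecorder:
    """Stand-in for ComfyUI: records what each KSampler receives."""
    calls: list[SamplerCall] = field(default_factory=list)

    def ksampler(self, model: str, guidance: float, latent: str, seed: int) -> str:
        self.calls.append(SamplerCall(model, guidance, latent, seed))
        return f"latent_from_pass_{len(self.calls)}"


def two_pass(sampler: TwoPassRecorder, lora_model: str, seed_1: int, seed_2: int) -> str:
    # Pass 1: Turbo-LoRA model, FluxGuidance 3.5, starting from an empty latent.
    rough = sampler.ksampler(lora_model, 3.5, "empty_latent", seed_1)
    # Pass 2: same LoRA model routed through ModelSamplingFlux, FluxGuidance 7.5,
    # refining pass 1's latent instead of starting from scratch.
    refined_model = f"ModelSamplingFlux({lora_model})"
    return sampler.ksampler(refined_model, 7.5, rough, seed_2)


if __name__ == "__main__":
    rec = TwoPassRecorder()
    print(two_pass(rec, "flux+turbo", 1, 2))
    for call in rec.calls:
        print(call)
```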

We then VAE decode it to see another preview, but also send it through the "Image Contrast Adaptive Sharpening" node to make the image sharper, and then through the Ultimate Upscaler. BUT wait, notice one little detail: here the model bypasses the LoRAs. What, no Turbo? Well, it turns out the LoRA does not add speed there; on the contrary, it is actually faster without the Turbo LoRA.
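The final stage and its model wiring can be summarised in a few lines. `IMAGE_CHAIN` and `model_for_stage` are hypothetical helpers, and the stage labels are plain names rather than ComfyUI class names. The point they capture is the detail above: both KSamplers use the LoRA-patched model, while the Ultimate Upscaler takes the base model with the LoRAs bypassed.

```python
# Hypothetical labels for the final image stage, in processing order.
IMAGE_CHAIN = [
    "VAE Decode",                          # latent from KSampler 2 -> image
    "Image Contrast Adaptive Sharpening",  # sharpen before upscaling
    "Ultimate Upscaler",                   # upscale the sharpened image
]


def model_for_stage(stage: str, base_model: str, lora_model: str) -> str:
    """Pick the model input for a stage, following the wiring in the post."""
    if stage in ("KSampler 1", "KSampler 2"):
        # LoRA-patched model (KSampler 2 also goes through ModelSamplingFlux).
        return lora_model
    if stage == "Ultimate Upscaler":
        # LoRAs bypassed: reported to run faster without the Turbo LoRA here.
        return base_model
    raise ValueError(f"unknown stage: {stage}")


if __name__ == "__main__":
    for stage in ("KSampler 1", "KSampler 2", "Ultimate Upscaler"):
        print(stage, "->", model_for_stage(stage, "flux", "flux+turbo"))
```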

And then you are set: this should make the sharpest images with lots of detail. Even Odin went "Damn Bruh".