After exploring various local image-to-video models and workflows, I've found CogVideoX-5b to be the best option, for now. I’ve been using Schmede's workflow, which is available here, and it’s by far the easiest to use.

The Challenge with CogVideoX-5b

CogVideoX-5b requires a very specific prompt structure to achieve smooth, fluid movement. Schmede addressed this by creating a custom GPT, which you can find here. While that solution works perfectly, I wanted a locally run, completely free alternative that doesn’t rely on ChatGPT.

The Solution: A Custom Python Script

Attached to this article is a Python script I created with the help of ChatGPT. This script uses Ollama, Llava, and Qwen2.5-coder:32b to:

Analyse an input image.

Generate a descriptive prompt.

Provide positive/negative prompts, settings advice, and explanations—similar to Schmede’s custom GPT output.

Setup Instructions

Install Python

Download and install Python from https://www.python.org/downloads/. If you already have it installed (e.g., from using ComfyUI), make sure it’s up to date.

Install Required Libraries

Open a command prompt and run:

pip install requests pillow

Install Ollama

Download and install Ollama from https://ollama.com/. Note: Ollama is used via the command line, code, or custom frontends; it doesn’t include a GUI.

Download Required Models

Llava (Vision Model):

Open a command prompt and run:

ollama run llava

This model analyses your input image.

Qwen2.5-coder:32b (Prompt Adjustment Model):

Run the command:

ollama run qwen2.5-coder:32b

Note: Qwen2.5-coder:32b is resource-intensive, and I have only tested it on an RTX 4090 GPU. If your system struggles, you can choose a smaller model variant here, but be aware that smaller models may yield less consistent results. See Troubleshooting.
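Before launching the script, it can help to confirm that Ollama is reachable and that both models were actually downloaded. The sketch below is not part of the attached script; it assumes Ollama's default local address (http://localhost:11434) and its GET /api/tags endpoint, which lists installed models. The helper names are hypothetical, and it uses only the Python standard library so it runs without extra installs.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address
REQUIRED_MODELS = ["llava", "qwen2.5-coder:32b"]


def ollama_is_running(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if a server answers with HTTP 200 at base_url, False otherwise."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, ValueError):
        # URLError/HTTPError are OSError subclasses; refused connections,
        # timeouts and malformed URLs all count as "not reachable".
        return False


def normalize(name: str) -> str:
    """Ollama treats a model name without a tag as '<name>:latest'."""
    return name if ":" in name else f"{name}:latest"


def installed_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Return the names of locally installed models from GET /api/tags."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        data = json.load(resp)
    return [model["name"] for model in data.get("models", [])]


def missing_models(installed: list[str], required: list[str]) -> list[str]:
    """Return the required models that are not in the installed list."""
    have = {normalize(name) for name in installed}
    return [name for name in required if normalize(name) not in have]


if __name__ == "__main__":
    if not ollama_is_running():
        print("Ollama is not reachable. Start it (for example with 'ollama serve').")
    else:
        for name in missing_models(installed_models(), REQUIRED_MODELS):
            print(f"Missing model, download it with: ollama run {name}")
```

The `normalize` step matters because `ollama run llava` installs the model under the name `llava:latest`, so a plain string comparison would wrongly report it as missing.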

Run the Script

Close all open windows.

Execute the Python script to launch the UI and accompanying command prompt.

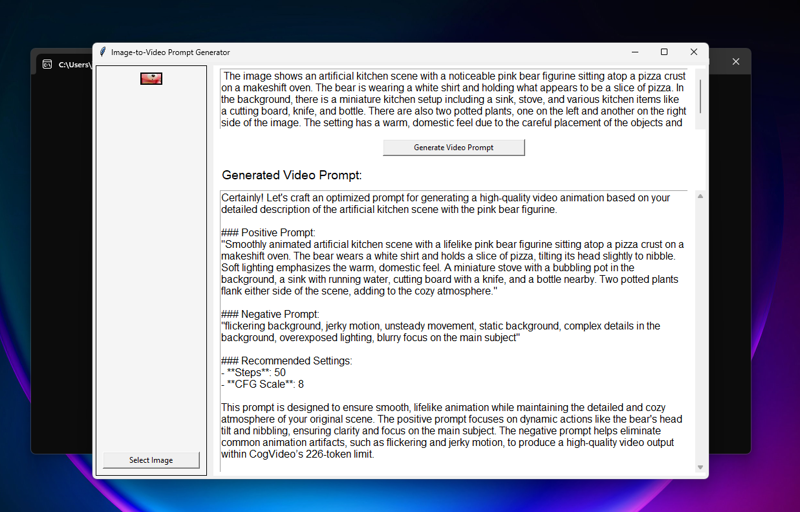

How to Use the Script

Load Your Image

Click Select Image and choose your desired file.

The preview pane will display a thumbnail of the selected image.
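The script's image-handling code isn't reproduced in this article. As a rough sketch of what has to happen after Select Image: the file is read and base64-encoded (the format Ollama's /api/generate endpoint expects in its `images` field), and the preview is scaled down while keeping its aspect ratio. `encode_image`, `thumbnail_size`, and the 256-pixel preview size are hypothetical; in a real UI, Pillow's `Image.thumbnail()` performs this kind of scaling for you.

```python
import base64
from pathlib import Path


def encode_image(path: str) -> str:
    """Read an image file and return its bytes as a base64 string for Ollama's 'images' field."""
    return base64.b64encode(Path(path).read_bytes()).decode("ascii")


def thumbnail_size(width: int, height: int, max_side: int = 256) -> tuple[int, int]:
    """Scale (width, height) down to fit a max_side square, keeping the aspect ratio.

    Images that already fit are left at their original size.
    """
    scale = min(1.0, max_side / max(width, height))
    return max(1, round(width * scale)), max(1, round(height * scale))
```

For example, a 1920×1080 image gets a 256×144 preview, while a 100×50 image is shown at its original size.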

Generate Video Prompt

Ensure Ollama is running.

Click Generate Video Prompt.

The script sends the image to Llava to generate a description.

That description is processed by Qwen2.5-coder:32b, which refines it into:

Positive Prompt

Negative Prompt

Recommended settings

A brief explanation of its choices.
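The two-step flow above can be sketched roughly as follows. This is not the attached script: it assumes Ollama's default address and its /api/generate endpoint called with `"stream": false`, which returns the full reply in a `response` field. The instruction prompts, section titles, and helper names are hypothetical placeholders, not the prompts the script actually sends.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"
VISION_MODEL = "llava"
TEXT_MODEL = "qwen2.5-coder:32b"
TITLES = ("Positive Prompt", "Negative Prompt", "Settings", "Explanation")

# Hypothetical instructions; the real script's prompts are not reproduced here.
DESCRIBE_PROMPT = "Describe this image in detail: subject, setting, lighting and camera angle."
REFINE_TEMPLATE = (
    "Turn this image description into a CogVideoX-5b video prompt.\n"
    "Reply with lines starting 'Positive Prompt:', 'Negative Prompt:', "
    "'Settings:' and 'Explanation:'.\n\nDescription:\n{description}"
)


def generate(model, prompt, images=None, base_url=OLLAMA_URL):
    """Call Ollama's /api/generate without streaming and return the 'response' text."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    if images:
        payload["images"] = images  # list of base64-encoded image strings
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    # Generous timeout: a 32b model can take a while on the first call.
    with urllib.request.urlopen(req, timeout=600) as resp:
        return json.load(resp)["response"]


def image_to_video_prompt(image_b64, generate_fn=generate):
    """Step 1: describe the image with the vision model. Step 2: refine it with the text model."""
    description = generate_fn(VISION_MODEL, DESCRIBE_PROMPT, images=[image_b64])
    return generate_fn(TEXT_MODEL, REFINE_TEMPLATE.format(description=description))


def split_sections(text, titles=TITLES):
    """Split the refined reply into {title: body}, assuming each section starts with 'Title:'."""
    sections, current = {}, None
    for line in text.splitlines():
        stripped = line.strip()
        matched = next((t for t in titles if stripped.startswith(t + ":")), None)
        if matched:
            current = matched
            sections[current] = stripped[len(matched) + 1:].strip()
        elif current is not None:
            # Continuation line: append it to the section we are currently in.
            sections[current] = (sections[current] + "\n" + stripped).strip()
    return sections
```

Passing `generate_fn` as a parameter keeps the two-step logic separate from the HTTP call, so you can swap in a different model name or a fake function for testing without touching the pipeline itself.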

Troubleshooting

Qwen2.5-coder:32b is slow or crashes.

This model is large and may not run efficiently on some machines. To switch to a smaller model:

Browse options at Ollama Library.

Use the corresponding ollama run command to download the smaller model.

Edit the script:

Open the Python file in a text editor (e.g., Notepad).

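Find the line:

MODEL_NAME_Step_Two = "qwen2.5-coder:32b"

and replace qwen2.5-coder:32b with the name of the smaller model, for example:

MODEL_NAME_Step_Two = "qwen2.5-coder:14b"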

If you are not sure which name to use for the new model, run "ollama list" in a command prompt; it shows the names of all the models you have installed.
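If you'd rather pull those names from Python, here is a minimal sketch that runs `ollama list` and keeps the first column. It assumes the output is a header row starting with NAME followed by one row per model with the name first; the helper names are hypothetical and not part of the attached script.

```python
import subprocess


def parse_ollama_list(output: str) -> list[str]:
    """Return the NAME column from `ollama list` output, skipping the header row."""
    names = []
    for line in output.splitlines():
        parts = line.split()
        if not parts or parts[0] == "NAME":
            continue  # skip blank lines and the header
        names.append(parts[0])
    return names


def installed_model_names() -> list[str]:
    """Run `ollama list` and return the installed model names."""
    result = subprocess.run(["ollama", "list"], capture_output=True, text=True, check=True)
    return parse_ollama_list(result.stdout)


if __name__ == "__main__":
    try:
        names = installed_model_names()
    except (FileNotFoundError, subprocess.CalledProcessError) as err:
        print(f"Could not run 'ollama list': {err}")
    else:
        for name in names:
            print(name)  # any of these can be used as the value of MODEL_NAME_Step_Two
```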

Future Improvements

If there’s enough interest, I may update the script to:

Include an installer.

Add a configuration file for model names.

Improve overall usability.

It works right now and that's all that really matters.

Acknowledgements

Huge thanks to Schmede for developing the original workflow and sharing their instructions for creating the custom GPT. While Schmede’s GPT gives superior results thanks to OpenAI's models, this local solution is a viable alternative for those who prefer not to use a subscription-based service like ChatGPT.

Let me know if you have any issues or questions, and I’ll do my best to help! Please note that I am not an expert at coding; I used GPT to help me put together this quick and dirty solution. It works, though, and I hope it's of use to someone :).

Update:

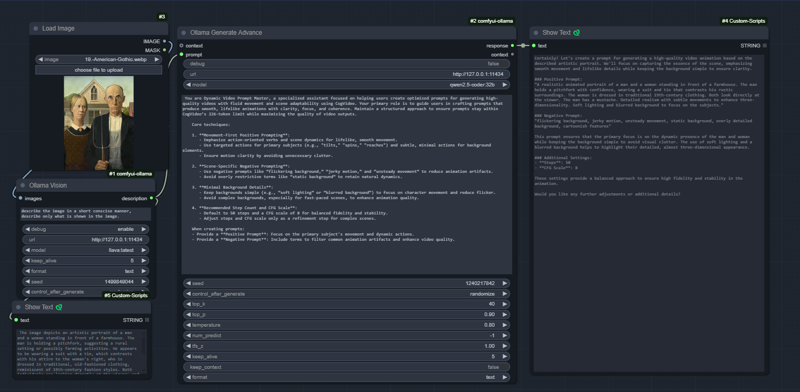

For anyone having issues with the script, or who would rather use ComfyUI, I have added a ComfyUI workflow using the stavsap/comfyui-ollama nodes. To use it, install Ollama as described above and load the workflow in ComfyUI.

I created the original script because I was using Pinokio's one-click installer for CogVideo. If you're using ComfyUI, the workflow outputs the same information, and it also makes it easier to edit the prompt sent to the LLM. Hope it helps.

Update 02/04/2025:

I want to keep this article up because it was a good solution at the time, and I was happy with it. That being said, I have now created https://github.com/LamEmil/ai-prompt-enhancer, which does essentially the same thing in a much more sophisticated and improved way. It takes example prompts you provide in a .txt file and creates new ones based on them. See the GitHub page for further details. I would highly recommend it over this script.