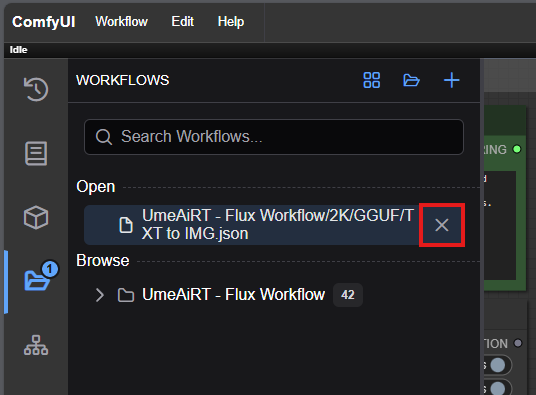

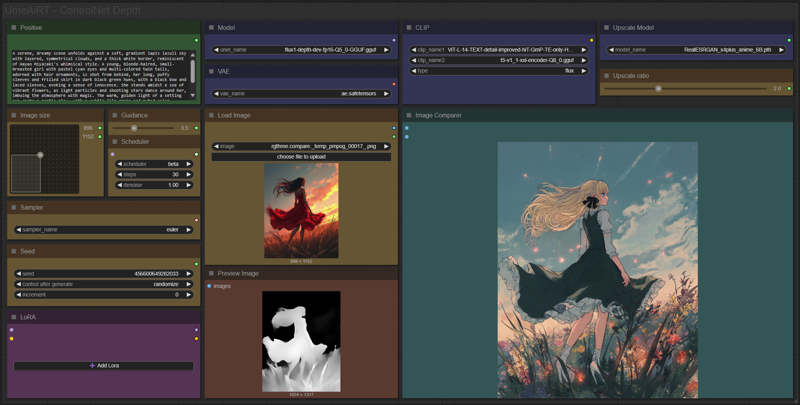

Step-by-Step Guide Series:

ComfyUI - ControlNet Workflow

This article accompanies this workflow: link

Foreword :

English is not my mother tongue, so I apologize for any errors. Do not hesitate to send me a message if you find any.

This guide is intended to be as simple as possible, and certain terms will be simplified.

Workflow description :

The aim of this workflow is to generate images from a reference image and a text prompt, all in one simple window.

Prerequisites :

Flux.1

Depth

boricuapab/flux1-depth-dev-fp8 · Hugging Face

"flux1-depth-dev-fp8" in ComfyUI\models\unet

Canny

boricuapab/flux1-canny-dev-fp8 · Hugging Face

"flux1-canny-dev-fp8" in ComfyUI\models\unet

black-forest-labs/FLUX.1-dev at main (huggingface.co)

"ae" in \ComfyUI\models\vae

comfyanonymous/flux_text_encoders at main (huggingface.co)

"t5xxl_fp8_e4m3fn" in \ComfyUI\models\clip

"clip_l" in \ComfyUI\models\clip

For GGUF

I recommend :

24 GB VRAM: Q8_0 + T5_Q8 or FP8

16 GB VRAM: Q5_K_S + T5_Q5_K_M or T5_Q3_K_L

<12 GB VRAM: Q4_K_S + T5_Q3_K_L
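The recommendation above can be written as a small lookup. This is only a sketch: the function name `recommend_quant` is hypothetical, and since the list gives no advice for cards between 12 and 16 GB, the code assumes they fall back to the smallest tier.

```python
def recommend_quant(vram_gb):
    """Map available VRAM (in GB) to the quantizations recommended in this guide."""
    if vram_gb >= 24:
        return {"model": "Q8_0", "t5": ["T5_Q8", "FP8"]}
    if vram_gb >= 16:
        return {"model": "Q5_K_S", "t5": ["T5_Q5_K_M", "T5_Q3_K_L"]}
    # Assumption: the guide only says "<12 GB"; cards between 12 and 16 GB
    # are treated the same way here to stay on the safe side.
    return {"model": "Q4_K_S", "t5": ["T5_Q3_K_L"]}


if __name__ == "__main__":
    for vram in (8, 16, 24):
        print(vram, "GB ->", recommend_quant(vram))
```

Treat the result as a starting point. If generation runs out of memory, try the next smaller quantization.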

GGUF_Depth

SporkySporkness/FLUX.1-Depth-dev-GGUF · Hugging Face

"flux1-depth-dev-fp16-Q8_0-GGUF" in ComfyUI\models\unet

GGUF_Canny

SporkySporkness/FLUX.1-Canny-dev-GGUF · Hugging Face

"flux1-canny-dev-fp16-Q8_0-GGUF" in ComfyUI\models\unet

GGUF_clip

city96/t5-v1_1-xxl-encoder-gguf at main (huggingface.co)

"t5-v1_1-xxl-encoder-Q8_0.gguf" in \ComfyUI\models\clip

Better flux text encoder

ViT-L-14-TEXT-detail-improved-hiT-GmP-TE-only-HF.safetensors · zer0int/CLIP-GmP-ViT-L-14 at main (huggingface.co)

"ViT-L-14-GmP-ft-TE-only-HF-format.safetensors" in \ComfyUI\models\clip

Upscaler

ESRGAN/4x_NMKD-Siax_200k.pth · uwg/upscaler at main (huggingface.co)

"4x_NMKD-Siax_200k.pth" in \ComfyUI\models\upscale_models

ControlNet (for v5 and earlier)

Canny: flux-canny-controlnet-v3.safetensors

Depth: flux-depth-controlnet-v3.safetensors

Hed: flux-hed-controlnet-v3.safetensors

https://huggingface.co/XLabs-AI/flux-controlnet-hed-v3/blob/main/flux-hed-controlnet-v3.safetensors

in \ComfyUI\models\xlabs\controlnets
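To verify that the files are where the workflow expects them, a short script like the one below can help. It is a sketch under assumptions: `missing_models` and `EXPECTED` are hypothetical names, the list only covers the basic (non-GGUF) files named above, and because this guide does not give file extensions, files are matched by name without extension.

```python
from pathlib import Path

# Folder (relative to the ComfyUI root) -> file names without extension,
# taken from the prerequisites listed in this guide (basic workflow only).
EXPECTED = {
    "models/unet": ["flux1-depth-dev-fp8", "flux1-canny-dev-fp8"],
    "models/vae": ["ae"],
    "models/clip": ["t5xxl_fp8_e4m3fn", "clip_l"],
    "models/upscale_models": ["4x_NMKD-Siax_200k"],
}


def missing_models(comfy_root, expected=EXPECTED):
    """Return a list of 'folder/name' entries that were not found on disk."""
    root = Path(comfy_root)
    missing = []
    for folder, names in expected.items():
        directory = root / folder
        # Collect the names (without extension) of the files actually present.
        present = set()
        if directory.is_dir():
            present = {p.stem for p in directory.iterdir() if p.is_file()}
        for name in names:
            if name not in present:
                missing.append(f"{folder}/{name}")
    return missing


if __name__ == "__main__":
    # Assumption: the script is run from the folder that contains "ComfyUI".
    for entry in missing_models("ComfyUI"):
        print("Missing:", entry)
```

For the GGUF workflow, replace the entries in `EXPECTED` with the GGUF file names you downloaded.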

Custom Nodes :

Don't forget to close the workflow and open it again once the nodes have been installed.

Overview of the different versions :

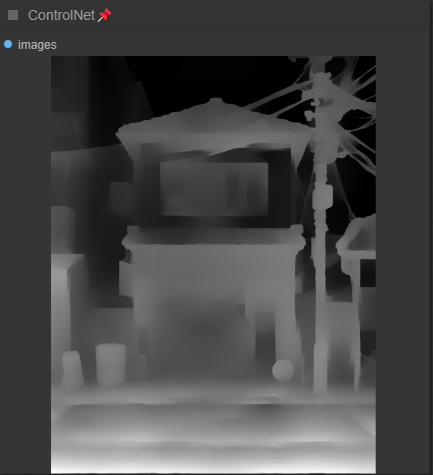

DEPTH

Here we see that the overall shapes and volumes of the image are captured.

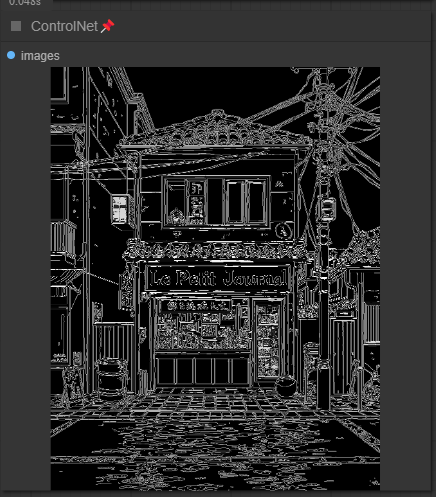

CANNY

It picks up all the lines (edges) of the original image.

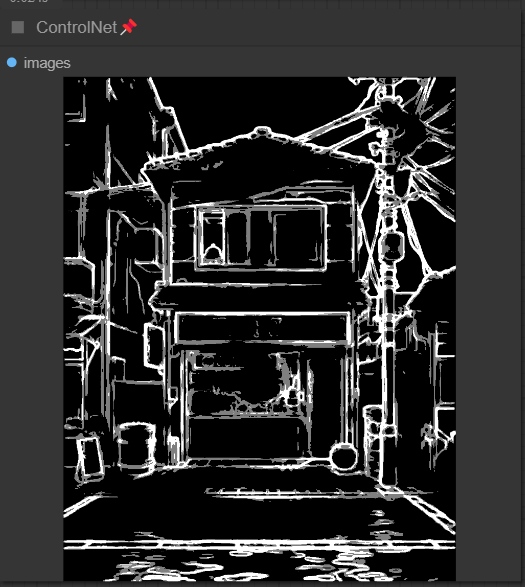

HED

It resembles CANNY, but retains only the main lines.
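To give an intuition of what "keeping the lines" means, here is a deliberately simplified edge detector in plain Python. It is not the real Canny algorithm (no smoothing, no line thinning, no hysteresis) and HED is a learned model that works differently. The function `edge_map` and the tiny test image are hypothetical and only illustrate that a stricter threshold keeps fewer, stronger lines.

```python
def edge_map(gray, threshold):
    """Mark pixels (1) where brightness changes strongly compared to neighbours."""
    height, width = len(gray), len(gray[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gray[y][x + 1] - gray[y][x - 1]  # horizontal brightness change
            gy = gray[y + 1][x] - gray[y - 1][x]  # vertical brightness change
            if (gx * gx + gy * gy) ** 0.5 >= threshold:
                edges[y][x] = 1
    return edges


# Tiny grayscale image: a faint line (0 -> 40) and a strong line (40 -> 255).
image = [[0, 0, 40, 40, 255, 255, 255] for _ in range(5)]

all_lines = edge_map(image, threshold=30)    # faint and strong lines are kept
main_lines = edge_map(image, threshold=100)  # only the strong line is kept

if __name__ == "__main__":
    print(all_lines[2])
    print(main_lines[2])
```

With the low threshold both lines are detected, which is closer in spirit to CANNY. With the high threshold only the main line remains, which is loosely comparable to the kind of result HED gives.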

Usage :

In this new version of the workflow everything is organized by color:

Green is what you want to create, also called the prompt,

Yellow is all the parameters to adjust the image,

Blue is for the model files used by the workflow,

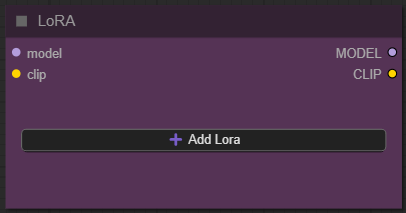

Purple is for LoRA.

We will now see how to use each node:

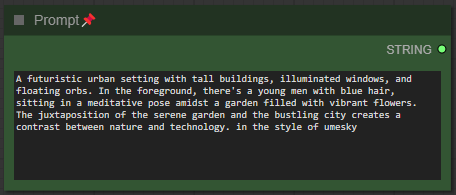

Write what you want in the “Prompt” node :

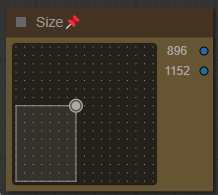

Select image format :

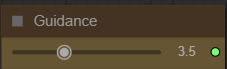

Choose the guidance level :

I recommend a value between 3.5 and 4.5. The lower the number, the more freedom the model has. The higher the number, the more strictly the image follows your prompt.

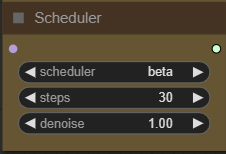

Choose a scheduler and number of steps :

I recommend the normal or beta scheduler and between 20 and 30 steps. The higher the number of steps, the better the quality, but the longer it takes to get an image.

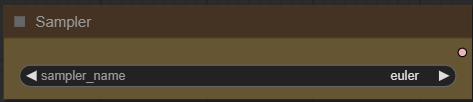

Choose a sampler :

I recommend euler.

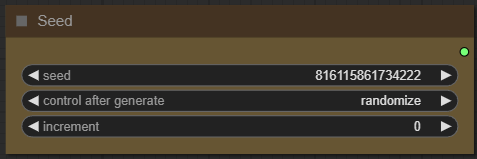

Define a seed or let ComfyUI generate one:
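The recommended settings from the previous steps can be summarized in a short sketch. The names `RECOMMENDED`, `check_settings` and `pick_seed` are hypothetical and are not part of ComfyUI. The seed range used here is an arbitrary assumption for illustration.

```python
import random

# Values recommended in this guide.
RECOMMENDED = {
    "guidance": (3.5, 4.5),
    "steps": (20, 30),
    "schedulers": ("normal", "beta"),
    "sampler": "euler",
}


def check_settings(guidance, steps, scheduler, sampler):
    """Return notes for every setting that is outside the guide's recommendations."""
    notes = []
    low, high = RECOMMENDED["guidance"]
    if not low <= guidance <= high:
        notes.append(f"guidance {guidance} is outside {low}-{high}")
    low, high = RECOMMENDED["steps"]
    if not low <= steps <= high:
        notes.append(f"steps {steps} is outside {low}-{high}")
    if scheduler not in RECOMMENDED["schedulers"]:
        notes.append(f"scheduler '{scheduler}' is not normal or beta")
    if sampler != RECOMMENDED["sampler"]:
        notes.append(f"sampler '{sampler}' is not euler")
    return notes


def pick_seed(seed=None):
    """Use the given seed, or draw a random one (range is an assumption)."""
    return seed if seed is not None else random.randrange(2**32)


if __name__ == "__main__":
    print(check_settings(guidance=3.5, steps=25, scheduler="beta", sampler="euler"))
    print(pick_seed())
```

Settings outside these ranges are not wrong; the notes only point out where you deviate from the recommendations. Reusing the same seed with the same settings lets you reproduce an image.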

Choose how many LoRAs you want to use, and configure each one :

If you don't know what a LoRA is, just don't activate any.

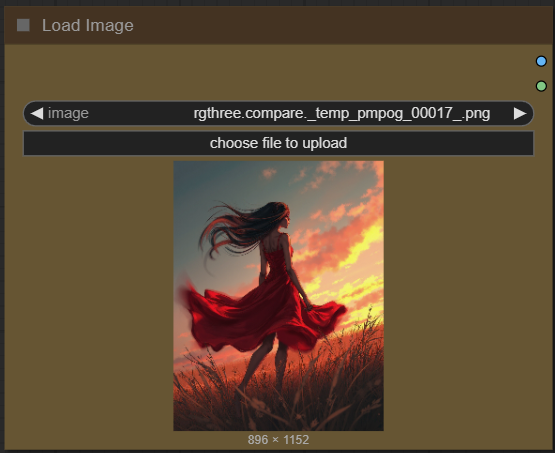

Load your base image :

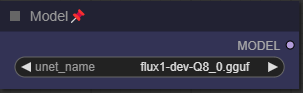

Choose your model:

This setting changes depending on whether you've chosen the basic or the GGUF workflow. I personally use the GGUF Q8_0 version.

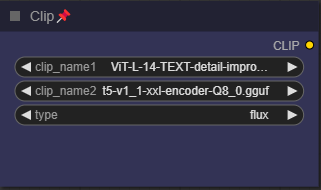

Choose a FLUX CLIP encoder and a text encoder :

I personally use the GGUF Q8_0 encoder and the text encoder ViT-L-14-TEXT-detail-improved-hiT-GmP-TE-only-HF.

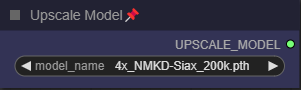

Select an upscaler : (optional)

I personally use RealESRGAN_x4plus.pth.

Now you're ready to create your image.

Just click on the “Queue” button to start:

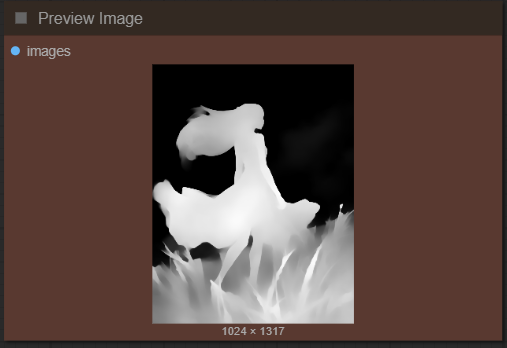
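If you prefer to start the render from a script instead of the button, ComfyUI also accepts a workflow over HTTP. The sketch below only builds the request. It assumes that ComfyUI runs locally on its default address `http://127.0.0.1:8188`, and that the workflow was exported in API format. The function name `build_queue_request` and the file name in the usage comment are hypothetical.

```python
import json
import urllib.request


def build_queue_request(workflow, server="http://127.0.0.1:8188"):
    """Build the POST request that asks ComfyUI to queue a workflow.

    `workflow` is the dictionary loaded from a workflow saved in API format.
    """
    payload = json.dumps({"prompt": workflow}).encode("utf-8")
    return urllib.request.Request(
        f"{server}/prompt",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Usage (requires a running ComfyUI; "workflow_api.json" is a hypothetical file):
#
#     with open("workflow_api.json", encoding="utf-8") as f:
#         request = build_queue_request(json.load(f))
#     urllib.request.urlopen(request)
```

The regular workflow file saved from the interface will not work here; it must be the API-format export.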

You will see a preview of the ControlNet preprocessor output.

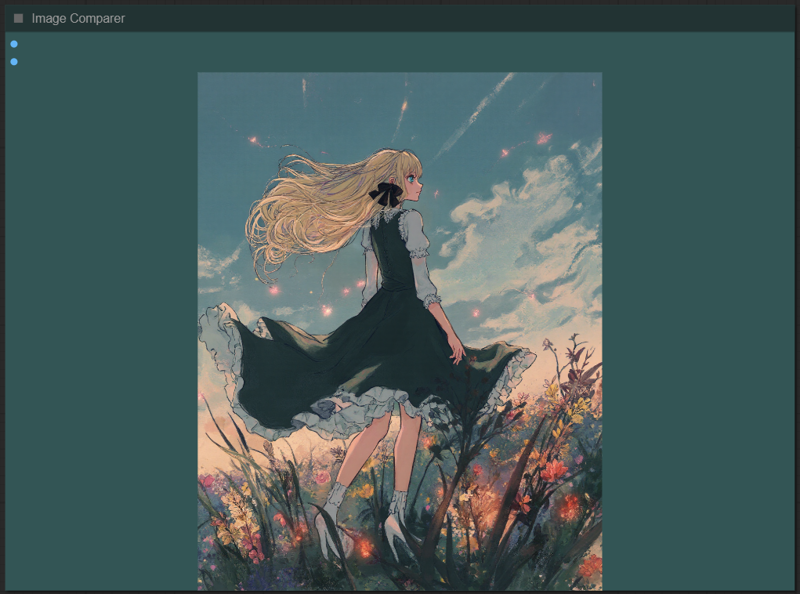

Once rendering is complete, the image appears in the “image viewer” node.

If you have enabled upscaling, a slider will show the base image and the upscaled version.

This guide is now complete. If you have any questions or suggestions, don't hesitate to post a comment.