A simple tutorial and examples for NOOBAI XL

Laxhar Dream Lab

Authored by: Niangao Special Forces

This guide covers only the basics to help beginners get started. If you are already familiar with AI image generation and only want to know how to use NOOBAI XL, you can skip directly to Section 5.

1. Introduction

In the field of AI image generation, there are many models to choose from, each built on a different architecture and suited to a different kind of image. This guide introduces NOOBAI XL, which is based on the SDXL architecture and trained primarily for anime image generation. It is trained on the complete Danbooru and e621 datasets and should be able to handle generation tasks well with as few LoRA models as possible.

2. Deployment

To use NOOBAI XL, you can either generate images online through a cloud service or deploy the model locally. The difference is that online generation services are usually paid, while local deployment places certain requirements on your device. If you already have a working setup of either kind, you can skip ahead to the sections that follow.

Online Generation

Online generation is usually faster and more comfortable to use, and it works from any device, but these services are usually paid.

Local Generation

Local deployment places certain requirements on your device, especially the graphics card. Although there are examples of running on low-end devices, the experience is far from comfortable. Generally, your device should have at least 8GB of video memory, 16GB of RAM, and 50GB of free disk space. The author uses an NVIDIA 4070ti 16GB graphics card and 32GB of RAM, which meets most needs.

The tool used in this guide is ComfyUI. It supports v-prediction, and it is also more portable and customizable. If you prefer to use webui, that is also possible.

Download ComfyUI

You can download ComfyUI from this GitHub repository:

https://github.com/comfyanonymous/ComfyUI

Once the required dependencies are installed, it is ready to use. I also recommend the one-click package made by Anzu

https://www.bilibili.com/video/BV1Ew411776J

which can automatically install related dependencies and is more suitable for beginners.

Download Models

NOOBAI XL currently has two series of models (eps-prediction and v-prediction) available for download. For now, the eps-prediction model is superior to the v-prediction model because the v-prediction model has not yet been fully trained.

You can download the model you want from:

https://civitai.com/models/833294

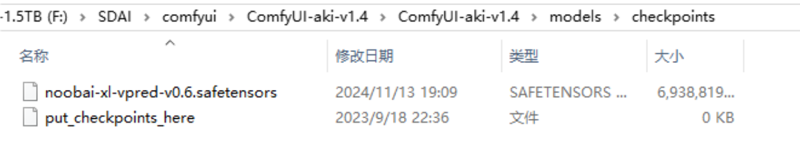

Model Import

Move the downloaded model into the ComfyUI/models/checkpoints folder and refresh the ComfyUI interface to load it.

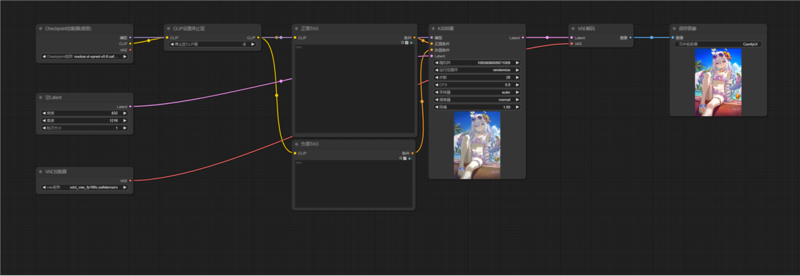
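If you prefer to script the model import step, the sketch below shows one way to do it in Python. The function name `install_checkpoint` and the example file name are hypothetical; only the `models/checkpoints` folder comes from the step above. It assumes the ComfyUI folder already exists and refuses to guess otherwise.

```python
from pathlib import Path
import shutil


def install_checkpoint(downloaded_file, comfyui_root):
    """Move a downloaded model file into ComfyUI's models/checkpoints folder.

    Both arguments are paths chosen by the caller; nothing here is part of
    ComfyUI itself.
    """
    source = Path(downloaded_file)
    target_dir = Path(comfyui_root) / "models" / "checkpoints"
    if not source.is_file():
        raise FileNotFoundError(f"model file not found: {source}")
    if not target_dir.is_dir():
        raise FileNotFoundError(f"checkpoints folder not found: {target_dir}")
    destination = target_dir / source.name
    shutil.move(str(source), str(destination))
    return destination


# Example call (hypothetical paths):
# install_checkpoint("Downloads/noobai_xl_example.safetensors", "ComfyUI")
```

After the file has been moved, refresh the ComfyUI interface as described above so the model appears in the checkpoint list.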

Workflow Import

ComfyUI lets you design your own workflow, and you can also import existing workflows. If you are using ComfyUI for the first time, I suggest starting from the following workflow template.

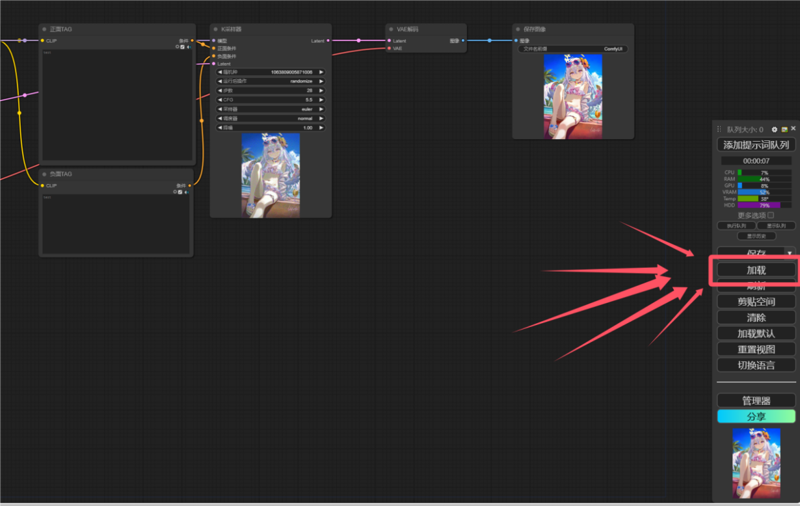

Import Method: Download the workflow file from the link.

Click the "Load" button in the interface (usually in the lower right corner).

Select the file you just downloaded to import it.

(Advanced) Custom Workflow

If you want more functionality, such as face restoration or high-resolution fix, you can add the corresponding nodes to the workflow. There are many tutorials on this online, so it is not covered here.

3. Image Generation

Characters and Artists

NOOBAI XL uses the complete Danbooru + e621 dataset. You can look up the corresponding tags in the dataset link. You can also directly use some of the tag dictionaries made for NAI3.

Precautions

Due to model differences, NAI3's artist trigger words will not work exactly the same way in NOOBAI XL.

For tags taken from Danbooru and e621, replace the underscores with spaces and add a backslash "\" before each parenthesis (note the direction of the slash; do not confuse it with "/"). For example, the Danbooru tag "lucy_(cyberpunk)" should be written as "lucy \(cyberpunk\)" in the prompt.
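The tag conversion described above can be sketched as a small Python helper. The function name `danbooru_tag_to_prompt` is made up for illustration. The escaping is needed because common prompt syntaxes, including the weighting used in the example prompt later in this guide, treat bare parentheses as emphasis markers.

```python
def danbooru_tag_to_prompt(tag: str) -> str:
    """Convert a raw Danbooru/e621 tag into the form used in a prompt."""
    # Underscores in the site tag become spaces in the prompt.
    text = tag.replace("_", " ")
    # Put a backslash before each parenthesis so it is read literally.
    text = text.replace("(", r"\(").replace(")", r"\)")
    return text


print(danbooru_tag_to_prompt("lucy_(cyberpunk)"))  # lucy \(cyberpunk\)
```

Tags without underscores or parentheses pass through unchanged, so the helper can be applied to every tag in a list.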

How to Get the Trigger Word List?

The following link provides all the characters and artist styles that the model recognizes:

How to Use the Table?

Download the files from the corresponding links to get a table in CSV format. Each row in the table represents one character or artist. For each character or artist, the "trigger" column gives its trigger word; simply copy and paste it into your prompt.

In the Danbooru character table, the "core_tags" column lists the character's core tags, which help the model reproduce the character accurately. If the generated character does not look enough like the original, add these tags to improve the likeness.

The "url" column links to a page that displays images for the tag.
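As a rough illustration of working with such a table, the sketch below searches the "trigger" column and optionally appends "core_tags". The column names come from the description above; the sample rows, the helper names, and the assumption that "core_tags" holds a comma-separated string are all hypothetical. For a real file you would read its contents instead of the sample string.

```python
import csv
import io

# Hypothetical sample data standing in for the downloaded CSV file.
SAMPLE_CSV = '''trigger,core_tags,url
example character,"blue hair,red eyes",https://example.invalid/a
another character,"hat,long hair",https://example.invalid/b
'''


def find_rows(csv_text, keyword):
    """Return rows whose trigger word contains the keyword (case-insensitive)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row for row in reader if keyword.lower() in row["trigger"].lower()]


def prompt_fragment(row, include_core_tags=False):
    """Build the text to paste into a prompt for one table row."""
    parts = [row["trigger"]]
    # Core tags are only needed when the likeness is not good enough.
    if include_core_tags and row.get("core_tags"):
        parts.append(row["core_tags"])
    return ",".join(parts)


for row in find_rows(SAMPLE_CSV, "example"):
    print(prompt_fragment(row, include_core_tags=True))
```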

ComfyUI Basic Generation Parameters

You can directly click on the corresponding interface to adjust the parameters you want.

Resolution

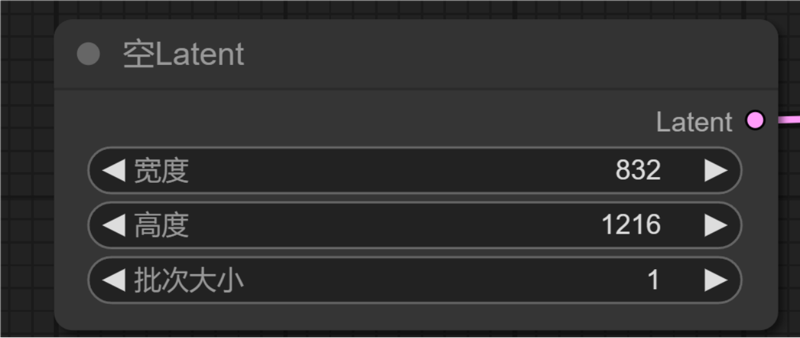

NOOBAI XL supports a variety of resolutions, but it is strongly recommended to choose from the following resolutions (width x height):

768x1344, 832x1216, 896x1152, 1024x1024, 1024x1536, 1152x896, 1216x832, 1344x768, 1536x1024

Resolutions below 512x512 will cause image errors.
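If you start from an arbitrary target size, one possible way to choose among the recommended resolutions is to pick the one with the closest aspect ratio. The sketch below does this; the function name and the choice of comparing ratios on a log scale are assumptions made for illustration, not something the model requires.

```python
import math

# Recommended resolutions listed above, as (width, height).
RECOMMENDED = [
    (768, 1344), (832, 1216), (896, 1152), (1024, 1024), (1024, 1536),
    (1152, 896), (1216, 832), (1344, 768), (1536, 1024),
]


def closest_resolution(width, height):
    """Return the recommended (width, height) closest in aspect ratio."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    target = math.log(width / height)
    # Log scale treats 2:1 and 1:2 as equally far from square.
    return min(RECOMMENDED, key=lambda wh: abs(math.log(wh[0] / wh[1]) - target))


print(closest_resolution(1920, 1080))  # (1344, 768)
```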

CLIP Skip Layer

No need to set the CLIP skip layer

Prompts

Prompts come in two types: positive prompts and negative prompts. Both affect the result, pulling the image toward what the positive prompt describes and away from what the negative prompt describes. For better results, I suggest writing prompts in the following order: number of characters (1girl/1boy), character name, artist prompt, scene/environment/camera angle, action, expression, items, quality prompts.

I also suggest writing the tag string in detail, since detailed tags give better results. Adding masterpiece, best quality, newest, absurdres, highres at the end of the tag string can significantly improve image quality.
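The suggested ordering can be sketched as a small helper that assembles a positive prompt. The group names in `PROMPT_ORDER` and the function `build_prompt` are invented for this example; only the order itself and the quality tags come from the text above.

```python
# Group names are hypothetical labels for the order suggested above.
PROMPT_ORDER = ["count", "character", "artist", "scene", "action", "expression", "items"]
QUALITY_TAGS = ["masterpiece", "best quality", "newest", "absurdres", "highres"]


def build_prompt(**groups):
    """Join tag groups in the suggested order and append the quality tags."""
    unknown = set(groups) - set(PROMPT_ORDER)
    if unknown:
        raise ValueError(f"unknown tag groups: {sorted(unknown)}")
    tags = []
    for name in PROMPT_ORDER:
        tags.extend(groups.get(name, []))
    # Quality tags go at the end of the tag string.
    tags.extend(QUALITY_TAGS)
    return ",".join(tags)


print(build_prompt(
    expression=["smug"],
    count=["1girl"],
    character=[r"klee \(genshin impact\)"],
    scene=["simple background", "white background"],
))
```

Groups may be passed in any order; the helper always emits them in the suggested sequence.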

To look up tags, you can find example images and the specific meaning of every tag on Danbooru. You can also consult the wiki on Hugging Face.

Negative prompts do not need much attention; copying a commonly used string is generally enough. If you do not want the image to contain NSFW content, add the nsfw tag to the negative prompt.

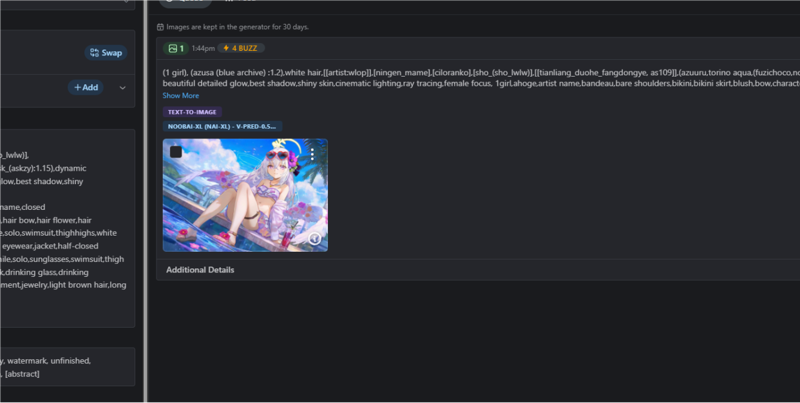

Here is an example of a positive prompt:

1 girl,(klee \(genshin_impact\):1.2 ),blonde hair,(alternate costume:1.2),(foreshortening ),smug,mesugaki,hand to own mouth,half-closed eyes ,[[artist:wlop]],[ningen_mame],[ciloranko],[sho \(sho_lwlw\)],[[tianliang_duohe_fangdongye, as109]],(azuuru,torino aqua,(fuzichoco,nobaba:1.17),ask \(askzy\):1.15),dynamic angle,depth of field,high contrast,colorful,detailed light,light leaks,beautiful detailed glow,best shadow,shiny skin,cinematic lighting,ray tracing,female focus,+++,open mouth,1girl,bare legs,black ribbon,blonde hair,blue skirt,blush,braid,fang,feet out of frame,hair ribbon,jacket,long hair,looking at viewer,multicolored clothes,multicolored jacket,pleated skirt,ribbon,shirt,simple background,skin fang,skirt,smile,solo,twin braids,white background,yellow eyes,yellow shirt,(((masterpiece,best quality,newest,absurdres,highres)))

And here is an example of a negative prompt:

lowres,bad anatomy,blurry,(worst quality:1.8),low quality,hands bad,(normal quality:1.3),bad hands,mutated hands and fingers,extra legs,extra arms,duplicate,cropped,jpeg,artifacts,long body,multiple breasts,mutated,disfigured,bad proportions,bad feet,ugly,text font ui,missing limb,monochrome,face bad,

Sampler

A sampler is the algorithm used in the denoising process that generates an image; different samplers need different numbers of steps and amounts of computation to converge. In most cases, NOOBAI XL achieves good results with the Euler and Euler a samplers (28 steps), but this does not mean it only supports these two. You can try other samplers until you find your favorite.

Generally speaking, the v-prediction model should be used with the Euler or Euler a sampler; other samplers may cause oversaturated images, ghosting, black images, and other problems. Sampler parameters that work for the eps-prediction model may not work for the v-prediction model.

CFG

CFG controls how strongly the prompt influences the generated image. A higher CFG value ties the image more closely to the prompt. The recommended CFG value is between 3 and 5.5; values that are too high or too low may produce unexpected results.
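To make the effect of the CFG value more concrete, the sketch below shows the usual classifier-free guidance combination on plain Python lists. It is a simplified illustration, not ComfyUI's actual code: `uncond` and `cond` stand for the model's predictions without and with the prompt, and the function name is made up. In practice the negative prompt takes the place of the unconditional input.

```python
def apply_cfg(uncond, cond, scale):
    """Combine two noise predictions with a guidance scale.

    A scale of 1.0 returns the prompt-conditioned prediction unchanged;
    larger values push the result further away from the unconditional one.
    """
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


# Toy numbers only, to show the direction of the effect.
print(apply_cfg([0.0, 1.0], [1.0, 1.0], 1.0))  # [1.0, 1.0]
print(apply_cfg([0.0, 1.0], [1.0, 1.0], 4.0))  # [4.0, 1.0]
```

This also hints at why very high values can give unexpected results: the prediction is extrapolated well beyond what the model produced for the prompt alone.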

VAE

Use the VAE that comes with SDXL. Using the wrong VAE may lead to fragmented images, garbled images, or unexpected distortions.

4. Example Image Display

More images:

https://fcnk27d6mpa5.feishu.cn/wiki/S8Z4wy7fSiePNRksiBXcyrUenOh

5. Summary

NOOBAI XL is an anime image generation model and does not perform well on real-person images. The training data runs up to October 24th and is annotated with Danbooru tags. For character generation, characters with more than 50 images under their Danbooru tag should give good results, and the model also supports natural-language prompts to a certain degree. You can also combine different artist tags to give the generated images a variety of styles.

When using it, you should pay attention to the following:

• The resolution should be greater than 512*512;

• No need to set the CLIP skip layer;

• Use Danbooru tags for prompts; artist tags need the artist: prefix;

• Regarding the V prediction model:

• If you are using the V prediction version, prefer the Euler sampler (not Euler A); other samplers may cause image oversaturation;

• CFG Scale is recommended to be 3.5-5.5;

• The sampler and CFG Scale parameters effective on the noise (eps) prediction model may not be effective on the V prediction model;

• Use the SDXL default VAE;

• Using the V prediction version in webui requires additional plugins or switching to the dev branch (which may introduce bugs).

6. Postscript

NOOBAI XL is an excellent anime image generation model. I believe it is not inferior to Animagine XL, Pony, and other older SDXL models. It can render a large number of characters accurately without relying on any other models, and it supports style adjustment through artist tags. I am very happy that the open-source community has gained such a powerful model. However, I have also noticed that in the closed-source commercial field there is NAI3, another anime image generation model, and next to it Pony with its large LoRA ecosystem, Animagine XL, and even the newly released NOOBAI XL all seem to lag behind. I don't know what technology NAI uses, but the most likely clue seems to be NovelAI's SMEA sampler, which can greatly enhance the detail and lighting of an image and make it look more "Anime". I look forward to the community having a substitute, or even something better, in the near future. Finally, I would like to thank Laxhar Dream Lab for adding new momentum to the community, and also the community groups that have helped NOOBAI XL.

To close, I would like to share an image of Linglan generated by NAI3 with the SMEA sampler. Unfortunately, I still cannot generate a similar image with a local model.