This article covers how to install and use the Segment Anything extension for Auto1111/ForgeUI. It combines GroundingDINO, which detects bounding boxes for concepts in any image, with Segment Anything (SAM), which then generates masks from those boxes. The masks can be used for LoRA training, img2img, ControlNet...

You can find it here: https://github.com/continue-revolution/sd-webui-segment-anything

This extension is very powerful and is IMHO worth installing Auto1111/ForgeUI just for it.

NB: If you know of alternative solutions, for ComfyUI or anything else, please leave a comment 😊

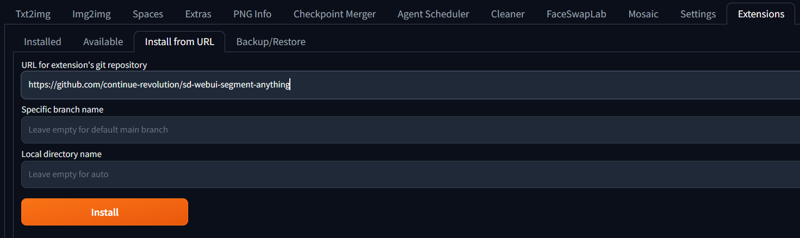

Installation

The simplest way to install it is through the "Extensions" tab > "Install from URL" in Auto1111/ForgeUI.

Follow the readme on GitHub. DO READ IT at some point: it contains a lot of valuable information, including very interesting material about ControlNet that I have not mastered yet.

There are also some videos showing how it works. It’s pretty straightforward tbh.

It will not work out of the box: you need to download a SAM model from the readme page and put it in your extension folder (for me "C:\Forge\webui\extensions\sd-webui-segment-anything-altoids"). I'm using the largest SAM-HQ model.

Also, go to the Settings tab > Segment Anything and tick "Use local groundingdino to bypass C++ problem" to get rid of some annoying messages in the terminal later on.

How to use it to generate masks

Here's a quick explanation of how to generate a single mask, before we move on to batch generation for a whole dataset 😉

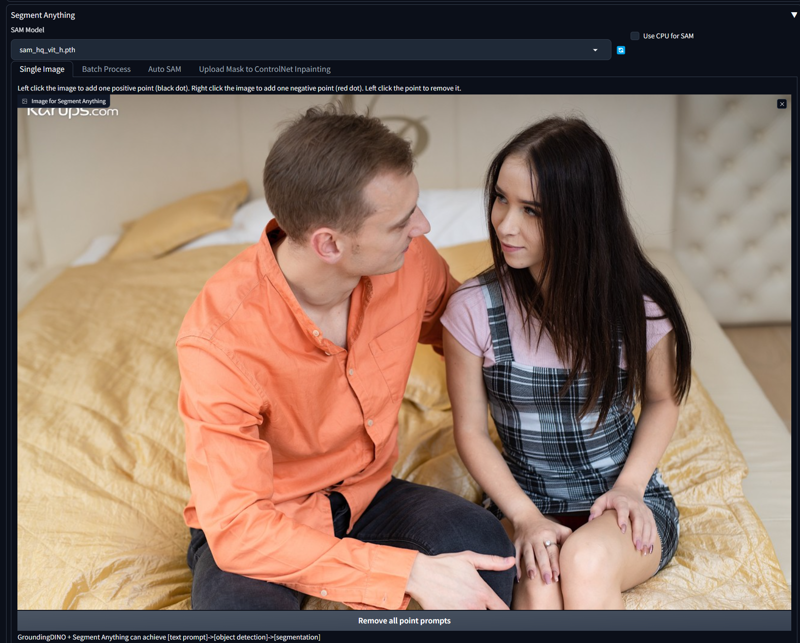

Go to either the txt2img or img2img tab, then expand the Segment Anything section.

Then select the SAM model you want to use and upload a picture.

From there you have two options:

Click on the picture to place positive and negative points marking what you want to keep or exclude. I don't use this, because it won't work for batches.

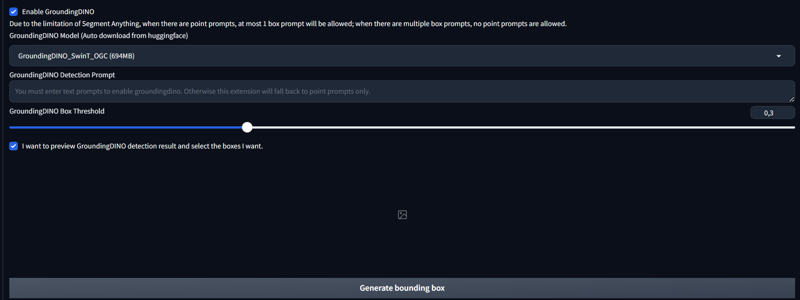

Enable GroundingDINO so you can prompt for what you want to keep.

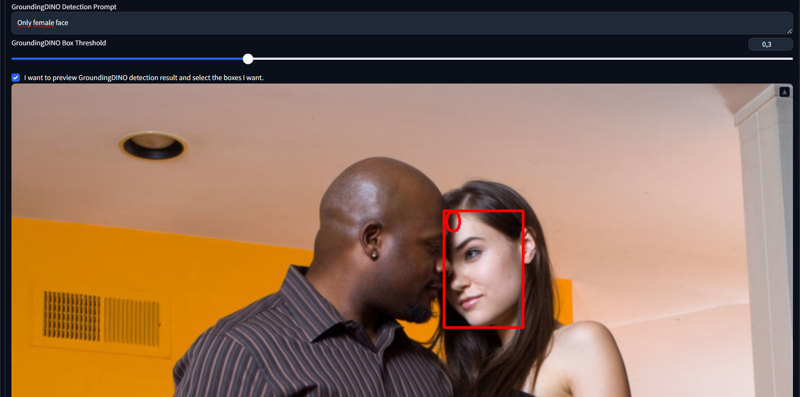

To do so, tick "Enable GroundingDINO" and select a GroundingDINO model. Despite it being the smaller one, I prefer SwinT_OGC because in my tests it could handle "Only female face" as a Detection Prompt. That's right: it's able to detect only the girl's face!

You can use detection prompts such as "Face", "Face and hair", "Person", "Body", "Mouth", "Nose", "Mouth and nose" or "Eyes" (tell me if you find good ones, please).

It is truly awesome but has some limitations. I haven't found a way to use negative prompts ("Face without mouth", for instance); it doesn't seem possible with the extension.

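The Box Threshold sets how confident the detection must be for a box to be kept: lower values are more tolerant and return more boxes, higher values are stricter. The default value of 0.3 is fine.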

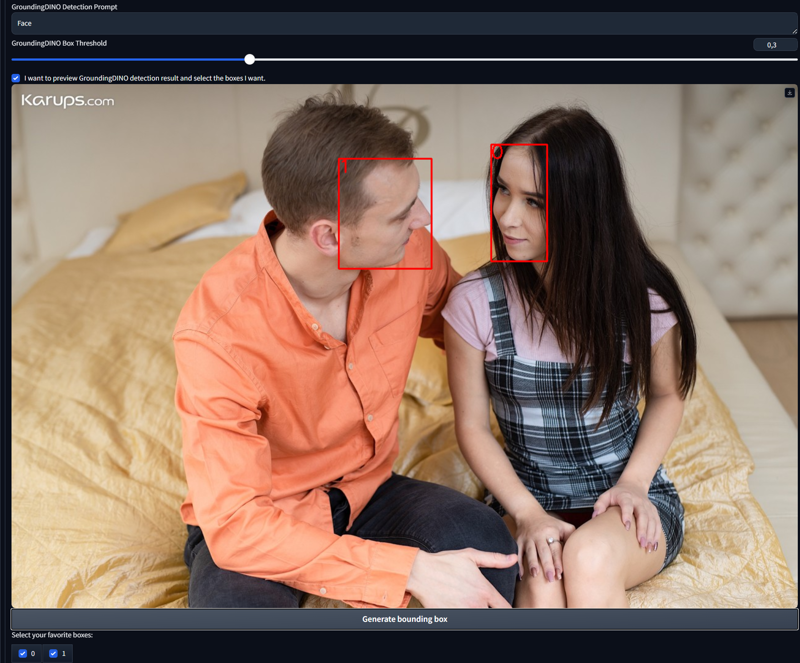
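To make the effect of the threshold concrete, here is a minimal sketch, assuming each detection is a (label, score, box) record. The `Detection` type and the `filter_boxes` helper are hypothetical illustrations, not the extension's or GroundingDINO's API. Boxes whose score does not exceed the threshold are dropped, so lowering it keeps more (and less certain) boxes.

```python
from typing import NamedTuple


class Detection(NamedTuple):
    """Hypothetical detection record: a label, a confidence score and an (x0, y0, x1, y1) box."""
    label: str
    score: float
    box: tuple[int, int, int, int]


def filter_boxes(detections: list[Detection], box_threshold: float = 0.3) -> list[Detection]:
    """Keep only the detections whose confidence score exceeds the threshold."""
    return [d for d in detections if d.score > box_threshold]


detections = [
    Detection("face", 0.82, (40, 30, 120, 130)),
    Detection("face", 0.41, (200, 50, 260, 120)),
    Detection("face", 0.22, (300, 10, 330, 45)),  # weak detection, likely a false positive
]

print(len(filter_boxes(detections)))       # default 0.3 keeps 2 boxes
print(len(filter_boxes(detections, 0.5)))  # stricter threshold keeps 1 box
print(len(filter_boxes(detections, 0.1)))  # more tolerant threshold keeps all 3
```

This is why raising the threshold is a quick way to get rid of spurious boxes, while lowering it helps when the concept you prompted is not detected at all.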

Click on "I want to preview" so you can test your prompt on your picture. Depending on your prompt, you will see one or several boxes appear.

Here I prompted just "Face", so I get two boxes, numbered 0 and 1. You can then select which boxes to generate masks from using the checkboxes.
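If you tick several boxes, it helps to think of the result as one mask covering all of them. Below is a minimal sketch of that idea, assuming each box already has its own binary mask stored as a list of 0/1 rows. `merge_selected_masks` is a hypothetical helper, and this is not necessarily how the extension combines masks internally.

```python
def merge_selected_masks(masks: list[list[list[int]]], selected: list[int]) -> list[list[int]]:
    """Return the pixel-wise union (logical OR) of the masks at the selected box indices."""
    if not selected:
        raise ValueError("select at least one box")
    height, width = len(masks[0]), len(masks[0][0])
    merged = [[0] * width for _ in range(height)]
    for index in selected:
        for y in range(height):
            for x in range(width):
                if masks[index][y][x]:
                    merged[y][x] = 1
    return merged


# Two tiny 3x4 masks, one per detected "Face" box (box 0 and box 1)
box0 = [[1, 1, 0, 0],
        [1, 1, 0, 0],
        [0, 0, 0, 0]]
box1 = [[0, 0, 0, 0],
        [0, 0, 1, 1],
        [0, 0, 1, 1]]

print(merge_selected_masks([box0, box1], [0]))     # only box 0 ticked
print(merge_selected_masks([box0, box1], [0, 1]))  # both boxes ticked: union of the two masks
```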

Another example using "Only female face". Please note it doesn't always work; that's why I had to change the picture :-D

I still think GroundingDINO is the best at this game at the moment, though.

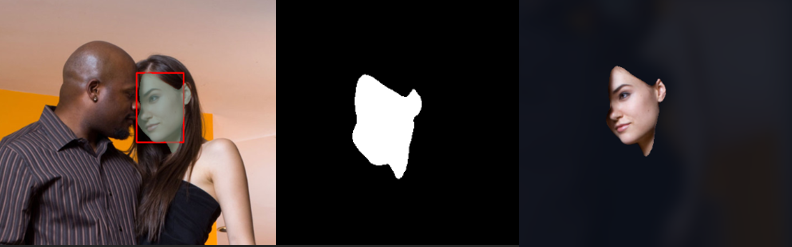

Now I run Segment Anything on box 0 using "Preview Segmentation", which outputs a grid of three possible sets of "Blend" / "Mask" / "Masked image". Here's one:

The blend is the picture overlaid with the mask and the GroundingDINO box, so you can check the detection process.

The mask is, well, the mask.

The masked image is the part of the picture kept by the mask, which could be useful for other purposes.

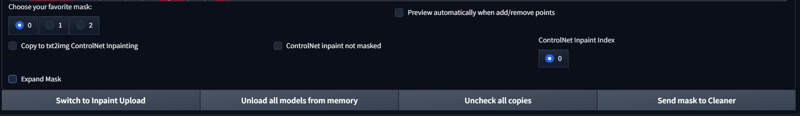
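To show how the mask relates to the other two outputs, here is a minimal sketch on a tiny grayscale image, assuming the pixels and the mask are plain lists of rows. `make_masked_image` and `make_blend` are hypothetical helpers; the highlight value of 200 and the 0.5 opacity are arbitrary choices rather than the extension's settings, and the sketch does not draw the GroundingDINO box. The masked image keeps only the pixels under the mask, while the blend tints the masked area so you can compare it with the original.

```python
def make_masked_image(image: list[list[int]], mask: list[list[int]], background: int = 0) -> list[list[int]]:
    """Keep pixels where the mask is 1 and replace everything else with a background value."""
    return [[pixel if m else background for pixel, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]


def make_blend(image: list[list[int]], mask: list[list[int]],
               highlight: int = 200, alpha: float = 0.5) -> list[list[int]]:
    """Mix a highlight value into the masked pixels so the mask is visible on top of the picture."""
    return [[round((1 - alpha) * pixel + alpha * highlight) if m else pixel
             for pixel, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]


image = [[10, 20, 30],
         [40, 50, 60]]
mask = [[0, 1, 1],
        [0, 1, 0]]

print(make_masked_image(image, mask))  # [[0, 20, 30], [0, 50, 0]]
print(make_blend(image, mask))         # [[10, 110, 115], [40, 125, 60]]
```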

There are other interesting options below that, which I'll let you discover. Basically, you can pick your favorite mask out of the 3 sets and then use the shortcuts to send it to Inpainting / ControlNet. I don't really use that yet, but it looks very valuable. See the readme.

Expand Mask is useful for re-uploading a mask and, as its name says, expanding (dilating) it.
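Dilating a mask grows it by a few pixels in every direction, which can help when the mask hugs the subject too tightly. Here is a minimal sketch of binary dilation with a square neighbourhood, assuming a 0/1 mask stored as a list of rows. `dilate_mask` and its `amount` parameter are hypothetical names; this is not the extension's Expand Mask implementation.

```python
def dilate_mask(mask: list[list[int]], amount: int = 1) -> list[list[int]]:
    """Set a pixel to 1 if any pixel within `amount` steps (square neighbourhood) is 1."""
    height, width = len(mask), len(mask[0])
    dilated = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Scan the (2*amount+1) x (2*amount+1) window, clipped to the image borders
            for ny in range(max(0, y - amount), min(height, y + amount + 1)):
                if any(mask[ny][nx] for nx in range(max(0, x - amount), min(width, x + amount + 1))):
                    dilated[y][x] = 1
                    break
    return dilated


mask = [[0, 0, 0, 0, 0],
        [0, 0, 0, 0, 0],
        [0, 0, 1, 0, 0],
        [0, 0, 0, 0, 0],
        [0, 0, 0, 0, 0]]

for row in dilate_mask(mask, amount=1):
    print(row)  # the single centre pixel grows into a 3x3 block
```

Larger `amount` values grow the mask further, at the cost of including more of the background around the subject.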

How to use it to batch generate masks for a whole dataset

I cover this subject in my other article "Training non-Face altering LoRas : Full workflow" https://civitai.com/articles/8974