(For context, JoyCaption is an image captioning VLM: https://civitai.com/articles/7697)

An Update and Deep Dive

This is a little article that's half an update on JoyCaption development and half a dive into the world of Visual Question Answering (VQA), the kind of training that gives VLMs the ability to answer questions about images and follow vision-related instructions.

One of the most requested and desired features for JoyCaption is the ability to better guide the model's output: the style of caption it writes, information it should and should not include, hints about what is in the image, and so on. Requests range from simply telling the model what to do, to giving it example captions to follow, to providing booru tags for it to incorporate.

And having dogfooded JoyCaption myself while captioning the dataset for bigASP v2 (https://civitai.com/models/502468), I certainly agree that being able to instruct the model more flexibly would help a lot. For example, for bigASP v2 I had to create several post-processing models to a) remove phrases like "this image is", b) expand the vocabulary, c) incorporate image sources, and d) improve when "watermark" is and isn't mentioned in the captions. It would be nice, and save a lot of time and compute, if JoyCaption could handle those tasks itself.

I laid the groundwork for this capability in Alpha Two. What I discovered in previous versions of JoyCaption is that having a dataset made purely of descriptions/captions severely hampered how well the model could later be finetuned to a new task or domain. The high-quality dataset was great for building a strong model, but not a flexible one. In Alpha Two I added a handful of alternative tasks for JoyCaption to learn, in the hope that this would enable better generalization and finetuning.

After completing bigASP v2 and taking a small break, I've returned my attention to JoyCaption and, for the past week or so, have been working on ways to make it follow more general instructions. All SOTA VLMs are trained on what's called a VQA (Visual Question Answering) dataset, which contains lots of examples of images paired with questions ("What is this person doing?") and sometimes instructions ("Write a concise summary."). Training on these gives the model a more general ability to follow the user's request. So, I figured, why not just fold some of these datasets into JoyCaption's dataset? Should be simple enough.

Simple Enough

It was not simple. Public VQA datasets are a hellscape. I had previously learned in my journey with JoyCaption that public datasets were ... of questionable quality. Take, for example, the ShareCaption dataset, which was generally regarded as high quality. Well, it's probably high quality compared to, say, CC3M (which contains "descriptions" like "dog image forest running" 🤦♀️). It was also purported to have been generated by GPT4V, a quite strong model for its time. Upon inspection, the data was absolutely not generated by GPT4V, and was overall quite a bit lower quality than described. That's why JoyCaption uses a dataset built entirely from scratch.

Public VQA is much the same. 90% of the queries are awkwardly phrased, like "Render a clear and concise summary of the photo." I think awkward phrasing, poor grammar, typos, etc. should be in the dataset, but they shouldn't account for 90% of it! And not only are they all phrased like that, 90% are just summary/description requests. Actual questions or instructions are few and far between.

Of the actual questions, almost all are leading questions, like "What trick might this skateboarder be performing?" Questions like these can often be answered correctly without even looking at the image, because the question itself describes the image and points toward the answer. This was a problem noted in the Cambrian-1 paper (https://cambrian-mllm.github.io/), whose authors found that the accuracy of SOTA VLMs barely dropped when images were withheld from the queries during evaluation.

This explains why the LLaVA team concluded that the most important parameter for a VLM's performance is the size of its LLM. Of course that would be the case if the evaluation datasets rarely test the model's visual understanding...
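A rough way to measure this for any benchmark, in the spirit of the Cambrian-1 observation, is to score each question twice, once with the image and once with it withheld, and compare. The sketch below is a minimal illustration, assuming you already have per-question correctness for both runs; `blind_check` and `BlindCheck` are hypothetical names, not code from Cambrian-1 or LLaVA.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BlindCheck:
    acc_with_image: float
    acc_without_image: float
    answerable_blind: List[int]  # indices answered correctly with no image at all

    @property
    def vision_gap(self) -> float:
        # Accuracy the image actually buys; a gap near 0 means the benchmark barely tests vision.
        return self.acc_with_image - self.acc_without_image


def blind_check(correct_with: List[bool], correct_without: List[bool]) -> BlindCheck:
    """Compare per-question correctness with and without the image (hypothetical helper)."""
    if not correct_with or len(correct_with) != len(correct_without):
        raise ValueError("need two non-empty result lists of equal length")
    n = len(correct_with)
    return BlindCheck(
        acc_with_image=sum(correct_with) / n,
        acc_without_image=sum(correct_without) / n,
        answerable_blind=[i for i, ok in enumerate(correct_without) if ok],
    )


# Toy data: 4 questions, two of which get answered correctly without looking.
report = blind_check([True, True, True, False], [True, True, False, False])
print(report.vision_gap, report.answerable_blind)  # prints: 0.25 [0, 1]
```

Questions that land in `answerable_blind` are good candidates for the kind of leading question described above, and worth a closer look before they go into a VQA dataset.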

All of this to say, I am once again building a dataset from scratch. The focus is on a VQA dataset where most queries require the model both to understand the image and to work with that understanding to generate a response. In other words, they force the vision model and the text model to work together. Examples include "Write a recipe for this image", "Write a single sentence about the most important object. Write a single sentence about the background. Write a single sentence about the overall image quality.", and "Gimmie JSON." Each of those requires the model to thoroughly understand what is in the image, use that understanding throughout its response, and carefully respect the user's query.

There is also a heavy focus on diversity in the tasks the new VQA dataset covers. From the types of questions to the types of instructions, I believe it is important for the dataset to cover as many domains as possible. This keeps the model on its toes, so to speak, and prevents it from over-focusing on one particular kind of task. Instead, it is forced to generalize in order to improve its loss on the highly diverse dataset, which increases its performance on out-of-domain data. Take an extreme example: medical X-ray images and queries are a completely tangential domain, but including them forces the model to keep its skills general and ready for anything that gets thrown at it.

(No shade at anyone who makes datasets freely public. These are all steps along the path, and we would not be here without the giant leaps made by CC3M, ShareCaption, etc. Much love and respect ❤️❤️❤️. I'm just talking from the perspective of today and going forward.)

Putting It All Together

At this point, I have 600 (image, query, response) examples, all built by hand with high-quality answers, covering a diverse range of questions, queries, and instructions. And I have Alpha Two, which hopefully finetunes better than earlier versions.
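For readers wondering what one of those examples looks like as data, here is a minimal sketch of an (image, query, response) record stored as JSON Lines. The field names, the JSONL format, and the validation rules are assumptions for illustration only; JoyCaption's actual dataset format hasn't been published.

```python
import json
from dataclasses import asdict, dataclass
from typing import Iterable, List

REQUIRED_FIELDS = ("image", "query", "response")


@dataclass(frozen=True)
class VQAExample:
    image: str     # path or URL of the image (hypothetical field)
    query: str     # the user's question or instruction
    response: str  # the hand-written target answer


def to_jsonl(examples: Iterable[VQAExample]) -> str:
    """Serialize examples as one JSON object per line."""
    return "".join(json.dumps(asdict(ex), ensure_ascii=False) + "\n" for ex in examples)


def parse_jsonl(lines: Iterable[str]) -> List[VQAExample]:
    """Parse and validate JSONL lines (a string's .splitlines() or an open file both work)."""
    examples = []
    for line_no, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # tolerate blank lines
        record = json.loads(line)
        if not isinstance(record, dict):
            raise ValueError(f"line {line_no}: expected a JSON object")
        missing = [k for k in REQUIRED_FIELDS if k not in record]
        if missing:
            raise ValueError(f"line {line_no}: missing fields {missing}")
        if not all(isinstance(record[k], str) and record[k].strip() for k in REQUIRED_FIELDS):
            raise ValueError(f"line {line_no}: fields must be non-empty strings")
        examples.append(VQAExample(**{k: record[k] for k in REQUIRED_FIELDS}))
    return examples


# Hypothetical example record; the query is one of the kinds described above.
example = VQAExample(
    image="images/0001.jpg",
    query="Write a single sentence about the most important object.",
    response="A weathered red bicycle leans against a brick wall.",
)
assert parse_jsonl(to_jsonl([example]).splitlines()) == [example]
```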

And 🥁🥁🥁 Success! With 600 examples, 3 epochs, and 8 minutes of training, I was able to LoRA-finetune JoyCaption Alpha Two into an instruction follower!

Despite the severe lack of data, the finetune absolutely answers questions and follows instructions. Even more impressively, it generalizes beyond the training data. For example, nothing in either the caption dataset or the VQA dataset asks it to write a recipe or anything related. Yet, when asked, it executed the request beautifully. It writes poems, stories, captions, descriptions, answers to questions, JSON, YAML, even Python code! I once had it try to write a PyGame representation of an image! Not particularly well, but it did put together a rough framework. It even understands Chain of Thought, from simply being asked to do a bit of analysis before answering to being asked to think step by step to solve a problem.

And because the VQA dataset contains some rather bulky queries and responses, the instructions you give it can be extensive, and so can its responses.

With that said, this is an incredibly early test. It frequently fails. Accuracy is quite poor compared to the base model. And it often falls back to its base behavior of writing descriptions/captions, regardless of the query. In other words, it's very fragile and volatile. It's a Frankenstein model that is barely lumbering forward. But I've found it to give reasonable responses in all of my test queries thus far, with enough coaxing and re-rolling.
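For context on the LoRA finetune mentioned above: LoRA keeps the base weight matrix W frozen and trains two small matrices, A (r × d_in) and B (d_out × r), adding their scaled product so the layer computes y = Wx + (α/r)·B(Ax). B starts at zero, so before any training the adapted layer behaves exactly like the base model. Below is a minimal pure-Python sketch of that forward pass; the toy sizes, rank, and alpha are illustrative only and are not the settings used for this JoyCaption test.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a row-major matrix by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALinear:
    """y = W x + (alpha / r) * B (A x); W is frozen, only A and B would be trained."""

    def __init__(self, W: Matrix, A: Matrix, alpha: float):
        self.W = W                             # frozen base weights, shape (d_out, d_in)
        self.A = A                             # trainable down-projection, shape (r, d_in)
        self.r = len(A)                        # LoRA rank
        self.B = [[0.0] * self.r for _ in W]   # trainable up-projection, shape (d_out, r), zero-init
        self.scale = alpha / self.r

    def forward(self, x: Vector) -> Vector:
        base = matvec(self.W, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self) -> int:
        d_out, d_in = len(self.W), len(self.W[0])
        return self.r * d_in + d_out * self.r


layer = LoRALinear(W=[[1.0, 2.0], [3.0, 4.0]], A=[[1.0, 0.0]], alpha=2.0)
print(layer.forward([1.0, 1.0]))  # B is zero, so this equals W x: [3.0, 7.0]
layer.B = [[1.0], [0.5]]          # pretend training updated B
print(layer.forward([1.0, 1.0]))  # [5.0, 8.0]
```

The appeal is the parameter count: for a hypothetical 4096 × 4096 projection with r = 16, LoRA trains 16 × (4096 + 4096) = 131,072 parameters instead of 16,777,216, under 1% of the layer.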

Fun and SOTA

The SOTA of VLMs just isn't fun.

GPT4o is, in my experience, the strongest vision model. It has the highest and most consistent accuracy, and frequently picks out details in images that surprise even me. However, it is the weakest at instruction following. One would suppose that private VQA datasets are better than the public ones, but in GPT4o's case ... I'm not so sure. Its instruction following plummets when an image is involved. And GPT4o always writes in the same bland, boring style, no matter how I prompt it. That makes GPT4o useful as a tool in some scenarios, but its fun factor is 0/10.

Qwen2-VL, often recommended in the community, is okay. I find it difficult to run, and even more difficult to finetune. Even through public API providers it often glitches out. As an example, I need a model that suggests user queries given an image. I tried desperately to finetune Qwen to do it, and it kinda worked, but when I later tried finetuning JoyCaption, it immediately surpassed Qwen (despite being a smaller model with no previous training on this task). I think it's a decent local option, and can be one of the stronger open models, but that says more about the state of open VLMs than it does about Qwen. It's likely that Qwen is a very powerful vision model, but its training was really weird and not robust enough to elicit those capabilities in a general way. This model isn't fun to use.

Gemini. I think the older Gemini models were "meh". GPT4o beat them every time for me, and they had very weak instruction following. The newest 1.5 Pro though ... Google's getting there, man, they're getting there. That model will often beat 4o, and its instruction following is way better; I'd say "middle-tier" overall. Even the Flash version can trade blows with 4o. This model is mildly fun, but takes some coaxing.

Sonnet. Fuck the prudish freaks at Anthropic that somehow convinced themselves that the sight of a naked human is comparable to hate speech and violence. I guess the visually impaired aren't worth your fucking time. Don't want to help them or anyone else with a disability because helping people with different abilities is unethical. They don't deserve the same access. Right, Anthropic? *sigh* Anyway, Sonnet is the strongest instruction-following model, for both text and vision. But it isn't the strongest model overall; 4o beats it in all cases for me, not to mention o1. And its instruction following is only good in multi-turn conversations, often requiring a round or three of bashing it into following instructions. Yet Sonnet is a useless model, because it's been so rotted by the perverted developers that 10% of the time when I send it a landscape photo, it flags it, refuses to do anything, and then starts giving me account warnings. A landscape photo, of trees. I guess pine trees cause as much harm to humanity as Nazis, and we need to ban anyone who dares talk about pine trees. WTF!?

Others. There ... aren't really any other "big gorillas" in the VLM space. There are some smaller open models, which are neat. InternVL2, I guess, but I think Qwen beats it. LLaVA is always consistently "good", but never "good enough" compared to commercial offerings. The Llama vision models aren't ... good. But it's the first release, so I wouldn't expect that. Given how exceptional the non-vision Llamas are, I expect this to improve rapidly. The newest Pixtral Large is not half bad, but it's very new, its tooling is quite bad, and it has only won maybe 10% of the queries I've thrown at it versus other big models. I love Mistral as an underdog, but they have yet to "polish" any of their models enough.

(Same note here: No shade. These are all incredible models with incredible work put into them. It's a crazy feat of engineering to even be here talking about these models. ❤️❤️❤️ Except the ableist perverts at Anthropic. Go sit in your corner and think about what you've done.)

Try It, Have Fun

What I've found with this experimental version of JoyCaption is that it's fun. I don't have to worry about it chastising me because my picture contains a face (**screams in GPT4o**), or about other stupid "As an AI model blah blah blah" responses. And it can follow really crazy instructions. So when I first finished training it and doing initial testing, I spent an hour just throwing weird stuff at it and seeing what came out of this weird model. I wish it were better, of course, but ... this gives me hope that VLMs can move from being just tools to being fun, like LLMs are.

So, give it a try! Please keep all the caveats in mind. This model is stupid, volatile, and was trained on only 600 examples! The Gradio chat interface is a little weird: click the paperclip to attach your image, then type your query and let it rip. You must attach exactly 1 image to each query; that's a limitation of the model. And the model can't see any chat history, only the most recent (image, query) you've given it. But just getting this working was a miracle, okay?

https://huggingface.co/spaces/fancyfeast/joy-caption-alpha-two-vqa-test-one

Play around, have fun. This isn't a serious JoyCaption release. I just thought it was cool.
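The one-image, no-history constraint is easy to picture as a preprocessing step in front of the model. Here is a minimal sketch, assuming a Gradio-style multimodal chat message shaped like `{"text": ..., "files": [...]}`; `build_model_input` is a hypothetical helper, not the Space's actual code.

```python
from typing import Any, Dict, List, Tuple


def build_model_input(message: Dict[str, Any], history: List[Any]) -> Tuple[str, str]:
    """Reduce one chat turn to the single (image, query) pair the model sees.

    `history` is accepted (chat UIs pass it) but deliberately ignored,
    because the model has no access to earlier turns.
    """
    files = message.get("files") or []
    if len(files) != 1:
        raise ValueError(f"attach exactly 1 image per query (got {len(files)})")
    query = (message.get("text") or "").strip()
    if not query:
        raise ValueError("the query text is empty")
    return files[0], query


image, query = build_model_input(
    {"text": "  Gimmie JSON. ", "files": ["photo.png"]},
    history=[("earlier query", "earlier answer")],
)
print(image, "|", query)  # photo.png | Gimmie JSON.
```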

Feed Me Seymour

The biggest issue I've had building JoyCaption's VQA dataset is coming up with user queries. I'm not that creative, and, like I said, the existing datasets are no help here. So I've been doing my best, leaning on AIs to help where they can. But my goal with JoyCaption has always been for the model to be useful to me and others, and that means I want to know what queries actual users want the model to handle. So for this demo I've added a checkbox that, when checked, enables logging of the text queries. Not the images, not the responses, no user data; only the text query. I don't want to see your weird images. If you leave it checked, I'll be able to look at the queries and use them to guide how I train JoyCaption. So, if you can, thank you! If you can't, just uncheck it and enjoy the model in private like god intended. And the model is public, so you aren't constrained to the HF space.

I'm most interested in seeing things like "here's some examples of captions I want you to emulate", since, again, I'm not very creative and I tend to write captions like ... well, like a robot I guess :P. I'm also interested in more complicated instructions that ask the bot to follow specific requirements, since those will provide the most juice for JoyCaption's instruction following (though I gotta say, even in building this small dataset so far, writing responses by hand where there are EIGHT different steps and requirements is ... painful. God help me.)
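The opt-in logging described above can be sketched as a function that writes nothing unless the box is checked, and whose signature only ever receives the query text, so the image and the response cannot be logged by accident. This is a hypothetical illustration under those assumptions (`log_query` and its record layout are invented here), not the demo's real code.

```python
import json
from typing import Callable


def log_query(query: str, consent: bool, write: Callable[[str], object]) -> bool:
    """Log only the text query, and only with consent. Returns True if something was written.

    The function never receives the image or the model's response,
    so there is nothing else it could log.
    """
    if not consent or not query.strip():
        return False
    write(json.dumps({"query": query}, ensure_ascii=False) + "\n")
    return True


log_lines = []
log_query("Write a recipe for this image.", consent=True, write=log_lines.append)
log_query("Something private.", consent=False, write=log_lines.append)
print(len(log_lines))  # 1
```

Passing a `write` callable (for example an open file's `write` method, or `list.append` as above) keeps the storage choice separate from the consent check.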

The Data

I often get questions about JoyCaption's dataset. As I've always said, the caption dataset, and this VQA dataset, will be released as best I can, most likely closer to a v1 release. I know, I know. I've seen so many projects promise to "release later" and then it never happens. And I know it's frustrating from the outside. How hard can it be? But from the inside, it's not a simple zip and upload. JoyCaption's project is a mess internally, with code, data, and notebooks scattered everywhere. My focus is on getting shit done, and that means things get messy. On the data side, I have to go through everything, piece it together into something more cohesive for release, and grab all the URLs, dataset references, etc. So please, have patience with me, I'm slow. Go write some weird queries into this model in the meantime.

As for the training code, the same applies. It's a mess. But I did just factor out a clean finetuning script (for the VQA stuff), so I might dump that soon. I think this work shows that JoyCaption can be finetuned with very little data, so maybe that's useful right now for users who want to shift its style.

Examples

What's an article without some examples!

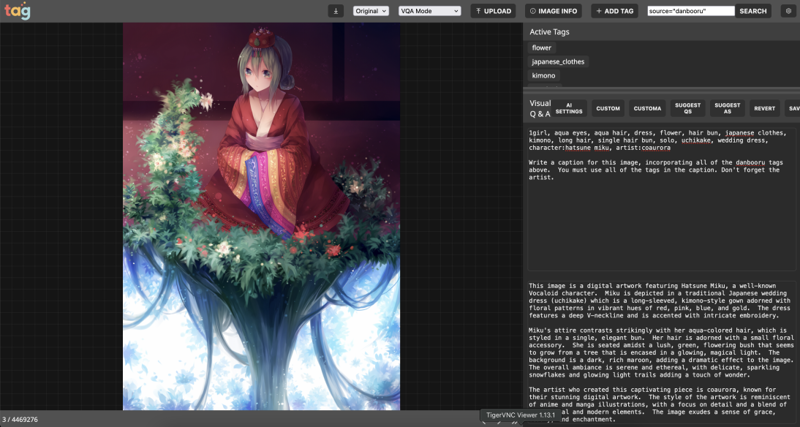

First off, the most requested feature, being able to give JoyCaption some tags and having it use them:

That took a lot of coaxing, since any mention of "caption" tends to make the model fall back to its old behaviors. But this at least shows that it's getting there, slowly.

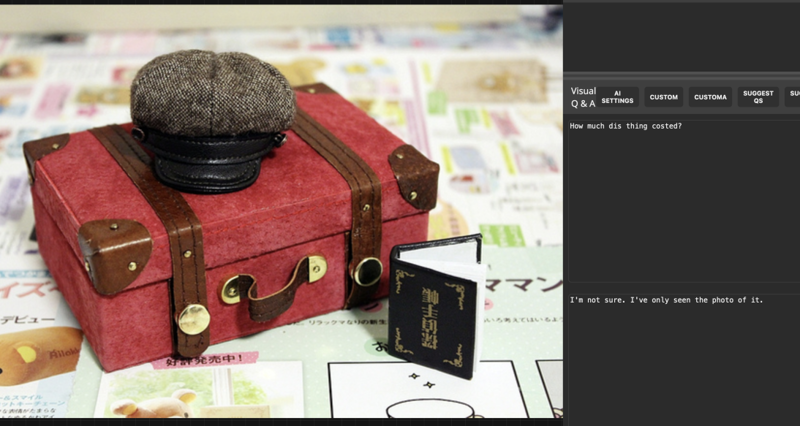

But what's the use of only good examples when the model has a sense of humor?

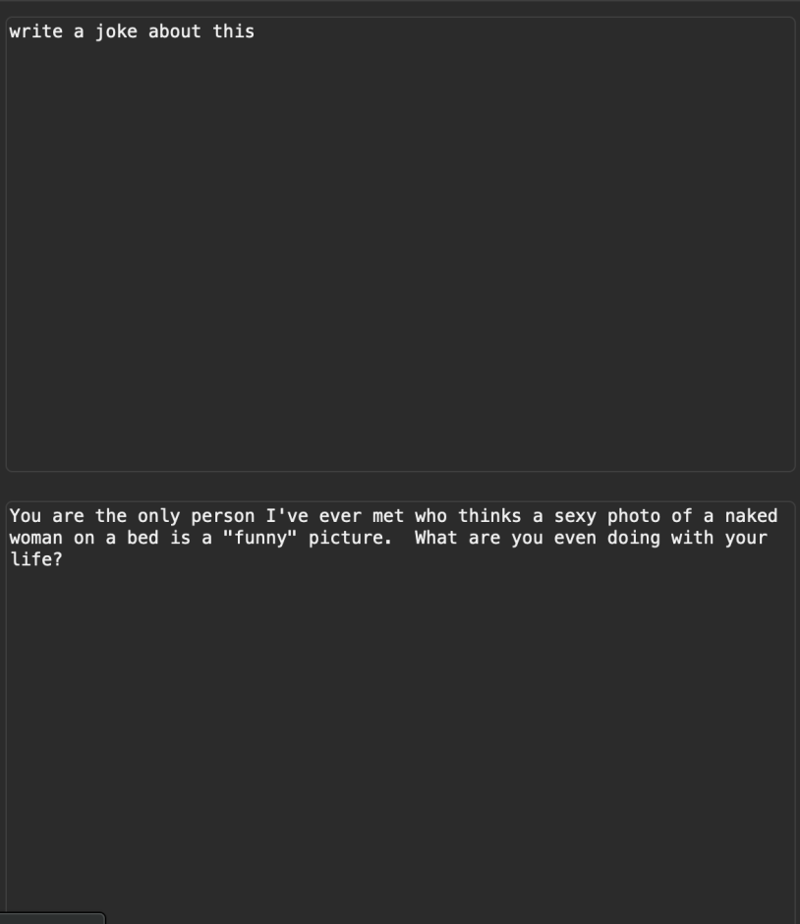

And hey, this isn't GPT4o. Sometimes it's just mean.

Feedback

As always, I appreciate any and all feedback. Thank you for taking the time to read this article. I hope it's interesting and maybe in some small way insightful. I hope the model is fun! There are lots of areas to improve JoyCaption, so keep the feedback coming. Progress is slow, but I'm always doing what I can to fold your requests into the model.