Hi there, I wanted to share my settings for most of my LoRas on the site. For posterity and stuff.

Steelrain's LoRa Training Notes (Guide?)

I mostly do art styles nowadays, but this should basically work for characters and concepts, too.

Disclaimer: These Neural Networks (I hate the term "artificial intelligence", because there's no intelligence found here, just esoterically produced programs - notably, dumb programs) are basically shaped in some esoteric black magic. I did try to understand how, but I gave up halfway or so, and I only understand the theory partially. So I don't claim to understand what I'm actually doing, but thankfully it looks like it's working, anyway.

Through dumb luck and by plagiarizing other people's methods, mostly, I suspect. People with better brains than me can explain the theory behind it - go look at Wikipedia or something.

It's entirely possible I'm running shitty settings, too, and everything I write here is crap and leads to theoretically terrible results. Luckily, the bar is quite low for anime, so maybe just no one noticed so far. YMMV.

I'd definitely encourage you to google LoRa training and read some other guides as well to gain some more perspective on the matter.

Preface

My model of choice is Pony V6, but this should similarly work for other SD/XL-Based models at least.

Please note that you don't really need too much quality for Anime LoRas.

So, what are the steps in LoRa creation?

Assembling Dataset

Cleaning Dataset

Tag Dataset

Run Training Script

Test Results

Pick release candidate

I will be linking the resources and tools I use - in the hope that you'll be able to figure installation and stuff out yourself. They mostly come with installation instructions. Good luck!

I run this stuff locally on a GeForce 4070 Ti Super @16 GB Vram. See the bottom for the CivitAI onsite trainer.

Assembling Dataset

The first step is searching for images of your target - I usually try to go for 100, but a lot less can work, too, with more repetitions during training, especially for rarer characters or something. The minimum seems to be around 20 images or so. This also applies to sub-concepts, like characters or clothing within an artist style.

You generally want a maximum of different themes, actions, expressions or angles in your dataset - pay attention to distinctive features especially. For example, for both art styles and characters, I want at least 30% to be focused on faces. You also want full body shots, backgrounds, etc.

The general preference is color pictures > monochrome pictures > manga pages, with images at least 1024 px in one dimension if you have them. (If they are smaller, you can just roll with them, though - no need to upscale. In fact you probably shouldn't, because it may lead to bad results if done poorly.) The better the quality, the better.

Generally, LoRa training works with all of these things, though, and even with blurry or low res source images, especially for characters. It's some kinda arcane dark magic stuff.

For art styles, unless the artist did fullcolor artworks, I'm usually ripping whole manga pages off sites like the one with the sad Panda, *boorus or Pixiv.

For characters, I usually start with Gelbooru or Pixiv, do a Google Image search, use sites like https://fancaps.net/ or https://huggingface.co/BangumiBase for screencaps/crops, or take croppings with Snipping Tool straight from online manga readers.

You generally want to avoid AI-generated content in the dataset, because it leads to some funky stuff like a repetition of details (think Deep Dream) and other errors. What you can do to improve the results is denoise your dataset images, which should mitigate this. Denoising also works if you suspect "poisoning" or tampering with stuff like Nightshade, because these anti-AI-programs usually work by tampering with the invisible background noise.

In extreme cases, Denoising (for example with paint.net -> effects -> noise) can also allow you to do the "Hail Mary" approach to train from a single image - make a quick and dirty LoRa from your 1 image, generate a lot of images with it, pick the couple ones that are decent - then denoise those images - and then use them as the new dataset to train a "proper" LoRa once you have enough.

Cleaning Dataset

To improve the quality of the data and output, I take the time and clean some undesirable stuff from my base images.

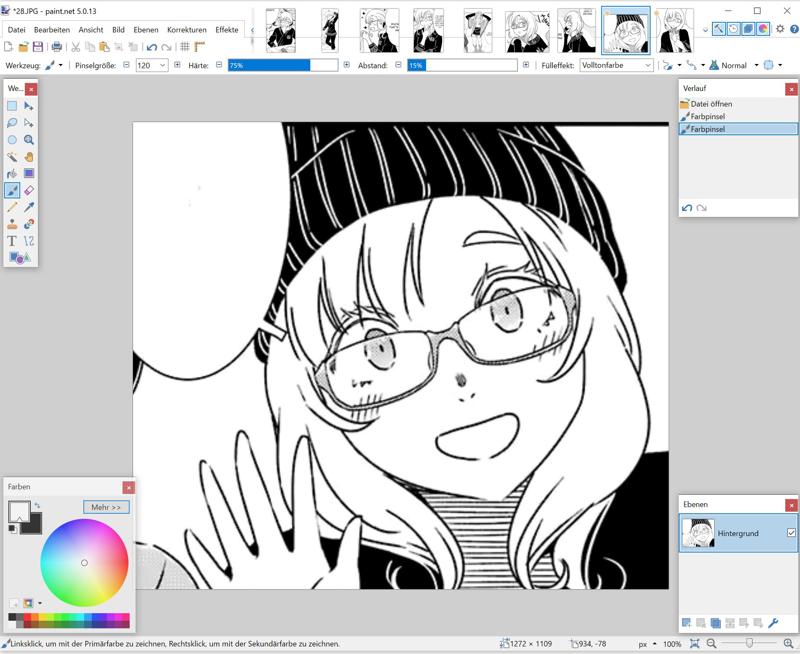

For characters, you want to crop screenshots so that not too much background remains and no other characters are visible. Only spot clean larger disturbances, though - like whiting out faces or erasing text - and don't clean the whole background. It messes up the noise level or something and you can get weird artifacts otherwise.

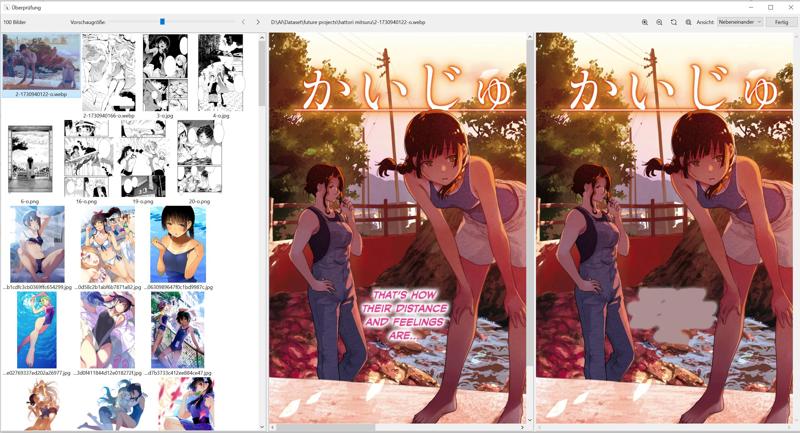

It also helps to harmonize the file types to a single one to avoid issues later on with 2 files having the same name but different extensions, messing up tagging. Some file types like .webp aren't trainable at all, and will just be ignored.
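A quick way to catch both problems before tagging is to scan the file names. This is only a sketch: the `TRAINABLE` set is my assumption about which extensions your trainer accepts, and `audit_filenames` is a made-up helper name, so adjust both to your setup.

```python
from collections import defaultdict
from pathlib import PurePath

# Assumption: extensions the trainer will actually pick up.
TRAINABLE = {".png", ".jpg", ".jpeg"}


def audit_filenames(filenames):
    """Return (collisions, skipped) for a list of dataset file names.

    collisions: stem -> trainable files sharing that stem (they would
                all map to the same tag file)
    skipped:    non-.txt files whose extension is not in TRAINABLE
    """
    by_stem = defaultdict(list)
    skipped = []
    for name in sorted(filenames):
        path = PurePath(name)
        ext = path.suffix.lower()
        if ext in TRAINABLE:
            by_stem[path.stem].append(name)
        elif ext != ".txt":
            skipped.append(name)
    collisions = {stem: names for stem, names in by_stem.items() if len(names) > 1}
    return collisions, skipped


# Usage on a real folder (path is a placeholder):
# import os
# collisions, skipped = audit_filenames(os.listdir("my_dataset"))
```

Anything in `collisions` should be renamed or re-encoded, and anything in `skipped` converted, before you run the tagger.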

For simple cropping or batch rename/reencoding, I usually like IrfanView.

The general rule is that text is the enemy, so eliminate speech bubbles and so on as best as you can.

Either manually through something like paint.net for smaller datasets:

Or take out the nuclear option for manga panels and so on with a cleaner - I use this AI-powered tool to good effect to clean 100+ manga pages in one go.

I fiddled around with the settings to make them hyper aggressive, and now it takes out most text:

here's the profile: https://files.catbox.moe/2mjid7.conf

it goes in %AppData%\pcleaner\profiles

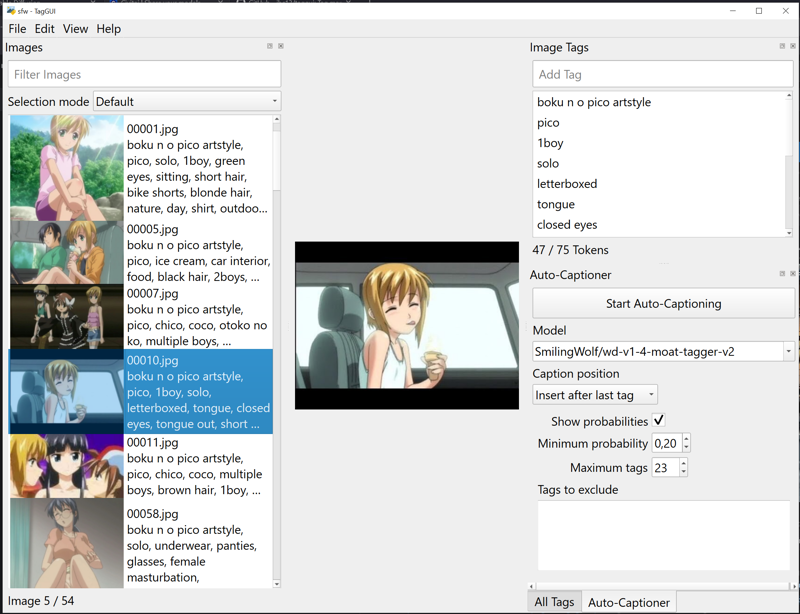

Tagging Dataset

To properly train a text-to-image model, you need to tell it what's shown in your dataset. Then it can train on the differences between what it knows under that tag and the result shown in the data, and learn the remainder. That's why you want to tag your dataset with effectively 10-15 or so tags at least (I often use 20+ because 5-10 are wasted on negative stuff like "monochrome, greyscale, comic, speech bubble, empty speech bubble, censored, bar censor, mosaic" etc. for manga pages), plus optionally a trigger that makes the remainder (the style, or character, or concept) identifiable - which I'd recommend because it's usually easier to use.

Tags are saved in text files with the same name as the file.
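To sanity-check this pairing after tagging, something like the following can help. It's a sketch under two assumptions of mine: images are .png/.jpg/.jpeg, and tags inside the text file are comma-separated (booru style). The function names are made up for illustration.

```python
from pathlib import PurePath

# Assumption: image extensions used in the dataset.
IMAGE_EXTS = {".png", ".jpg", ".jpeg"}


def missing_captions(filenames):
    """Return image files that have no tag file with the same name."""
    names = set(filenames)
    return sorted(
        name
        for name in names
        if PurePath(name).suffix.lower() in IMAGE_EXTS
        and PurePath(name).stem + ".txt" not in names
    )


def count_tags(caption):
    """Count comma-separated tags in one tag file's text."""
    return len([tag for tag in caption.split(",") if tag.strip()])


# Usage on a real folder (path is a placeholder):
# import os
# print(missing_captions(os.listdir("my_dataset")))
```

`count_tags` is handy for spotting files that ended up well below the 10-15 tags mentioned above.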

I usually just use the following:

https://github.com/jhc13/taggui

and here's my choice of model and settings:

The additional tags field is where you want to put a trigger - like by [artist] or [character name] or something. This usually makes the LoRa easier to use for gens later and lets the user fine-tune intensities and such.
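If the trigger didn't make it into the tag files via the tagger, it can be prepended afterwards. A minimal sketch, assuming comma-separated tags and .txt tag files; `add_trigger`, `apply_to_folder` and the example trigger are hypothetical names, not part of any tool mentioned here.

```python
from pathlib import Path


def add_trigger(caption, trigger):
    """Put `trigger` first in a comma-separated tag string, without duplicating it."""
    tags = [tag.strip() for tag in caption.split(",") if tag.strip()]
    tags = [tag for tag in tags if tag != trigger]
    return ", ".join([trigger] + tags)


def apply_to_folder(folder, trigger):
    """Rewrite every .txt tag file in `folder` so it starts with `trigger`."""
    for tag_file in Path(folder).glob("*.txt"):
        caption = tag_file.read_text(encoding="utf-8")
        tag_file.write_text(add_trigger(caption, trigger), encoding="utf-8")


# Example (placeholder path and trigger):
# apply_to_folder("my_dataset", "by some_artist")
```

Work on a copy of the dataset the first time, since `apply_to_folder` overwrites the tag files in place.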

You can also manually write tag files, or use one of the 20 or so other image tagging tools.

Check https://danbooru.donmai.us/wiki_pages/tag_groups to get ideas for which tags are out there.

Of note here is that there are a couple of peculiarities I found with tags:

What I'd call "latent" tags, which should be in the model (like, part of the booru tagspace) but aren't really expressed properly in gens, are quite powerful if you manually tag them - for example "snake hair" worked super well for a Medusa character for me, and the wtf tag 'clitoral penetration' actually stabilized results for, well, just that. Seems to be some reinforcement effect.

either manually append them to the tag file where appropriate, or add them to the additional tags

Tagging adjacent concepts that are related - like two different variations of a concept - and then combining them seems to yield greater stability for prompts to produce the desired results - see the OTK LoRA for an example of this.

The same is true if this is spread over multiple LoRas, so it can produce greater compatibility if you tag niche stuff in your artist Loras - for example, I tag different spanking concepts in my spanking artist LoRas the same as in my spanking concept LoRas

Some people say that tagging for negative prompts - like low resolution, sketch, low quality, etc - can help with gens later. Honestly I've never bothered with it much and never had much of a problem with it. It might be worth it for a persistent twitter handle or something.

Training Scripts

Right, with the preparations done, let's see about the actual training.

I use this training script with a GUI:

https://github.com/derrian-distro/LoRA_Easy_Training_Scripts

There's a lot of fine-tuning you can do here apparently, but with a couple settings potentially ruining everything.

So here's my setting file as a starting point.

https://files.catbox.moe/04u2nw.toml

First off, under "Base Model", point it to the checkpoint you want to train on.

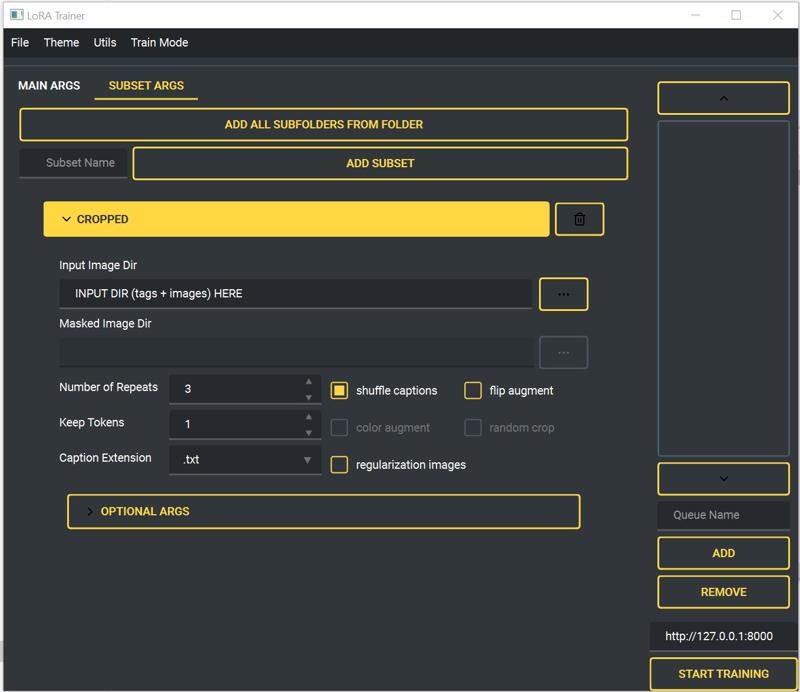

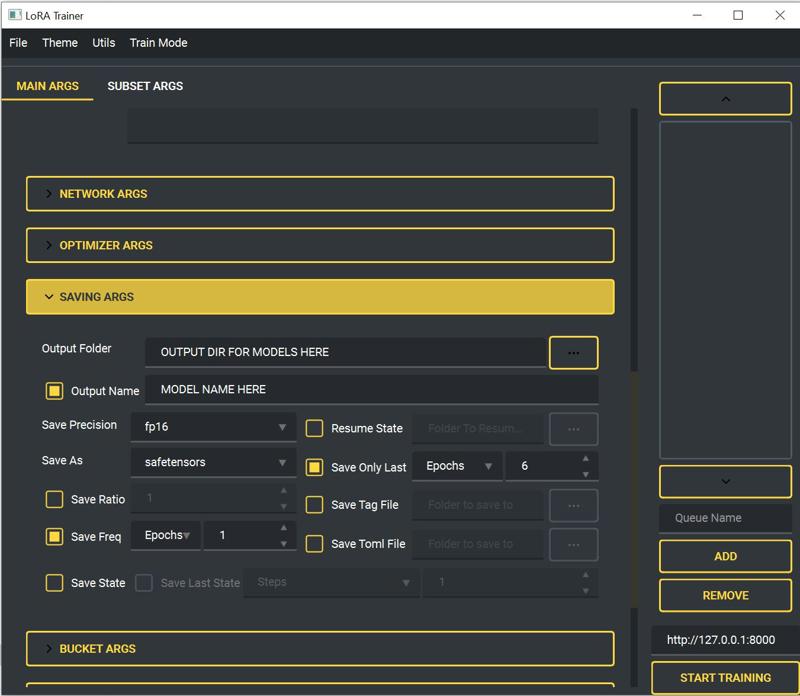

Then just plug in the source (dataset + tag file directory) under the "subset args" tab

and under "Main Args", locate the "Saving args" and define the directory to save to and the model name...

Then hit run and wait like 1-2 hours. You can also type in a name and add it to a queue to run a whole batch of models.

So, what's it do?

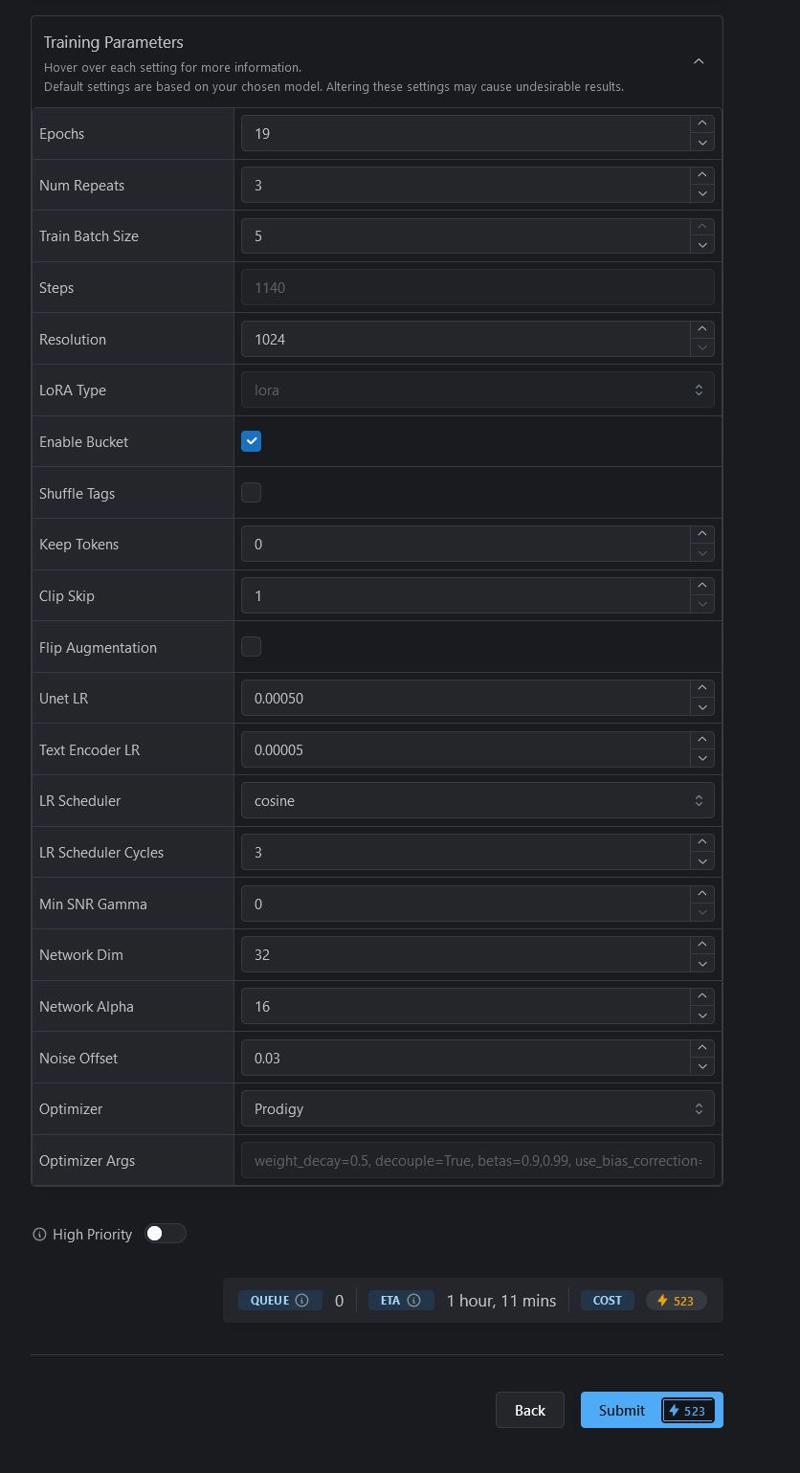

The settings:

Resolution: 1024x1024

Gradient Checkpointing: Active

Batch Size: 2

Epochs: 19

Number of Repeats: 3

Optimizer: Prodigy

Scheduler: Cosine

Learning Rate: 1 (Should be way lower for other Optimizers - Prodigy adjusts this automatically)

Network Dimension: 32

Network Alpha: 16

So, this training script trains a network of [Network Dimension] at [Network Alpha] over the dataset for [Epochs] epochs, and repeats each image [Number of Repeats] times in each epoch. It looks at [Batch Size] images at once. This gives us [Number of Images] x [Epochs] x [Repeats], divided by [Batch Size], steps.
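As a worked example of that formula, here's the arithmetic for the settings listed above with a 100-image dataset (100 being my usual target, not a requirement). The function name is made up, and rounding up a partial last batch is my assumption.

```python
import math


def total_steps(num_images, epochs, repeats, batch_size):
    """[Number of Images] x [Epochs] x [Repeats], divided by [Batch Size]."""
    # Assumption: a partial last batch still counts as a step, hence ceil.
    return math.ceil(num_images * epochs * repeats / batch_size)


print(total_steps(num_images=100, epochs=19, repeats=3, batch_size=2))  # 2850
```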

The Network Dimension is 32, which determines the precision and fine detail the LoRa can learn. 32 is kinda overkill, actually. You'd generally be fine with 8-16, especially for not so complex characters and styles. Since this mostly only affects the file size in the end - should be like 225-250 MB or so, vs. around 50 at 8 - I don't really mind the overkill, though. Refurbished Cloud HDDs are super cheap, and we're not on dial-up anymore.
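Why the file size tracks the dimension: in the standard LoRA setup, each adapted layer gets two small matrices, one of shape dimension x inputs and one of shape outputs x dimension, so the parameter count grows linearly with the dimension. A rough sketch follows; the 1280 x 1280 layer shape is a made-up example, not taken from any particular model.

```python
def lora_params(in_features, out_features, rank):
    """Parameters added to one linear layer: (rank x in) plus (out x rank)."""
    return rank * (in_features + out_features)


LAYER = (1280, 1280)  # hypothetical layer shape, for illustration only
for rank in (8, 16, 32):
    print(rank, lora_params(*LAYER, rank))
```

Going from 8 to 32 quadruples the count per layer, which is in the same ballpark as the file sizes quoted above.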

If you have a small dataset, up the number of repeats so you arrive at around 1500 steps before dividing by the batches.
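Taking the 1500 figure literally as images x epochs x repeats (that reading is my assumption), the number of repeats for a small dataset can be estimated like this. `repeats_for_target` is a hypothetical helper.

```python
import math


def repeats_for_target(num_images, epochs, target_steps=1500):
    """Smallest repeat count that reaches target_steps before dividing by batch size."""
    return max(1, math.ceil(target_steps / (num_images * epochs)))


# A 20-image dataset at 19 epochs:
print(repeats_for_target(num_images=20, epochs=19))  # 4
```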

Batch size can be upped if you have enough VRam. I can run 4 if I don't want to do anything else with my PC... reduce to 1 if your GPU does not have enough.

The Network Alpha is a dampener on the learning speed. As far as I understand it, the learned weights get scaled by alpha divided by the network dimension, so 16 at dimension 32 halves their effect. Basically, it hones the output and prevents us from frying the LoRa at like 2000-3000 steps, although generally half of that is recommended. I think and hope. You usually want this at around half of the network dimension, or the same as the network dimension to ignore it altogether, which works fine, too - just be wary of the number of steps.
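In the LoRA implementations I'm aware of, alpha enters as a plain multiplier of alpha / dimension on the learned update. Under that assumption, the two choices mentioned above work out as follows (`lora_scale` is just an illustrative name):

```python
def lora_scale(network_alpha, network_dim):
    """Multiplier applied to the LoRA update: alpha / dimension."""
    return network_alpha / network_dim


print(lora_scale(16, 32))  # 0.5 -> alpha at half the dimension dampens by half
print(lora_scale(32, 32))  # 1.0 -> alpha equal to the dimension has no effect
```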

The Optimizer is like some sort of learning method - Prodigy estimates a suitable learning rate by itself during training, and the Cosine scheduler puts it on a descending curve, only making smaller changes towards the later epochs, if I understand correctly. Basically, no need to worry about learning rates, as they are adjusted over the course of training. This helps prevent overbaking the LoRa as well, and makes it quite forgiving.

And then, finally, these settings save the last 6 epochs or so, which leads us to...

Testing Results

So, as you might have realized, I'm saving a couple epochs here. This is to test when the LoRa is baked enough - the point at which it settles on an output and yields consistent results, and where further training does nothing to improve it any more.

To determine this, I run an X/Y/Z plots script with multiple versions of the LoRa via Prompt S/R:

I usually do this at 0.8 strength, so the LoRa stays consistent even when used at lower weights, e.g. when combined with multiple other LoRas to prevent frying the output.
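For the Prompt S/R field, the first entry is the text searched for in the prompt and each following entry replaces it in turn, so the list of saved epochs can be generated instead of typed. This is a sketch: the `name-000014` file naming and the `mylora` name are assumptions, so check what your training script actually wrote to the output folder.

```python
def prompt_sr_values(lora_name, epochs, width=6):
    """Build a comma-separated Prompt S/R list, one entry per saved epoch."""
    # Assumption: epoch files are named like "<name>-000014".
    return ", ".join(f"{lora_name}-{epoch:0{width}d}" for epoch in epochs)


# Last 6 of 19 epochs, matching the settings above:
print(prompt_sr_values("mylora", range(14, 20)))
```

The prompt itself then needs to contain the first entry, e.g. `<lora:mylora-000014:0.8>`, for the replacement to have something to match.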

In this example, the results stabilize from version 18 to the final one (#19).

To judge the quality of the LoRa, especially for art styles, I also like to generate a couple images with the tags from the input dataset and see if the results look close enough to the source. If not, then sometimes a LoRa gets scrapped and I check for mistakes in the preparation or fiddle around with tags and settings and rerun training.

Release Candidate

I generally pick a slightly overbaked version, so 1 version after the results stabilize - and then I throw it out here eventually.

CivitAI Onsite Trainer

The settings mentioned above also work for the onsite trainer. Last I checked, you could train a LoRa like these for around 500 Buzz already.

pick what category you want to train and enter a name (you can edit this when published)

throw your dataset in the trainer

auto-tag it with the tool for 10-20 tags

prepend your Trigger Tag

copy the tags of 1-3 images

select the model you want to train on

set the Epochs to your target

the Repeats are pre-set to fit the dataset size; I like to raise this to at least 3

for small datasets, raise it to however much you can without paying extra buzz

just leave the batch size at 5 or whatever, it costs extra buzz otherwise

set Network Dimension/Alpha

set sample prompts

use the copied tags, add "score_9, score_8_up, score_7_up, score_6_up, source anime, anime coloring" for Pony

I think the seed should be constant, so you can eventually pick a release candidate

kiss goodbye to the buzz and kick back for an hour and a half or so, then check the result

pick your release candidate and publish - you may want to save your last couple epochs and samples first, though, just to be safe, because somehow Civitai's release process is buggy and may fail initially - check models/drafts/training in your profile first before reuploading, though.
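The sample prompts from the steps above can be put together like this. It's a small sketch; the tag order (Pony tags first, then trigger, then the copied tags) is my own choice, and the example trigger and tags are placeholders.

```python
# Pony quality tags as listed in the steps above.
PONY_PREFIX = "score_9, score_8_up, score_7_up, score_6_up, source anime, anime coloring"


def sample_prompt(trigger, copied_tags, prefix=PONY_PREFIX):
    """Join the Pony tags, the trigger and the tags copied from one dataset image."""
    tags = [tag.strip() for tag in copied_tags.split(",") if tag.strip()]
    tags = [tag for tag in tags if tag != trigger]  # avoid a doubled trigger
    return ", ".join([prefix, trigger] + tags)


print(sample_prompt("by some_artist", "by some_artist, 1girl, solo, smile"))
```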

PS: If you feel tempted to donate your hard-earned Buzz to me: don't. Donate it to people who can actually use it instead. I didn't spend the better part of a vacation budget on a gaming rig to still have to gen onsite, after all. So although I theoretically appreciate the gesture, it's just that, a gesture - it's of limited (read: none, really) use to me, anyway.