Why?

NoobXL v0.65S is really good, but its 3d side is heavily lacking in artists, types, and differentiating tags; danbooru and e621 are both missing many of those, but my database is not. NoobXL is very, very good, mind you, but both the 3d and the realism are lacking enough that I really wanted to add /SOMETHING/ of substance, hence a REASON TO EXPERIMENT!!!

Even though I know this is going to basically be outdated by this time next week (I'll probably train another), I think the experiment was highly potent at teaching myself, and inspiring me to improve my local tools.

I wanted about 10000 images or so for this style, and I wanted them from unique places to introduce new things.

This includes 3d objects, people, cars, locations, and so on. Mostly 3d people though.

puts on lab coat for data sourcing

Alright, the sourcing for this one is pretty unique, because I wanted to have a large array of 3d and realistic material behind it, and I'm certain that NoobXL was already fully saturated with everything from danbooru and e621.

To give my full local database a run-through, I decided to query my indexes and gave it the full picker form, using a method similar to cheesechaser's but not quite identical.

Video data!?!?!?! WHAT?!?!!

I picked out all the 3d videos that I had, and piled them into a folder automatically. First I ran my video slicer on them and found it to be abysmally slow, so I went through and found a couple of things running on CPU instead of GPU and then fixed that. I then cranked up the knob and broke it off using 32 local threads after jamming everything into an asynchronous database.

Afterward, I ran the slicer script on them in multithreaded mode and bluescreened my computer.

I noticed there were a couple of other issues with the underlying structure, so I added about a billion try/catches, RAM checks, and a couple of failsafes, and then I stopped getting blue screens. After that I managed to snag roughly 50 images per video, depending on the differences within each video and so on.
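As a rough sketch of what that kind of failsafe can look like (this is not the actual slicer code), the snippet below runs a per-video function on a thread pool and catches exceptions per video, so one bad file doesn't take the whole batch down. `slice_all`, `slice_video`, and `fake_slicer` are hypothetical names made up for the example, and RAM checks are left out because they would need a third-party package. A try/except only guards against Python-level errors, not a driver or OS crash.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def slice_all(videos, slice_video, max_workers=32):
    """Run slice_video on every video; one failure does not stop the others.

    slice_video is a hypothetical callable: video path -> list of frames.
    Returns (results, failures), both keyed by video path.
    """
    results, failures = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(slice_video, video): video for video in videos}
        for future in as_completed(futures):
            video = futures[future]
            try:
                results[video] = future.result()
            except Exception as exc:
                # Record the error and keep going with the remaining videos.
                failures[video] = repr(exc)
    return results, failures


def fake_slicer(path):
    """Stand-in slicer for the demo: fails on '.broken' files."""
    if path.endswith(".broken"):
        raise ValueError("cannot decode " + path)
    return [f"{path}#frame{i}" for i in range(3)]


results, failures = slice_all(["a.mp4", "b.broken", "c.mp4"], fake_slicer, max_workers=4)
print(sorted(results), sorted(failures))
```

Collecting failures in a dict instead of raising makes it easy to re-run just the videos that broke.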

When I finally got the slicer working, I ran it for a few hours and grabbed about 5000 images from various videos using difference checks. Most of them were bad, as apparently I forgot to enable people detection (whoops), so I ran detection afterward to figure out which ones actually had people. Then I autotagged them all using the Simulacrum tagset and the newly in-testing Rule34 auto-tagger (I've been working on this), with an e621 tagger devoted to specifics.

There are a lot of minimal gradients from point A to B in these images, which provides a large array of similar images with minor differentiations, and it seems to have introduced some very interesting changes and fixes to NoobXL.

Interpolation

When checking image differences, you have to take into account how similar the frames are. If the gradients are too different from point A to B, you end up with an entirely different picture, a different angle, and so on. You can't use the video's tags themselves. You can grab all of the tags for identified characters if you want, but I chose the alternative approach: remove the faces.
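A difference check like this can be sketched in a few lines. This is a minimal illustration, not the slicer's real logic: frames are flat lists of grayscale values to keep it dependency-free, and the `min_diff` / `max_diff` thresholds are made-up numbers. Frames that barely changed get skipped, and frames that changed too much get flagged as a probable scene cut and become the new reference.

```python
def mean_abs_diff(a, b):
    """Mean absolute difference between two equal-length grayscale frames (0-255)."""
    if len(a) != len(b):
        raise ValueError("frames must have the same size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def pick_frames(frames, min_diff=4.0, max_diff=40.0):
    """Select frame indices by comparing each frame to the last kept one.

    Returns (kept, cuts): kept holds the indices worth saving, cuts holds the
    subset of kept indices where the jump was large enough to look like a new scene.
    """
    kept, cuts = [], []
    last = None
    for i, frame in enumerate(frames):
        if last is None:
            kept.append(i)  # always keep the first frame
            last = frame
            continue
        diff = mean_abs_diff(last, frame)
        if diff < min_diff:
            continue  # near-duplicate of the last kept frame
        if diff > max_diff:
            cuts.append(i)  # too different: probably another shot or angle
        kept.append(i)
        last = frame
    return kept, cuts


# Tiny 4-pixel "frames": a still, a near-duplicate, a small move, a cut, a small move.
frames = [[10] * 4, [11] * 4, [20] * 4, [200] * 4, [205] * 4]
kept, cuts = pick_frames(frames)
print(kept, cuts)
```

Tracking the cuts separately matters for tagging, since tags inferred for one run of similar frames shouldn't carry over across a cut.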

So I used 3 kinship images, the base was blank white face, second was minimal blurry face, third was mostly there blurry face.

The outcome was quite interesting, but it seems to produce extra heads every so often. That usually means it needs more training, as it's currently on epoch 15 out of 37 (based on the math), which should be only about 440k samples or so. Compared to the number of samples in NoobXL this is almost nothing.
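For a sense of scale, here is the arithmetic spelled out. The text doesn't say whether the 440k figure covers the whole run or only the first 15 epochs, so this sketch assumes it is the total for all 37 epochs, and that 64 is the effective batch size. Both are assumptions, not confirmed numbers.

```python
import math

TOTAL_SAMPLES = 440_000  # "about 440k samples", read here as the full 37-epoch run (assumption)
TOTAL_EPOCHS = 37
CURRENT_EPOCH = 15
BATCH_SIZE = 64  # assumed to be the effective batch size

samples_per_epoch = TOTAL_SAMPLES / TOTAL_EPOCHS
steps_per_epoch = math.ceil(samples_per_epoch / BATCH_SIZE)
samples_so_far = samples_per_epoch * CURRENT_EPOCH

print(round(samples_per_epoch))  # 11892
print(steps_per_epoch)           # 186
print(round(samples_so_far))     # 178378
```

Under those assumptions each epoch is roughly 11.9k samples, which sits in the same ballpark as the "about 10000 images" target.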

Training time! LR, Alpha, and Reasons

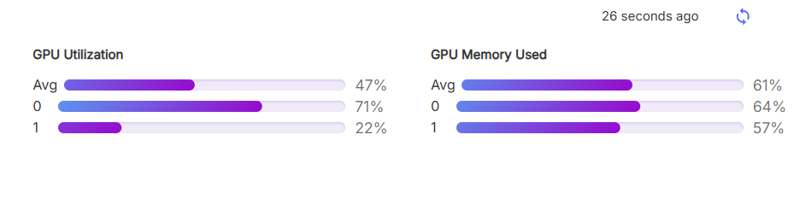

2x A100 SXM rented on Runpod

UNET LR 0.00001

TE LR 0.000001

Dims 20

Alpha 9

Batch Size: 64
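For anyone reproducing this outside the GUI, the settings above map onto sd-scripts command-line options roughly as follows. The flag names are the ones I understand the LoRA training scripts to accept, so treat them as an assumption and check them against `--help` on the branch you're using. Whether 64 is per GPU or total across both A100s isn't stated here either.

```python
# Settings from the list above, keyed by the (assumed) sd-scripts option names.
settings = {
    "network_dim": 20,
    "network_alpha": 9,
    "unet_lr": 1e-5,
    "text_encoder_lr": 1e-6,
    "train_batch_size": 64,
}


def to_cli_args(options):
    """Turn a dict of options into a flat ['--key', 'value', ...] argument list."""
    args = []
    for key, value in options.items():
        args += [f"--{key}", str(value)]
    return args


args = to_cli_args(settings)
print(" ".join(args))
```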

Even with batch size 64, the A100s are nowhere near fully saturated with GPU Memory, so I likely could run 128 or even 256 batch, which isn't something I ever expected to have access to. Flux tends to cap out and blorp after 8 batch.

I read that a higher batch size like this is best spent with a higher learn rate. I was not willing to do the math to make that work with cosine repeat 2 and my particular formula last night, so I'll figure out 128 batch and 256 batch later. For now it's cooking at 64 batch.
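For reference, the two usual rules of thumb for this are linear scaling (double the batch, double the learn rate) and square-root scaling. The sketch below applies both to the UNET LR of 0.00001 at batch 64, and also shows a plain cosine-with-hard-restarts curve, assuming "cosine repeat 2" means two cycles of that scheduler. Neither is the particular formula mentioned above, which isn't shown in this article.

```python
import math


def scale_lr(base_lr, base_batch, new_batch, rule="linear"):
    """Scale a learning rate for a new batch size using a common heuristic."""
    ratio = new_batch / base_batch
    if rule == "linear":
        return base_lr * ratio
    if rule == "sqrt":
        return base_lr * math.sqrt(ratio)
    raise ValueError(f"unknown rule: {rule}")


def cosine_with_restarts(step, total_steps, base_lr, num_cycles=2):
    """Cosine decay that jumps back to base_lr at the start of each cycle (no warmup)."""
    progress = step / max(1, total_steps)
    if progress >= 1.0:
        return 0.0
    cycle_pos = (num_cycles * progress) % 1.0  # position inside the current cycle, 0..1
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * cycle_pos)))


for batch in (128, 256):
    linear = scale_lr(1e-5, 64, batch, "linear")
    sqrt = scale_lr(1e-5, 64, batch, "sqrt")
    print(f"batch {batch}: linear {linear:.2e}, sqrt {sqrt:.2e}")
```

Square-root scaling is the more conservative of the two, so it's the safer starting point when the schedule restarts back to the full rate midway through the run.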

WHYYYY alpha 9 and dims 20? WHY THAT!?!?!

This is an interesting one, as it's both a minor slide into insanity and a validation of normalized math.

After training CLIP_L so much and seeing the outcomes, I had some unique insight into these numbers. Something that only someone who really gets their hands on the tools with my type of brain can see, and I saw things in the outcome values that reflect these numbers. I know for a fact that I'm having some confirmation bias, but that's fine. I'm aware of it, so I can put it aside when the math doesn't line up.

There are many repeated 9s. Enough that, months ago, I wanted to try literally training with a hard-structured alpha of 9, but I never actually did it because the math didn't line up. I don't know why I keep seeing them, and I don't know if it'll work. This is a full-on experiment based on normalization, and I saw enough 9s in my normalization to force this as a possibility.

That was what I thought anyway, before I started doing the actual math for it.

The real reason is the relation to pi and cosine repeats. It's very difficult for me to explain with words, but there are formulas related to it in my code. The entire normalization system said these were the perfect numbers to use based on the outputs of the clip checks.

Technically it wanted 9.13117~, and 20.312219~, and I wasn't going to yield the tags nor the images after running the full tag analysis and reverse inference checks.
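One way to sanity check the rounding: LoRA trainers such as sd-scripts multiply the learned update by alpha / dim, and the dim (rank) has to be a whole number anyway. So what matters is how far that ratio moves when 9.13117 and 20.312219 become 9 and 20. This is only a quick check of the ratio, not the normalization math itself.

```python
def lora_scale(alpha, dim):
    """Multiplier applied to the LoRA update: alpha divided by rank."""
    return alpha / dim


used = lora_scale(9, 20)
suggested = lora_scale(9.13117, 20.312219)
relative_change = abs(used - suggested) / suggested

print(f"used {used:.4f}, suggested {suggested:.4f}, change {relative_change:.2%}")
```

The ratio moves by roughly a tenth of a percent, so rounding to whole numbers costs essentially nothing in effective scale.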

I keep seeing the 9s, I might be going crazy, or Nikola Tesla might have been onto something more than just the math that related to it.

Setting up the trainer WAS tricky but not too difficult

Clone main kohya_ss gui, then run the runpod setup.

When Kohya is installed and fully up and running, cd to the sd-scripts folder, and then run this:

git checkout dev

./../venv/bin/python -m pip install -r "requirements.txt"

Since Kohya is already running, it's semi-encapsulated, and the requirements for sd-scripts are a little different but not different enough to smash the GUI to bits... and I still don't know why, I just know it works, and it's absolutely blazingly fast compared to flux.

Tagging structure

I used a tagging structure similar to what I used to train Flux, but omitted the complex captioning. I don't want this thing to know those complex captions. I don't trust CLIP_G and CLIP_L to differentiate and use them with Noob currently, so BOORU TAGGING IT IS!

I used offset, aesthetic, relative position, and a couple other experiments all in the same tag set.

So, hopefully by epoch 37 the normalization will complete and it'll have totally gutted and destroyed the model! (I hope it actually works).

Epoch 15 testing

It's only about a 20-hour training cycle, which, by the way, would potentially take weeks on this hardware with Flux.

The initial findings show a clear fidelity boost.

Original:

Euler - Simple

Steps: 20

CFG: 6

Upscale: 1.25x at 0.66 denoise

Positive: 1girl,

brown hair, waving, smile,

highres, absurdres, masterpiece, 3d,

Negative: nsfw, worst quality, old, early, low quality, lowres, signature, username, logo, bad hands, mutated hands, mammal, anthro, furry, ambiguous form, feral, semi-anthro,

3d juicer Epoch 15

It's introduced depth of field, motion blur, and action states.

The video images are doing something very very interesting.

I'll update this article with more images as more epochs come in until the release.

Update 2:

Testing the depiction-position tags.

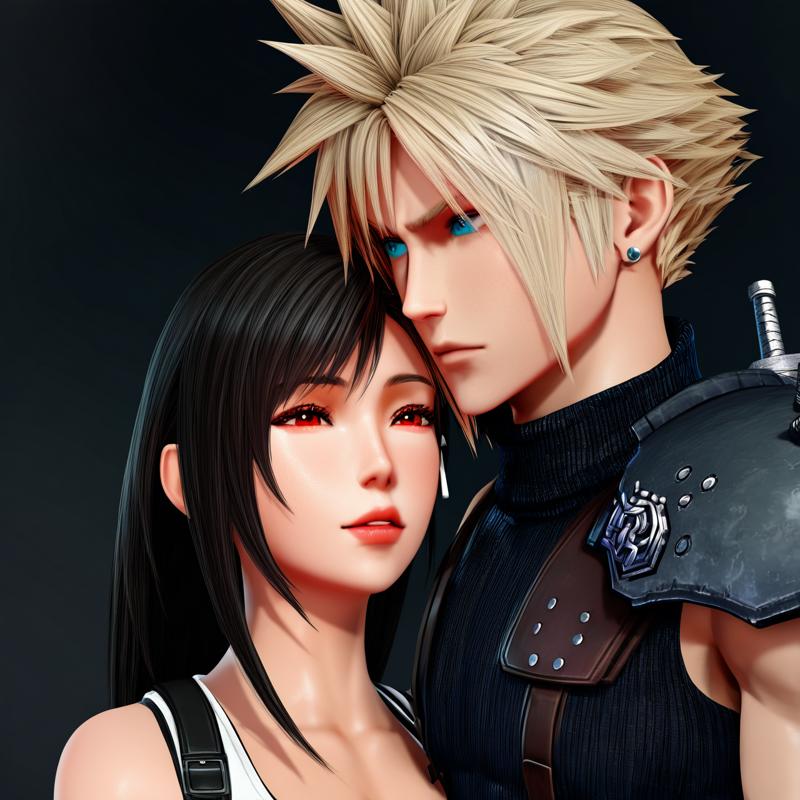

1boy, 1girl,

cloud strife, tifa lockhart,

depicted-upper-right head,

(depicted-lower-left head,:1.3)

highres, absurdres, newest, masterpiece, 3d, 3d \(artwork\),

<lora:noob_3d_juicer_v1-000018:1>

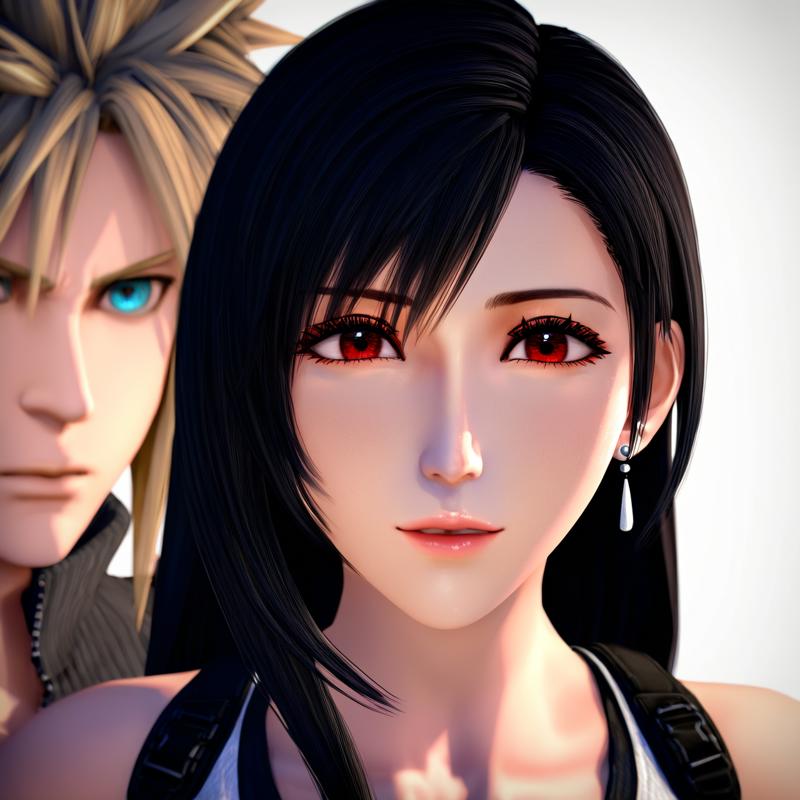

1boy, 1girl, duo,

cloud strife, tifa lockhart,

portrait,

depicted-middle-right quarter-frame collarbone,

depicted-lower-left half-frame head,

highres, absurdres, newest, masterpiece, 3d, 3d \(artwork\),

<lora:noob_3d_juicer_v1-000018:1>

They are hit or miss for now, but the depicted-position tags definitely have an effect on every tag I've tried them with. It's not a strong enough effect yet, but it's better than placebo.
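To make the tag format concrete, here is one way tags shaped like these could be derived from a detection box. This is a guess at the idea, not the tagger that built the dataset: the 3x3 grid, the area thresholds, and the "center" column name are all hypothetical. Only the `depicted-<row>-<column>`, `quarter-frame`, and `half-frame` pieces come from the prompts above.

```python
def position_tag(box, label):
    """Build a depicted-position tag from a normalized box (x0, y0, x1, y1).

    Coordinates run 0..1 with the origin at the top-left of the image.
    """
    x0, y0, x1, y1 = box
    cx, cy = (x0 + x1) / 2, (y0 + y1) / 2

    # Which third of the image the box center falls in.
    col = "left" if cx < 1 / 3 else "right" if cx > 2 / 3 else "center"
    row = "upper" if cy < 1 / 3 else "lower" if cy > 2 / 3 else "middle"

    # Rough size bucket from the fraction of the frame covered (made-up thresholds).
    area = (x1 - x0) * (y1 - y0)
    if area >= 0.3:
        size = "half-frame"
    elif area >= 0.1:
        size = "quarter-frame"
    else:
        size = ""

    parts = [f"depicted-{row}-{col}", size, label]
    return " ".join(part for part in parts if part)


print(position_tag((0.6, 0.0, 1.0, 0.4), "head"))
print(position_tag((0.0, 0.4, 0.6, 1.0), "head"))
```

The first call gives `depicted-upper-right quarter-frame head` and the second gives `depicted-lower-left half-frame head`, matching the shape of the tags used in the prompts.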

Not a bad first round.

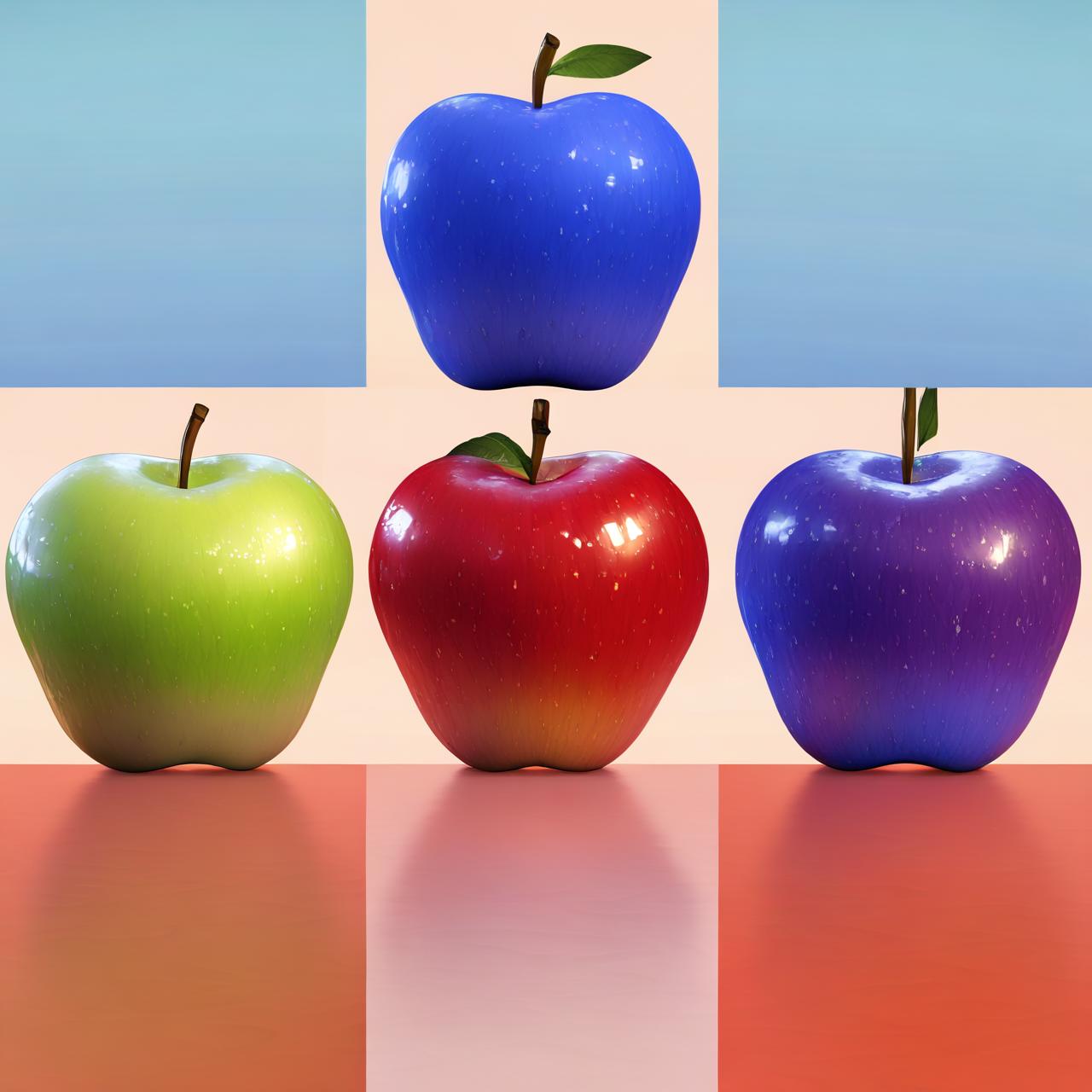

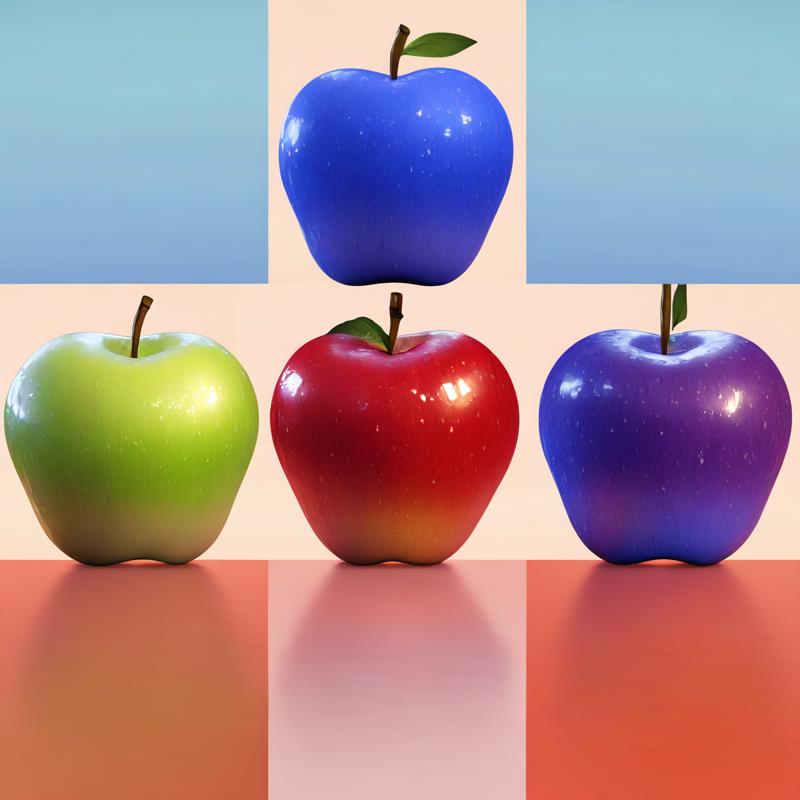

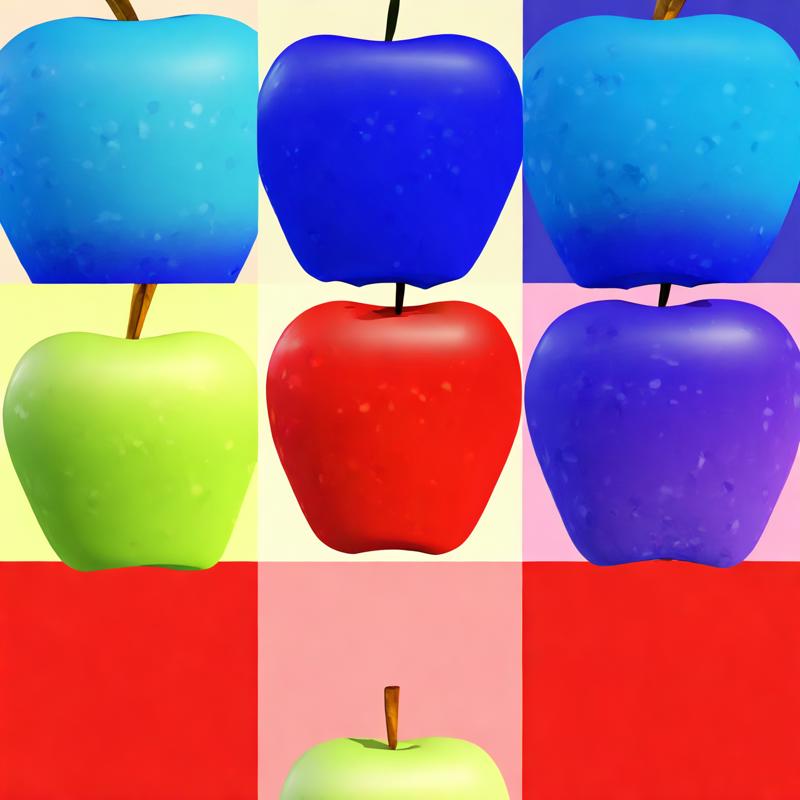

four apples,

(depicted-upper-left quarter-frame red apple,

depicted-lower-right quarter-frame blue apple,

depicted-upper-right quarter frame green apple,

depicted-lower-left quarter-frame purple apple,:1.4)

highres, absurdres, newest, masterpiece, 3d, 3d \(artwork\),

With the lora:

Without the lora:

There is 100% a causal response here.