Content warning: I'll explain stuff most people either already know or don't bother to know. It will be "simplified" and may contain small misunderstandings, but it should not be far off and should stay understandable :D

In order to train a LoRA, I believe understanding how they work and what they do is helpful. But before jumping into LoRA, let's do a quick recap on diffusion models, with a focus on SDXL. I said I came for the math, didn't I? (but indeed, I stayed for the waifus ^^;)

Introduction

Neural Networks - The short version

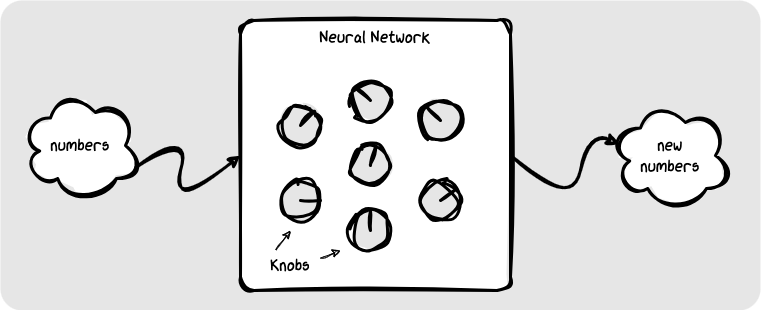

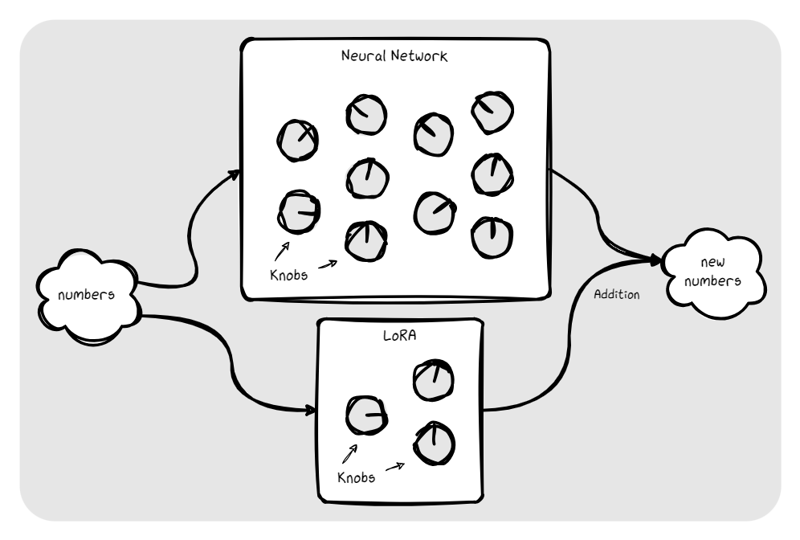

Neural Networks, the core of all the current AI models, are best understood as a magic black box, with a lot of knobs on it, that eats and spits out numbers depending on the "position" of the knobs.

Those knobs are the "weights" of a neural network: numeric values, most of the time stored as "16-bit floating point numbers" (fp16 for short), that make it output different results for the same input.

The amount and type of knobs, and how they are connected in the "box", is what makes "a model" => a neural network which can be used to "model" the way a specific "magic" math function would behave, like generating waifu pictures from nothingness :D

To configure the knobs, aka "training the model", we feed it some known numbers with a known expected result, run the machine, and see what comes out. A "loss function" measures the difference between what came out and the expected result, and then we "fix" the position of the knobs, from the end to the beginning, in a process called "backpropagation".

Once you do that enough times, the model starts to output what you want and that's it :D

The initial position of the knobs is random, and you don't change them too much at a time: a "learning rate" sets the size of each adjustment, so you get closer and closer instead of blindly jumping around to find the best position for them.
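To make the "fix the knobs a little at a time" idea concrete, here is a minimal sketch with a single knob. Everything in it (the data, the `train` helper, the learning rate value) is made up for illustration, and the knob starts at 0.0 instead of a random value so the result is reproducible.

```python
# Toy "model" with a single knob (weight): output = weight * x.
# Hypothetical training data: we want the model to learn y = 3 * x.
samples = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]


def train(samples, learning_rate=0.05, epochs=200, weight=0.0):
    """Adjust the single knob with plain gradient descent."""
    for _ in range(epochs):
        for x, expected in samples:
            predicted = weight * x
            # Loss = (predicted - expected)^2, so its slope w.r.t. the knob is:
            gradient = 2.0 * (predicted - expected) * x
            # Nudge the knob a little in the direction that lowers the loss.
            weight -= learning_rate * gradient
    return weight


trained_weight = train(samples)
print(round(trained_weight, 3))
```

With a learning rate that is too big, the knob would overshoot and bounce around instead of settling, which is the same problem you meet later when training a LoRA.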

Using (or even worse, training!) a model means doing a lot of math (and computer CPUs natively only know basic stuff like additions, everything else is code), especially given the large amount of knobs (we are talking millions and billions of those!). That's why GPUs are best at this: they can handle a lot of math in parallel and are built for this kind of computation (like matrix multiplication). But before doing this, they must load the values of the knobs into their memory, which is why we need more VRAM for bigger models.
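As a rough back-of-the-envelope sketch of why VRAM matters, the size of the weights alone is just "number of knobs times bytes per knob". The parameter count below is an assumption (about 2.6 billion, the figure usually quoted for the SDXL UNET), and the helper name is made up; real usage is higher because of intermediate results.

```python
def weights_size_gb(parameter_count, bits_per_weight):
    """Memory needed just to hold the weights, in gigabytes (10^9 bytes)."""
    return parameter_count * bits_per_weight / 8 / 1e9


# Assumption: roughly 2.6 billion knobs for the SDXL UNET.
unet_parameters = 2_600_000_000

fp32_gb = weights_size_gb(unet_parameters, 32)
fp16_gb = weights_size_gb(unet_parameters, 16)
print(f"fp32: {fp32_gb:.1f} GB, fp16: {fp16_gb:.1f} GB")
```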

Quantization (the FP8, NF4, Q4_K, etc...) is a way to reduce the footprint of the numbers in memory by using fewer bits, sacrificing precision (aka quality of the result) in the process. When done right (for example, only quantizing the weights that have less impact), you can save a lot of precious VRAM.
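The trade-off can be seen on a handful of numbers. This is only a sketch of a very basic scheme (8-bit integers sharing one scale factor), not how FP8, NF4 or Q4_K actually work internally; the weights and helper names are invented for the example.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] that share one scale factor."""
    scale = max(abs(w) for w in weights) / 127.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Turn the small integers back into approximate floats."""
    return [q * scale for q in quantized]


weights = [0.81, -0.42, 0.003, 0.127, -1.27]  # hypothetical knob values
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# The biggest difference between original and restored values is the
# precision we paid for the smaller storage.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, max_error)
```

Notice how the tiny value 0.003 becomes exactly 0 after the round trip: small details are the first thing quantization loses.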

There is also pruning (removing knobs that don't matter much) and distilling (using a big model to train a smaller model to do something similar on a smaller range of inputs/results).

Stable Diffusion XL - More details

Stable diffusion is not one of those black boxes, but three. And it's a special kind of model: a diffusion model.

CLIP

Let's start with the easy part: the CLIP (aka the Text Encoder or TENC).

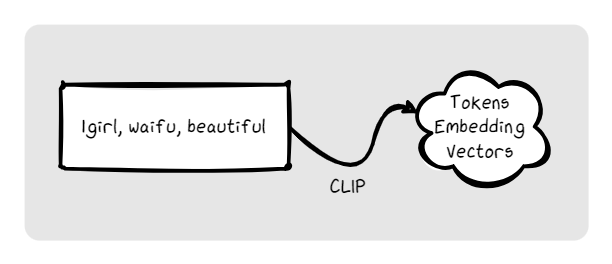

A prompt is text input provided by a human. It has a meaning for a human. But that's not MATH. And a model NEEDS math as an input (and human concepts are alien to computers, go figure ^^;)

That's where the CLIP/text embedding/computer vision models come in. Those are models built (aka trained) to take the base text of the prompt, slightly processed, and convert it into mathematical values representing the "concepts" behind the prompt. There is of course the reverse too: models that take images, search them for concepts and output the associated "human text".

There are very good (aka huge) models for that, but Stable Diffusion leveraged a simple one at first, and SDXL then added a second, different one (but still simple) to get two different sets of math values for the same prompt, to try and catch with one CLIP the concepts the other may struggle with.

And that's also why Stable Diffusion and its derivatives struggle more with natural language. Flux went further: it still leverages a CLIP, but adds T5, a much bigger text encoder coming from language models, which can understand concepts not at word level but at sentence level.

As far as I understand, Pony and Illustrious didn't bother too much with that and chose to drop natural language in the prompts for training, preferring tags, which is why you get results closer to what you expect if you prompt with this idea in mind instead of writing whole sentences like for Flux. Base SDXL still has the "original" training using natural language, but short sentences are the max you can do ^^; (after verification, Illustrious does use natural language too, but still not at Flux level)
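To picture the "two sets of math values for the same prompt" idea, here is a shapes-only sketch with no real model involved. The sizes are my assumption of the usual SDXL setup (77 tokens, 768 values per token for the first encoder, 1280 for the second), and `fake_encoding` is a made-up stand-in for a text encoder.

```python
# Shapes only, no real model: assumed SDXL text-encoder dimensions.
TOKENS = 77        # number of token slots in a CLIP prompt
CLIP_L_DIM = 768   # first text encoder
CLIP_G_DIM = 1280  # second text encoder


def fake_encoding(tokens, dim, fill):
    """Stand-in for a text encoder: one vector of `dim` numbers per token."""
    return [[fill] * dim for _ in range(tokens)]


clip_l = fake_encoding(TOKENS, CLIP_L_DIM, 0.1)
clip_g = fake_encoding(TOKENS, CLIP_G_DIM, 0.2)

# The two results are joined token by token into one longer vector.
combined = [a + b for a, b in zip(clip_l, clip_g)]
print(len(combined), len(combined[0]))
```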

VAE

Now that we have the prompt as math, we have our input. But what about the output? Images are just bits and bytes, aren't they? So, they can be straight-out math?

But spitting out images directly (and working internally on them) is looking for trouble with models. That would make the number of knobs explode, AND pixels are limited to a 2-dimensional space (+colors), while models can handle math in more complex ways.

That's when the VAE comes in. Those models are built (aka trained) to decode (and encode) stuff from "human usage" level to "computer usage" level. This "computer only" version of the image is the "latent", which is going to be the picture once the main model has done its magic... and speaking of magic...
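To give an idea of how much smaller the latent is, here is a small sketch of the arithmetic. It assumes the usual SD/SDXL VAE layout (each side divided by 8, 4 channels); the helper names are made up.

```python
def latent_shape(width, height, downscale=8, channels=4):
    """Shape (channels, height, width) of the latent for a given image size."""
    return (channels, height // downscale, width // downscale)


def value_count(shape):
    """How many numbers are stored for a given shape."""
    total = 1
    for size in shape:
        total *= size
    return total


image = (3, 1024, 1024)            # RGB image, 1024x1024 pixels
latent = latent_shape(1024, 1024)  # what the UNET actually works on
compression = value_count(image) / value_count(latent)
print(latent, compression)
```

So the main model juggles roughly 48 times fewer numbers than if it worked on the pixels directly.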

UNET

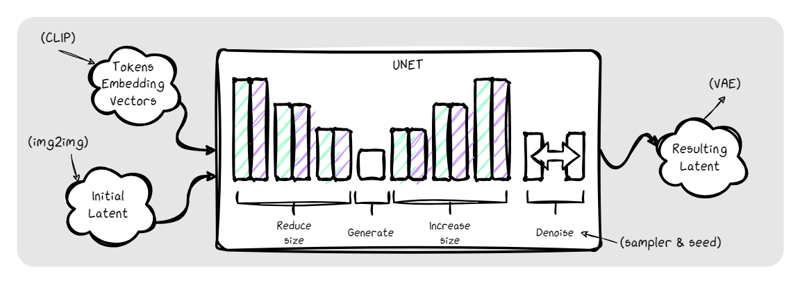

The UNET model is the main component of the diffusion model. And it's a very special kind of magic box. It has been trained to "denoise" a latent image following what it got from the CLIP.

The training has been done (short version of the story) this way:

Get images, tag/caption them, generate from tag/caption the "concept" (CLIP)

Generate latents for those images (VAE!)

Add noise (like, blur it with randomness, like old TV static) => the type of noise and how it's added is a parameter!

Feed the model the noisy latent and the CLIP result, and make it output a "denoised" latent

Compare with the original and fix the knob values to get better, because obviously it will not be good at first (with the loss function and various other math optimization stuff)

Rinse and repeat, with various levels of noise, concepts and so on.

Tadah! Test your model with new prompts (CLIP) and check the resulting image (VAE).
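The "add noise" step of the recipe above can be sketched in a few lines. This uses the common formulation where a single number (called `alpha_bar` here) says how much of the original signal is kept; the latent values are invented and a real latent has thousands of values, not four.

```python
import math
import random


def add_noise(latent, noise, alpha_bar):
    """Blend a clean latent with noise; alpha_bar (0 to 1) is how much signal is kept."""
    keep = math.sqrt(alpha_bar)
    add = math.sqrt(1.0 - alpha_bar)
    return [keep * x + add * n for x, n in zip(latent, noise)]


rng = random.Random(0)
clean_latent = [0.5, -1.0, 0.25, 2.0]  # stand-in for a VAE latent
noise = [rng.gauss(0.0, 1.0) for _ in clean_latent]

slightly_noisy = add_noise(clean_latent, noise, alpha_bar=0.99)
mostly_noise = add_noise(clean_latent, noise, alpha_bar=0.01)
print(slightly_noisy)
print(mostly_noise)
```

During training the model sees latents at many different noise levels, and since we know exactly which noise we added, we always have the "expected result" to compare against.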

BTW, the CLIP can be (and most of the time is) trained to integrate new concepts.

Once the model is strong enough, it can "denoise" even something that is ONLY noise, generating new stuff from nothing with only what it has learned.

But since it has been trained to denoise only a specific amount of added noise, only one "pass" would not be enough, hence the "steps". (short and basic version of the explanation, it is a bit more complicated than that).

The output is fed back as input a number of times, with a specific "schedule" on how much noise (and how) it should remove each time, trying to "converge" to an image of a waifu.

Since here we are trying to get a final "clean" result, each step must go toward the same goal and not "break" what a previous step did. Some schedulers are better at this, with fewer steps (but sometimes longer steps) and with a different "feel" to the result since they don't work the same way (but they leverage the same model and input, so they don't produce a completely different result either).
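Here is a minimal sketch of such a step-by-step loop, in the style of a simple Euler sampler. Big assumption: the "model" is replaced by a cheat (`perfect_denoiser`) that already knows the clean answer, which a real UNET only estimates; the schedule values are invented too.

```python
def perfect_denoiser(noisy, sigma, target):
    """Stand-in for the UNET: a real model only *estimates* the clean latent."""
    return list(target)


def euler_sample(start, sigmas, target):
    """Walk from a very noisy latent down to noise level 0, one step at a time."""
    x = list(start)
    for sigma, sigma_next in zip(sigmas, sigmas[1:]):
        denoised = perfect_denoiser(x, sigma, target)
        # Estimated noise direction: from the clean guess toward the noisy latent.
        direction = [(xi - di) / sigma for xi, di in zip(x, denoised)]
        # Move to the next (lower) noise level of the schedule.
        x = [xi + (sigma_next - sigma) * di for xi, di in zip(x, direction)]
    return x


target = [0.5, -1.0, 0.25]                # the "clean" latent we hope to reach
noise = [1.2, -0.7, 0.3]
sigmas = [10.0, 5.0, 2.0, 1.0, 0.5, 0.0]  # hypothetical 5-step schedule
start = [t + sigmas[0] * n for t, n in zip(target, noise)]

result = euler_sample(start, sigmas, target)
print(result)
```

With a real model the estimate changes a bit at every step, which is why the number of steps and the choice of schedule change the final picture.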

That's also where the "prediction" comes in. There are several ways to define what the model actually outputs at each step. With "Epsilon" prediction, the model predicts the noise that was added, which is then removed from the latent. A more "advanced" method is "V" prediction, where the model predicts a mix of the noise and the clean image, with proportions that depend on the current noise level, which behaves better on the very noisy steps.
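As a hedged sketch of the difference between the two prediction types, here are the usual textbook formulas applied to a single number. `alpha_bar` is the "how much signal is left" value for the current noise level; all function names and numbers are made up for the example.

```python
import math


def mix_factors(alpha_bar):
    """Signal and noise factors for a given noise level."""
    return math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)


def make_noisy(x0, eps, alpha_bar):
    a, b = mix_factors(alpha_bar)
    return a * x0 + b * eps


def v_target(x0, eps, alpha_bar):
    """What a V-prediction model is trained to output."""
    a, b = mix_factors(alpha_bar)
    return a * eps - b * x0


def x0_from_eps(x_t, eps, alpha_bar):
    """Recover the clean value from an Epsilon prediction."""
    a, b = mix_factors(alpha_bar)
    return (x_t - b * eps) / a


def x0_from_v(x_t, v, alpha_bar):
    """Recover the clean value from a V prediction."""
    a, b = mix_factors(alpha_bar)
    return a * x_t - b * v


x0, eps, alpha_bar = 0.8, -0.3, 0.36
x_t = make_noisy(x0, eps, alpha_bar)
v = v_target(x0, eps, alpha_bar)
print(x0_from_eps(x_t, eps, alpha_bar), x0_from_v(x_t, v, alpha_bar))
```

Both recover the same clean value, but the Epsilon version divides by a factor that gets close to 0 when the latent is almost pure noise, while the V version does not.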

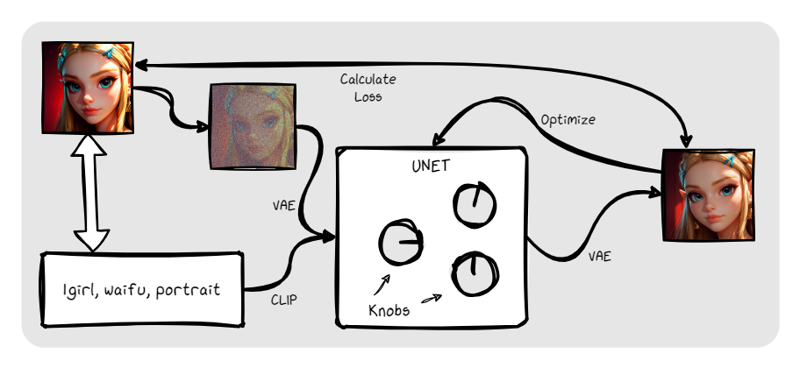

Training (or fine-tuning, aka training an already trained model some more) a diffusion model is done on the UNET/CLIP part. And that's DAYS of multiple GPUs grinding math since the models are HUGE. And then you have a new version that is several GB to distribute...

There must be a better way to handle this, right?

Low-Rank Adaptation - LoRA

And that's when the idea of LoRA came in.

LoRA were introduced to help fine-tune another kind of model (the LLMs, the models in the chatbots) but were adapted to diffusion models.

The idea is simple:

Build a VERY small model, with only a tiny fraction of the "knobs" of the main model (think a few million instead of billions).

Run it in parallel with the main model

Feed it the same input

Add its output to the main model output

When doing training, fix the knobs on the LoRA ONLY, not the main model.

And voilà, you get a "patch"-like model, much smaller than the main one (typically from a few MB to a few hundred MB), that can be plugged into the main model to alter its result. It can't be used to completely change the output of the main model but it is good enough to add new clothes for your waifu (or a new waifu!)
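The recipe above can be sketched on one tiny layer. This follows the usual low-rank formulation (two small matrices, here called `down` and `up`, whose product is added to the frozen layer's output); the 4x4 size and all the values are invented, and real layers are far bigger.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def lora_forward(x, base_weight, down, up, scale=1.0):
    """Frozen layer output plus the small LoRA branch fed with the same input."""
    base_out = matvec(base_weight, x)
    lora_out = matvec(up, matvec(down, x))
    return [b + scale * l for b, l in zip(base_out, lora_out)]


# Frozen main-model layer (never modified during LoRA training).
base_weight = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
x = [1.0, 2.0, 3.0, 4.0]

# "Empty" LoRA: the up matrix starts at zero, so nothing changes yet.
down = [[0.5, 0.0, -0.5, 0.0]]        # 1 x 4
empty_up = [[0.0], [0.0], [0.0], [0.0]]  # 4 x 1
untrained = lora_forward(x, base_weight, down, empty_up)

# After (pretend) training, only the LoRA knobs have moved.
trained_up = [[1.0], [0.0], [0.0], [2.0]]
patched = lora_forward(x, base_weight, down, trained_up)
print(untrained, patched)
```

The main layer has 16 knobs here, the LoRA only 8, and unplugging the LoRA gives back the original model untouched.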

Training a LoRA

LoRA for SDXL - What are they?

So, they are a special kind of LoRA, adapted from the original idea to work with the CLIP/UNET structure of SDXL. As far as I can tell, the initial idea is here: https://github.com/cloneofsimo/lora

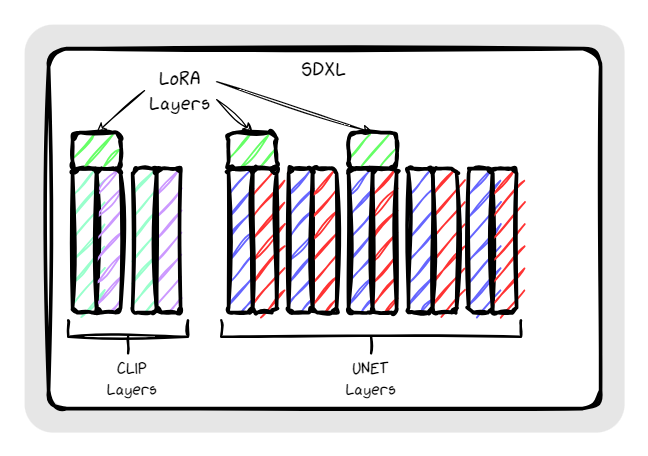

A base LoRA works with a single model, but a LoRA for SDXL has layers for CLIP and for UNET (generally, as we will see later). Each of these layers is added to the corresponding layer of the associated model, matched by layer name (UNET layer for UNET, CLIP for CLIP).

On top of that, there are several types of LoRA (standard, LyCORIS, LoCon, DyLoRA, and so on), which basically differ by the type of "knobs" and the way they are connected (to give better outputs with the same amount of knobs).

But an important part is the "dimension" (also called the rank) of the LoRA. This basically translates to the amount of knobs, and with it how much the LoRA can learn. This can be 8/16/32/64/128 and so on, with some odd values in between. Most LoRA work well with a size of 32, more complex concepts may need to go for 128. I found out I prefer 64-dimension LoRA. Also, since a LoRA is just "a small modification", the numbers on the knobs tend to be small. That's why a trick is used: the LoRA output is multiplied by a factor (the "Alpha" divided by the dimension), so the knobs themselves can hold larger numbers during training and avoid losing precision.
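To see how the dimension drives the number of knobs, and what the Alpha does, here is a small sketch. It assumes the common convention where the LoRA output is multiplied by alpha / dimension; the 1280x1280 layer size is only an example and the helper names are made up.

```python
def lora_parameter_count(in_features, out_features, dim):
    """Knobs in the two small matrices (dim x in) and (out x dim)."""
    return dim * (in_features + out_features)


def lora_scale(alpha, dim):
    """Multiplier applied to the LoRA output (assumed convention: alpha / dim)."""
    return alpha / dim


full_layer = 1280 * 1280  # knobs in one hypothetical full layer
for dim in (8, 32, 64, 128):
    count = lora_parameter_count(1280, 1280, dim)
    share = 100.0 * count / full_layer
    print(f"dim {dim}: {count} knobs ({share:.1f}% of the full layer)")

# Alpha equal to half the dimension halves the effect of the LoRA knobs,
# so training pushes them toward larger values to compensate.
print(lora_scale(16, 32))
```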

How can we train them?

There are several tools and, of course, the on-site training tool is praised by a lot of people. But if you have access to a good GPU, you can train your own LoRA locally using, for example, Kohya_ss (and its GUI).

Now, since this article is getting long (and I will add illustrations to help understand some concepts), I'll stop it here and publish another one later on the specifics of the training :D

But the basic idea is to get a dataset of pictures, much smaller than for model fine-tuning. Caption it, run a script to create an "empty" LoRA, feed it the dataset and captions, and train the knobs on the LoRA only.

This leverages the same mechanisms as training the SDXL model itself (learning rate, steps, epochs, optimizer, dropout), but since we are not afraid of breaking the main model (and there are far fewer knobs), instead of a small learning rate and tens of thousands of steps, we go to eleven with big changes, at the risk of "overtraining".

AND THAT IS THE DIFFICULT PART:

do enough to actually do something

not do so much that it destroys the end result

Here, most people go with gut feelings, experimentation and "general agreement on values that kinda work". I am still there too, but I will try to explain the various parameters I feel are the most important (the ones we should care about and not leave at their default values).
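To get a feel for the step and epoch numbers mentioned above, here is a tiny sketch of how the total is usually counted. The "repeats" idea (showing each image several times per epoch) is an assumption borrowed from Kohya-style trainers, and the dataset numbers are made up.

```python
import math


def total_steps(image_count, repeats, epochs, batch_size):
    """Number of knob updates for a whole training run."""
    steps_per_epoch = math.ceil(image_count * repeats / batch_size)
    return steps_per_epoch * epochs


# Hypothetical small dataset: 40 pictures, each seen 5 times per epoch.
steps = total_steps(image_count=40, repeats=5, epochs=10, batch_size=4)
print(steps)
```

A few hundred to a few thousand steps is a long way from the tens of thousands used for a full model, which is exactly why each step has to change more.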