Results and Comparisons

To start, I'd like to demonstrate the results of my strategy for making a Flux LoRa of the Glock pistol.

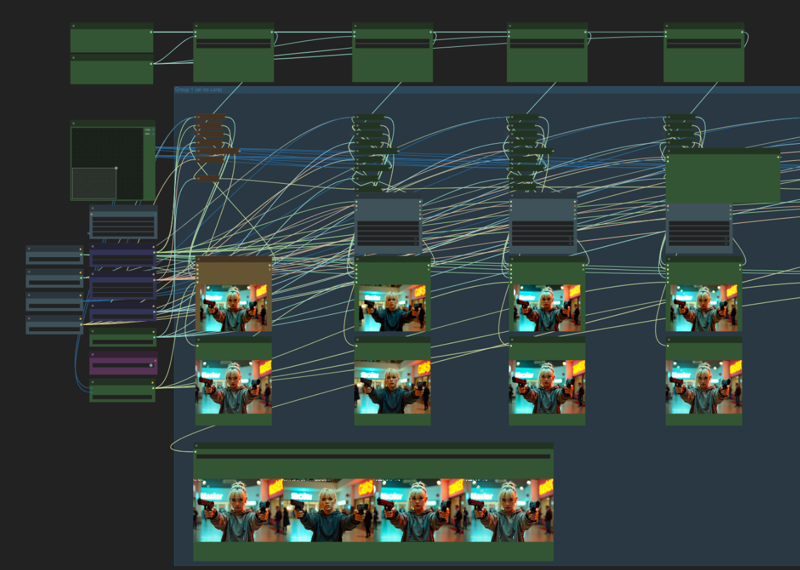

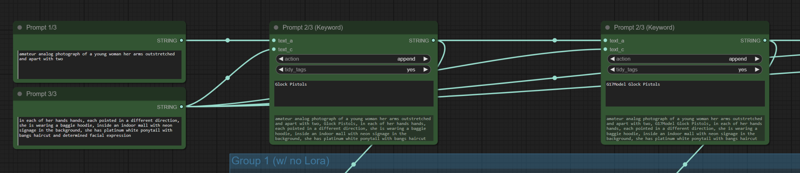

This is a comparison between no LoRa, my LoRa, a competitor Glock LoRa, and a gun LoRa.

I've redacted the names of the other LoRas because the purpose of this is just to show that my strategy is, in my opinion, better able to execute the concept.

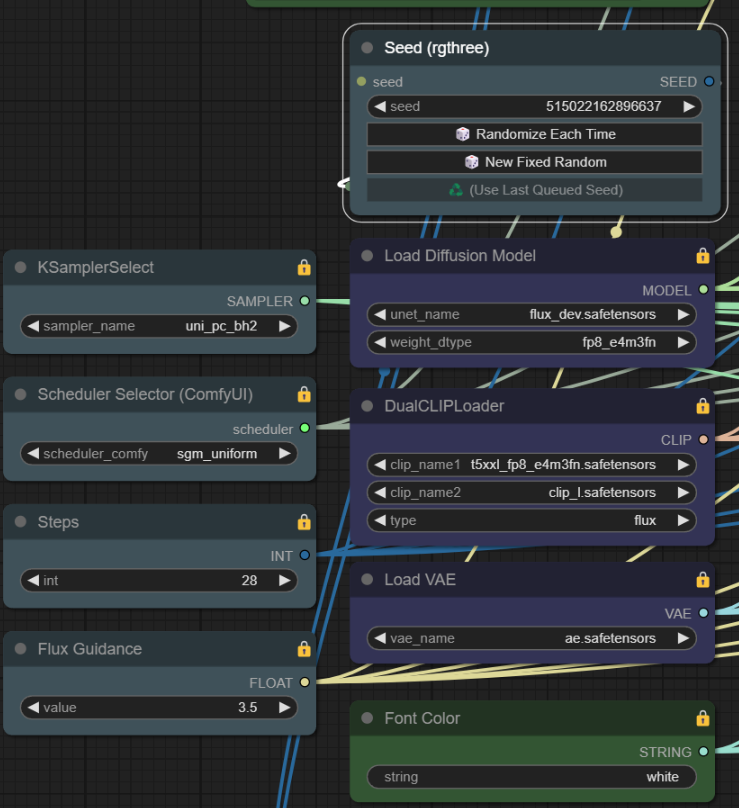

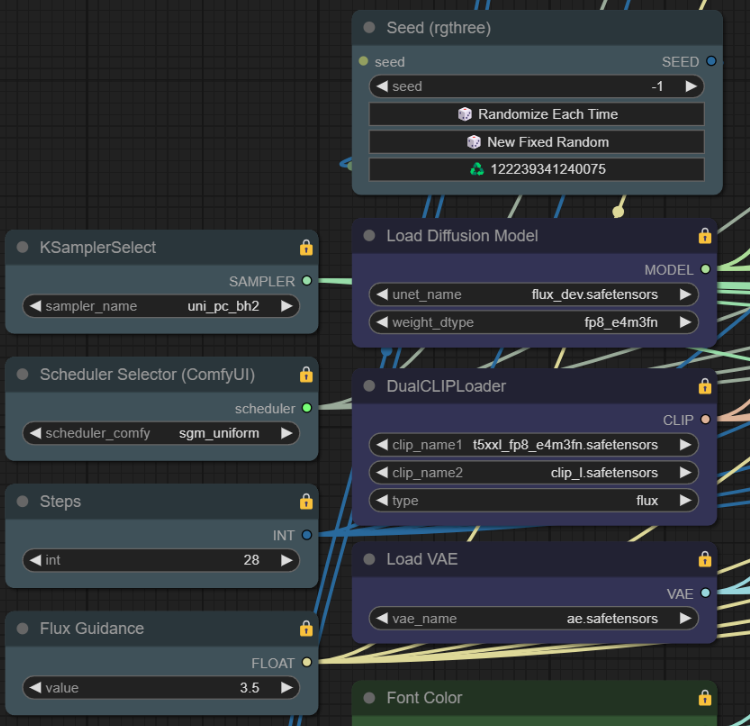

Below is the workflow I used in order to compare outputs:

All generations share the same seed, sampler, and steps, and each prompt is identical apart from the keyword used to invoke the LoRa. I went to each model page and used the keyword it provides. The exception is the gun LoRa, which does not provide one, so I used the same keyword as for the no-LoRa generation.
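
As a rough sketch of that setup, the comparison can be written down as a list of jobs where only the LoRa file and the keyword change. Everything concrete here is an assumption for illustration: the prompt template, file names, seed, sampler name, and step count are hypothetical, and the competitor keyword is a placeholder because the real names are redacted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Job:
    label: str
    lora_file: Optional[str]  # None means base Flux with no LoRa loaded
    prompt: str
    seed: int
    sampler: str
    steps: int


# Hypothetical prompt template; only {keyword} differs between runs.
TEMPLATE = "photo of two {keyword} on a table, one showing each side"

# Hypothetical file names and placeholder keywords (the real ones are redacted).
VARIANTS = [
    ("no LoRa", None, "Glock pistol"),
    ("my LoRa", "g17model.safetensors", "G17Model Glock Pistol"),
    ("competitor Glock LoRa", "competitor.safetensors", "COMPETITOR_KEYWORD"),
    # The gun LoRa lists no keyword, so it reuses the no-LoRa wording.
    ("gun LoRa", "gun.safetensors", "Glock pistol"),
]


def build_jobs(seed=12345, sampler="euler", steps=20):
    """Return one job per variant, all sharing seed, sampler, and steps."""
    return [
        Job(label, lora, TEMPLATE.format(keyword=keyword), seed, sampler, steps)
        for label, lora, keyword in VARIANTS
    ]
```

Each job can then be handed to whatever generation tool you use; the point is only that the keyword and the LoRa are the sole variables.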

The prompt for the comparison image is a good demonstration of the LoRa's understanding of the concept because it requires that both sides of the Glock are shown at the same time.

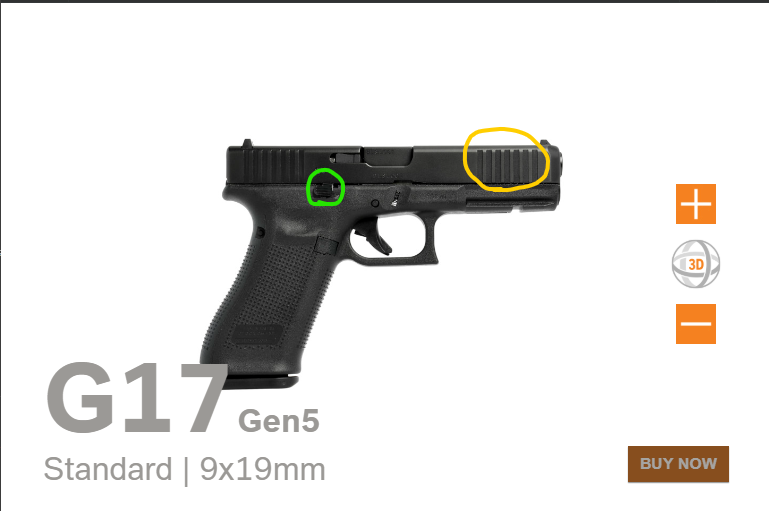

Below are images from Glock's official site:

It is safe to assume that all three LoRas were trained on images of Gen 4 or earlier Glocks, which all share the features I'll be highlighting later. None of the LoRas consistently generates a Glock with front slide serrations, which are only present on later Gen 5 Glocks. Gen 5 Glocks also have an ambidextrous slide release, which is additionally present on the left side of the pistol as seen from the barrel.

From the barrel's perspective, this is what the right side of a G17 Gen4 looks like, the distinguishing feature being the slide release, which is not present on the left side.

From the barrel's perspective, this is what the left side of a G17 Gen4 looks like, the distinguishing features being the ejection port and the extractor.

Here are all of those distinguishing features being represented correctly from my generation:

Flux seems to be able to generate only one type of pistol correctly, the M1911, and its features often appear in any pistol that it generates. The relevant one here is the barrel bushing, a part that Glocks do not have:

We can see in some of the generations that the front end of the pistol more closely resembles the M1911's barrel bushing. I'll also highlight that each of the other generations either mirrors the ejection port or mirrors the lack of it.

Here is a second comparison:

The Training Strategy - TLDR

Dataset quality and selection are topics that have been covered by people with far more experience than me. What I feel I've done differently here is the captioning strategy.

TL;DR: The captions should completely avoid all terminology that Flux has a strong association with. For the Glock LoRa, the following words were specifically avoided:

Glock

gun

firearm

weapon

pistol

handgun

Instead, the Glock was referenced with the keyword "G17Model", and its features were then described in context. Once the model is done training, using one of the avoided terms in a prompt may help with invoking the concept. For example, for my model I advise using the keyword "G17Model Glock Pistol".
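
A small check like the one below can catch captions that slip in one of the avoided words or forget the keyword. This is only a sketch: the function name and the whole-word, case-insensitive matching rule (which also catches simple plurals such as "guns") are my assumptions, not part of any training tool.

```python
import re

PROHIBITED = ["glock", "gun", "firearm", "weapon", "pistol", "handgun"]
KEYWORD = "G17Model"

# Whole-word, case-insensitive match; the optional "s" catches simple plurals.
_PATTERN = re.compile(
    r"\b(" + "|".join(map(re.escape, PROHIBITED)) + r")s?\b",
    re.IGNORECASE,
)


def check_caption(caption):
    """Return a list of problems found in one caption (empty list means OK)."""
    problems = [f"prohibited term: {m.group(0)}" for m in _PATTERN.finditer(caption)]
    if KEYWORD not in caption:
        problems.append(f"missing keyword: {KEYWORD}")
    return problems


if __name__ == "__main__":
    print(check_caption("The G17Model's slide has a matte black finish."))
    print(check_caption("A Glock pistol on a table"))
```

Running it over every caption file before training is cheap, and it is easier than spotting a stray "pistol" by eye in a few hundred captions.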

I also spend time testing which epochs give the most consistent results on prompts that were not part of the training captions.
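
One way to keep that epoch testing organized is to record a manual pass/fail judgment for each epoch and prompt, then compare pass rates. The sketch below assumes exactly that; the function names, the verbatim-match test for "not in the training captions", and the tie-break toward the earlier epoch are all hypothetical choices of mine.

```python
from collections import defaultdict


def held_out(prompts, training_captions):
    """Keep only prompts that do not appear verbatim among the training captions."""
    seen = {caption.strip().lower() for caption in training_captions}
    return [p for p in prompts if p.strip().lower() not in seen]


def most_consistent_epoch(ratings):
    """Pick the epoch with the highest pass rate.

    ratings: iterable of (epoch, prompt, passed) tuples from manual review.
    Returns (epoch, pass_rate); ties go to the earlier epoch.
    """
    totals = defaultdict(lambda: [0, 0])  # epoch -> [passes, total]
    for epoch, _prompt, passed in ratings:
        totals[epoch][0] += int(passed)
        totals[epoch][1] += 1
    rates = {epoch: passes / total for epoch, (passes, total) in totals.items()}
    best = min(rates, key=lambda epoch: (-rates[epoch], epoch))
    return best, rates[best]
```

The judgment of whether a generation "passes" is still made by eye; the code only does the bookkeeping.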

My Conjecture

Training LoRas for Flux often involves working around entrenched associations with certain concepts. These "stubborn" concepts can resist refinement because of how Flux's foundational dataset was likely created. Based on my observations, these challenges arise from generalized captioning processes during pre-training, which failed to capture specificity, and from the strong associations formed around dominant examples within certain categories.

Flux’s Generalized Understanding of Broad Classifications

Flux’s inability to accurately represent specific firearm models or nuanced configurations of wearable devices appears to result from generalized training processes during its creation. Here’s why this is significant:

Generalized Captioning During Pre-training

Flux is a 12-billion-parameter model, and it is almost certain that its vast dataset was captioned through automated processes. This is a reasonable and efficient approach for training a model of this scale, but it introduces limitations:

Lack of Specificity:

Automated captions are unlikely to differentiate between specific firearm models (e.g., a Glock 17 vs. a Sig Sauer P226). Instead, terms like “pistol” or “gun” were likely used universally, collapsing the distinctions between models into a single, generalized concept.

Minimal Curation:

Given the sheer scale of the dataset, it is unlikely that manual curation or specificity was applied to refine these captions.

The Conglomeration Effect

Because of this generalized captioning, Flux’s understanding of distinct categories (like pistols) becomes a conglomeration of all examples within that category:

Firearms:

Flux understands that a Glock is a pistol, but its idea of a pistol combines features from countless pistol models, leading to outputs that resemble hybrids rather than faithful reproductions of specific models.

Stubborn Concepts Due to Limitations of the Dataset

Some stubborn concepts I've come across don't seem to stem from Flux learning a broad category as a single concept, but rather from what I speculate to be a lack of diversity in the dataset.

Military Helmets and Night Vision

In the case of modern military helmets with night vision goggles, the difficulty was compounded: Flux’s understanding of military helmets was anchored in 1990s-era designs, while its concept of goggles was strongly tied to protective eyewear from the same period.

The Training Strategy - Long Version

The Solution: Sidestepping the Problematic Associations

The purpose of a LoRa is either to modify an existing concept or to introduce a new one. To deal with particularly entrenched concepts, the captions treat the training process as though the LoRa is introducing a completely new concept, avoiding problematic terms entirely and focusing on precise, observable details.

Key Principles of the Captioning Strategy

1. Avoid Prohibited Terms

To prevent the LoRa from competing with Flux’s entrenched understanding of broad categories, captions exclude terms associated with these concepts:

Firearms:

Avoid terms like “pistol,” “gun,” or “firearm.”

Use a neutral keyword (e.g., “G17Model”) and focus on physical attributes.

"The G17Model’s polymer frame features a textured grip, and the slide’s matte black finish catches the ambient light."

Wearable Devices:

Avoid terms like “helmet” and “goggles.”

Describe the system as “n0ds” and refer to components with neutral terms like “protective headpiece” or “optical device.”

"The n0ds include a quad-tube panoramic optical device with four evenly spaced lenses, mounted to a protective headpiece."
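
If you draft captions by hand first, a substitution pass can swap the avoided terms for their neutral replacements. This is a minimal sketch: the mapping reuses the replacement phrases mentioned above, but the table itself and the function name are hypothetical, and the output may still need a manual pass for grammar and capitalization.

```python
import re

# Avoided term -> neutral replacement (illustrative table for the n0ds captions).
SUBSTITUTIONS = {
    "night vision goggles": "optical device",
    "military helmet": "protective headpiece",
    "helmet": "protective headpiece",
    "goggles": "optical device",
}


def neutralize(caption, substitutions=SUBSTITUTIONS):
    """Replace avoided terms with neutral descriptors, longest phrase first."""
    # Longest first so "military helmet" is handled before plain "helmet".
    for term in sorted(substitutions, key=len, reverse=True):
        pattern = r"\b" + re.escape(term) + r"\b"
        caption = re.sub(pattern, substitutions[term], caption, flags=re.IGNORECASE)
    return caption


if __name__ == "__main__":
    print(neutralize("The helmet is matte black, with the goggles mounted in front."))
```

Replacing the longer phrases first avoids leftovers such as "military protective headpiece".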

2. Emphasize Observable Features

Captions focus on physical, observable details of the target object, avoiding interpretive or categorical language:

G17Model:

Captions describe its slide, grip, and barrel, avoiding any mention of it being a pistol:

"The G17Model’s slide is engraved with serial markings, seen in sharp focus."

"Close-up of the G17Model’s barrel, with fine grooves visible at the muzzle."

n0ds:

Focus on details like lens alignment, mounting points, and materials:

"The protective headpiece is matte black, with modular mounting points and an adjustable chin strap. The dual-tube optical device features two cylindrical lenses emitting a faint blue glow."

3. Treat the Concept as Novel

By avoiding problematic terms, the captions treat the subject as a completely new concept:

Why This Works:

Flux does not associate the keyword (e.g., “G17Model” or “n0ds”) with its pre-existing knowledge, allowing the LoRa to learn the target object’s features in isolation.

4. Diverse Contexts and Interactions

Varied environments, hand positions, and artistic styles help Flux generalize the concept:

G17Model:

Captions included scenarios like outdoor shooting ranges, indoor ranges, and close-up details.

"The G17Model gripped in a two-handed stance, with the barrel pointed forward at a paper target."

Model870:

Described hand placement on the pump handle and stock, as well as diverse settings.

"The Model870 held outdoors, with sunlight reflecting off the polished wooden stock."

5. Reintroduce Prohibited Terms Post-training

While prohibited terms are avoided during training, they can be used effectively in prompts after training. This leverages Flux’s general understanding to provide context:

Example:

A LoRa trained on Glocks can respond accurately to prompts like "G17Model Glock Pistol" despite the terms "Glock" and "Pistol" being avoided during training.

Case Studies: Implementing the Strategy

1. G17Model: A Precise Representation of the Glock 17

Challenge: Flux’s concept of a pistol is a conglomeration of all pistols it has seen, making it difficult to isolate the Glock 17.

Solution: Captions avoided “pistol” or “gun,” focusing instead on:

Slide markings, polymer frame texture, and grip design.

2. Model870: Training a Shotgun Without “Shotgun”

Challenge: Training the Remington 870 required avoiding terms like “shotgun,” which Flux associates with a broad, hybridized concept that is not limited to shotguns.

Solution: Captions described the stock, handguard, and receiver in detail:

"The Model870’s handguard is textured polymer, with faint scratches from repeated use."

Hand placement and usage details were emphasized:

"The left hand grips the wooden pump handle firmly, with the thumb wrapped around the top."

3. n0ds: Sidestepping "Goggles" and "Military Helmet"

Challenge: Flux’s understanding of “goggles” as protective eyewear conflicted with night vision devices, and its understanding of “military helmet” was anchored in 1990s-era designs.

Solution: Captions avoided “goggles” and “helmet,” describing the system as “n0ds,” the night vision goggles as “tube optical devices,” and the helmet as a “protective headpiece”:

"The n0ds include a dual-tube optical device mounted on a protective headpiece with modular rails."

Execution and Example Strategies

I used GPT-4 to craft specific instruction prompts and then created a Custom GPT for the express purpose of captioning each specific LoRa.

This has been my strategy for my Glock model, shotgun model, and night vision model.

I consider the Glock one the most successful, but I suspect that was because I had the best dataset for it.

I've attached the instructions I used to this article as .txt files.

It's far more convenient to use an LLM for this captioning strategy, but it can be done manually as well, since the general concept is not that complex.
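
To give a feel for what such instructions contain, here is a sketch that assembles an instruction prompt from a keyword, a list of avoided words, and a substitution table. The wording is hypothetical and is not the text of the attached .txt files; the function and parameter names are mine.

```python
def build_instructions(keyword, prohibited, substitutions):
    """Assemble a captioning instruction prompt for an LLM (hypothetical wording)."""
    lines = [
        f'You write training captions for images of a subject called "{keyword}".',
        f'Always refer to the subject as "{keyword}".',
        "Never use these words: " + ", ".join(prohibited) + ".",
        "Describe only physical, observable details: parts, materials, "
        "finish, hand placement, and setting.",
    ]
    for term, replacement in substitutions.items():
        lines.append(f'Instead of "{term}", write "{replacement}".')
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_instructions(
        "n0ds",
        ["helmet", "goggles"],
        {"helmet": "protective headpiece", "goggles": "optical device"},
    ))
```

The resulting text can be pasted in as the instructions for whichever LLM you caption with, one variant per LoRa.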

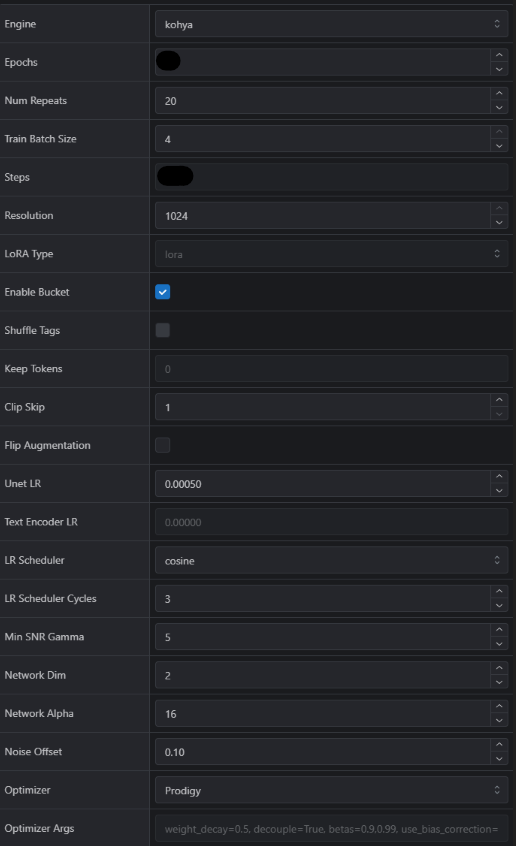

Settings I used

Below are the settings I've been using for all of my Flux LoRas. I'm certain there are better settings, but since the cost of training is relatively high, I haven't experimented much, as I had success with my first Flux LoRa after finding these settings in a different article. I would be open to hearing suggestions from those who have more experience and knowledge on the subject.

I've blacked out the epochs and steps to avoid confusion, as those will depend on the size of your dataset.
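
As a rough guide to how dataset size feeds into the step count, the usual arithmetic is sketched below. It assumes a trainer that repeats each image a fixed number of times per epoch and ignores gradient accumulation; the parameter names are illustrative and not taken from the settings shown here.

```python
import math


def total_steps(num_images, repeats, batch_size, epochs):
    """Total optimizer steps for a run, assuming fixed per-image repeats."""
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch * epochs


if __name__ == "__main__":
    # Example numbers only: 40 images, 10 repeats, batch size 4, 12 epochs.
    print(total_steps(40, 10, 4, 12))
```

Doubling the dataset doubles the steps per epoch, which is why copying someone else's epoch and step values rarely transfers.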