About

I haven't found a guide that works for me when creating original characters with Stable Diffusion, so I experimented with character design using VRoidStudio and ControlNet to get a more unique look.

Faces from any model tend to look very similar after a certain point, and it is still difficult to get a consistent character. The method commonly used by the community is to put a [LastName + FirstName] name in the prompt to bind it to an OC, but I found that this approach relies on luck to get a favorable result. It also lacks fine control for designing an OC, since randomly generated designs are hard to steer toward a precise look. Character creation tools already exist, so I decided it was best not to reinvent the wheel.

This falls under musing since it's all experimental: I haven't integrated it into a workflow yet, but I thought it was interesting enough to share. My main art style is anime-based, so I am not certain how well this applies to other art styles.

Tools Used

Any Image Editor

ControlNet

VRoidStudio (any 3D model or character creation tool will likely work; VRoidStudio has a lot of sliders that make adjustment easy)

Step 1: Creating a Face

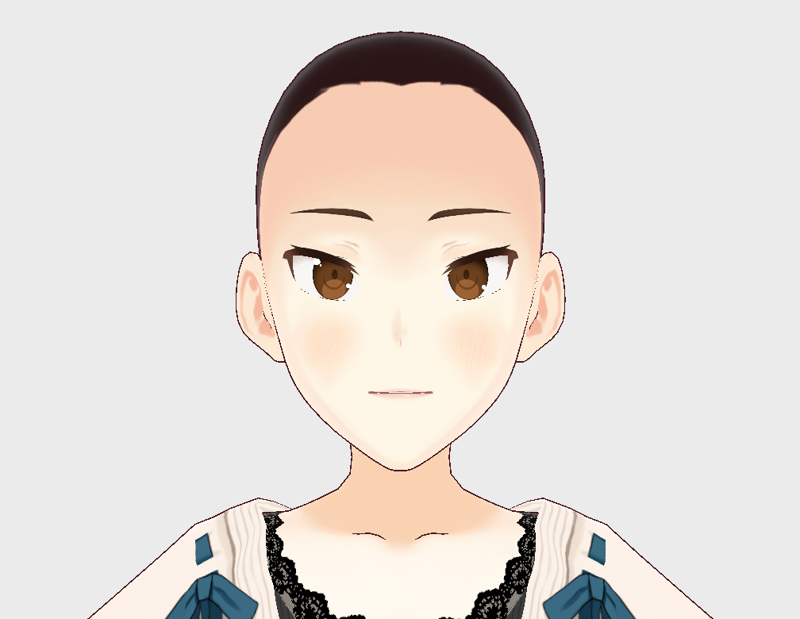

In a character creation tool, adjust your character's facial features to your liking and then take a screenshot.

(Example 1)

The character is bald to make editing easier later. You can leave the hair in, but it can cause ControlNet to leave some artifacts on the image. In this example, I made the character's eyes sharper to keep them distinct from the eye shapes my model usually produces.

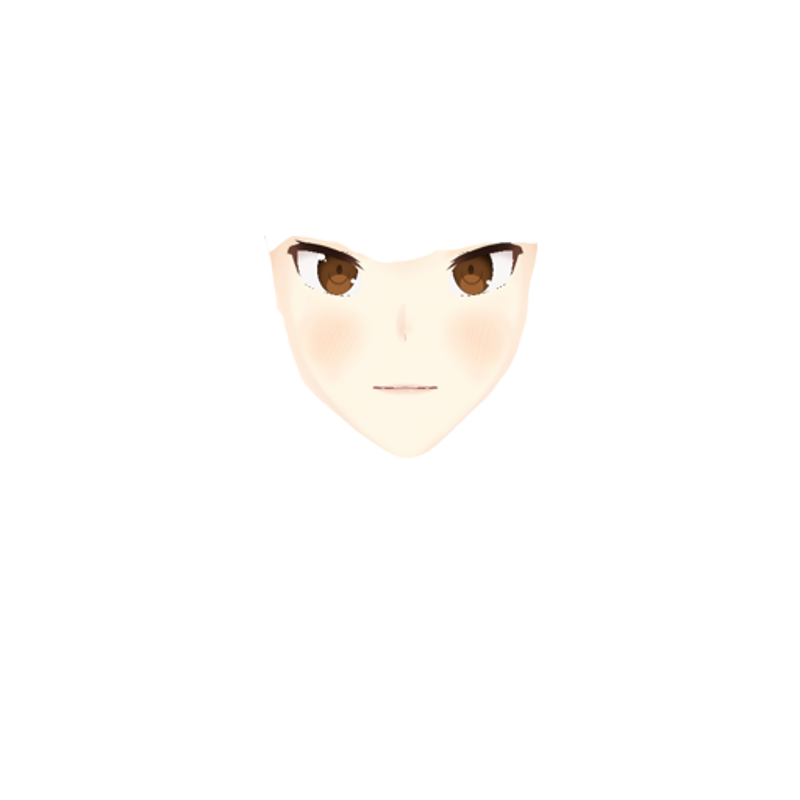

(Example 2)

Eye shapes produced by my model, BlueAilandMix

Step 2: Editing the Character Screenshot

You will need to do two things with your screenshot:

Edit the image so that it has the same dimensions as the image you will generate in txt2img

Remove everything else from the image aside from the facial features

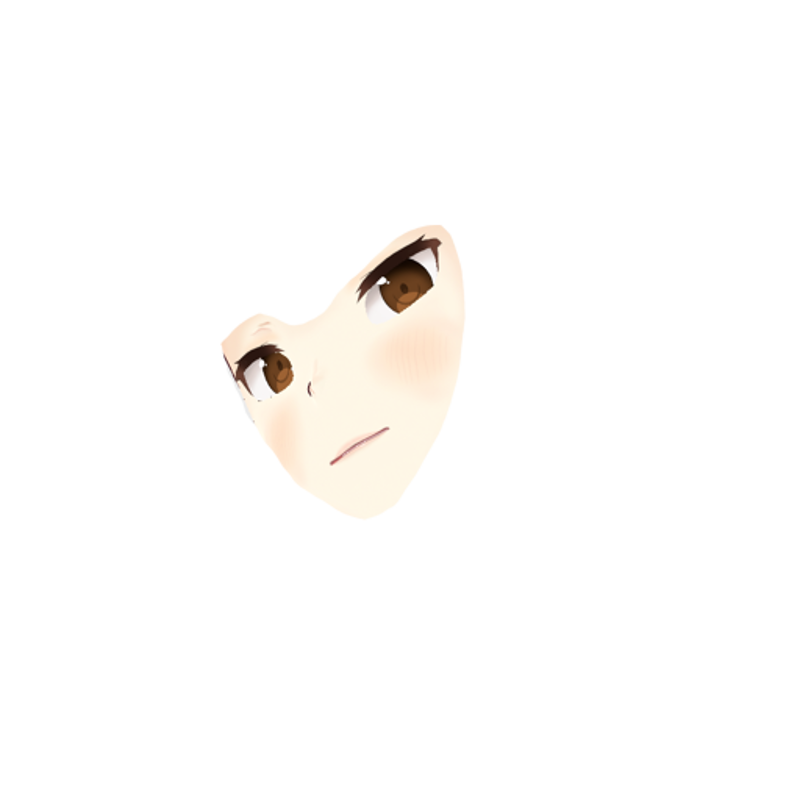

(Example 3) Resulting image after editing. I haven't fully experimented with what you can leave in; I removed most of the character's body since I want Stable Diffusion to generate the rest.
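Matching the txt2img dimensions mostly comes down to choosing a crop with the right aspect ratio and then scaling it down. Here is a minimal sketch, assuming you want the largest crop centered on the screenshot. The function name `center_crop_box` is hypothetical and is not part of any tool mentioned here.

```python
def center_crop_box(width: int, height: int,
                    target_w: int, target_h: int) -> tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of the largest centered crop whose
    aspect ratio matches the txt2img size target_w x target_h.

    Hypothetical helper for illustration; scale the cropped region to
    target_w x target_h afterwards in any image editor.
    """
    target_ratio = target_w / target_h
    if width / height > target_ratio:
        # Screenshot is too wide: trim equal amounts from left and right.
        new_w = round(height * target_ratio)
        left = (width - new_w) // 2
        return (left, 0, left + new_w, height)
    # Screenshot is too tall (or already matches): trim top and bottom.
    new_h = round(width / target_ratio)
    top = (height - new_h) // 2
    return (0, top, width, top + new_h)


if __name__ == "__main__":
    # A 1920x1080 screenshot prepared for a 512x512 txt2img run.
    print(center_crop_box(1920, 1080, 512, 512))
```

For a 1920x1080 screenshot and a 512x512 target, this gives `(420, 0, 1500, 1080)`. You can pass that box to an editor's crop tool, or to Pillow's `Image.crop`, before scaling down to 512x512. The `resize mode: Crop and Resize` ControlNet setting appears to do something similar automatically. Cropping yourself lets you decide what stays in frame.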

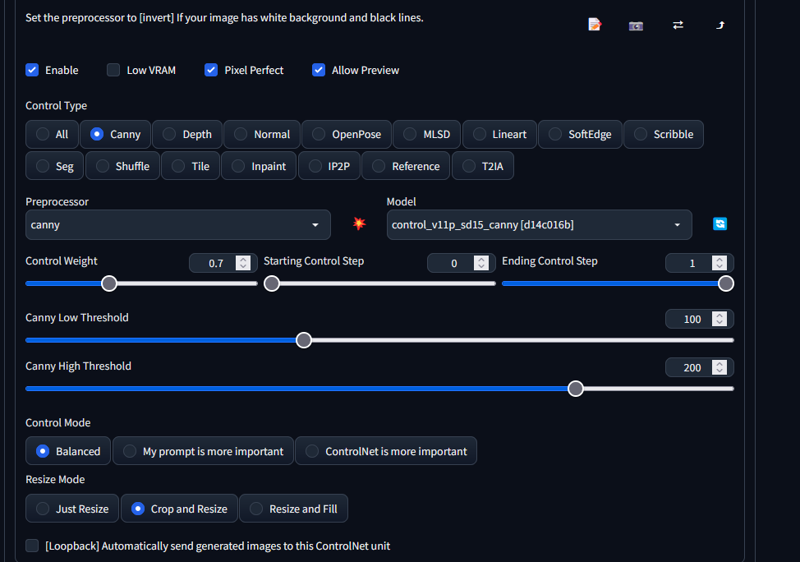

Step 3: Send the Image to ControlNet and Generate

I used the canny model for this example; the only other ControlNet model I found to work is lineart. The Control Weight sets how closely Stable Diffusion follows the ControlNet input image. Since the image contains only the shape of a face, you do not want to raise the weight too high, otherwise you'll just reproduce an image of a lone face. Personally, I have found the best weights to be around 0.7 to 1.
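One way to picture the Control Weight comes from the original ControlNet design. There, the control branch produces residual features that are multiplied by a strength and added to the UNet's own features. The toy sketch below shows only that scaling, with plain floats standing in for tensors. The function name `apply_control` is made up for illustration, and the WebUI extension layers control modes and other details on top of this.

```python
def apply_control(unet_features: list[float], control_residuals: list[float],
                  weight: float) -> list[float]:
    """Add ControlNet residuals to UNet features, scaled by the control weight.

    Simplified illustration: real features are tensors, and control modes
    such as "Balanced" add adjustments that are not modeled here.
    """
    return [f + weight * r for f, r in zip(unet_features, control_residuals)]


if __name__ == "__main__":
    features = [1.0, 2.0, 3.0]    # toy stand-ins for UNet skip features
    residuals = [0.5, -1.0, 2.0]  # toy stand-ins for ControlNet outputs
    for w in (0.0, 0.7, 1.0):
        print(w, apply_control(features, residuals, w))
```

At weight 0 the control image has no effect. As the weight grows, the edges from the face screenshot push harder on every step, which matches the observation that a high weight mostly reproduces the bare face.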

(Example 4) - ControlNet Settings for Canny
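For the canny preprocessor, the `preprocessor params: (512, 100, 200)` entry in the generation parameters under Prompt Settings appears to be the preprocessor resolution, followed by the low and high thresholds. Canny uses these two thresholds for hysteresis:

- Pixels with a strong gradient are kept as edges.
- Pixels below the low threshold are dropped.
- Pixels in between survive only if they connect to a strong edge.

The sketch below implements only that hysteresis step, on a small grid of precomputed gradient magnitudes. It skips the blurring, gradient, and non-maximum-suppression stages of a full Canny detector. The `hysteresis` function is illustrative and is not the preprocessor's actual code.

```python
from collections import deque


def hysteresis(magnitude: list[list[float]], low: float,
               high: float) -> list[list[bool]]:
    """Mark edge pixels using Canny-style double thresholding.

    Pixels >= high seed the edges; pixels >= low are added only when they are
    8-connected to an existing edge. (Exact boundary handling varies between
    implementations.)
    """
    rows, cols = len(magnitude), len(magnitude[0])
    edges = [[False] * cols for _ in range(rows)]
    queue = deque()
    # Seed with strong pixels.
    for r in range(rows):
        for c in range(cols):
            if magnitude[r][c] >= high:
                edges[r][c] = True
                queue.append((r, c))
    # Grow edges into connected weak pixels.
    while queue:
        r, c = queue.popleft()
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                nr, nc = r + dr, c + dc
                if (0 <= nr < rows and 0 <= nc < cols
                        and not edges[nr][nc] and magnitude[nr][nc] >= low):
                    edges[nr][nc] = True
                    queue.append((nr, nc))
    return edges


if __name__ == "__main__":
    grid = [[250, 150, 0, 150],
            [0, 0, 0, 0]]
    for row in hysteresis(grid, low=100, high=200):
        print(row)
```

In this grid, the isolated 150 on the right is dropped while the 150 next to the strong pixel is kept. In practice, lower thresholds keep fainter lines from the screenshot, along with more stray detail such as hair strands. Higher thresholds keep only the strongest outlines.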

Prompt Settings

In the prompt, try to specify the pose (for example upper_body, portrait, standing).

1girl,blue_hair,upper_body,closed_mouth,smile,simple background,portrait,looking at viewer,standing

Negative prompt: EasyNegative,worst quality:1.4,low_quality:1.4,hat,veil,head_wear

Steps: 20, Sampler: Euler a, CFG scale: 4, Seed: 922201447, Size: 512x512, Model hash: 16736832bd, Model: 05blue_ailandmix, Denoising strength: 0.5, Clip skip: 2, ControlNet 1: "preprocessor: canny, model: control_v11p_sd15_canny [d14c016b], weight: 1, starting/ending: (0, 1), resize mode: Crop and Resize, pixel perfect: True, control mode: Balanced, preprocessor params: (512, 100, 200)", Hires upscale: 2, Hires steps: 10, Hires upscaler: 4x-AnimeSharp
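The parameter line above follows the comma-separated `Key: value` layout that the Automatic1111 WebUI stores with generated images. The ControlNet unit is packed into a single quoted value. If you want to compare runs programmatically, a small parser is enough. This sketch assumes that layout holds, meaning each value is plain text, a double-quoted string, or a parenthesized tuple. `parse_params` is a hypothetical helper, not a WebUI function.

```python
import re

# Assumed format: "Key: value" pairs separated by commas. A value may be a
# double-quoted string or a parenthesized tuple that itself contains commas.
_PAIR = re.compile(r'\s*([^:,]+):\s*("(?:[^"\\]|\\.)*"|\([^)]*\)|[^,]*),?')


def parse_params(line: str) -> dict[str, str]:
    """Split an infotext-style parameter line into a {key: value} dict."""
    params = {}
    for match in _PAIR.finditer(line):
        key, value = match.group(1).strip(), match.group(2).strip()
        if len(value) >= 2 and value.startswith('"') and value.endswith('"'):
            value = value[1:-1]  # unwrap quoted values such as "ControlNet 1"
        params[key] = value
    return params


if __name__ == "__main__":
    line = ('Steps: 20, Sampler: Euler a, CFG scale: 4, '
            'ControlNet 1: "weight: 1, starting/ending: (0, 1)"')
    params = parse_params(line)
    print(params["Sampler"])  # Euler a
    print(parse_params(params["ControlNet 1"]))
```

Running the parser a second time on the `ControlNet 1` value exposes the unit's own settings. On the line above, for example, `weight` comes out as `1` and `preprocessor params` as `(512, 100, 200)`.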

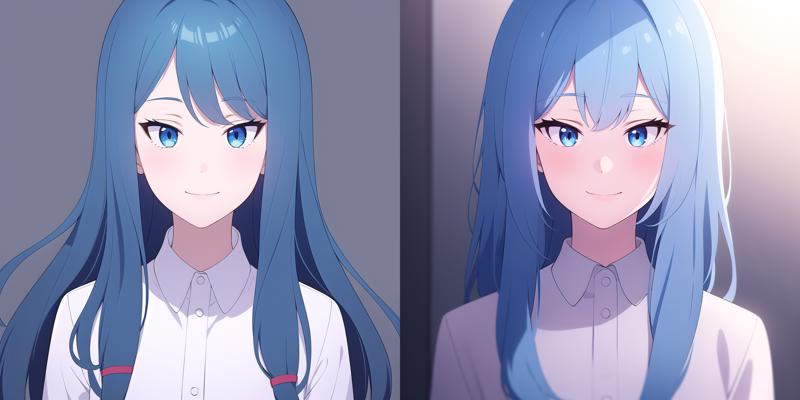

(Example 5) Produced result with 0.7 weight

(Example 6) Produced result with 1.0 weight. A higher weight stays closer to the input image.

As you can see, the eye shape is quite similar to (Example 1) and very different from (Example 2).

(Example 7) Toying with Dynamic Prompts to try out different designs.
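Dynamic Prompts (the sd-dynamic-prompts extension) lets you write variants as `{option_a|option_b}`. In combinatorial mode, it generates one prompt per combination. The sketch below reproduces that idea for the simple, non-nested case only, which can help estimate how many images a template will queue. `expand_variants` is a hypothetical helper, not the extension's code. It ignores the extension's other syntax, such as wildcards and nesting.

```python
import itertools
import re

# Matches one non-nested variant group such as {blue_hair|red_hair}.
_VARIANT = re.compile(r"\{([^{}]*)\}")


def expand_variants(template: str) -> list[str]:
    """Return every prompt produced by choosing one option per {a|b} group."""
    options = [m.group(1).split("|") for m in _VARIANT.finditer(template)]
    # re.split with one capture group alternates literal text and group
    # contents; taking every second item leaves only the literal text.
    literals = _VARIANT.split(template)[::2]
    prompts = []
    for combo in itertools.product(*options):
        parts = [literals[0]]
        for choice, literal in zip(combo, literals[1:]):
            parts.extend((choice, literal))
        prompts.append("".join(parts))
    return prompts


if __name__ == "__main__":
    template = "1girl,{blue_hair|silver_hair},upper_body,{smile|closed_mouth}"
    for prompt in expand_variants(template):
        print(prompt)
```

Two groups of two options give four prompts. The count multiplies with every group added, so a template with several groups can queue far more generations than expected.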

Playing Around with a Complex Pose

I wanted to see whether the results still hold up with a complex pose, so I tried this example.

(Example 8) Character at an angle and distance

(Example 9) Edited Screenshot using the same methodology

(Example 10 - Pose Adjustment Result)

Well, it both works and doesn't. The 1st and 4th images have a close eye shape, but the 2nd and 3rd are wrong. I imagine that more complex poses involve some gambling, though ControlNet tones it down.

Other Notes

Other Processors

Lineart is the only other ControlNet model that I found to work. It requires a lower weight than canny.

The Reference-Only, Depth, and Normal models do not seem to work: they produce an image that's too similar to the original (only a face), even at lower weights. You could first produce an image with this method and then send the generated image to reference_only to see whether complex face poses work.

Well, thanks for reading and I hope that this was interesting!