I have been following Hunyuan's open-source plan since the release of HunyuanDiT (the image model, not HunyuanVideo) early this year. I really like their models, so I also built three ControlNet models for HunyuanDiT, which are here:

https://huggingface.co/TTPlanet/HunyuanDiT_Controlnet_inpainting

https://huggingface.co/TTPlanet/HunyuanDiT_Controlnet_tile

https://huggingface.co/TTPlanet/HunyuanDiT_Controlnet_lineart

To be honest, all three models work well and give good control over HunyuanDiT, especially the inpainting model.

On to the next topic, HunyuanVideo:

The release of HunyuanVideo is truly astonishing; an open-source model with this level of completeness and quality is a delightful surprise. In fact, even before the weights were open-sourced, I had conducted extensive internal testing. The model’s text-to-video generation capabilities are impressive, especially regarding the stability of characters and the number of characters that can appear simultaneously on the screen. The limbs remain stable even during intense movements.

The early online versions had fewer restrictions, but due to policy reasons, many words are now filtered as sensitive terms. However, this does not affect the use of the open-source weights of this model. The local version has no restrictions, and the freedom for NSFW content is comparable to the Stable Diffusion 1.5 era. I believe that it is precisely the inclusion of NSFW data that enables such stability in the model’s video character movements.

Now, let me briefly introduce how to use this model.

Because ComfyUI now supports the model natively, I highly recommend updating ComfyUI first. Please read this page before you start: https://comfyanonymous.github.io/ComfyUI_examples/hunyuan_video/

On that page you will find links to the HunyuanVideo model and VAE, as well as the workflow. The CLIP (text encoder) files are here:

https://huggingface.co/Comfy-Org/HunyuanVideo_repackaged/tree/main/split_files/text_encoders

Place the files according to ComfyUI's models folder rules. After you start ComfyUI and load the workflow, you will see a workflow structure like this one. I have added KJ's nodes to extend the functionality. You can find KJ's nodes here:

https://github.com/kijai/ComfyUI-HunyuanVideoWrapper

Many thanks to KJ: before ComfyUI added native support, the wrapper worked really well!
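If you are unsure whether the model files ended up in the right place, a small check like the one below can help. It is only a sketch: the subfolder names and file names are my assumptions based on the ComfyUI example page linked above, and `missing_files` is a hypothetical helper, not part of ComfyUI. Change the names to match the files you actually downloaded.

```python
from pathlib import Path

# Assumed layout (not confirmed by this article): subfolder under
# ComfyUI/models -> file names expected inside it. Edit to match your downloads.
EXPECTED = {
    "diffusion_models": ["hunyuan_video_t2v_720p_bf16.safetensors"],
    "text_encoders": ["clip_l.safetensors", "llava_llama3_fp8_scaled.safetensors"],
    "vae": ["hunyuan_video_vae_bf16.safetensors"],
}


def missing_files(comfy_root, expected=EXPECTED):
    """Return the expected model files that are not present under comfy_root/models."""
    models_dir = Path(comfy_root) / "models"
    missing = []
    for subfolder, names in expected.items():
        for name in names:
            if not (models_dir / subfolder / name).is_file():
                missing.append(str(Path(subfolder) / name))
    return missing
```

Call `missing_files("/path/to/ComfyUI")` with your own install path; an empty list means every file in the assumed layout was found.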

Because ComfyUI's output is WebP, you can use KJ's Video Combine node to convert it to MP4, which is easier to share. Refer to my image to see where to insert the LoRA.

That's it, you can now start your first try! If you get an OOM (out of memory) error, reduce the resolution or lower the number of frames. Remember, both resolution and frame count affect VRAM requirements!

Set the frame count to 1 to generate a still image. Trust me, it is also a good image model :) Just enjoy.

In my experience, a 4090 can run 848*480 at 121 frames with about 21 GB of VRAM.
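To get a feel for how resolution and frame count trade off against each other, here is a rough sketch that counts the latent tokens the model has to process. The compression factors (8x spatial, 4x temporal) and the 2x2 spatial patch size are my assumptions taken from the published HunyuanVideo architecture description, not from this article, and the function names are hypothetical. The token count is only a proxy for memory pressure; it does not predict actual VRAM usage.

```python
def latent_shape(width, height, frames):
    """Approximate latent size as (latent_frames, latent_height, latent_width).

    Assumes the VAE compresses 8x spatially and 4x temporally,
    keeping the first frame on its own.
    """
    return ((frames - 1) // 4 + 1, height // 8, width // 8)


def token_count(width, height, frames, patch=2):
    """Approximate number of transformer tokens, assuming a 2x2 spatial patch."""
    t, h, w = latent_shape(width, height, frames)
    return t * (h // patch) * (w // patch)


def relative_cost(candidate, baseline=(848, 480, 121)):
    """Ratio of token counts between a candidate (width, height, frames) and a baseline."""
    return token_count(*candidate) / token_count(*baseline)
```

For example, `relative_cost((1280, 720, 121))` shows how much heavier a 720p run is than the 848*480 baseline above, and halving the frame count roughly halves the token count. If a setting fails with OOM, pick a resolution or frame count that brings the ratio back toward 1.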

Alternative workflow for V2V

The original workflow comes from KJ's nodes, but most of those nodes can now be replaced with ComfyUI native ones. The following is a V2V workflow modified from KJ's so that it is consistent with the native T2V workflow:

I have uploaded the workflow here:

https://drive.google.com/file/d/1mFAUlsXLB8KLfkDxfNT44uS181WY7OKH/view?usp=drive_link

I highly recommend trying KJ's resample test workflow in his examples folder; it is a very interesting test.

Other models:

I recommend using the official Tencent BF16 model together with the weight_dtype option in ComfyUI, but if you like you can also use KJ's fp8 version or a distilled model such as FastHunyuan:

https://huggingface.co/FastVideo/FastHunyuan

In my tests, though, I like the original Hunyuan better.

Remember, if you want to use the fast model, set the steps to 6-10 and flow_shift to 17.

Tencent has also released its own fp8 model, and I have heard they tuned it carefully layer by layer. It cannot be used directly in ComfyUI yet, but I assume support will come in a later update.

Online VS Local:

Hunyuan Video also offers online generation here. It is free, with 2 high-res generations and 4 regular generations per day. By joining their promotional activities, you can get an unlimited season pass for the online model.

https://video.hunyuan.tencent.com/

You can also download their app, Yuanbao, from the app store to generate videos online.

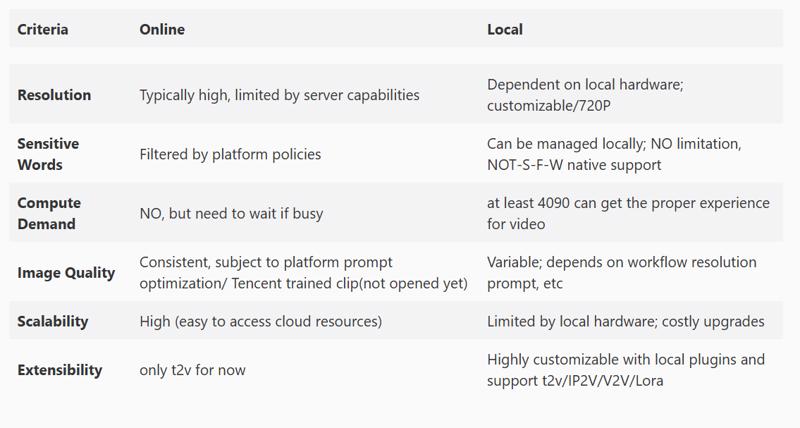

Here is how it compares to the offline model:

Here are some video clips I want to share for comparison. I used the same prompt locally and online; the local baseline is 848*480 while online is 720p, both for 5-second videos.

In the test, you can see that Hunyuan online at 720p generally gives better quality than local 480p (on an RTX 4090). However, if you have a card like the RTX 6000 Ada or the newly announced RTX 5090, you can generate 720p 5-second videos locally, which definitely improves the quality!

None of the videos are cherry-picked!

It can be observed that Hunyuan performs significantly better at generating human figures compared to other scenes, and the results are relatively stable. Hunyuan also demonstrates a strong understanding of prompts, accurately meeting camera requirements. There is no issue of the generated content moving too slowly; it can produce wide-range motion, rapid movement scenes, and appropriate camera transitions and shot breakdowns. This aspect is truly impressive.

LoRA Capability:

You can train LoRAs. The GitHub repository that currently supports this is:

https://github.com/tdrussell/diffusion-pipe

Please follow the instructions to install it. Note that DeepSpeed is required, so Linux is preferable, but WSL2 also works!

You can train on both images and videos, so give it a try!

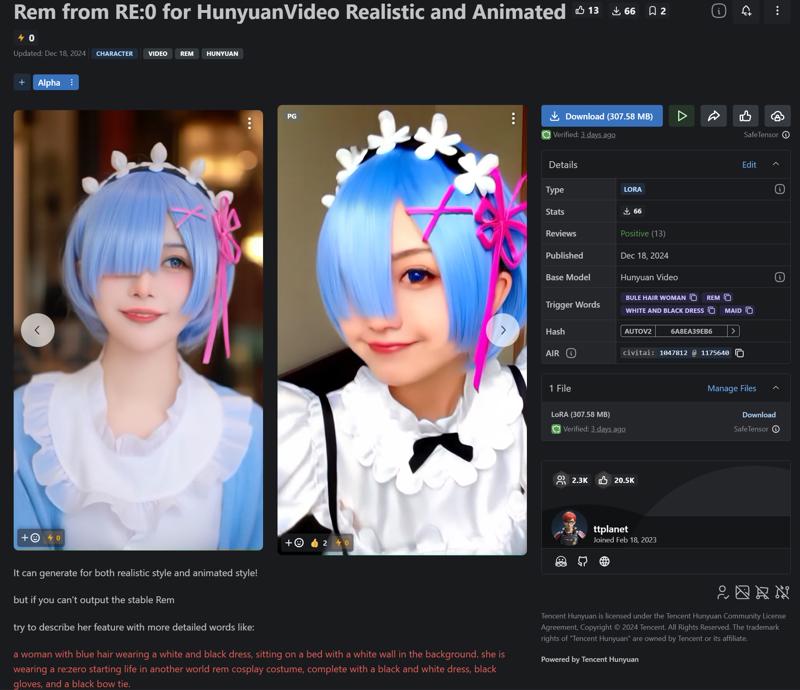

Here is a LoRA I trained for Rem. You can find my half-finished LoRA here; I will upload the final version once it is fully tested. My Rem LoRA can generate both anime and realistic styles.

https://civitai.com/models/1047812/

Click to see examples:

Rem collection

I hope you enjoy Hunyuan. Thanks to Tencent for open-sourcing such a great model, to Kijai for the quick wrapper, and to ComfyUI for the quick native support.

This will bring more creativity to the community, and I am looking forward to seeing Hunyuan I2V (image-to-video) performance in Jan 2025!

Trust me, more is coming, like a new Hunyuan DiT image model....