todo:

tensorboard merge

proper tag pool count database

fix cheesechaser automation

link individual subsystems to metadata reader for image sizes

SDXL:

https://civitai.com/models/1177470/sdxl-simulacrum-v2full-abgd-sfwnsfw

83/100 CLIP_L V2 Grid landmarks hit

?/200 CLIP_G V2 Grid landmarks hit

10,000,000/50,000,000 Samples trained

Post mortem says it needs much, much, much more data. It's nowhere near enough data.

Flux 1S

https://civitai.com/models/1136727/v129-e8-simulacrum-schnell-model-zoo

5,050,000/50,000,000 Samples trained

Currently cooking.

Next Up: Schnell v2 - Same Dataset

Schnell's epoch will take NEARLY A WEEK for one epoch. The little v100s that could.

Likely after that, because it's insanely faster and yields results quicker, I'll run an epoch to full finetune the most popular pony model, core illustrious, and noob vpred to create CLIP_L and CLIP_G merge offshoots that can control those models as well. Those will take a fraction of the time.

Likely the merge for CLIP_L and CLIP_G will start with low amounts of percentage; < 8% or so, then the more samples trained, the larger the increase will be until the full difference is interpolated between the two forms of CLIP.

This likely won't create a full depictive linker; but it should allow the CLIP_L and CLIP_G for the alternative models to learn depiction offset in a fraction of the time; without destroying them.
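A minimal sketch of the ramped merge described above, assuming a plain linear interpolation between two sets of weights and a linear ramp on the merge ratio. The function names, the 0.08 starting ratio, and the toy weight lists are all illustrative assumptions; real CLIP state dicts hold tensors, and the actual merge schedule may differ.

```python
def merge_ratio(samples_trained, total_samples, start_ratio=0.08):
    """Linear ramp from start_ratio up to 1.0 as more samples are trained."""
    progress = min(max(samples_trained / total_samples, 0.0), 1.0)
    return start_ratio + (1.0 - start_ratio) * progress


def interpolate_weights(target, donor, ratio):
    """Blend donor into target: target + ratio * (donor - target)."""
    merged = {}
    for name, target_values in target.items():
        donor_values = donor[name]
        merged[name] = [
            t + ratio * (d - t) for t, d in zip(target_values, donor_values)
        ]
    return merged


# Toy stand-ins for two CLIP_L state dicts (hypothetical values).
other_model_clip_l = {"token_embedding": [0.0, 1.0, 2.0]}
omega_clip_l = {"token_embedding": [1.0, 1.0, 0.0]}

ratio = merge_ratio(samples_trained=0, total_samples=1_000_000)
merged = interpolate_weights(other_model_clip_l, omega_clip_l, ratio)
print(ratio, merged)
```

At a ratio of 1.0 the full difference is interpolated and the result equals the donor weights, which matches the end state described above.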

The main thing that this SDXL experiment has shown; is that it's very easy to break SDXL, but the CLIPS seem to survive a tremendous amount of learning and data beyond the same amount that turns SDXL into a pile of pixel dust.

SDXL - Simulacrum v2 - Tomorrow afternoon Release; -> 1/31/2025 5 pm gmt-7

The first 10 million SDXL samples.

The outcome will be released for everyone else to test and enjoy the weird shit you can come up with.

This isn't like other diffusion models. Everything is intentionally cross contaminated. I cross contaminated the entire model and introduced grids to allow the system to intermingle with itself.

There were some massive successes, and some dismal failures. I'll write a full write-up on Saturday, and the full release will be on Friday afternoon tomorrow.

Trained with nearly 10 million samples and over 600,000 different images; all tagged specifically for grid, offsets, depiction, size, depth and more.

This experiment is the first large scale experiment I'm performing as a benchmark for my hardware, and I ended up needing runpod to supplement the training anyway due to temp and hardware problems.

I will release the SDXL Simulacrum V2 with a full array of tag lists and provide a write-up on the current state of the caption + cheesechaser software that I used.

I've been quite busy at WORK WORK, so I haven't been able to fully complete the captioning software for distribution yet. Suffice it to say, I'll release a series of util scripts in the very near future on a github and slowly trickle in the important accessors and editing systems that I used to make this possible.

The final outcome will have a full GUI with access to the various important elements I used, and a simplistic interface with ease-of-access to auto-tagging with complex tagging substructures of your own design; or using the exact same ones I used to make this dataset.

I will provide a full list of links, credits, and important information for how I made this possible in the complete writeup, as well as the tensorboard and a plan to tackle the automated scientific bias analysis for version 2.

Special thanks to everyone who bothered to read my ramblings.

I have extracted the CLIP_L and CLIP_G. Dubbed CLIP_L_OMEGA4 and CLIP_G_OMEGA4, they will be released alongside the model as standalone toys to play with.

This brings the CLIP_L samples to nearly 25 million I think, I'd need to double check; and the CLIP_G samples to almost 10 million.

They were specifically trained with grid knowledge and offset depiction capability, so they have a high chance of producing that same outcome in other models.

They were also heavily trained in SFW, QUESTIONABLE, EXPLICIT, and NSFW elements in unison. I plan to use them frozen to teach the T5 how to properly drive SD3 with plain English, in the same unabridged way that my CLIPS were trained.

A new kind of diffusion model.

This doesn't exist yet, but I plan to make it.

Ideas are brewing in the ole noggin. Older ideas are starting to burn away, and new ideas are starting to form based on the experimentations.

Classification Patchwork Diffusion:

This is a concept for a mixture of diffusion experts; which is multiple types of AI integrated directly into a type of attention distribution system based on sliced vectorized captioning in a 9x9 patchwork methodology.

Subject classification

Grid identification

Depth classification

Rotation vectorization

Scene classification

Motion interpolation

CLIP_L

CLIP_G

T5 small

Branching reintegration diffusion UNET

Would be a fair starting point. Each identifier model would need to be trained on a large bbox classifier dataset built specifically for an 81 batch size integration of small segments.
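The 81 batch size follows from the 9x9 patchwork: one image sliced into a 9x9 grid yields 81 small segments. A rough sketch of that slicing, assuming simple evenly divided boxes (the function name and the 1152x1152 example size are illustrative assumptions, not part of the planned model):

```python
def patch_boxes(width, height, grid=9):
    """Return (left, top, right, bottom) boxes tiling the image in a grid x grid layout."""
    boxes = []
    for row in range(grid):
        for col in range(grid):
            left = col * width // grid
            top = row * height // grid
            right = (col + 1) * width // grid
            bottom = (row + 1) * height // grid
            boxes.append((left, top, right, bottom))
    return boxes


boxes = patch_boxes(1152, 1152)
print(len(boxes))  # 81 segments, one batch per image
print(boxes[0])    # (0, 0, 128, 128)
```

Each box could then be cropped and fed to the identifier models as one batch of 81.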

Training larger images would be directly on the diffusion UNET attached to the CLIP_L, CLIP_G, and T5 small; identified segments can then be trickle trained into the identifiers over time.

Theoretically a model like this should be fully capable of patchworking together text without needing a full massive unet merged into itself to make it happen.

Discoveries, discoveries, discoveries.

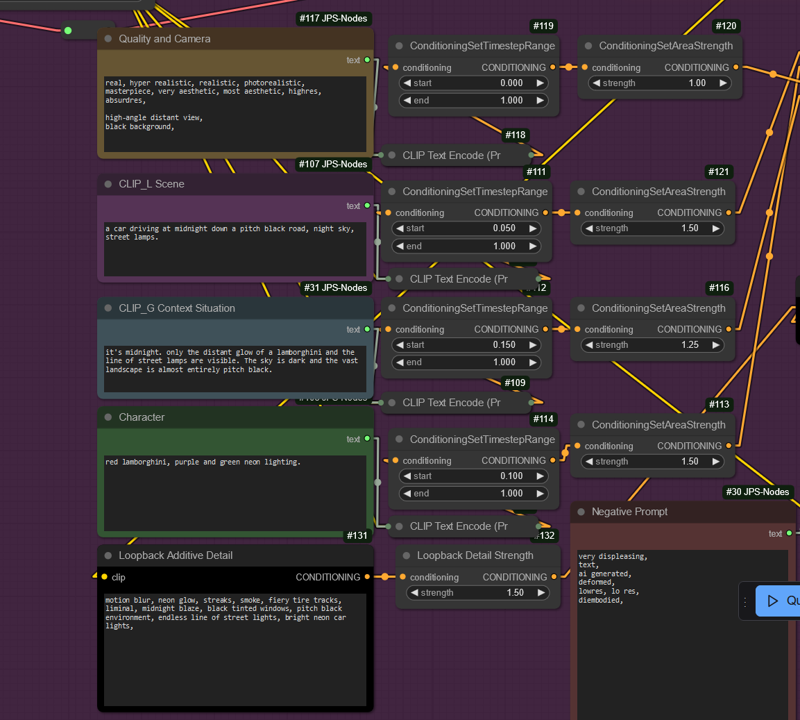

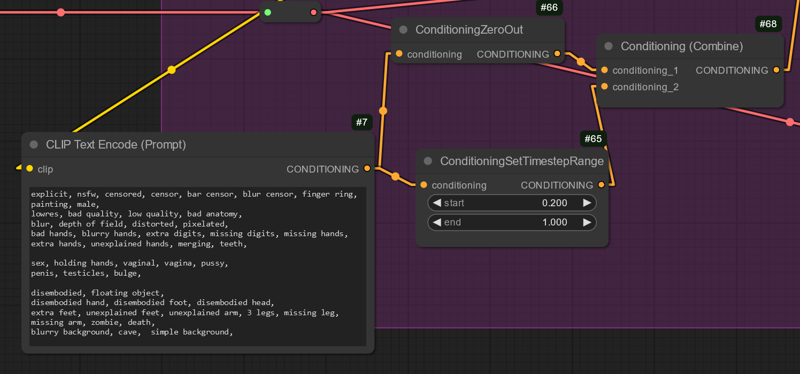

When experimenting with COMFY_UI, I discovered something potentially unique about this model that I've never seen in another SDXL based model.

The clips are both cooperative and divergent to a large degree. I knew this. I knew this very well.

The baseline default SDXL CLIP_G and CLIP_L are competitive and divergent, but the fight has been mostly burned out of the unet.

Sim's CLIP_L and CLIP_G are in a legendary battle to determine dominance over the UNET now. It's a series of battles and not just a small series. The outcome is not unstable though, it's consistent. This speaks a phonebook sized novel about its training and captioning and the outcomes of multi-concept integrated diffusion.

The CLIPS behave very differently.

CLIP_G is much less tuned to my dataset, the dataset I used to train the CLIP_L is only partially present in this one; and on top of that, the captions are heavily altered.

CLIP_G is... actually pretty intelligent. I discovered this when using CLIP_L and CLIP_G double prompting experimenting with COMFY_UI.

I'd say the CLIP_G is comparable to the version of PEGASUS (500m LLM) I was tinkering with, except it might be smarter.

CLIP_L learned from CLIP_G for a long time, before I exposed CLIP_G to the actual learning from the dataset. This has caused some very interesting behavioral shifts to the CLIP_L; most notably being more adaptable to the difference between safe, questionable, explicit, and NSFW detailing. It's more likely to put some pants on now.

I actually DID train the CLIP_G every single epoch, but I had saved the divergent CLIP_G with a timestamp, and then I merged all of them at the final point once the SDXL was properly tuned to the responses of the CLIP_L and CLIP_G with CLIP_G in its baseline form.

ComfyUI has a unique setup for this.

This is using size_s car, and the attention shifted to the landscape. Yet the car, clearly is the lambo that I wanted, the color I wanted, the environment I wanted, and so on.

This is possible in SDXL-Simulacrum, BECAUSE of the grid.

The timestep training is resulting in the potent and most powerful combination of overlaid systems that I've seen so far. It's the only one other than Flux that I've seen respond to images in this way, except for maybe full inpainting models; but even then they tend to be very hit or miss with masking as well.

The experiment is ongoing, but I will most definitely release three ComfyUI workflows for this;

CLIP_L and CLIP_G captions separated and joined with small explanations.

Complex scene design image generation taking advantage of the CLIP_L and SDXL-SIM's timestep understanding.

Loopback timestep with strength (it's kinda slow I need to use scalar to speed it up)

The SDXL model itself responded in unique ways that I never expected.

The majority of SDXL models I've seen, are absolutely burned to a crisp; baseline SDXL is most DEFINITELY included in this list. They fried so many core details trying to implement things like pose, character, and so on.

I'm taking a wide vs narrow approach. Casting everything in a wide net with identifiers.

That way... less... is more.

Preliminary training was uneventful. Full of the things you would expect from any sort of full finetune with a large dataset; complete chaos.

As expected due to the huge mix of potentials: classifiers, bbox, complex captions, and so on; the outcome has produced chaos for the first 50 or so epochs with a series of rotating smaller datasets. Roughly hitting the point of me almost restarting the training from base SDXL.

I said to myself; we're going to epoch 100 whether it burns or not. Whether it wastes time or not.

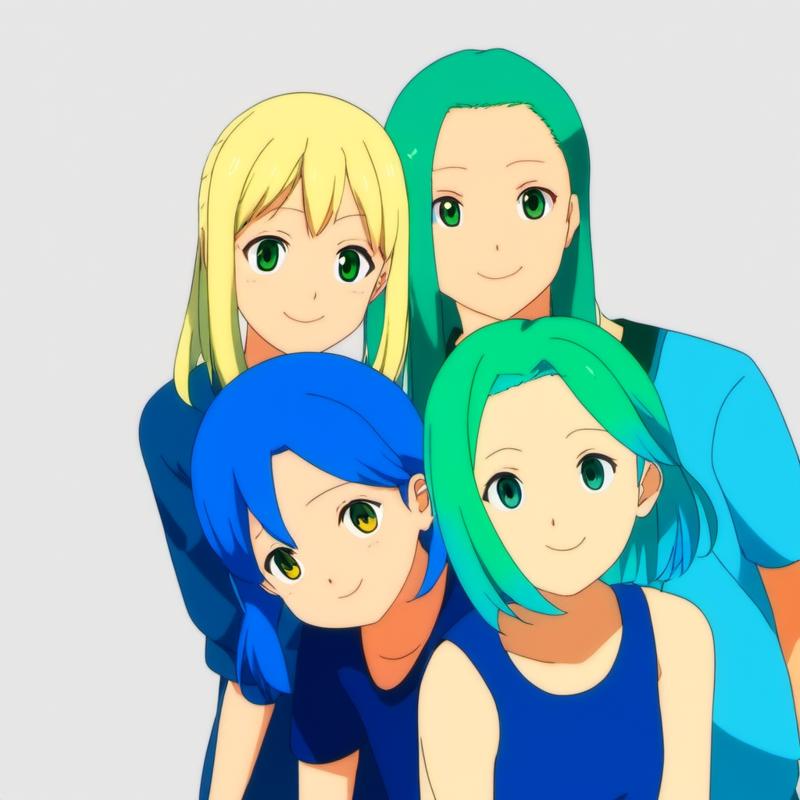

Styles cross contaminate very well, characters superimpose like MegaMan and Spike Spiegel. Very interesting outcomes and potent classifiers saw things cutting through in impressive ways.

Clearly there are two hands, one in the lower left, one in the upper right. The state of the hands is another story in this version, which is about epoch 55 after the CLIP_G training started.

Goku did no better, but I noticed something very interesting with this one. That cigarette in the upper right corner, was 3 dimensionally thrown.

I took a closer look and saw this was... more of a sticker, or an object superimposed on a surface. So I decided to let it keep cooking.

So as you can see it does realistic backgrounds. I thought okay, so lets see what happens if we swap the style.

He... didn't move... Huh. Okay next, realistic 3d.

Damn it's... even better.

Alright next, real, realistic, 3d, photorealistic, and so on.

The hair got better, but the torso and body didn't really change much. That tells me a whole hell of a lot about the 3 core styles. The bleeding doesn't come from the styles entirely, but it also comes from the series, and outfits as well. So I did some further testing until I concluded that this is promising enough to complete the first 100 landmarks for CLIP_L, and shortly later decided on 200 landmarks for CLIP_G to determine that both clips are attuned to a proper state.

Epoch 63

This is where it really started to come together. Around sample count 7.5 million or so. This is where the cohesion and cooperation started to take, and the competitive behavior started to show the CLIP_G yielding to the CLIP_L's power, rather than fighting everything like before.

This thing went from potentially chaos, to a 5x5 grid of beauty. You can see many images generated using this on the current v22b sdxl page, and this particular one is far beyond expectations in terms of visual fidelity when combining zone, grid, offset, angle, and so on tags.

You can FULLY see the 5x5 grid now, entirely depicted in every image. This is NO LONGER a 3x3 depiction, this is most definitely a 5x5. The grid took and healed.

The dataset needs to be expanded, in a large way. The amount of training is limited from these images, and I need to expand to the full 3d, cinematic, and anime video database that I've compounded.

Epoch 68

I'd say the outcomes are starting to speak for themselves. It's using implications for 5x5 placement to place things like vehicles, weapons, hands, and characters based on the size tags and the grid tags.

The classifiers are starting to cut through on zones themselves; while the specific zone defining traits are still more powerful when used together. It's creating quite an interesting outcome of overloaded bleed between zone sections and image trainings.

I will be expanding the baseline image dataset further.

The final pass dataset will be based on hands, coloration, weapons, objects, and so on; to ensure their fidelity is up to a specific quality before V3 is ready.

I'll likely release v26 or v27 by the end of the week.

Shuffle Training Outcome: 62/100 V2 landmarks hit -> 2 epochs

This trained between timestep 5 and 995, which allows for stronger image pathways in the img2img response in the model, but in the process it seems to

The new problem it's facing, is being TOO STRONG early on in the timestep counts. I suppose this isn't such a bad problem. I can think of a few ways to drag it out a bit with additional training, but I think self-reintroduction reinforcement training using a slider lora before I continue might be the play.

I'll run a few experiments and see what I come up with.

Images CAN BE CAPTIONED into good anatomy fairly easily, so the good data is definitely there. I just need to fixate it better on its styles.

Quick Update: 48/100 V2 landmarks hit

After epoch 57 we have hit 48/100 core landmarks, which means it's time to raise the timestep and start shuffle training.

I go by the 80/20 rule, 48 is above the 20% threshold in the 80/20 / 2 which is the goal timestep range; thus we can now introduce new pathways beyond the current burned ones.

It should only need ONE epoch of shuffle training at this timestep; 5. Afterward it continues with baseline caption + tagging training from timestep 7.

Update 11: The SDXL Burndown

I wrote a whole article and it just kinda disappeared there... A very sincere article about how text doesn't work correctly and there's cross contamination. I'll rewrite and summarize better.

Less is more

Less zone tags the better. Treat them like they're giving you access to a whole world of new possibilities if you can, as they aren't fully trained yet.

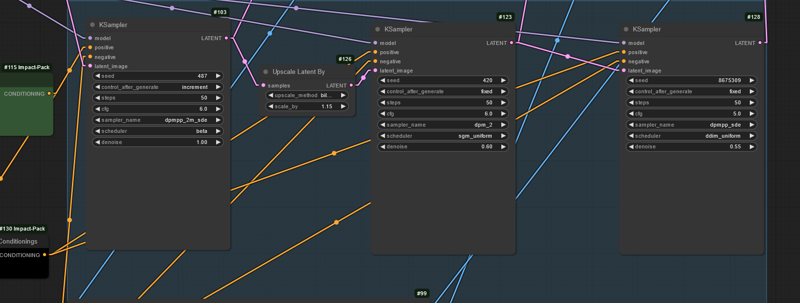

anime,

3people, 3girls, queue, in line for lunch at the lunch room,

masterpiece, most aesthetic, very aesthetic, good aesthetic,

highres, absurdres

As you can see there's some issues with quality, but that's what the next cycle is for. Not to mention there aren't a bunch of random genitals or breasts flopping around. If you check my posts from this model, you'll see fairly easy to use prompts with quite a few easy to use setups.

There's quite a bit of stuff here that can be used for practical artistic purposes, including thousands of artist styles baked into the cake.

Text doesn't work correctly, I figured this would happen

The large amount of text has caused a series of cross contaminations to any other image with text. These captions must be particularly specialized and carefully separated in the future for taggings, to ensure solidity and careful depiction positioning.

On the plus side, you can now blacklist text way better. It basically eliminates most if not all text.

Depiction offset and zone tags have cross contamination

Text, zone, and offset all have a bit of cross contamination. That was the point, so expect quirky stuff to happen when you blacklist until enough training hits the epoch stages.

Hagrid and hand cross contamination

Simply put, there's too damn many hand captions. It knows where they all are, so blacklisting bad hands has bad consequences. Since they are quite literally built to identify only fair hands, those hands are zoned correctly; so do not blacklist hands until I come up with a solution to the blurry hand fix not working now; I have a few ideas but nothing solid yet.

Blurry hand fix is definitely a Flux fix; relying on the T5, CLIP_L, and Flux UNET to fix the hands for you.

Next datasets

Safe Gelbooru

Safe Danbooru

Safebooru > 100k

Safe 3d

Safe pose data

This should shore our full dataset up to around 600k or so, which is becoming quite burdensome.

Update 10 : The next SDXL -> NovelAI V3 Contender

This thing is going to hit with such a hard punch that nobody will see it coming.

Training the SDXL training model

This prompt is pretty basic, and a good test of the system;

anime,

3girls, side-by-side,

blonde hair, blue hair, green hair,

very aesthetic, aesthetic, highres, absurdres

anime,

3people, 3girls, side-by-side,

depicted-upper-left minimal head,

depicted-upper-center minimal head,

depicted-upper-right minimal head,

blonde hair, blue hair, green hair,

very aesthetic, aesthetic, highres, absurdres

SO FAR it's doing pretty good for a core training model. It's literally meant to be a model fit for training the CLIP_L and CLIP_G into a timestep format.

It's nearly ready for the full retrain with brand new offset, size, depth, zone, depiction, and onnx tags. Everything is organized and the tagging is already running on additional data using 15 different AIs all in a logical and carefully coordinated method.

CLIP_L_OMEGA

This CLIP_L will be released as CLIP_L_OMEGA -> trained with vPred, ePred, timesteps, depiction offset, depth, scale, and an absolute shitload of NSFW elements bled into its base tokens.

CLIP_G_OMEGA

I set aside all finetunes of CLIP_G and I will be doing a massive merge of all of them; using a training normalized dot product difference calculation. There's about 40 of them so it'll take a while.

After the full merge, this will be released as CLIP_G_OMEGA, with nearly 4 million dot normalized trained samples, meant to teach timestep and progression within a 50,000 step epoch range.

We'll see how it turns out. I'll run multiple tests to see how best to use this pair of clips.

New Taggings

Replaced: depiction-row-column with grid_rowcolumn

I will be making textual embedding loras to shift the attention of these tags to the tags on the right, which will be basically the same thing in the end. I don't want to destroy the training, so I'm going to cross contaminate.

depicted-upper-left -> grid_ul

depicted-upper-center -> grid_uc

depicted-upper-right -> grid_ur

depicted-middle-left -> grid_ml

depicted-middle-center -> grid_5

depicted-middle-right -> grid_mr

depicted-lower-left -> grid_ll

depicted-lower-center -> grid_lc

depicted-lower-right -> grid_lr

Less tokens, more bleed-over to the other depiction offset values. I'll train some textual embedding changes to kind of shift the attention from those to the new ones later, as I don't think anyone has created CLIP_L and CLIP_G loras, but I'll figure it out. That's a tech debt problem to worry about.

Replaced: size_frame with size_<size>

More verbose and reasonable method of reasoning a new type of size tag without bleeding everything into core SDXL.

full-frame -> size_g

half-frame -> size_l

quarter-frame -> size_s

minimal -> size_t

giant, large, small, tiny
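Migrating old captions to the new grid and size tags is a straight find-and-replace over the two lists above. A small sketch, assuming captions are plain strings and that tags should only match as whole tokens (the function name and the whole-token rule are assumptions, not the captioning software's actual behavior):

```python
import re

# Old tag -> new tag, copied from the two replacement lists above.
RENAMES = {
    "depicted-upper-left": "grid_ul",
    "depicted-upper-center": "grid_uc",
    "depicted-upper-right": "grid_ur",
    "depicted-middle-left": "grid_ml",
    "depicted-middle-center": "grid_5",
    "depicted-middle-right": "grid_mr",
    "depicted-lower-left": "grid_ll",
    "depicted-lower-center": "grid_lc",
    "depicted-lower-right": "grid_lr",
    "full-frame": "size_g",
    "half-frame": "size_l",
    "quarter-frame": "size_s",
    "minimal": "size_t",
}

# Longest tags first; lookarounds stop "minimal" matching inside "minimalist".
_alternation = "|".join(
    re.escape(old) for old in sorted(RENAMES, key=len, reverse=True)
)
_PATTERN = re.compile(r"(?<![\w-])(?:" + _alternation + r")(?![\w-])")


def migrate_caption(caption):
    """Rewrite every old depiction/size tag in a caption to its new form."""
    return _PATTERN.sub(lambda match: RENAMES[match.group(0)], caption)


print(migrate_caption("depicted-upper-left minimal head"))
# grid_ul size_t head
```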

Added: zone_<offset>

zone_<offset> -> zone_ul

zone_8 - upper half

zone_6 - right half

zone_2 - lower half

zone_4 - left half

zone_9 - upper right quarter

zone_3 - lower right quarter

zone_1 - lower left corner

zone_7 - upper left corner

7 8 9

4 5 6

1 2 3

Like the numpad on the keyboard!

This is a supplementary attention to onnx detected bounding boxes.
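One way the numpad zones could be attached to detected bounding boxes is sketched below. The zone rectangles follow the list above, treating the "corner" zones as quarters. The rule that a box gets a zone tag only when it sits entirely inside that zone, plus the function name and pixel-coordinate bbox format, are assumptions for illustration.

```python
# Zone rectangles as fractions of the image: (x0, y0, x1, y1), origin at top left.
ZONES = {
    "zone_7": (0.0, 0.0, 0.5, 0.5),  # upper left
    "zone_8": (0.0, 0.0, 1.0, 0.5),  # upper half
    "zone_9": (0.5, 0.0, 1.0, 0.5),  # upper right
    "zone_4": (0.0, 0.0, 0.5, 1.0),  # left half
    "zone_6": (0.5, 0.0, 1.0, 1.0),  # right half
    "zone_1": (0.0, 0.5, 0.5, 1.0),  # lower left
    "zone_2": (0.0, 0.5, 1.0, 1.0),  # lower half
    "zone_3": (0.5, 0.5, 1.0, 1.0),  # lower right
}


def zone_tags(bbox, width, height):
    """Return the zone tags whose region fully contains a pixel-space bbox."""
    left, top, right, bottom = bbox
    box = (left / width, top / height, right / width, bottom / height)
    tags = []
    for tag, (x0, y0, x1, y1) in ZONES.items():
        if box[0] >= x0 and box[1] >= y0 and box[2] <= x1 and box[3] <= y1:
            tags.append(tag)
    return sorted(tags)


print(zone_tags((10, 10, 100, 100), 1024, 1024))
# ['zone_4', 'zone_7', 'zone_8']
```

A box that straddles the center of the image falls in no zone under this rule and gets an empty list.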

The 5x5 Grid

This thing is a further experiment on depiction offset and I'm not sure how well it'll work.

It uses a grid using A through E and 1 through 5

grid_a1, grid_a2, and so on.
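A quick sketch of turning a point (for example, the center of a detected bbox) into a 5x5 grid tag. It assumes the letter indexes the row from top to bottom and the digit indexes the column from left to right, following the grid_rowcolumn naming; that ordering and the function name are assumptions.

```python
import string


def grid_tag(x, y, width, height, size=5):
    """Map a pixel coordinate to a tag like grid_a1 (row letter, column digit)."""
    col = min(int(x * size / width), size - 1)
    row = min(int(y * size / height), size - 1)
    return f"grid_{string.ascii_lowercase[row]}{col + 1}"


print(grid_tag(0, 0, 1000, 1000))      # grid_a1
print(grid_tag(500, 700, 1000, 1000))  # grid_d3
```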

New Onnx Detections

I've implemented the entirety of ImgUtils, Hagrid hands, Pose, and a multitude of analytical AIs meant to manipulate Flux. This data works with SDXL and I will bulk train using the same system.

Update 9: SDXL training + V100/A100 update + hardware problems + CLIP_L updates

4090 done fried my mobo.

My primary work PC has suffered a catastrophic failure. The 4090 seems to be fine as I tested it in another motherboard, but there appears to be burn marks on the motherboard it was in. I've ordered a new motherboard and it should be here by tomorrow, but I doubt my boss is happy.

4 V100s are operational, still missing an A100.

I managed to get my hands on 4 32 gig v100s for about $400 a pop from my local college after talking to the head computer science professor for a few hours about my research. After catching up with my old friend about where I've gone in life, he said they had some in storage, and they could offload them to a researcher for a freelancer discount. He offered to sell me 16 more but I can't afford the power costs.

The four I have are putting in heavy work training SDXL right now. It's running a 64 dim 64 alpha lora run on it using the same data that I finetuned Flux Schnell with, using the same tags.

The single A100 seems to train much slower than the 4 V100s based on the benchmarks, so I've put that one on flux duty for now until the second one arrives. I should have just gotten an 80 gig one, this is probably on me. I think I can make the A100 faster if I somehow get more bits into its buffer than the ssd brick can handle, so I'll run some timings and benchmarks to see how much data is actually moving while training some basic loras.

Currently I'm set up in the a100 mobo, so the A100 isn't running right this minute. Tomorrow I'll have it back up if everything goes to plan.

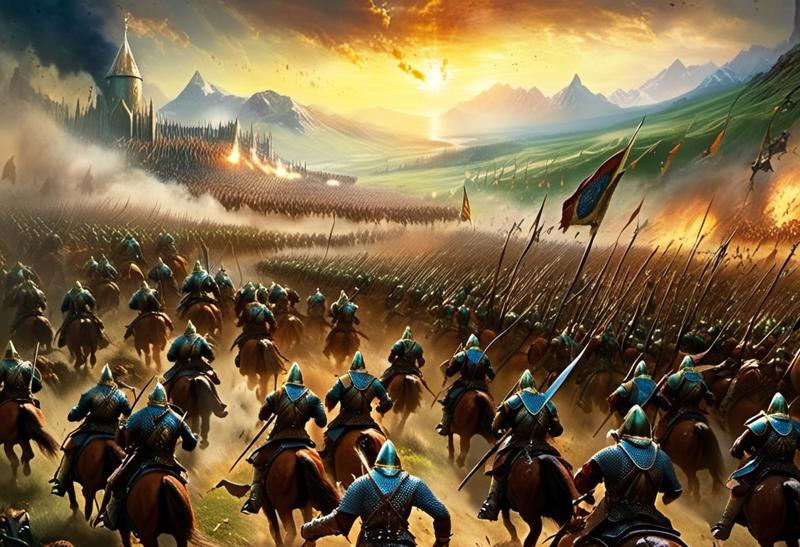

SDXL Epoch 18

Training params:

BF16 mixed lora training

SDXL-Simulacrum-V1 -> frozen-CLIP_G -> CLIP_L_OMEGA_V1

UNET_LR / TE_LR

Epoch 1 -> 0.0001 / 0.0000001

Epoch 2 Failure revert -> 0.0001 / 0.000001 (multires noise <<< BAD IDEA)

-> Epoch 2-5 -> 0.0000314 / 0.00000314

Epoch 6-18 -> 0.0001 / 0.000001

Scheduler -> cosine with restarts -> 2

Optimizer -> AdamW8Bit relative_step=False scale_parameter=False warmup_init=False

Dim 64 <- high number means high potential to learn derived patterns

Alpha 64 <- high number means better pattern retention

225 max tokens for clip_l

Noise Offset 0.05

Offset Type Original

I meaaaan it kinda works? It's far ahead of flux in terms of epoch and sample counts, but it never seems to want to converge to the dataset. I need to test SDXL considerably more I think to get a good grasp on how stupid the CLIP_G really is.
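The per-epoch learning rates above can be kept as a small lookup so a resume script picks the right pair. This is only a sketch: the table structure and function names are assumptions, and the reverted epoch 2 attempt is left out. The `lora_scale` helper shows the standard LoRA convention that the low-rank update is multiplied by alpha / dim, which is 1.0 for the 64/64 setting listed.

```python
# (first_epoch, last_epoch, unet_lr, text_encoder_lr), from the values listed above.
# The failed epoch 2 attempt (0.0001 / 0.000001 with multires noise) was reverted
# and is not included here.
LR_SCHEDULE = [
    (1, 1, 1e-4, 1e-7),
    (2, 5, 3.14e-5, 3.14e-6),
    (6, 18, 1e-4, 1e-6),
]


def learning_rates(epoch):
    """Return (unet_lr, text_encoder_lr) for a given epoch."""
    for first, last, unet_lr, te_lr in LR_SCHEDULE:
        if first <= epoch <= last:
            return unet_lr, te_lr
    raise ValueError(f"no learning rate recorded for epoch {epoch}")


def lora_scale(alpha, dim):
    """Standard LoRA scaling factor applied to the low-rank update."""
    return alpha / dim


print(learning_rates(3))
print(lora_scale(64, 64))  # 1.0
```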

After each epoch I swap out the CLIP_G with the original, while continuing to train the CLIP_L into its continued growth raid boss state.

Compared to where I WANT IT TO BE<<< it's falling short, but damn does it show some true beauty.

Not bad really, but it's not ready. Most of the guideposts haven't been hit because SDXL is FAR MORE STUBBORN than I anticipated. It's quite the annoying complainer and has many overcooked tokens that I need to map and figure out solutions for without destroying everything attached to them.

So far none of the canaries that cause evacuation have died, so I think we're doing pretty good here.

Convergence has begun

The convergence to the training has begun on epoch 21. The outcomes are shifting in ways the dataset intends, which means I don't need to do a large dataset tagging again just for SDXL. I can reuse the same dataset.

Current trained CLIP_L estimated samples:

9,250,000~

After epoch 100 -> ETA 60 hours, with this dataset I'll be releasing OMEGA as SDXL_CLIP_L_OMEGA, which will have learned how to behave properly with SDXL based models.

Estimated training samples on conclusion:

15,000,000~

Update 8: V128?9 release

Not sure if it's 128 or 129, I didn't check the guideposts yet.

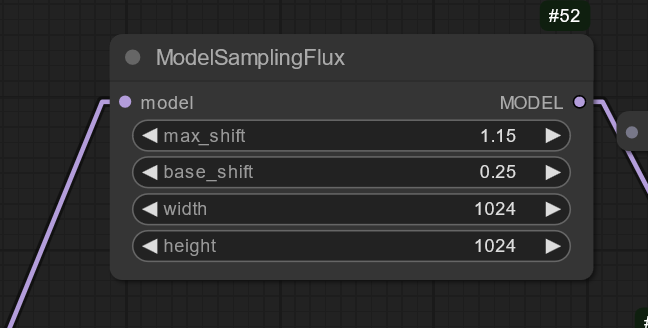

Depiction control is much sharper, region prompting works, and I advise using ComfyUI with this node setup here for the negative prompt.

Also experiment with this. This model has a ton of training, and the small bits are showing through when you adjust flux shift in big ways.

I suggest between 6 and 0.75 for max/base shift;

max / base

1.15 / 0.25

1.5 / 0.5

3 / 0.25

5 / 0.75

Also I suggest trying;

1216 x 64

64 x 1216

1216 x 1216

2048 x 2048

512 x 512

256 x 1024

1024 x 256

You get the picture. They produce different aesthetic results and often introduce or remove details depending on the prompts.
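For a feel of how max/base shift interacts with resolution, here is a rough sketch of the calculation as I understand ComfyUI's ModelSamplingFlux node to do it: the shift is linearly interpolated on the latent token count between 256 and 4096 tokens, then applied as an exponential time shift. Treat the constants and both function names as assumptions and check the node source before relying on them.

```python
import math


def flux_shift(width, height, max_shift=1.15, base_shift=0.5):
    """Interpolate the shift value from the image's latent token count."""
    tokens = (width * height) / (16 * 16)  # 8x VAE downscale, then 2x2 patches
    slope = (max_shift - base_shift) / (4096 - 256)
    intercept = base_shift - slope * 256
    return tokens * slope + intercept


def shifted_sigma(mu, t):
    """Apply the time shift mu to a sigma t in (0, 1]."""
    return math.exp(mu) / (math.exp(mu) + (1.0 / t - 1.0))


# Shift pairs and a few of the resolutions suggested above.
for max_shift, base_shift in [(1.15, 0.25), (1.5, 0.5), (3, 0.25), (5, 0.75)]:
    for width, height in [(1216, 64), (512, 512), (1216, 1216), (2048, 2048)]:
        mu = flux_shift(width, height, max_shift, base_shift)
        print(max_shift, base_shift, f"{width}x{height}", round(mu, 3))
```

Under this formula a 1024 x 1024 image lands exactly on max shift and a 256 x 256 image on base shift, while larger sizes such as 2048 x 2048 extrapolate past max shift, which may be part of why the odd resolutions look so different.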

Update 7: Massive discoveries

Depiction Offset

I'll be releasing epoch 6 this afternoon at around 4pm gmt-7 for user and research testing.

I had decided that this likely wouldn't work yesterday, when I attempted to modify images using Shuttle. However, when I moved over to base Schnell fp8 using Epoch 6, I was SHOCKED to see it working. Not only does it work, it's actually capable of NEGATIVE PROMPTING things OUT OF THE IMAGES.

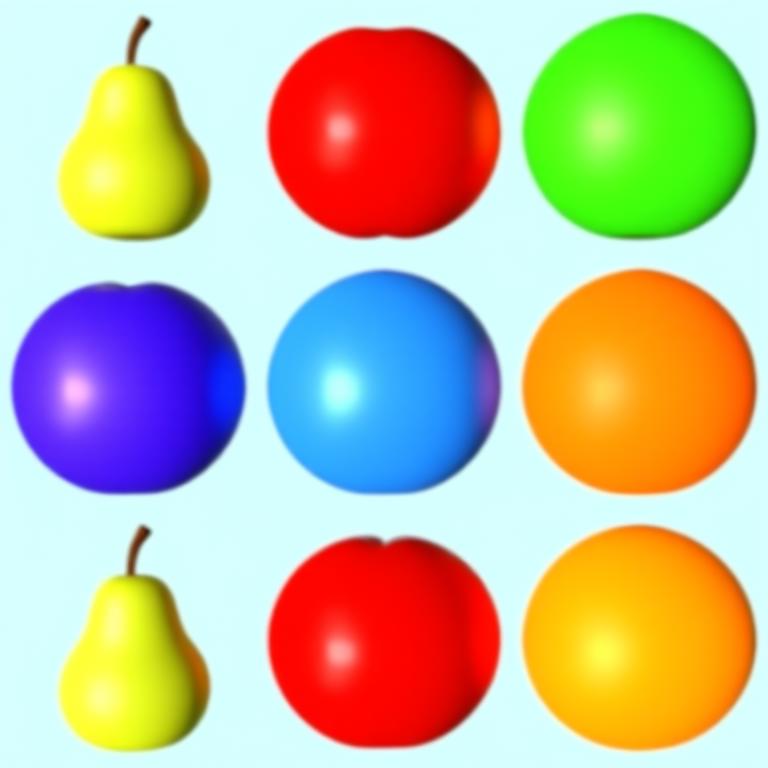

3x3 kinda works?

I wasn't really surprised, the system is based on 3x3 in a lot of ways.

Positive Prompt:

a three by three grid of 3d fruit for a total of nine fruits.

depicted-upper-left minimal red apple. a row of colored apple fruit. a red apple, a blue apple, a green apple.

depicted-middle-left minimal red orange. a row of colored orange fruit. a red orange, a blue orange, a green orange.

depicted-lower-left minimal red pear. a row of colored pear fruit. a red pear, a blue pear, a orange pear.

Negative Prompt:

censored, censor, bar censor, blur censor, finger ring,

lowres, bad quality, low quality, bad anatomy,

blur, depth of field, distorted, pixelated,

bad hands, blurry hands, extra digits, missing digits, missing hands, extra hands, unexplained hands, merging,

vaginal, vaginal penetration, vaginal sex,

anal, anal penetration, anal sex,

sex toy, dildo, condom, penis, pussy, vagina,

deformed, mutated, monster, vore,

disembodied, floating object,

disembodied hand, disembodied foot, disembodied head,

extra feet, unexplained feet, unexplained arm, 3 legs, missing leg, missing arm,

blurry background, cave, simple background,

HOWEVER WHAT HAPPENS WHEN I WANT TO POST-SHIFT ATTENTION!?

Flux and its kin want to be orderly. What happens when I intentionally introduce chaos into this order and FORCE it to pay attention to something AFTER the prompt is already laid out?

NORMALLY Flux glues things together, and once they're glued into place you have a hell of a time training it out of that position.

This isn't a joke, this thing actually works. More training will yield more results. Smaller images taught to Schnell will DEFINITELY yield smaller results. Those are about 342 pixel segments; if I want a quarter section of it, it would be about 512x512. I told it quarter section, so the attention shifted heavily, and now we see the results.
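The segment sizes quoted above work out if the image is 1024 pixels on a side, which is an assumption here since the generation size isn't stated. A tiny check of the arithmetic (the helper name is illustrative):

```python
import math


def segment_size(image_side, divisions):
    """Side length of one cell when a square image is split into equal divisions."""
    return math.ceil(image_side / divisions)


print(segment_size(1024, 3))  # 342, one cell of the 3x3 grid
print(segment_size(1024, 2))  # 512, one quarter-frame section
```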

a three by three grid of 3d fruit for a total of nine fruits.

depicted-upper-left minimal red apple. a row of colored apple fruit. a red apple, a blue apple, a green apple.

depicted-middle-left minimal red orange. a row of colored orange fruit. a red orange, a blue orange, a green orange.

depicted-lower-left minimal red pear. a row of colored pear fruit. a red pear, a blue pear, a orange pear.

anime,

depicted-upper-left quarter-frame head, blue eyes, blonde hair, smile.

Depiction offset works to alter existing parameters, and I know how to make it better.

I guarantee this model is far more powerful than is expected of it, and the outcomes from training are far more powerful than expected. The QUALITY is suffering a bit currently, but the additional training is showing certain traits are most DEFINITELY clearing up over time, and specifically allocating new learned context in the required positions. It's building up to something more akin to the way Flux Pro responds to prompts. This is only going to compound when I provide it with more training and more information for the requests. I'm entering unknown territory here.

Potential avenues of inpainting training;

single classifier translated to simple caption dreambooth training

CIFAR100 32x32 -> 100k -> 100 classifiers

ImageNet 512/252/64 -> 1.2 million -> 1000 classifiers

Teaching it specifically smaller sizes in more detail.

single classifier with depiction and grid assignments

Hagrid cropped 50,000/50,000/50,000 good hands, blurry hands, censored hands.

COCO -> uncertain, know it works and has bounding box data.

Simulacrum V7 world pack -> specifically tailored and hand captioned nearly 5000 images for depiction solidity; with nearly 100,000 automated cropped smaller images (ratio 0.8/1.2 only - mostly square), with assigned bbox masks specific for inpainting.

This isn't a joke. This is real. All my research has led to this manifested controller breakthrough that I'm sure many have tried, but not like this.

This isn't inpainting, this is txt2img. With inpainting, it WILL control subsections of inpainting. It WILL understand what you're asking of it. It WILL give the user proper control of smaller sections.

This is essentially a form of training that would simply teach it where to put things and even allow internal inpainting in smaller zones of allocation due to the smaller image sizes.

I've already made proof of concept with SDXL, Flux1D, and Flux1S. It's time to build something real.

Update 6: Quick Update V122

I've been busy at work today, but the model cooked up nicely. Seems to function similarly to v12 but has a considerable amount more pose training so the limbs should be a little more at bay now.

The automation has produced a full epoch 5, so we're halfway there. Should be ready by the end of the week.

I'll post additional information later after full testing, but for now enjoy.

Update 5: Shuttle V3 inference + Version 2 prep - 1/15/2025 7:14 am gmt-7

I've been inferencing the lora trained on Schnell core model, using the Schnell Shuttle V3, which has turned out to be highly responsive to both positive and negative prompting. The negative prompt is shrinking rather than growing while using it.

Using shuttle REQUIRES a negative prompt, though this model will likely require a negative prompt indefinitely. It's far more powerful than any of the others I've made, and far more attentive to the requests.

Simulacrum is almost to epoch 4 then I'm going to release and halt training for mass tag preparation. I've been setting up the cheesechasers to access my local data this morning and it should be good to go a few hours after work, since I have a full docket today setting up another system as well.

I have the large list of tags and a series of filters that are going to grab this specifically and then auto-tag while I work.

Version 1 burndown

Style

Style is highly responsive to any of the three core modifiers;

anime, 3d, realistic

can be solidified with tags like;

high fidelity, cinematic, best quality, vivid, sharp, etc

Characters definitely work but not very well

Some DEFINITELY work, for the most part. Many series work; many do not.

Hairstyle has issues; hair like "big hair" tends to not respond to what a character has for big hair, and it tends to merge that character with another from the same series if you use the series tag.

Solution

More popular and specific characters to be included into version 2 with larger image counts.

Depiction offset doesn't work very well.

I'll be rewording all of the depictions and including more complex offset training for the next version more akin to the 5x5 that NovelAI uses.

Currently slightly works, very hit or miss.

I ran a few logic games to imagine why, and I came to the conclusion that a 3x3 grid with sizes simply does not do a good job depicting an image. It requires more specifics, and size tags to be separated FROM the grid, instead of directly integrating the size tags into the grid like before.

grid_d3 face

The new grid will be considerably easier to use, rather than the verbose depiction tagging subset.

I'm concerned that my CLIP_L does not understand this well enough, so I may need to run more CLIP_L samples on a frozen shuttle simulacrum after basic burn has shown promise.

NSFW works too well.

NSFW and sex works very well with plain English, like way too well. It's one of the reasons why I'm reluctant to release this version; the synthetic faces seem to not have destroyed all the celebrities and public figures yet. This has caused a bit of a paradox with my intentions here, since I'm not goal targeting any individuals specifically, and the women and men portraits used have no names and often look like super generic people; yet the celebrities are still showing up when I try to proc some. So I have to be vigilant and bleed more captions together. Perhaps I need to do a direct Shuttle train to ensure that covers all bases with NSFW elements to comply with Civit.

Solution - create a list of popular public figures to train a caption flashbang.

currently it's kind of blending them with the synthetic women and men so I think it'll be fine... but I'm definitely planning some fixes here to ensure that realism IS NOT a celebrity deepfake sex churner.

I know most people can't simply scan a model for token strength the way I have tested and experimented with (I'll likely post my findings on this in the future, but it's an ongoing timings experiment). That means Civit can't filter out models based on a simple scan unless they check the tag metadata, which I'll be micromanaging and releasing correct counts for when V1 is released. Either way, I would rather my images not look like any specific celebrity or public figure, whether or not they are in the tag metadata.

New and tested NEGATIVE PROMPT for Shuttle V3

negative prompt removes many problems but it's only a bandaid until further training.

use CFG 3.5 for Shuttle.

nsfw, explicit, censored, censor, bar censor, blur censor, lowres, bad quality, low quality, blur, depth of field, distorted, pixelated, monochrome, greyscale, comic, 2koma, doujin, manga, bad hands, blurry hands, extra digits, missing digits, missing hands, extra hands, unexplained hands, penis, erection, flaccid, pussy, cameltoe, multi penis, deformed, mutated, monster, vore, pregnant, cum, ejaculation, messy, unexplained white liquid, disembodied, floating object, disembodied penis, disembodied hand, disembodied foot, disembodied head, jumping, floating, extra feet, unexplained feet, unexplained penis, unexplained arm, simple background, blurry background, cave,nsfw, explicit

marked increase in quality, removes many nsfw elements

censored, censor, bar censor, blur censor

negative prompting the censor tags tends to remove many nsfw elements for safe images, and enables cleaner images with fewer random distortions.

lowres, bad quality, low quality

marked increase in quality and removing annoying distortions, blur, and censoring of things that shouldn't be censored.

blur, depth of field, distorted, pixelated

tends to improve backgrounds and foreground quality, remove depth of field if you want blurry backgrounds.

monochrome, greyscale, comic, 2koma, doujin, manga

you can remove this if you want black and white, line drawings, comics, etc.

bad hands, blurry hands, extra digits, missing digits, missing hands, extra hands, unexplained hands

tends to fix hands, not every time. needs more bad hand training.

penis, erection, flaccid, pussy, cameltoe

removes any unexplained genitals that may be popping out of clothes.

penis, multi penis, deformed, mutated, monster, vore, pregnant

this can cause strange things to happen unless negative prompted, so these are more situational.

negative pregnant tends to allow other sizes to work properly, likely just needs pregnant training.

cum, ejaculation, messy, unexplained white liquid,

this tends to remove any unexplained white liquids that seem to appear on surfaces, apparently this causes a lot of problems.

disembodied, mutated, floating object, disembodied penis, disembodied hand, disembodied foot, disembodied head, jumping, floating, extra hands, unexplained hands, extra feet, unexplained feet, unexplained penis, unexplained arm

should remove most floating objects or unexplained things.

simple background, blurry background, cave

fixing the backgrounds when things tend to show up that you don't want.

cave tends to remove gross or distorted backgrounds

I will be working to test each potential issue over time and resolving them. I can manually tune the tags later using slider loras and merge them, so that's an option as well. It will likely require additional finetuning after.

Version 2 epoch values

50k -> 5k male anime/3d/realistic

50k -> 5k female anime/3d/realistic

25k -> 2.5k futanari anime/3d

25k -> 2.5k trap/femboy/other anime/3d

50k -> 5k sex pose anime/3d

50k -> 5k raw pose realism/3d

10 epoch goal ->

250k + 4.5k = 254.5k * 10

2,545,000~ samples for 10 epoch goal.

So the plan here is simple; batches of 5k or 2.5k depending on the image clump (20k image burns); 10 full runs there will count as one epoch aka 250k images.

After every 50k I will run a 1/10th size first run for regularization, roughly 4500 images or so.

If this doesn't work I'll set up full regularization instead.
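The sample budget above can be checked with a few lines of arithmetic. This is just a worked version of the numbers in the list, assuming one 4.5k regularization pass is counted per epoch, the way the "250k + 4.5k" line counts it.

```python
# Per-category image counts for one Version 2 epoch (from the list above).
EPOCH_IMAGES = {
    "male anime/3d/realistic": 50_000,
    "female anime/3d/realistic": 50_000,
    "futanari anime/3d": 25_000,
    "trap/femboy/other anime/3d": 25_000,
    "sex pose anime/3d": 50_000,
    "raw pose realism/3d": 50_000,
}
REG_IMAGES = 4_500  # regularization pass, counted once per epoch (assumption)
EPOCHS = 10

images_per_epoch = sum(EPOCH_IMAGES.values())
samples_per_epoch = images_per_epoch + REG_IMAGES
total_samples = samples_per_epoch * EPOCHS

print(images_per_epoch, samples_per_epoch, total_samples)
```

If the regularization pass ends up running after every 50k instead, REG_IMAGES would be multiplied by five per epoch and the total grows accordingly.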

"cum" should work without a bunch of dicks showing up, and be easily blacklisted.

"realism" should provide a bit of actual realism such as blemishes and imperfections, rather than porcelain dolls.

Depiction changes

"depiction" is going to be shifted to a new 5x5 grid now that the basic training cycle is complete. I'll be sure to specifically list EACH potential offset based on the grid.

Rating problems

"nsfw/explicit/questionable/safe" don't really seem to work. Even blacklisting them seems to do nothing, so more experimentation may be necessary with these.

Aesthetic problems

"disgusting" and the other aesthetic tags are to be removed and not used for this version. Many images that are essentially core images seem to have been dubbed "disgusting" by the AI, and many low quality images were given "masterpiece" that simply did not deserve it. So I'm going to evaluate aesthetic use based on more possibilities; one being that realism, 3d, and anime get different aesthetic types and different AI models. For now, just expect it to not be included for version 2, but it'll likely be trained into one of the epochs after version 2 is up, like maybe version 2.5 or version 2.6 depending on how many epochs I release or if revisions are required to solve problems.

potentially calculate using image size as a comparator for lowres, highres, and absurdres checks; but there's no easy way to access this in batch form without a lot of requests to my data brick; which can be slow.

maybe ntfs has something metadata-wise that I can quickly grab using a windows library? Seems like both ubuntu and windows suffer from this so maybe not.

I think I can run scripts directly on the brick since it has a quad core, so I'll look into implementing something there?
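A minimal sketch of the size comparator, assuming the width and height are already known. The thresholds are assumptions modeled on the usual booru conventions for these tags and would need to be checked against whatever definition the dataset actually uses.

```python
def resolution_tag(width, height):
    """Map image dimensions to a resolution tag, or None for ordinary sizes."""
    # Thresholds below are assumed values, not taken from the dataset itself.
    if width >= 3200 or height >= 2400:
        return "absurdres"
    if width >= 1600 or height >= 1200:
        return "highres"
    if width <= 500 and height <= 500:
        return "lowres"
    return None


for size in [(4000, 3000), (1920, 1080), (400, 300), (1024, 1024)]:
    print(size, resolution_tag(*size))
```

If a script does run directly on the brick, it only needs to read each image header to get the size. Pillow's Image.open, for example, is lazy and exposes size without decoding the pixel data, so a batch pass that writes sizes into the metadata once should be much cheaper than requesting full images.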

Update 4: Schnell Adafactor and Tensorboard

So the tensorboard is all over the damn place. Apparently starting and stopping the training like 20 times isn't the best idea when it comes to tensorboard data, so I'm going to need to figure out how to compound that information in a sane way.
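One possible way to compound the runs, sketched in plain Python. It assumes the scalar values have already been pulled out of each run's event files as (step, value) pairs, and that every restart reset the step counter. If the trainer resumed with the global step intact, no offset is needed and the runs can simply be concatenated. The function name is hypothetical.

```python
def stitch_runs(runs):
    """Merge scalar series from restarted runs into one continuous series.

    runs: list of runs in chronological order; each run is a list of
    (step, value) pairs whose step counter restarted on resume.
    """
    merged = []
    offset = 0
    for run in runs:
        last_step = 0
        for step, value in sorted(run):
            merged.append((offset + step, value))
            last_step = step
        # The next run continues from where this one stopped.
        offset += last_step
    return merged


runs = [
    [(10, 1.0), (20, 0.9)],  # first session
    [(10, 0.8), (20, 0.7)],  # resumed session, counter restarted
]
print(stitch_runs(runs))
```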

I have shifted the baseline training parameters of the Schnell version to better reflect Block Swap, which allows me to train at a higher batch size than the current hardware would allow with flux.

Current Settings for Epoch 3: (The NEXT 50k)

BF16 mixed lora training

FP16 Schnell Model

FP16 t5xxl

FP16 Sim-v4-CLIP_L

UNET_LR 0.00005

TE_LR 0.000001

Scheduler -> Adafactor

Optimizer -> Adafactor

relative_step=False scale_parameter=False warmup_init=False

Dim 64 <- high number means high potential to learn derived patterns

Alpha 64 <- high number means better pattern retention

Flux Guidance 0

Shift

Discrete Flow Shift 3

Prediction Type Raw

Use t5 attention mask

255 max tokens for t5

Noise Offset 0.05

Offset Type Original

I've crimped out some shortstack and yordle data, some futanari, and some AI_GENERATED data that seem to be heavily affecting the human form in ways that I'm not looking to train currently. I've also removed about a third of the hagrid "nameless" hand poses, which have been affecting things like basic grip and grabbing of objects in negative ways, and I will be evaluating ways to reintroduce this information in the future in a safe manner using object association rather than direct hand association. This reduces the core burn dataset to about 44500 give or take. Nothing too major yet.

I'll be releasing epoch 2 around 4 pm pacific time today, which has substantially more reliable results when it comes to NSFW content while still retaining the majority of SFW information. It's still failing at a large amount of trained information, but I see it clearing up every 100 or so steps that I've been testing.

Hands are STILL very awkward, so stay tuned for the modified negative prompting built into blurry and empty hands, likely by epoch 5.

I'm being VERY CAREFUL with this model. It's essentially being micro managed, where I play with it every 100 or so steps (1600 samples) to make sure nothing major is degrading or falling apart.
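For reference, the check-in cadence works out as follows. The effective batch size of 16 is implied by "100 steps = 1600 samples"; whether that is a raw batch or batch times accumulation is not stated, so treat it as an assumption. The 44,500 figure is the reduced core burn dataset mentioned above.

```python
import math

CHECK_EVERY_STEPS = 100
SAMPLES_PER_CHECK = 1_600
DATASET_IMAGES = 44_500  # approximate size of the reduced core burn dataset

# Effective samples consumed per optimizer step.
batch = SAMPLES_PER_CHECK // CHECK_EVERY_STEPS
# Steps needed to see every image once.
steps_per_epoch = math.ceil(DATASET_IMAGES / batch)
# How many manual check-ins that implies per epoch.
checks_per_epoch = steps_per_epoch // CHECK_EVERY_STEPS

print(batch, steps_per_epoch, checks_per_epoch)
```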

The reduced learn rate after the baseline epoch is improving the fidelity rather than adding a large amount of context, which is perfect for the target goalposts.

After the goalposts are met, we'll be moving the goalposts to a larger array of low learn rate information.

I'll be preparing the next 250k images ahead of time this time, since the pipeline is most DEFINITELY cutting into the speed of the training in a substantial way. At least until I properly optimize data delivery to the dockers.

First; let's test out some hands.

Alright it's not doing TOO BADLY, that's good. I think it kinda has two thumbs though.

It's kind of having trouble with the classifiers, so I'll probably re-word them in a more concise way using "hands_" as a prefix or something like that, that should help when it comes to guaranteeing good hands from the classifier images. The baseline classifiers were never meant to be procc'd, but the offsets work so I may as well make them available.

Yep, definitely needs more cooking.

Let's try some women.

Well there's our 420 seed woman, background is a little wonky but that's to be expected. I'm not training backgrounds and background interactions yet.

Uhhhhh... Yeah something is definitely happening to backgrounds. They look like dilapidated and falling apart messes. Let's see if I can negative prompt that away, and make it so we aren't getting multiple angles.

Alright that wasn't as easy as I want it to be, so I'll need to address that one.

Positive Prompt:

a woman wearing a blue slit cocktail dress,

1girl, solo, from front, cowboy shot,

Negative Prompt:

explicit, nsfw, lowres, bad quality, bad anatomy, mutation, mutated, deformed, monochrome, greyscale, extra digits, bad legs, missing leg, amputee, simple background, blurry background, cave, multiple angles, multiple views, comic, 2koma, navel,

Though I can probably increase her quality.

It still takes a considerable amount of time to generate, so I'll need to train considerably more epochs and iterations to improve speed, as per the standard in papers on this subject. That means more cooking time and a lower learn rate, but that's fine. For now we'll just keep going.

If you SPOT ANYTHING MAJOR falling apart; such as nudity appearing where none is intended, broken limbs or bodyparts using the suggested settings, and so on; be sure to post and notify me of these problems. They can be fixed. I have a list of fixes that I need to implement already, and they're in the negative prompt.

explicit, nsfw, lowres, bad quality, bad anatomy, mutation, mutated, deformed, monochrome, greyscale, extra digits, bad legs, missing leg, amputee, simple background, blurry background, cave, multiple angles, multiple views, comic, 2koma, navel, bad hands, missing hand, armless, legless, quadruple amputee,

One at a time.

Update 3: Schnell

V11 released. Feel free to check it out.

Curriculum datasets used for baseline conforming:

5k core simulacrum v5

10k Pose

10k 3d/realism

15k anime

7.5k real/hagrid/fixers

----------------------------

47.5k~ images used

Trained in this sequence specifically. One epoch being one pass of all images, which is technically multiple trainings done on the same lora.

This is a fraction of the full dataset to be trained, but it's probably the best images in the entire dataset.

The preliminary 47.5k burn didn't go too well. It basically fell apart; however, after the findings from the first attempt, I've attempted a new strategy.

Teach Schnell using more... standard methods.

Version 11 training params;

BF16 mixed lora training

FP16 Schnell Model

FP16 t5xxl

FP16 Sim-v4-CLIP_L

UNET_LR 0.0001

TE_LR 0.000001

Cosine with 2 restarts <- my go-to for flux

AdamW8Bit

Dim 64 <- high number means high potential to learn derived patterns

Alpha 64 <- high number means better pattern retention

Flux Guidance 0

Shift <- I tried flux shift and had terrible results.

Euler Flow 3 <- this is where the model is learning CFG

Prediction Type Raw

Use t5 attention mask

255 max tokens for t5

Noise Offset 0.05

Offset Type Original

Alright, so the original version did not do so well. I ran it with;

Version 1 training params;

Flux Guidance 0

Flux Shift

Euler Flow 1

Prediction Type Raw

NO ATTENTION MASK

First our BASE image with SimV4 CLIP_L on Schnell Base fp8:

3d \(artwork\), 3d, realistic,

final fantasy vii,

1girl, tifa lockhart,

fighting stance,

red eyes,

blue dress,

Steps 24

CFG 1

Clearly it has no idea who tifa is, even using my SimV4 CLIP_L (she's DEFINITELY been trained into that), but even so she's basically an aged character from the 1990s so she should be in any dataset; including LAION. I think they probably gutted all the names at some point, so this is sort of a thing that happens when you ruin public figures like aged video game characters. Let's try with standard CLIP_L instead of SimV4, see if maybe the problem is based on my CLIP_L.

Schnell... Is NOT very good at this thing at all. It seems like no matter what I teach it, it'll be an upgrade. Like, what the hell is even happening here? She has horns? She has weird boots? Clearly she's making the whatever do the whatever, but this is ass. Not the good kind of ass either. Her hands are fucked up too. Basically I need to force this thing to actually comply properly, since it clearly has no idea what it's supposed to be doing.

Epoch 1 Attempt 1 -> Suggested settings:

It started to show promise, but didn't even complete its first epoch before it started falling apart.

I see why people say this model fails, because it learned very little. The hands are completely destroyed, the body is okay but the dress is all wrong. The entire style is wrong.

Now, see how it looks with CFG 1; the GOAL CFG.

3d \(artwork\), 3d, realistic,

final fantasy vii,

1girl, tifa lockhart,

fighting stance,

red eyes,

blue dress,

Alright, clearly there are some legendary-grade problems here.

However, as you saw, CFG5 shows promise. This is definitely a big issue, since this particular version amplifies the required amount of time to generate. Which defeats the purpose of Schnell. It needs to be FAST and RUN ON WEAKER HARDWARE.

So I did a retrain to test another first epoch using a different seed to see if there was some sort of degradation, a bad seed, or perhaps something went wrong and corrupted along the way. Both trains produced very similar results, which tells me the process doesn't work, rather than the dataset potentially having problems.

I did a few smaller trains, for example training a simple GAL GADOT lora to see if it was my custom sd-scripts, but the sd-scripts isn't the problem.

The outcomes from her are fine, however with her I'm not trying to fully restructure the model. That would just be one person.

It needs to be FAST. This model isn't fast. It's slow as molasses. I may as well be training SD15 if this is the outcome, since 1.5 is actually fast.

Even 5 epochs of the GAL GADOT lora is enough to derive Schnell.

She's clearly not ruined. That was just using Gal Gadot's likeness, I might be releasing that LORA, but it didn't turn out very good. It DID work though.

As per flux standards, you need to use the "woman" tag to proc her, even so, the model shifted as you can see without even adjusting any of the parameters RELATED to Tifa.

Now that I proc Gal Gadot directly using "a woman" (that's how she was trained), I end up with this.

WITHOUT SimV5:

WITH SimV5:

All the hagrid in the world isn't going to fix this.

Version 11 - Epoch 1 Attempt 2 -> Teaching CFG:

Version 11 is... another beast entirely.

The results... are a bit stunning, I must say.

I was told that this wouldn't be possible, and yet there she is.

Positive Prompt:

3d \(artwork\), 3d, realistic,

final fantasy vii,

1girl, tifa lockhart,

red eyes

Negative Prompt:

bad quality, very displeasing, displeasing, bad anatomy, bad hands, mutation, mutated, disembodied, pregnant, monochrome, greyscale, patreon logo, watermark

1024x1024

Steps 12

CFG 5

Seed 420

Fantastic things are happening boys n grills. Looks like we'll have a new model soon.

Let's try 36 steps instead of 12.

It's a little washed out for now, but it's still the same position and pose. The pose system works. My anticipation is that more cooking will provide more consistency to the system. Schnell is a bit unstable on its own, so it's like a car that falls apart in the garage already.

Looks like I'm fixing it.

It's not perfect by any means yet, we'll see if it gets to that point or not. If not, I'll try something else.

Needs more hagrid hands.

Let's run it with Gal Gadot, see what happens.

CFG1

As you can see, even version 11 fails at CFG1, however that's not the goal here. Even if it's somewhat improved over the last, as you can see there's definitely some massive faults.

Here's CFG 5

Looks kinda like Gal Gadot doesn't it.

Let's run it without the Gal Gadot lora, CFG5.

Gorgeous. Absolutely massive improvement in fidelity and hand quality. This isn't cherry picking, this is real.

Hagrid clearly putting in work.

Schnell Simulacrum V1 Findings

SMALL DATASET LORAS are fine. You can make smaller loras with almost no issues in Schnell. They cook fast, and finish quickly. They can produce fair results, and even improve the base model a bit.

LARGE DATASET LORAS are another story. There are some high-grade problems with training using sd-scripts and the standard methods. These require many epochs on very low heat to force Schnell to conform to the dataset, rather than using the standard 0.0001 that I've been using for Flux.1D.

I'm letting settings 2 cook to epoch 10 to see how it turns out. It should be about 500k samples or so, so we'll see how it goes.

Update 2: New hardware

I am now the proud owner of 4 v100s (32gb ea) and 2 a100s (40gb). They weren't cheap, but they are definitely going to come in handy for research purposes. I purchased them all on very specific lower-cost deals however, which means they'll either work well or catch fire in a month I bet. Two of the v100s and one of the a100s haven't arrived yet, however the first a100 will be up and operational by this weekend.

I have acquired 3 motherboards, the dual a100 board having 128 gigs ripjaw ram, an amd 7900x, and full virtualization capability as per modern standard. I have ubuntu installed on there for now, but I'll likely choose whichever operating system will function best with the CUDA interfaces, and choose the most optimal compiled drivers to communicate between the hardware.

Both of the dual v100 boards have 5900x processors and 64 gigs ram each, with two v100s per board. Should be more than enough to train my classifier models and to prepare loras.

They all have passive cooling so I'm looking into keeping them cool, considering they'll be running non-stop for a while.

I am also now the owner of a 12 tb (16tb -> 4 wd blue 4tb ssds) RAID 10 ssd drive cluster with USB-C connector. I'm going to get another specifically dedicated to hooking into my network switch.

I was having serious data corruption issues with my current setup, so having a full raid setup with full raid capability, along with a network-based switch driven ssd cluster will alleviate most of my worries.

Considering the amount of money I've spent on these things in the last year, I should own an 80gb a100 but this will do. This sort of stuff isn't cheap after all.

With new hardware, let's up our expectations, shall we. We are no longer bound by runpod run times and rental limitations.

Update 1: After the holidays

I and my entire family caught some sort of flu, so we've been spending some time recovering. I'm back to about 70% or so, so I'm taking it easy overall; my body is still weak but my mind feels like 100% so I've begun working on the captioning software again.

My current detection and caption software TODO will exist here for now and I plan to get the majority of these linked together today.

Each of these need to be written or integrated into automated tagging flow and then introduced into the pipeline.

RED works but not pipeline integrated yet

YELLOW does not work in pipeline due to compatibility or speed

GREEN works and integrated into pipeline

GREY not written yet

Almost ready.

Auto-Captioning/Tagging

joycaption v2 alpha

t5

blip

joytag

vit-tagger-large

wd-14v2

vit-r34-21AC

deepdanbooru

mldanbooru

booru-normal

booru-s13

nudenet

people body counts

half-body

heads

faces

eyes

imgutils hands + hagrid -> resnet18

text

Quality/Style detection

nsfw

aesthetic

monochrome

anime age/style

ai-check

anime_completeness already integrated

truncate_incompleteness already integrated

Intelligent algorithms

MIDAS relative depth approximator

creates depth map from an image for validation and additional tagging purposes

determines relative depth of detections in relation to others

rotation, offset, and size

midas + bbox mixes and then applies the grid math

detection combinator

create bodies into something logical to determine how complete characters are identified based on the detections

detection association sanity checks

ankle bone connected to the head bone?

deterministic English caption cleaner

restores and summarizes llm outputs

this isn't advanced enough to matter

tag and caption cleaner

final cleanup for the tags and captions to ensure there are no invalid characters or duplicate tags.

tag reordering

fits the captions and tags within the correct flow
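A minimal sketch of what the final tag cleanup step could look like: lowercase, strip characters outside a whitelist, collapse whitespace, and drop duplicates while keeping the first occurrence in place. The whitelist and the function name are hypothetical; the real cleaner may allow a different character set.

```python
import re

# Hypothetical whitelist: lowercase letters, digits, spaces, and punctuation
# that commonly shows up in booru-style tags (including escaped parentheses).
_INVALID = re.compile(r"[^a-z0-9 _\-:;.!?'()\\/+&]")


def clean_tags(raw):
    """Normalize a comma-separated tag string and remove duplicate tags."""
    seen = set()
    cleaned = []
    for tag in raw.split(","):
        tag = _INVALID.sub("", tag.strip().lower())
        tag = re.sub(r"\s+", " ", tag).strip()
        if tag and tag not in seen:
            seen.add(tag)
            cleaned.append(tag)
    return ", ".join(cleaned)


print(clean_tags("1girl, solo,  Solo , blue  dress,, 3d \\(artwork\\), red eyes\u200b, red eyes"))
```

Order is preserved on purpose, so a separate reordering pass can still decide where each tag belongs in the caption flow.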

It begins

I'll be performing a full multi-epoch finetune; cooking 1 million images this time. Alongside I'll be cooking three primary loras, devoted entirely to shifting the core attention of flux from one style to another without destroying the underlying engine.

If this goes well, and the planning says it will, I'll do the same to Schnell.

What I've been doing.

Education: I've educated myself in the basics of vision, resnet, attention heads, diffusion, latent diffusion, the sdxl architecture, the flux architecture, the sd3 architecture AI; and have begun planning an entirely new core model revolving around segmented patched subject fixation and latent diffusion, but I am not cooking that today.

Planning: I've spent a great deal of time shoring up my codebases; learning more about python, more about AI interactions, and more about training various AIs without needing anything but a few lines of code.

Gathering Data: I have spent a great deal of time gathering data in the past 6 months. I've accumulated a war chest of nearly 2 million high quality high grade images locally, with a series of external systems housing an additional 200 million images.

Thank the DeepGHS specifically for making much of this possible, and I did a great deal of slower scraping and hand selection for the more stubborn websites.

Video Sourced

Anime Sourced

Cartoon Sourced

Movie sourced

Safebooru for high fidelity anime

Danbooru for organized and known tagging

Gelbooru for deviations from known for a mixed bag.

Rule34xxx for a very mixed bag

Rule34us for high fidelity 3d

E621 for a multitude of creatures and beasts

Multi-layer HAGRID for hand classification affirmation

Multi-layer Civit for the Ai Generated source and high fidelity realism

My own personal "toasted" masterpiece-grade dataset provided by a friend

Human solidity pose dataset constructed of multiple smaller datasets

The complete simulacrum core dataset for reaffirmation

I've accumulated a bit of knowledge on this subject, and I can say without a doubt with the utmost certainty; model and lora finetunes fail due to bad tagging, bad configurations, improper planning, and lack of small grade tests. Iterative development is the cornerstone to a solid outcome in any aspect. There is no way around it. If your model fails, check between the seat and the keyboard. The core models work, and so do the hundreds of loras that exist for them. These are absolute proofs that cannot be argued.

The Tagging

This is the best part. My magnum opus. I've compounded a series of tagging systems.

The tagging tools and training will have their repo pushed to github, and the models necessary for its integral usage either made available on huggingface, or the mentioned model tested for its existence. All of the models and code used for this project will be made transparent and set up for reuse, no matter how disorganized it may be. Some tools are for the power user, some are meant to be simplified due to my own growing experience as I progressed through developing them.

I will use a specifically formatted tagging structure. This prevents inflicting too much damage to flux, while simultaneously training paths to navigate.

I will tag with a series of offset and depiction tags, condensed and boiled into small easy to use combinations.

I will guarantee certain booru tags exist and a multitude of other tags are carefully transposed into different acceptable forms for the system to cooperate with the T5 flux unet.

I will ensure all artists and artist contributions are tagged and available (this time), so the certainty of what is trained in the model is visible and transparent.

A hefty portion of the images are devoted to teaching only "ai generated", and this is going to be based on a multi-layered system of what is there, and what is not there. Intentionally teaching the AI what to remove and replace when training the intentionally burned ai generated tag. Low timesteps introduce terrible effects, higher timesteps will clean them. Cleanup crew. Just like what I did with hands.

Everything identified will be issued its base tag, and its offset tag + base tag combo immediately after. This should allow the end user to literally control the majority of the image if they use the specific pose identifiers meant for limbs, objects, interactions, and the more deviant acts as well.

Size tags are separated and will be called using 20% image sections.

I will include relative depth tags between identified offsets and depictions using midas.

I will include hagrid images and finetune with hagrid after to ensure the fidelity and strength of hands stay intact during this large train.

I have prepared a fidelity booster and quality juicer to improve the quality of the most likely to be damaged regions when the train is complete.

I will double and triple check the auto tagging pipeline system in small batches before I begin the multiple h100 train.

I will not damage the text system. I will not train any neurons to accept text for Double Block training, and instead train the single blocks with this information.
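For the relative depth tags mentioned above, one simple approach is to take the median of the depth map inside each detection box and compare the two. The sketch below uses plain nested lists as the depth map and assumes larger values mean closer to the camera, as with MiDaS-style inverse depth. The tag strings, tolerance, and function names are illustrative assumptions.

```python
from statistics import median


def region_depth(depth_map, bbox):
    """Median of the depth values inside an (x0, y0, x1, y1) pixel box."""
    x0, y0, x1, y1 = bbox
    values = [v for row in depth_map[y0:y1] for v in row[x0:x1]]
    return median(values)


def relative_depth_tag(depth_map, bbox_a, bbox_b, tolerance=0.1):
    """Describe detection A relative to detection B (larger value = closer)."""
    a = region_depth(depth_map, bbox_a)
    b = region_depth(depth_map, bbox_b)
    scale = max(abs(a), abs(b), 1e-9)
    if abs(a - b) / scale <= tolerance:
        return "same depth"
    return "in front" if a > b else "behind"


# Toy 4x4 depth map: the left half is near, the right half is far.
depth_map = [[10.0, 10.0, 2.0, 2.0] for _ in range(4)]
print(relative_depth_tag(depth_map, (0, 0, 2, 4), (2, 0, 4, 4)))
```

The median is used instead of the mean so that background pixels caught inside a loose bounding box pull the estimate around less.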

The Training

The entire training process and setup will be available through a jupyter notebook specifically formatted for runpod usage.

I will keep a full tensorboard record and documentation on the full training process with careful attention spent on specific details.

I will ensure the full finetune and the relevant finetune data is uploaded to huggingface for analysis.

I will train the CLIP_L additionally with even more samples than applied to the baseline model in an additional post-unet training cycle against the frozen unet.

I will train the CLIP_L standalone with additional samples beyond those samples.

I haven't settled on the exact configuration for the exacting outcome yet, and it will likely be trained using a forked command-line sd-scripts specialized for this task, rather than running through kohya's GUI interface. I will likely borrow some tools from the GUI interface since it has some very useful utilities.

The image pipeline will prepare images with an overlapping excess of roughly 10% of the entire database during training, to pipeline generated latents into the dummy image representation system waiting for the images.

Curriculum organized training will be used to ensure specific tasks are overutilized while others are underutilized.

Verification during training time includes similarity checks and validation checks against the intended goals and the current positions within the image dataset. When large deviations occur in unintended or unwanted directions, the training will be halted for my attention. Only when my go-ahead is issued will the training continue.
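A sketch of what such a halt check could look like, assuming each validation sample and its intended target have already been turned into embedding vectors (for example by an image encoder). The thresholds, the embedding source, and the function names are placeholders, not the actual verification code.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def should_halt(sample_embeddings, target_embeddings, min_similarity=0.8, max_failures=2):
    """Return True when too many validation samples drift from their targets."""
    failures = sum(
        1
        for sample, target in zip(sample_embeddings, target_embeddings)
        if cosine_similarity(sample, target) < min_similarity
    )
    return failures > max_failures


targets = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
drifted = [[0.0, 1.0], [1.0, 0.0], [-1.0, -1.0]]
print(should_halt(targets, targets), should_halt(drifted, targets))
```

When should_halt returns True the training loop would pause and wait for a manual go-ahead rather than deciding anything on its own.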

Post Training

Study of the similarities and differences between the intended goals and the initial images will consist of three core parts; classification grade, accuracy, and bias.

The purpose of this overall post-training study is to determine the full utility and capability of the core simulacrum Flux model vs the original Flux model. We're going to find out if it's better than placebo, or just placebo with some added sprinkles.

Practical Simplified Utility and Bias comparator

This is a measure on simplicity of use; how easy it is to use a model to generate something, and how powerful the outcome is.

The utility of the model can be tested with the necessary guideposts, which will be based on multiple papers that I've read. The used information from the papers and the links to the papers will be available. This spans real world applications from photograph generation, to image control and manipulation, to creating articles, to biases in race, gender, and sexual orientation. Biases in forms, structures, and objects. The full study will be made available along with the core model release.

Base Flux1D, Flux1DD, Flux1S, and each epoch of Simulacrum V5 will be graded on a scale of 0 to 1 for each major category based on bias vs accuracy on the neutrality scale vs how much utility it can provide based on being or not being biased.

These parameters will include but will not be limited to:

multi-spectrum onnx classification and bbox check

nsfw score

age

nude net

aesthetic score

composition type

artifacts

imperfections

corruptions

unfinished

caption accuracy

3x3 offset

5x5 offset

7x7 offset

text display

accuracy

offset

depth

object and human count, offset, and depth.

gender

male

female

crossgender

futa

femboy

variants

species

humanoid

robotic

demonic

furry

anthro

more

body pose

head rotation

torso rotation

lower torso rotation

leg rotation

arm rotation

face

ear

type

size

color

texture

eyes

type

open/closed

mouth

open/closed

lips

texture

teeth

tongue

skin

texture

color

material

hair

color

texture

style

ties, bows, etc

fur

location

color

style

texture

genital

type

size

color

offset

texture

nipple

type

size

color

offset

hagrid

digit counts

position

offset

continuity

Flexibility Cross Contamination Bias:

Each bias parameter will be reinstituted here in a methodology to generate 1000 images using combinations of tags and parameters, allowing each category to potentially cross-contaminate each-other to determine categorical bias.

Based on the used mixes of tags, each mixture will be weighted and tested against each other for a full solidified expression of the output.
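The 1000-image mix could be drawn roughly like this: build every combination of one tag per category, then sample a fixed number of them with a seed so the test set is reproducible. The categories and tags below are a small illustrative subset, not the final list, and the function name is hypothetical.

```python
import itertools
import random

# Illustrative subset of the categories above; the real study would use the full list.
CATEGORIES = {
    "gender": ["male", "female"],
    "species": ["humanoid", "robotic", "demonic", "furry", "anthro"],
    "medium": ["anime", "3d", "realistic"],
    "mouth": ["open mouth", "closed mouth"],
    "hair": ["short hair", "long hair", "big hair"],
    "view": ["from front", "from side", "from behind", "from above", "from below"],
    "framing": ["portrait", "upper body", "cowboy shot", "full body", "close-up"],
}


def sample_prompts(categories, count=1000, seed=420):
    """Pick `count` distinct one-tag-per-category combinations, reproducibly."""
    combos = list(itertools.product(*categories.values()))
    rng = random.Random(seed)
    picked = rng.sample(combos, min(count, len(combos)))
    return [", ".join(combo) for combo in picked]


prompts = sample_prompts(CATEGORIES)
print(len(prompts), prompts[0])
```

Because every prompt crosses all categories at once, scoring the outputs per tag shows which categories bleed into each other, which is the cross-contamination being measured.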

The training, research, and dataset will all be fully released on huggingface using a method that cheesechaser can draw from.

Legal

This is a purely recreational research endeavor. NO company NOR entity is backing my research and effort directly. I am an independent individual researcher and thus do not fall under the same developmental rules as a corporate or business licensed entity.

ALL the training renting and hardware costs come directly from my pocket, so I need to make sure I'm not wasting money.

I will be making NO INCOME FROM THIS PROJECT, nor ANYTHING RELATED TO IT. This is PURELY to ENHANCE the computer vision SFW and NSFW classification, and develop further diffusion industry based practices in the OPEN SOURCE DIRECTION that will save power, time, training iterations, and money for the open sourced community along with everyone else benefiting from the findings.

The Schnell version if it turns out well, can be licensed for use to everyone, but I won't do that. I'll release the model with the others, and I will be very vigilant with ensuring this model is not corporatized or abused without direct contribution to the open source community around developing it. The Schnell model is OUR property and was pioneered by BlackForest, not my property. I refuse to allow someone to claim it as their own and I will protect it if necessary.

Individuals and small businesses will be free to use the Schnell Simulacrum V1 model for monetary gain, but corporate entities and businesses above a certain stock value threshold MUST work out a licensing agreement with me when using this directly for gain in an official ongoing capacity, or the lawsuit will come, and I will win. Any money received after legal fees from this will be divided amongst open source AI research foundations TBD later. If it does come to this, I'll be fully transparent.

Also why be a dick? I'm basically giving it away, use the research and your resources to improve it. Make me look like a kid playing with tinker toys, and then I'll start using higher-grade tools to one-up you. Simple logistics really.

All the models will be available through CivitAI for testing, practice, and monetary gain for the individual and the small business.

CivitAI and Huggingface will be the sole locations to get these finetunes out of the gate. Afterward it's anything goes as you all know. Build to your heart's content.

I mention these things, because there is a high potential that I'm making a true progression to diffusion training practices with this process. The outcome will be fully documented and the release will likely have multiple official scientific papers released alongside it.

It's only a matter of time now. The machines are going.