Disclaimer: I am no expert on the topic. I just watch a lot of documentaries and know some random stuff. Almost all of this applies only to realism!

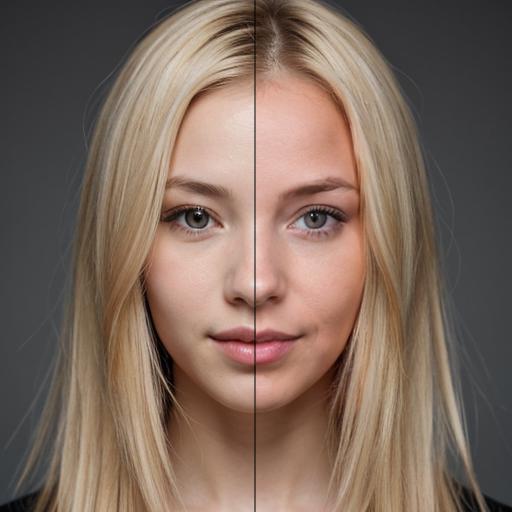

I would say that anyone who has been dealing with AI-generated images for a while can recognize them because they somehow all share the same "style". Especially in photorealism, the perfect faces stand out: perfectly chiseled and absolutely symmetrical. Everyone looks like a supermodel in a professionally retouched picture.

And I think I now know the origin of this problem. We are the problem!

The Problem - Artificial Faces

Actually, there are two problems, and they are directly related.

AI generates symmetrical, artificially beautiful faces

AI-generated celebrities, trained realistic characters, etc. always look somehow "off".

I think there are 4 reasons for this:

Flipped data sets

Too many retouched or polished images in the dataset

Inconsistent data under one token

"AI Incest": training AI on AI-generated images

Flipped Data Sets

The problem here is actually quite simple: human faces are not symmetrical! At least the details in our faces are not. So when we flip images to "inflate" our dataset, we destroy this fine asymmetry in training. "Generic" faces simply become symmetrical (which looks pretty but unrealistic), but with celebrities or characters, we destroy the fine details and flaws that make these faces unique. That's why the AI-generated Emma Watson only looks almost like the real Emma Watson.

In general, flipping is problematic for anything that is not symmetric.
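To make the flipping argument a bit more concrete, here is a toy sketch in plain Python. It is not how any real trainer works: the "face" is a tiny made-up grid of brightness values, and "training" is approximated by simple averaging, which is purely my assumption for illustration. The helper names (`flip_horizontal`, `average`, `asymmetry`) are hypothetical too.

```python
# Toy illustration only: a "face" is a tiny grid of brightness values,
# and "training" is approximated by plain averaging (an assumption).

def flip_horizontal(image):
    """Mirror each row left-to-right."""
    return [row[::-1] for row in image]

def average(images):
    """Pixel-wise mean of equally sized images."""
    count = len(images)
    rows = len(images[0])
    cols = len(images[0][0])
    return [
        [sum(img[r][c] for img in images) / count for c in range(cols)]
        for r in range(rows)
    ]

def asymmetry(image):
    """Mean absolute difference between an image and its mirror (0 = symmetric)."""
    mirrored = flip_horizontal(image)
    diffs = [
        abs(a - b)
        for row, mirrored_row in zip(image, mirrored)
        for a, b in zip(row, mirrored_row)
    ]
    return sum(diffs) / len(diffs)

# Hypothetical face with a "mole" (the 9) on one side only.
face = [
    [1, 2, 2, 1],
    [1, 9, 2, 1],
    [1, 2, 2, 1],
]

# "Inflating" the dataset by adding the mirrored copy.
augmented = [face, flip_horizontal(face)]
blended = average(augmented)

print(asymmetry(face))     # greater than 0: the original is asymmetric
print(asymmetry(blended))  # 0.0: original plus mirror blends to a symmetric face
```

The mole does not vanish in the blend. It shows up weaker on both sides, which is roughly the "almost right, but off" effect described above.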

Retouched or Polished Images

I think it's fair to claim that images for advertising, magazines, promo shots of celebrities, etc. are tuned and edited to an unrealistic standard of beauty. All impurities are removed, the make-up is digitally post-processed, the figure is slightly adjusted, the skin is soft as silk. This makes faces more and more "perfectly Photoshopped". They don't look like real humans.

Inconsistent Data

This is also pretty simple. What I mean is that, with Emma Watson again as an example, appearances from different ages get mushed together because not everyone tags these age differences when working on datasets for celebrities. So the output for "Emma Watson" is a strange mix of promo shots and movie stills from different ages, mashed together to generate something that looks not like Emma Watson, but like what we think Emma Watson looks like. It is unrealistic and looks fake.
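One possible way to avoid putting everything under a single token is to include the time period and the image source in the caption. The sketch below is only an idea of how that could look. The `ImageRecord` fields, the `build_caption` helper, the five-year buckets and the file names are all my own assumptions, not a feature of any particular training tool.

```python
from dataclasses import dataclass

@dataclass
class ImageRecord:
    path: str
    subject: str
    year: int    # year the photo was taken (assumed to be known)
    source: str  # e.g. "movie", "promo", "candid" (hypothetical labels)

def build_caption(record, bucket_size=5):
    """Build a caption that keeps different age periods under different tags."""
    start = record.year - record.year % bucket_size
    end = start + bucket_size - 1
    return f"{record.subject}, {start}-{end}, {record.source}"

# Hypothetical dataset entries, for illustration only.
records = [
    ImageRecord("img_001.jpg", "Emma Watson", 2001, "movie"),
    ImageRecord("img_002.jpg", "Emma Watson", 2017, "promo"),
]

for record in records:
    print(build_caption(record))
```

With captions like these, a child-actor movie still and a recent promo shot no longer share exactly the same text, so they have less reason to get mashed into one face.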

"AI Incest"

Why training AI models on AI-generated images is a bad thing is a whole other story. For now, we just need to know that it is bad!

Conclusion

I get that most people don't see this "artificial fake beauty" as problematic. But I think that for the future of AI in general, and for photorealistic depictions specifically, it would be better if we adopted somewhat different standards for how we collect and prepare our data. Because through the merging of models, all of these issues come together and can be seen in almost all popular models.