https://huggingface.co/AbstractPhil/clips/tree/main

https://huggingface.co/AbstractPhil/SD35-SIM-V1

If you have problems downloading or running them, you can hunt down the working versions in my repo on Hugging Face. I upload all of them there as well as here.

Operating the T5-Unchained

I believe I forgot to include the token weights layer, so I'll make sure each layer is correctly matching with parity for the next official release; my apologies for this version being imperfect, but the token weights CAN be acquired elsewhere.

This breaks the standard t5 while operating in unchained mode, so keep a copy of your tokenizer and default config.

I have plans for making proper nodes to work with all this stuff on the weekend. I'll be making a full mockup of how they behave and their various linker points for engineering, development, testing, and debugging over the coming months.

The abs-clip-suite will be born this weekend. 4/12/2025-4/14/2025. I have already done a bit of ground work for node layouts but it's not ready for full development yet. This will be an iterative development process building the described nodes in my recent article.

If you're ABSOLUTELY keen on using it before then, you can use it with this process. The not-so-easy process.

This isn't exactly simple yet;

Download the t5 version you want; fp8, fp16, bf16, whatever.

For comfy;

open comfy's entire folder using vscode

press ctrl + shift + f, search for vocab_length, and find 37~~~. It's 30,000-something tokens, so it shouldn't be hard to find using the keyword vocab.

replace all of those configs with the t5xxl-unchained config.

Until my patches or official patches are implemented, this is how it must be done.

find every use of the t5 tokenizer in the various folders and replace them with the tokenizer in the t5xxl-unchained repo.

restart your comfy and run it
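
The search step can also be scripted instead of done by hand in vscode. This is a minimal sketch, assuming the configs are plain JSON files and that the relevant key contains the word vocab. The function name find_vocab_configs is hypothetical, and the script only reports matches; it never edits anything.

```python
import json
from pathlib import Path


def find_vocab_configs(root):
    """Return (path, key, value) for every JSON config under root with a vocab key.

    Assumption: configs are JSON objects and the token count is an integer
    stored under a key whose name contains "vocab".
    """
    hits = []
    for path in sorted(Path(root).rglob("*.json")):
        try:
            data = json.loads(path.read_text(encoding="utf-8"))
        except (OSError, ValueError):
            continue  # unreadable or not valid JSON, skip it
        if not isinstance(data, dict):
            continue
        for key, value in data.items():
            is_count = isinstance(value, int) and not isinstance(value, bool)
            if "vocab" in key.lower() and is_count:
                hits.append((str(path), key, value))
    return hits
```

Point it at the comfy folder, back up every file it lists, and then swap in the t5xxl-unchained config by hand.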

For forge;

Similar to comfy, open it in vscode and ctrl+shift+f all instances of vocab_length, then replace the various config files with the t5-unchained config file.

replace the tokenizers as well; there isn't anything special code-wise that has to happen here, but it's a chore.

restart it, run the unchained.

What are... Clip Experts?

These are finetuned clips, allowing for more robust utilization of their parent models than average clips. They are trained with millions of samples each to additionally provide new data, augment old data, and create careful vectorized trajectories using that new and old data together.

Essentially... they are finetuned with a bunch of images and each is the expert in accessing routes for captions that otherwise would go completely overlooked; particularly when it comes to PLAIN ENGLISH.

What makes them different?

These clips are specifically tuned to the vpred refits of the models; but they don't necessarily conform to ONLY the VPRED models. These clips should merge directly, or indirectly with other clips from models in their same brand pool.

For example; NoobSim is based on the MOSTLY NOOB CLIPS; however, they were merged with the SimV3 SDE clips (Clip L Omega and Clip G omega 24 iterations), prior to partial or full conversion of their parent models to vpred.

I did not FEATURE infuse these clips in the way that I did their coupled UNETS when you get the entire model.

The unets were FULLY FINETUNED with the EXACT SAME DATASET after their infusion was complete. They can be finetuned with the standard methods, as the model left over from the feature injection is a straightforward SDXL model with the student's configuration.

This gives them unique knowledge of both noise types based on a standard additive normalized merge, allowing for unique utilization that otherwise would not happen.
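
For reference, here is a minimal sketch of what an additive normalized merge can look like. It assumes the term means a weighted sum of matching tensors with the weights rescaled to sum to 1; that reading is an assumption, not a confirmed description of how these clips were merged. The function name is hypothetical, and flat lists of floats stand in for real tensors.

```python
def additive_normalized_merge(state_a, state_b, weight_a=0.5, weight_b=0.5):
    """Merge two state dicts as a weighted sum with weights normalized to 1.

    Assumption: both dicts share the same keys and each entry has the same
    length in both. Flat lists of floats stand in for tensors.
    """
    total = weight_a + weight_b
    if total == 0:
        raise ValueError("weights must not sum to zero")
    wa, wb = weight_a / total, weight_b / total
    if state_a.keys() != state_b.keys():
        raise KeyError("state dicts have different keys")
    merged = {}
    for name in state_a:
        a, b = state_a[name], state_b[name]
        if len(a) != len(b):
            raise ValueError(f"shape mismatch for {name}")
        merged[name] = [wa * x + wb * y for x, y in zip(a, b)]
    return merged
```

With equal weights this is a plain average; skewing the weights keeps the result closer to one parent.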

How can these even work how you say?

Yes, it's well known that if you merge pony clips with standard sdxl clips, you get garbage noise on either model, usually resulting in a completely destroyed model.

However in this case, the models were directly infused with features of the other model, not in an additive, norm, subtraction, or whatnot style. I literally trained the models using the direct responses of the teacher model, implanting those direct responses into the student model.

You can see some semi-successful variations of those on my huggingface, but the big boys are already being finetuned as you can see here. They are the heavy hitters, the winners, the cream of the crop, the legitimate powerhouses that won the battle of the feature implantation.

What even is a feature? You keep saying that.

A feature is a mapped trajectory route through the entire model from top to bottom, based entirely on how long it took to get from point A to B, each neuron struck, and why those specific neurons were struck mathematically.

In essence, a feature is the purest form of an algorithm, and them shits are big. Each feature layer capture is at least 2 megs of vram, accumulated upward per layer in an exponential fashion; you end up with nearly 500 terabytes of feature data if you go from point A to B with ONE feature capture.

Welllll we can't fucking do all that can we, that's insane. It's akin to decrypting an image bomb so it won't explode without knowing the response that any of its mechanisms will give, so you cannot possibly capture THE ENTIRE response...

HOWEVER<<< we don't NEED the entire response. We need a trajectory, and we need to pass through each one at a time. So what we do is, during train time, we pass those same trajectories and those responses through the cached parent responses, aka the routes and the expected outcomes, and then we determine the difference between those and the student. This is how we decide what to learn, and what NOT to learn.

FASTER means MORE LEARNED. This doesn't denote accurate however, but we aren't aiming for accuracy here, we are aiming for LEARNING!

So the faster the response, the better the outcome of the test, and therefore the more learned the task and route is.

Faster = better.

Okay, so we implanted these rules based on timings; we extracted sections of the neural net DIRECTLY during train time by using those valuations from the teacher and student to cross-correlate a difference using a simple logarithmic equation that I developed based on inverse cosine and the current timestep accessed.

Now, we determine the difference of those two features one layer at a time, and we accumulate those differences to determine WHICH differences we are to target with our next gradient accumulation trickle.
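
The per-layer comparison can be sketched as below. This uses a generic feature-distillation style difference (mean squared error per layer) swapped in for illustration; it is not the timing-based logarithmic equation mentioned above, which isn't spelled out here. The names layer_differences and top_targets are hypothetical, and flat lists stand in for cached activations.

```python
def layer_differences(teacher_feats, student_feats):
    """Mean squared difference between teacher and student, one layer at a time.

    Both arguments map a layer name to a flat list of activation values.
    """
    diffs = {}
    for layer, t_vals in teacher_feats.items():
        s_vals = student_feats[layer]
        if len(t_vals) != len(s_vals):
            raise ValueError(f"size mismatch at {layer}")
        squared = sum((t - s) ** 2 for t, s in zip(t_vals, s_vals))
        diffs[layer] = squared / len(t_vals)
    return diffs


def top_targets(diffs, k=1):
    """Pick the k layers with the largest difference as training targets."""
    return sorted(diffs, key=diffs.get, reverse=True)[:k]
```

Layers where the student already matches the teacher score near zero and drop out of the target list, which is one simple way to decide what NOT to learn.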

So now that we've identified each piece to train, we pass that directly through our difference mechanism. It hits each neuron one at a time to determine which is faster at which task, and then once it's done poking both machines it learns the difference in a big fat tensor that I call a feature.

What are you EVEN talking about?

Reverse inference. We are accessing what is there by determining how quickly it responds, and then taking the difference between the host model and this model; and then LEARNING THAT DIFFERENCE!

LITERALLY teaching one thing the learned response of the other, DIRECTLY into its neural pathways.

I call it feature grafting.

Okay!?!?!

Let's put it simply, right. If we get the response of 1girl from one model 500 times in a similar neuron, the response of the other model will shift into that position slowly over time, learning the difference between itself and that model.

The attention layers will slowly conform to very specific routes, the dense nets will align, and the hidden layers will conform over time; however we can't simply SHOVE all this in, or you get incredibly incorrect responses. It has to be taught, like teaching a new form of math.
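
The slow shift can be illustrated with a toy example. This is a minimal sketch, assuming a single scalar response and a plain fractional update toward the teacher; the function name, the rate, and the values are all hypothetical, and it is not the actual training loop.

```python
def graft_toward(student, teacher, rate, steps):
    """Move a student value a fraction of the remaining gap toward the teacher each step."""
    for _ in range(steps):
        student += rate * (teacher - student)
    return student


# 500 small nudges close almost all of the gap without ever jumping.
slow = graft_toward(student=0.0, teacher=1.0, rate=0.01, steps=500)

# A rate of 1.0 is the "SHOVE it all in" case: one step and the student
# is overwritten with the teacher's response.
shoved = graft_toward(student=0.0, teacher=1.0, rate=1.0, steps=1)
```

At a rate of 0.01 the remaining gap after 500 steps is 0.99 to the power of 500, which is under 1% of where it started.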

This is a form of merger that is more akin to grafting and implantation than it is to any traditional method.

In its learn rate, it automatically saves SOME. It accumulates that difference at a HIGHER VALUE based on a few factors, and a secondary factor based on noise introduction, similar to a direct inverse of which timestep and which locations are accessed at that time.

This prevents large scale destruction, while still allowing for the necessary features to be slowly implanted.

BASICALLY it learns less than it could, but more than it needs; causing the entire structure around A and B to shift.

It's really hard to describe it without showing the data and math.

Okay so why? Why make these?

You know all those features I was talking about? The algorithms, the routes, the paths? They are all being accumulated into a large pool of features. A massive database that is going to be used to train the difference machine that will allow all these features... to communicate together, using a different and yet well documented methodology based on LLMs. A very well paved road.

So when we teach this attention mechanism all these features, all these routes, and we force it to communicate to those mechanisms in a cross-correlative way using each, we can accumulate what I dub; a mixed diffusion feature model.

So think about it like this: okay, I'm going to ramp off Pony's 5313241341st neuron to Noob's 134134134th neuron, and then the system will automatically assume that you can ramp from that neuron to SDXL's 134314132nd neuron. We can assume that each are simply LINKED now, rather than fully disconnected.

Now, at this point, we can combine all of them into a uniform object system, and trickle call each at the same exact time without disconnects or model barriers. This allows for a single packaged outcome with allllll the feature data captured and all the used neurons implanted into one single model, meant to organize and delegate the others using a series of mathematical sliders based on the proofs and concepts that LLMs are built on. We can assume this will be the first of its kind: a full-fledged mixture of experts using expert models that are already mixtures of experts, trained to withstand a series of different expert expectations.

The final goal here;

We take all 12 clips, we take all 6 sdxl models, and we mash them together like potluck at a picnic with an old boot sticking out of it.

Then we take all that potluck, and smack the smartest AI we can muster right on top like a hat. Likely going to use a form of LLAMA or MIXTRAL itself, hard to say right now, but this thing, when it's ready, will be the first feature diffusion model that has basically been makeshifted out of duct tape and cardboard with toothpicks as a spine.

The attention shift mechanism;

There is a series of proofs based on this concept that I'll need to review. There are certain algorithms that I need to be sure will work how I need them to work; and if they do not, I'll need to write and conform one based on the new need. There is a series of LLM-based attention mechanisms, and the math to go with them, that I need to review and fully understand from top to bottom, alongside the mechanisms for the various AI identification models I'll be including, aka: wd14 large tagger, anime/real, classification, and so on.

Each is meant to accumulate directly with the LLM itself, communicating trajectory information based on the learned pathways and the understanding of the tags on identification. This provides IMG2IMG and ClipVision capability atop the current structure, allowing for a fully conserved identification process based on the learned features, the caption tokens, and the set the system can cross-interpolate between each model.

As it stands it looks like the T5 unchained might be the target. The HunYuan LLAVA is also a top contender, but only because it has a huge vocabulary and is multi-language.

The first version will only have a token vocabulary of nearly... let me see... I think it's around 4 million? So that's pretty good, but I don't think most of them will have the information they need to actually be useful, so that's where the LLM is going to need to condense to maybe less than 60k, otherwise it's going to be absolutely useless noise most of the time.

So there's still quite a few limitations with our current model that I'm working around, but suffice it to say, these are the experts that will be manning the ship.

TF did it do to the files? Looks like the BF and FP value somehow broke... the files? Like it renamed and gutted the config or something. I might need to upload as zips.

So forge can handle them but comfyui has trouble with the ones uploaded to civit. Grab the correct ones from the huggingface if you need them.

https://huggingface.co/AbstractPhil/clips/tree/main

If you have problems you can hunt them down here. I basically dumped all my clips.

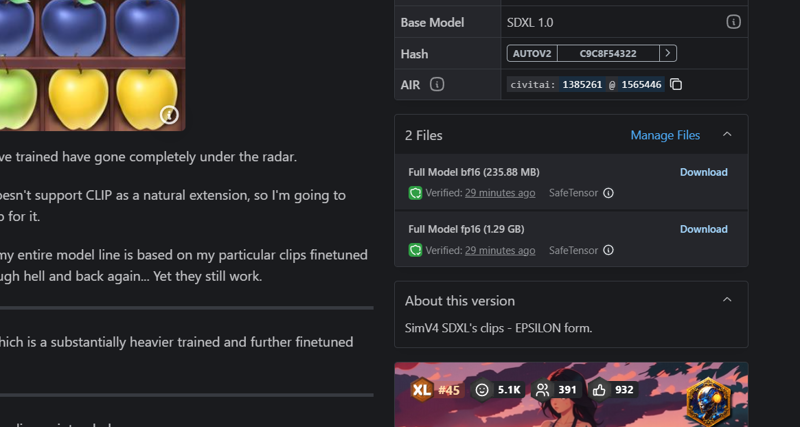

Due to regular requests and my own neglect, many of the clips I've trained have gone completely under the radar.

I don't expect these to have many downloads as the site doesn't support CLIP as a natural extension, so I'm going to simply document and store them here. If you need them go for it.

Multiple uploads have multiple files; smaller one being CLIP_L larger being CLIP_G, and if it's 4+ gigs it's probably a T5.
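
That size rule of thumb can be written down as a quick check. This is a minimal sketch, assuming "4+ gigs" means 4 GiB and that an upload holds exactly two non-T5 files when it contains a CLIP pair; the function name guess_encoders and the file names in the example are hypothetical.

```python
FOUR_GIB = 4 * 1024 ** 3  # assumption: "4+ gigs" read as 4 GiB


def guess_encoders(files):
    """Guess which text encoder each file is from its size alone.

    files: dict mapping file name -> size in bytes.
    Returns a dict mapping file name -> "T5", "CLIP_L", "CLIP_G", or "unknown".
    """
    guesses = {}
    small = {}
    for name, size in files.items():
        if size >= FOUR_GIB:
            guesses[name] = "T5"  # 4+ gigs is probably a T5
        else:
            small[name] = size
    if len(small) == 2:
        # Smaller of the pair is CLIP_L, larger is CLIP_G.
        smaller, larger = sorted(small, key=small.get)
        guesses[smaller] = "CLIP_L"
        guesses[larger] = "CLIP_G"
    else:
        # Size alone can't tell a lone file or a larger group apart.
        for name in small:
            guesses[name] = "unknown"
    return guesses


example = guess_encoders({
    "file_a.safetensors": 250_000_000,
    "file_b.safetensors": 1_400_000_000,
    "file_c.safetensors": 5_000_000_000,
})
```

Treat the result as a hint only; the file contents are what actually decide which encoder it is.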

CLIP_LG are mostly BF16, but I needed to set one to FP16 to get them on the same file list.

APPARENTLY it also gutted the names, so you'll have to rename them to CLIP_L and CLIP_G.

Bleh...

I'll be keeping a log for very specific clip trainings here; as my entire model line is based on my particular clips finetuned from the base CLIP_L and CLIP_G clips that have gone through hell and back again... Yet they still work.

The true (released) successor for Omega24 is 72 Omega, which is a substantially heavier trained and further finetuned version with tens of millions more samples as a pair.

Every major SDXL VPRED finetune has left a big mark on the clips as intended.

Even with VERY LOW LEARN RATE, they have heavily diverged into alternative routes; which I deem clip experts in their own fields.

They can be mixed and merged with other models, but keep in mind they are finetuned specifically for their parent model, so you will get mixed results based on what you're smashing together.