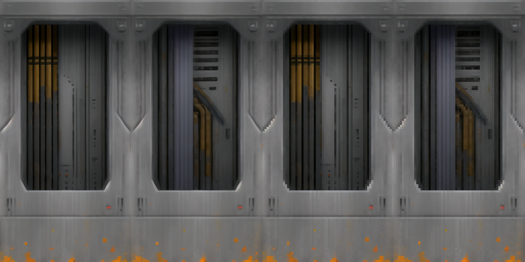

This is a test training on the majority of the Doom 1 and Doom 2 textures. Keep in mind that tan and bronze surfaces (if you're familiar with the internal naming scheme) were all tagged as brown, for variety's sake.

LoRA differences

There are three LoRAs in this subset: doom-archival-v1, doom-v1, and freedoom-v1.

Archival was the first attempt. It used an even smaller dataset, but it is stronger, and with some models (such as RevAnimated) it produces good results at a power of 1.0.

Doom and FreeDoom were trained on Doom and Freedoom textures respectively, using the same tags; in the FreeDoom case, the Freedoom textures serve as stand-ins for the corresponding Doom textures. These two LoRAs came out a bit weaker, so for better results with strongly stylized checkpoints (such as RevAnimated) you'll need a power between 1 and 1.5.

Before training, all textures were upscaled 2x with the 2x fakefaith ESRGAN model and then 2x again with a Mitchell filter, for 4x in total.

Also, the doom and freedoom LoRAs were trained without any switch images, and their dataset was prepared with 512x512 images only: 128x128 textures were just upscaled, 64x64 textures were tiled four times (2x2), and 64x128 textures were tiled twice horizontally.
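The sizing arithmetic above can be sketched in Python. This is a minimal sketch, not the author's actual script: it assumes the combined 4x upscale (2x ESRGAN plus 2x Mitchell) happens before tiling, treats sizes as width x height, and represents an image as a plain list of pixel rows so it runs without any imaging library. The names `tile_counts` and `tile` are hypothetical.

```python
# Hypothetical reconstruction of the dataset prep described above.
# Assumption: the 4x upscale (2x ESRGAN, then 2x Mitchell) is applied before tiling.
TARGET = 512   # every training image is 512x512
UPSCALE = 4    # 2x ESRGAN pass followed by a 2x Mitchell pass


def tile_counts(width, height, target=TARGET, scale=UPSCALE):
    """Return (horizontal, vertical) repeats needed to fill target x target after upscaling."""
    w, h = width * scale, height * scale
    if target % w or target % h:
        raise ValueError(f"{width}x{height} does not tile evenly into {target}x{target}")
    return target // w, target // h


def tile(pixels, nx, ny):
    """Repeat a row-major image nx times horizontally and ny times vertically."""
    return [row * nx for _ in range(ny) for row in pixels]


if __name__ == "__main__":
    for size in [(128, 128), (64, 64), (64, 128)]:
        print(size, "->", tile_counts(*size))
```

For the three texture sizes this yields (1, 1), (2, 2), and (2, 1), which matches "just upscaled", "tiled four times", and "tiled twice horizontally".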

Usage

These LoRAs are obviously not meant for direct use, because the generated textures come out mostly blurry. Instead, the idea is to permute them and downscale them, and probably apply a palette. Here are some quick examples: the original, the version downscaled via nearest neighbor, and that version upscaled back up.
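A rough sketch of the downscale, palette, and upscale-back steps in plain Python. It assumes images are row-major lists of (r, g, b) tuples, a nearest-neighbor mapping that samples the top-left source pixel of each block, and a downscale factor of 4 (512 back to a Doom-scale 128); `nearest_resize`, `apply_palette`, and `postprocess` are illustrative names, and any palette you pass in (for example one extracted from the game) is up to you.

```python
# Sketch of the post-processing described above: nearest-neighbor downscale,
# palette snap, then nearest-neighbor upscale for previewing.
# Images are lists of rows of (r, g, b) tuples; the palette is supplied by the caller.

def nearest_resize(pixels, new_w, new_h):
    """Nearest-neighbor resize: each output pixel copies one source pixel."""
    h, w = len(pixels), len(pixels[0])
    return [
        [pixels[y * h // new_h][x * w // new_w] for x in range(new_w)]
        for y in range(new_h)
    ]


def apply_palette(pixels, palette):
    """Replace every pixel with the closest palette color (squared RGB distance)."""
    def closest(color):
        return min(palette, key=lambda p: sum((a - b) ** 2 for a, b in zip(color, p)))
    return [[closest(c) for c in row] for row in pixels]


def postprocess(generated, palette, factor=4):
    """Downscale by `factor`, snap to the palette, and scale back to the original size."""
    h, w = len(generated), len(generated[0])
    low = apply_palette(nearest_resize(generated, w // factor, h // factor), palette)
    return low, nearest_resize(low, w, h)
```

The low-resolution result is the usable texture; the upscaled copy only exists to preview it with hard pixel edges. With a real imaging library the resizes would typically be Pillow's `Image.resize` using `Image.Resampling.NEAREST`.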

Usage rights

The freedoom LoRA can be used to generate images for commercial use; just don't forget to chuck the BSD license in alongside whatever you're using them in, as required by the terms of Freedoom's BSD license. The other two are strictly noncommercial, to comply with id Software's licenses.

Prompting help

The tagging scheme I used is written like a photo caption, so an example of a tag used for training is "brown wall with tech stuff and a switch". Take that for what you will, and keep it in mind, because I have no idea how the tokenizer split it up.
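If you run these LoRAs through AUTOMATIC1111's web UI (an assumption; other front ends use their own syntax), a caption-style prompt followed by a `<lora:name:weight>` tag is the usual shape. The helper below is hypothetical: it assumes the LoRA files are named exactly as listed in LoRA differences, and its default weights follow the notes there, with 1.25 for doom-v1 and freedoom-v1 being an assumed midpoint of the suggested 1 to 1.5 range rather than a tested value.

```python
# Hypothetical prompt builder for AUTOMATIC1111-style <lora:name:weight> tags.
# Default weights follow the notes above; 1.25 is an assumed midpoint of 1 to 1.5.
SUGGESTED_WEIGHTS = {
    "doom-archival-v1": 1.0,
    "doom-v1": 1.25,
    "freedoom-v1": 1.25,
}


def lora_prompt(description, lora, weight=None):
    """Append a <lora:name:weight> tag to a caption-like prompt."""
    if lora not in SUGGESTED_WEIGHTS:
        raise KeyError(f"unknown LoRA: {lora}")
    if weight is None:
        weight = SUGGESTED_WEIGHTS[lora]
    return f"{description}, <lora:{lora}:{weight:g}>"


if __name__ == "__main__":
    print(lora_prompt("brown wall with tech stuff and a switch", "doom-archival-v1"))
```

Writing the description in the same caption style as the training tags is the main point; the weight is just a starting value to tune per checkpoint.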