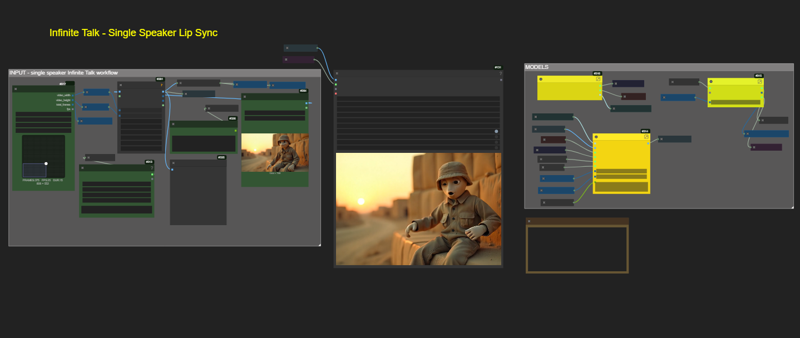

Switched over to the InfiniteTalk model.

Please note: this version uses subgraphs, so update your UI if necessary.

No spaghetti nightmare, unlike some workflows.

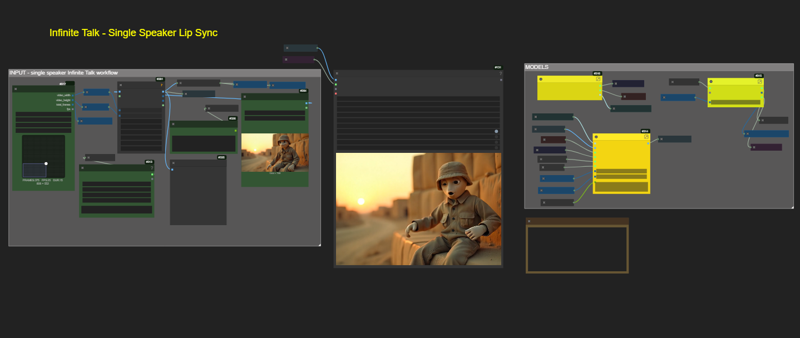
