The FLUX.1 [dev] Model is licensed by Black Forest Labs, Inc. under the FLUX.1 [dev] Non-Commercial License. Copyright Black Forest Labs, Inc.

IN NO EVENT SHALL BLACK FOREST LABS, INC. BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH USE OF THIS MODEL.

28.01.2026 v.6.3: Fast Z-Image fix (RES6LYF sampler replaced with the standard KSampler)

!!Please update Timesaver and RES6LYF nodes!!

This workflow supports:

Z-Image, Wan, Flux, Flux Kontext, Flux 2, Flux 2 Klein, Qwen Image, Qwen Image Edit, SDXL/Pony, Chroma and Lumina-Image 2.0

txt2img, img2img, Inpaint functionality.

SeedVR2 upscaler

ControlNet for txt2img, img2img and inpainting in model patch mode (Z-Image, Qwen, Wan). It is enabled only for the first half-1 steps.

Outpainting in Edit/Kontext mode

safetensor, gguf and svdq (Nunchaku) checkpoints

Powerful multi Lora loader (Lora manager)

LLM to describe images and enhance prompts

Face Detailer

Multi- or single-image loading for Edit/Kontext

New in 6.2: Updated Timesaver node. It now works with single-file Qwen safetensors models.

New node: https://github.com/naku-yh/ComfyUI_Flux2ImageReference

Each model has its own LoRA loader, so its settings are kept when you switch between models.

Use Linear\Euler Normal with 8 steps for Z-Image, and 10-12 steps for Z-Image with ControlNet.
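The feature list says ControlNet is enabled only for the first half-1 steps. A minimal sketch of that arithmetic follows, assuming it means "half of the total steps, minus one" with integer division. The function name `controlnet_active_steps` is hypothetical and is not part of the workflow, which may round differently.

```python
def controlnet_active_steps(total_steps):
    """Return how many initial steps keep ControlNet on.

    Assumption: "first half-1 steps" is read as total_steps // 2 - 1.
    """
    if total_steps < 1:
        raise ValueError("total_steps must be at least 1")
    # Clamp at zero so very short schedules do not produce a negative count.
    return max(0, total_steps // 2 - 1)


# Step counts recommended for Z-Image with ControlNet.
for steps in (10, 12):
    print(steps, "steps: ControlNet on for the first", controlnet_active_steps(steps))
```

Under this reading, a 10-step run applies ControlNet to 4 steps and a 12-step run to 5, which may be why the ControlNet recommendation is a few steps higher than the plain 8.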

You can turn blocks on or off using the red switch in the center of the workflow.

The latest update of the Timesaver nodes fixed the installation issues, so the LLM node is back to TS_Qwen3 (you can delete the LLM group if you don't plan to use it).

https://github.com/AlexYez/comfyui-timesaver

TS_Qwen3_Node node can describe images, translate prompts and enhance prompts.

==========old versions==========

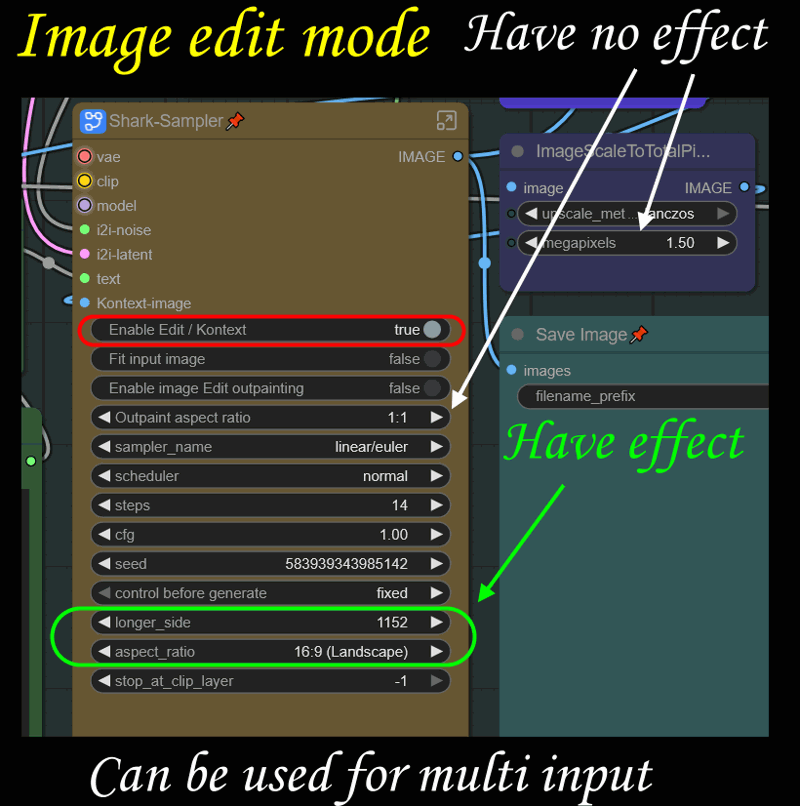

There are 3 switches:

1. Base edit mode can be used to edit images with manual output dimensions or multi-image input.

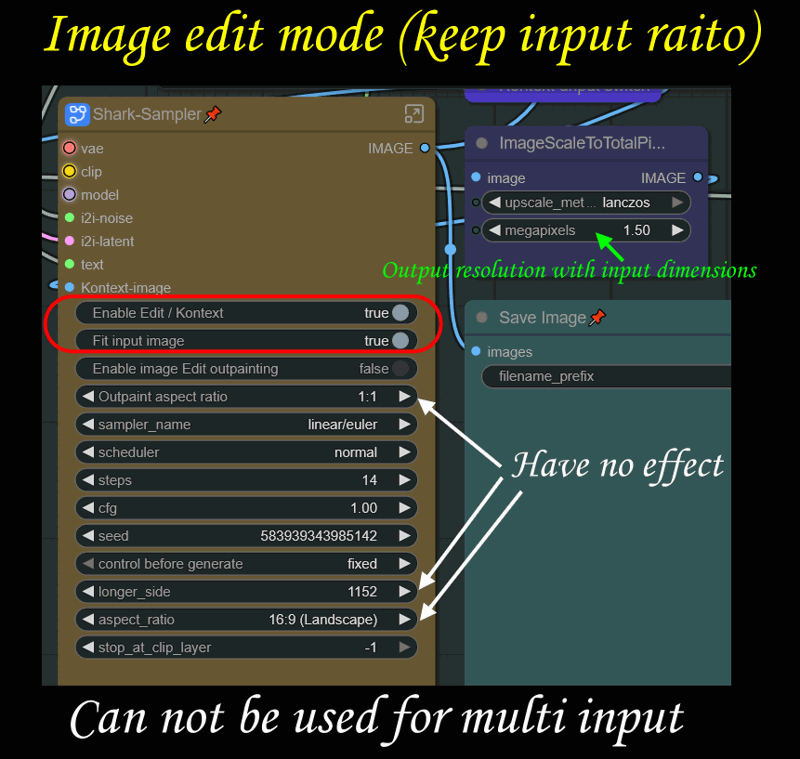

2. Keep aspect ratio mode can be used only for single-image inputs.

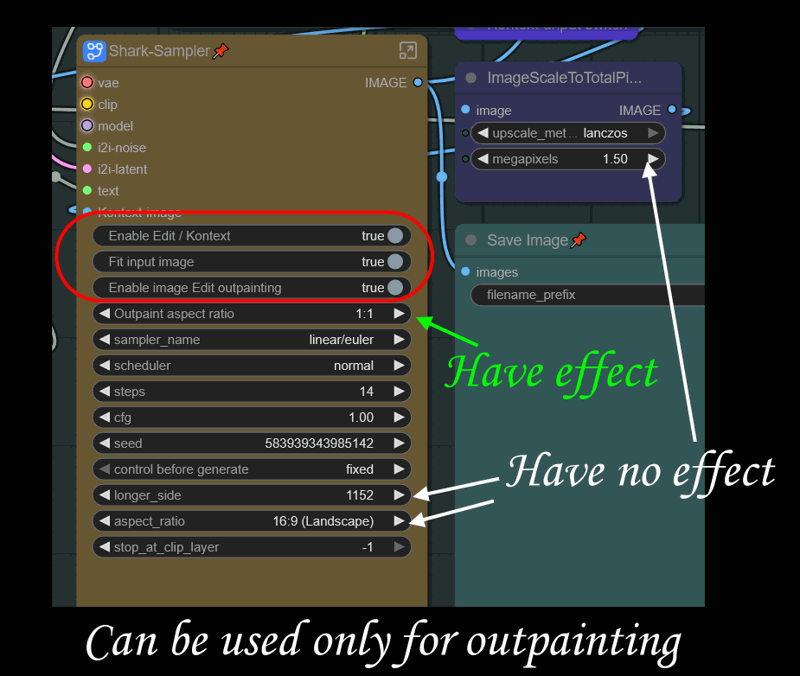

3. Outpainting mode

Due to difficulties with installing the Qwen_3 LLM node dependencies, it has been replaced with ComfyUI_VLM_nodes, which can be installed directly from the manager. At the moment, the LLM is used for describing images. The node supports many models, but my favorite option is using

Llama-Joycaption-Beta-One-Hf-Llava-Q8_0.gguf as a model and

llama-joycaption-beta-one-llava-mmproj-model-f16.gguf as clip

Both files must be located in the models/LLavacheckpoints folder.
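To confirm that both files are where the node expects them, a small check along these lines may help. It is only a sketch: the helper name `missing_joycaption_files` is hypothetical, and it assumes models/LLavacheckpoints sits directly under the ComfyUI root folder you pass in.

```python
from pathlib import Path

# File names taken from the text above.
REQUIRED_FILES = (
    "Llama-Joycaption-Beta-One-Hf-Llava-Q8_0.gguf",
    "llama-joycaption-beta-one-llava-mmproj-model-f16.gguf",
)


def missing_joycaption_files(comfyui_root):
    """Return the required file names not found in models/LLavacheckpoints."""
    # Assumption: the folder lives directly under the ComfyUI root.
    folder = Path(comfyui_root) / "models" / "LLavacheckpoints"
    return [name for name in REQUIRED_FILES if not (folder / name).is_file()]
```

Calling `missing_joycaption_files(r"C:\ComfyUI")` (the path is an example) returns an empty list when both files are present.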

==========old versions==========

There is also a built-in LLM node (you can delete the LLM group if you don't plan to use it).

https://github.com/AlexYez/comfyui-timesaver

TS_Qwen3_Node node can describe images, translate prompts and enhance prompts.

If your operating system is Windows and you can't install the Qwen3_Node dependencies (for example, because no compiler is installed), try downloading the .whl file from

https://github.com/boneylizard/llama-cpp-python-cu128-gemma3/releases

Then close ComfyUI, open the python_embeded folder, type cmd in the address bar, and run the following command:

.\python.exe -I -m pip install "path to downloaded.whl file"

After installing, start ComfyUI and install the missing custom nodes as usual.

Edit: If the .whl install fails, check your Python version and make sure the .whl was built for that version. If it still fails, try opening the .whl as an archive and extracting all folders from it into the python_embeded\Lib\site-packages folder.
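One way to check whether a .whl was built for your Python version is to read the Python tag from its file name (for example cp312 for CPython 3.12). The sketch below does only that. The function names are hypothetical, the file name in the usage note is made up for illustration, and the ABI and platform tags are ignored, so a match here does not guarantee that the install will succeed.

```python
import sys


def wheel_python_tags(wheel_filename):
    """Return the Python tags encoded in a wheel file name, e.g. ['cp312']."""
    # Drop any directory part, accepting both / and \ separators.
    name = wheel_filename.rsplit("/", 1)[-1].rsplit("\\", 1)[-1]
    if not name.endswith(".whl"):
        raise ValueError("not a .whl file name: " + name)
    # Wheel names look like: name-version[-build]-python-abi-platform.whl
    parts = name[:-4].split("-")
    if len(parts) < 5:
        raise ValueError("unexpected wheel file name: " + name)
    return parts[-3].split(".")


def wheel_matches_interpreter(wheel_filename, version_info=None):
    """Return True if the wheel's Python tag fits the given Python version."""
    major, minor = (version_info or sys.version_info)[:2]
    # Simplification: only exact CPython tags and generic pure-Python tags.
    accepted = {f"cp{major}{minor}", f"py{major}", f"py{major}{minor}"}
    return any(tag in accepted for tag in wheel_python_tags(wheel_filename))
```

Save it as a file and run it with the embedded interpreter (.\python.exe from the python_embeded folder) so that `sys.version_info` reflects the Python that ComfyUI actually uses, for example `wheel_matches_interpreter("llama_cpp_python-0.3.8-cp312-cp312-win_amd64.whl")`.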