This is just a small, simple script that calculates buckets and downscales images to their biggest compatible bucket. FOR DATASET TESTING PURPOSES ONLY. I DO NOT RECOMMEND USING IT FOR TRAINING... unless you are trying to bypass upload size limits!

Also, it is probably buggy, as I don't normally write PowerShell.

I have updated this to V2, which sorts the original images into buckets in addition to making the previews. V3 added cropping, and V4 added selectable alignment for cropping.

V4:

Added options for up/down/center and left/right/center alignment for crop and fill. Just type U, D or C for up, down or center, and L, R or C for left, right or center.

The behavior is: left alignment aligns the start of the image to the left edge, cropping the right part or filling the right side with white.
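To make the alignment rule a bit more concrete, here is a minimal sketch in Python (not the PowerShell the script is written in). It assumes the same offset rule is used for both crop and fill, and that the other letters simply mirror the left-alignment behavior described above; `start_offset` and `extra` are names made up for this example.

```python
def start_offset(extra, align):
    """Return the pixel offset along one axis for an alignment letter.

    `extra` is the absolute size difference between image and bucket on
    that axis. When filling, the image is pasted at this offset; when
    cropping, the crop window starts at this offset.
    (Hypothetical helper, not taken from the script.)
    """
    align = align.upper()
    if align in ("L", "U"):   # keep the start of the image
        return 0
    if align == "C":          # split the difference evenly
        return extra // 2
    if align in ("R", "D"):   # keep the end of the image
        return extra
    raise ValueError(f"unknown alignment: {align!r}")


# Fill: image is 576 px wide in a 640 px bucket, so 64 px of white to place.
print(start_offset(64, "L"), start_offset(64, "C"), start_offset(64, "R"))

# Crop: image is 672 px tall in a 576 px bucket, so 96 px to cut away.
print(start_offset(96, "U"), start_offset(96, "C"), start_offset(96, "D"))
```

With `L` the offset is zero, so everything that gets cropped or filled ends up on the right, which matches the left-alignment description.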

V3:

Fixed some bugs and added a centered cropping option, which crops the largest side of the generated test image so that it perfectly fits its biggest compatible bucket while cropping as little of the image as possible.
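As a rough illustration of the centered crop, the sketch below (Python, not the script's own PowerShell) scales the image until it fully covers the bucket and then trims the overflow evenly from both ends. It assumes the crop is applied to whichever side overflows after scaling; `cover_and_crop` is a hypothetical name.

```python
def cover_and_crop(width, height, bucket_w, bucket_h):
    """Scale so the image covers the bucket, then crop the overflow centered.

    Returns (scaled_size, crop_box) where crop_box is
    (left, top, right, bottom) in scaled-image pixels.
    """
    # The larger of the two ratios guarantees both sides reach the bucket.
    scale = max(bucket_w / width, bucket_h / height)
    scaled_w = max(bucket_w, round(width * scale))
    scaled_h = max(bucket_h, round(height * scale))
    # Only one axis overflows; the other offset comes out as zero.
    left = (scaled_w - bucket_w) // 2
    top = (scaled_h - bucket_h) // 2
    return (scaled_w, scaled_h), (left, top, left + bucket_w, top + bucket_h)


# A 1000x1500 image going into a 448x576 bucket.
scaled, box = cover_and_crop(1000, 1500, 448, 576)
print(scaled, box)
```

Using the larger scale factor is what keeps the crop minimal: one side lands exactly on the bucket edge and only the other side loses pixels.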

With this you can theoretically use the downscaled images for training, but I would still recommend reviewing/sharpening/filtering them before doing so.

Remember to keep your original full-size dataset!

V2:

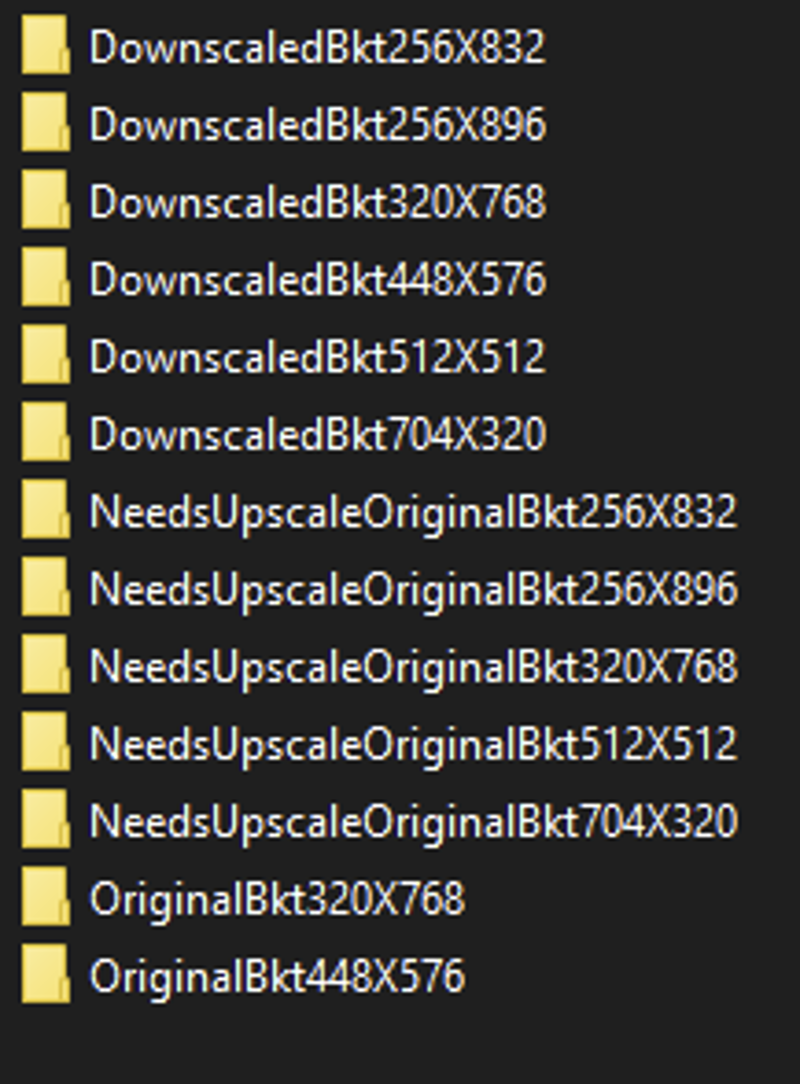

Some people asked If the script could sort the originals into their expected buckets, So I added an output folder which will create 3 types of sub folders, the images downscaled(or upscaled crappily) to their bucket, the originals sorted to their bucket and the originals which need upscaling to properly fill their bucket. Remember the downscaled images are for preview only don't use them to train.

Should look like this:

If your images are not PNGs, the original files will be ignored and a PNG copy will be created and used instead. If your original is already a PNG, it will be moved.
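The three-way sort can be sketched roughly like this in Python (not the script's PowerShell). It assumes an original "needs upscaling" when it is smaller than its bucket on both axes after fitting; the folder names `previews`, `originals` and `needs_upscale` are placeholders invented for this example, not the names the script actually creates.

```python
from pathlib import PurePosixPath


def needs_upscale(width, height, bucket):
    """True if the image must be enlarged to reach its bucket."""
    bucket_w, bucket_h = bucket
    return min(bucket_w / width, bucket_h / height) > 1


def destinations(name, width, height, bucket, out_root="output"):
    """Return hypothetical output paths for the preview and the original."""
    label = f"{bucket[0]}x{bucket[1]}"
    root = PurePosixPath(out_root)
    stem = PurePosixPath(name).stem
    kind = "needs_upscale" if needs_upscale(width, height, bucket) else "originals"
    return {
        # Previews are always written as PNG.
        "preview": root / "previews" / label / f"{stem}.png",
        "original": root / kind / label / name,
    }


print(destinations("small.jpg", 300, 400, (448, 576)))
print(destinations("big.png", 1000, 1500, (384, 640)))
```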

V1:

The purpose of this script is to validate dataset images by checking that they don't lose too much detail in the downscaling process. It is best to keep the images as high-res as possible so they can be reused in the future; there is no need to use the downscaled images. You won't get any benefit in training from doing so.

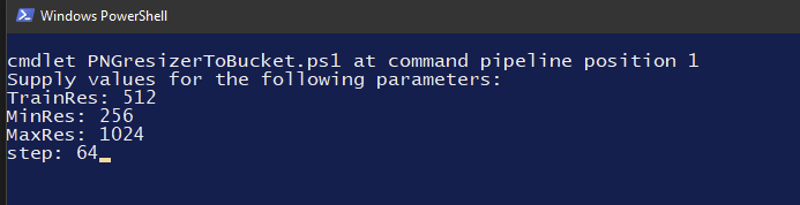

The script asks for your bucketing parameters. In LoRA Easy Training Scripts (I am not sure about the kohya-ss ones), the defaults are:

For SD1.5:

Training res: 512

Min res: 256

Max res: 1024

Step: 64

For SDXL (might vary):

Training res: 1024

Min res: 512

Max res: 2048

Step: 128

Step: 128
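To show how these four values turn into a list of buckets, here is a small Python sketch. It follows one common scheme (every width from min to max in steps, paired with the tallest height that keeps the area within training res squared); that scheme is an assumption on my part and may not match exactly what the script or your trainer does. `make_buckets` is a made-up name.

```python
import math


def make_buckets(train_res, min_res, max_res, step):
    """Return sorted (width, height) buckets with area <= train_res ** 2."""
    max_area = train_res * train_res
    buckets = set()
    # Square bucket at the training resolution, rounded down to the step.
    side = (math.isqrt(max_area) // step) * step
    buckets.add((side, side))
    width = min_res
    while width <= max_res:
        # Tallest multiple of `step` that keeps width * height in budget.
        height = min(max_res, (max_area // width) // step * step)
        if height >= min_res:
            buckets.add((width, height))
            buckets.add((height, width))  # mirrored orientation
        width += step
    return sorted(buckets)


sd15 = make_buckets(512, 256, 1024, 64)
sdxl = make_buckets(1024, 512, 2048, 128)
print(sd15)
print(sdxl)
```

Every bucket side is a multiple of the step, and no bucket has more pixels than a square at the training resolution.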

The script is recursive and will pick up all images in the subfolders. I also forgot to remove the conversion part, so it will take most types of images and spit out PNGs.

Anyway, the script works by calculating the biggest available bucket for the image's width-to-height ratio, then downscaling and centering the image, and finally filling any remaining space with white.

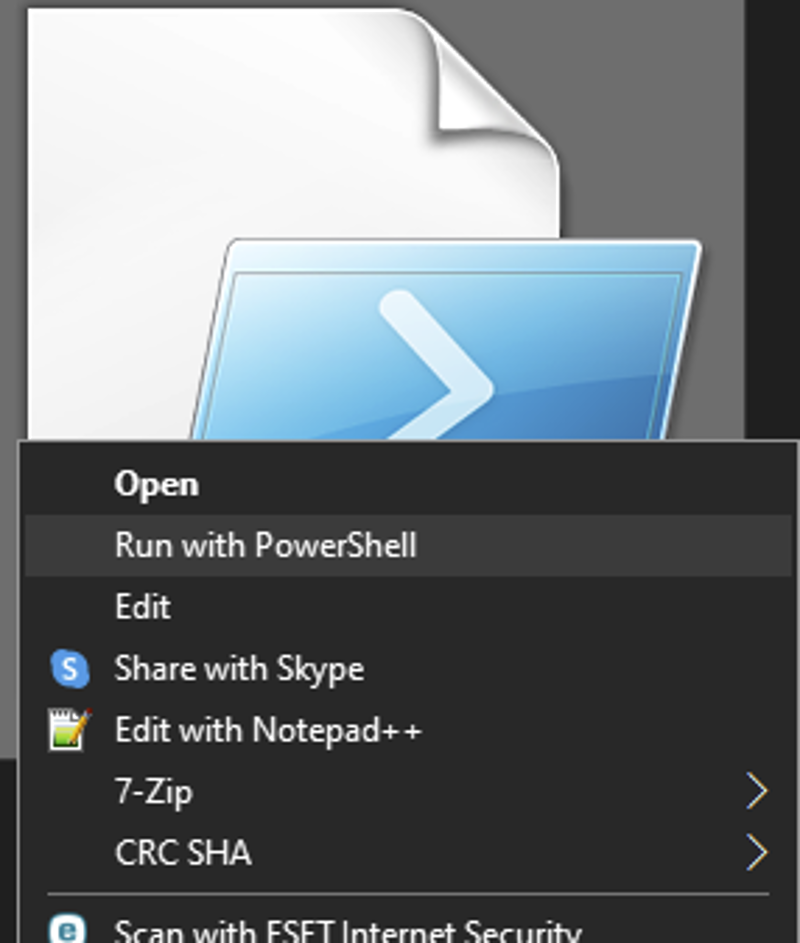
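The bucket selection and centering described above could look roughly like this in Python. This is only a sketch under assumptions: it picks the bucket with the closest aspect ratio (preferring the larger area on ties), which may differ from how the script actually chooses, and the names `pick_bucket` and `fit_and_center` are invented for the example.

```python
def pick_bucket(width, height, buckets):
    """Pick the bucket whose aspect ratio is closest to the image's."""
    ratio = width / height
    # Sort key: smallest ratio error first, then largest area.
    return min(buckets, key=lambda b: (abs(b[0] / b[1] - ratio), -(b[0] * b[1])))


def fit_and_center(width, height, bucket):
    """Scale the image to fit inside the bucket; return (size, paste_offset)."""
    bucket_w, bucket_h = bucket
    # The smaller ratio keeps the whole image inside the bucket.
    # Note: for images smaller than the bucket this scale is above 1.
    scale = min(bucket_w / width, bucket_h / height)
    new_w = min(bucket_w, max(1, round(width * scale)))
    new_h = min(bucket_h, max(1, round(height * scale)))
    # Leftover space is split evenly; that area would be filled with white.
    offset = ((bucket_w - new_w) // 2, (bucket_h - new_h) // 2)
    return (new_w, new_h), offset


# A short hand-written bucket list for the example.
buckets = [(384, 640), (448, 576), (512, 512), (576, 448), (640, 384)]
bucket = pick_bucket(1000, 1500, buckets)
size, offset = fit_and_center(1000, 1500, bucket)
print(bucket, size, offset)
```

For a 1000x1500 image this lands in the 384x640 bucket, scaled to 384x576 with a 32 px white band above and below.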

Steps to use:

1. Dump the script in a folder.
2. Dump a folder with your images inside that folder.
3. Right-click the PowerShell script and choose to execute it (on Windows the menu entry is usually "Run with PowerShell").
4. Type each required value, pressing Enter after each one.
5. Press Enter, and a new resized copy will be created for every image in any subfolder.

Check the images for quality. If any image looks bad or has lost too much detail, go to the original and either crop it or remove it from the dataset. Remember: every empty space, every unnecessary element, every bit of white background is wasted pixels that could be used for extra face details of your character. Be especially careful with full-body images, as those are especially prone to losing detail in the downscale.

When you finish, simply delete the downscaled images; you shouldn't use them to train if you have perfectly fine high-res images to begin with.

NOTE: I hope it doesn't need extra permissions, but my environment is rather tweaked, so it might ask for them.