Anime Diffusion

Introduction

This model aims to change the monotonic style of previous anime SD models and provides some suggestions that may be beneficial for further fine-tuning.

Usage Details

You can download two different models:

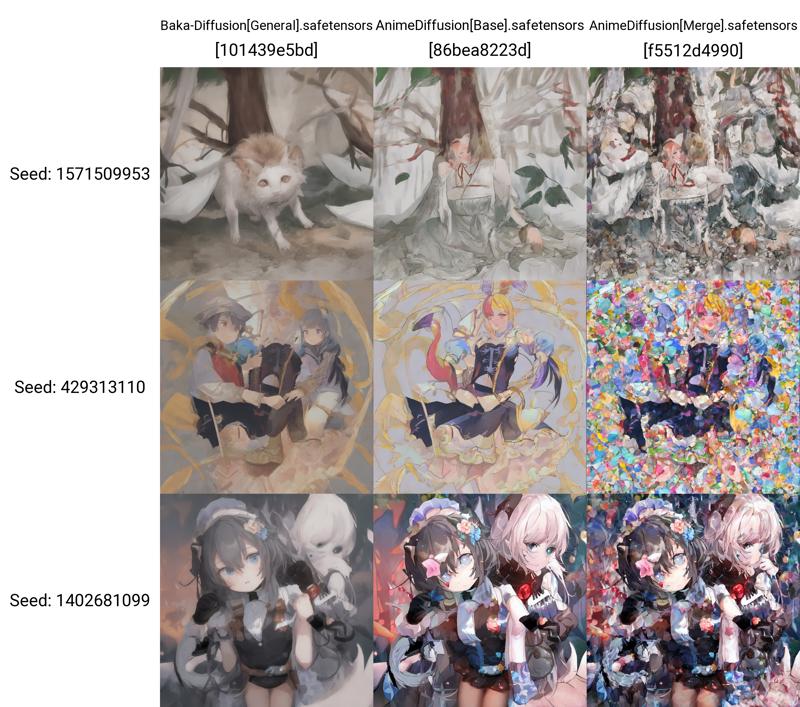

AnimeDiffusion[Base] (Pruned Model fp16): Fine-tuning model without model-merge. This model can generate images with lower quality but richer diversity.

AnimeDiffusion[Merge] (Full Model fp16): A merged model that merge AnimeDiffusion[Base] and Aurora. This model can generate higher quality images but has lower diversity.

This model supports Danbooru tags as prompt to generate images, such as:

Prompt:

masterpiece, best quality, 1girl, solo, ...Negative Prompt:

lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry

Training Details

AnimeDiffusion[Base] is a fine-tuning model that is trained with 430K-730K danbooru2022 images. Based on Baka-Diffusion[General], I first trained this model on 730K images for 5 epochs, then filtered the images with Cafe-aesthetic to obtain relatively high-quality images (430K) and trained it for 3 epochs.

Here are some important training hyperparameters:

noise_offset = 0.05This hyperparameter may help the model to generate darker or brighter images (e.g.white background).0.05is also used for the training of Stable Diffusion XL. (Detail)caption_dropout_rate = 0.2As we all know, Stable Diffusion is a Text-to-Image model, which is based on Classifier-Free Guidance (CFG) for condition generation. In the CFG approach, the diffusion model is trained both unconditionally and conditionally, which means that the model is capable of both unconditional and conditional generation. This formula shows how CFG is implemented:noise_pred = noise_pred_uncond + CFC_Scale * (noise_pred_cond - noise_pred_uncond), wherenoise_pred_uncondis the predicted noise when there is the null prompt (i.e. unconditional), andnoise_pred_condis the predicted noise when we provide a specific prompt (i.e. conditional). According to the CFG paper,caption_dropout_rate = 0.2 or 0.1is recommended. However, in the most of fine-tuning practices, this training strategy is never mentioned. Due to a number of issues, I am not currently doing more experiments on this, and would also appreciate active discussion on this point. (Detail)

Model-merge Guidance

Understanding how to do model-merge helps us to improve the performance of our models in specific ways.

SD contains a U-Net which consists of Convolutional layers and Transformer blocks. U-Net can be viewed as an Autoencoder that continuously downsamples a picture to reduce the resolution (64x64 -> 32x32 -> 16x16 -> 8x8) to obtain abstract semantic information about the images, and subsequently upsamples (8x8 -> 16x16 -> 32x32 -> 64x64) to recover the image from this abstract semantic information.

Overall, the blocks closer to the input and the output part of the U-Net (high resolution) are responsible for the high-frequency detailed information of the images, while the blocks in the middle (low resolution) are responsible for the low-frequency semantic information of the images. When we want to change the details of the image (e.g. texture or edges), we should prioritize changing the high-resolution blocks. When we want to change the overall image (e.g. character or poses), we should prioritize changing the low-resolution blocks.

AnimeDiffusion[Merge] is a merged model which is replaced with Aurora for the high-resolution blocks to improve the quality of images.

high-res_replace:

0,0,1,1,0.67,0.67,0.33,0.33,0,0,0,0,0,0,0,0,0,0,0,0.33,0.33,0.67,0.67,1,1,0low-res_replace :

0,0,0,0,0,0,0,0.33,0.33,0.67,0.67,1,1,1,1,1,0.67,0.67,0.33,0.33,0,0,0,0,0,0

Acknowledgements

[Danbooru2022 Datesets]: A dataset contains about 4M+ images.

[Baka-Diffusion]: The basic model used for fine-tuning.

[Aurora]: The model used for model-merge.

[Cafe-aesthetic]: Used to further filter out low-quality and non waifu images.

[SuperMerger]: Very useful for model-merge.