Odinson-SDXL

+ vid2vid

+ OpenPoseXL2

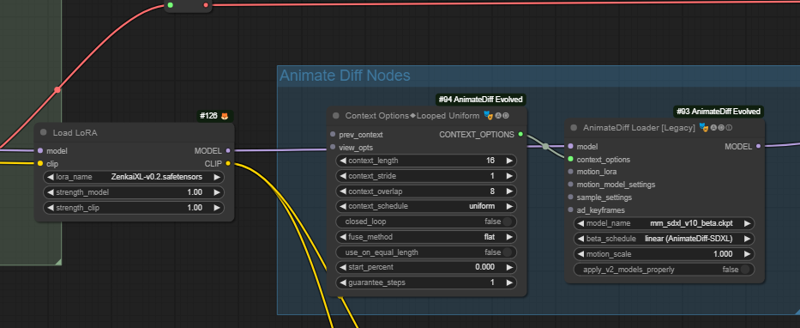

+ animateDiff XL

+ Lora loader

(default strength is 1.0; adjust this to taste)

Versions:

V9 - initial release (used OpenposeXL by default, slow)

V10 - desaturated input (used with Lora Sketch XL, fast)

V11 - same as V10, but interpolation now runs after the KSampler (fast)

Use ComfyUI Manager to install missing nodes and models.

you will need:

Any SDXL Checkpoint

Any SDXL Lora

Any SDXL ControlNets

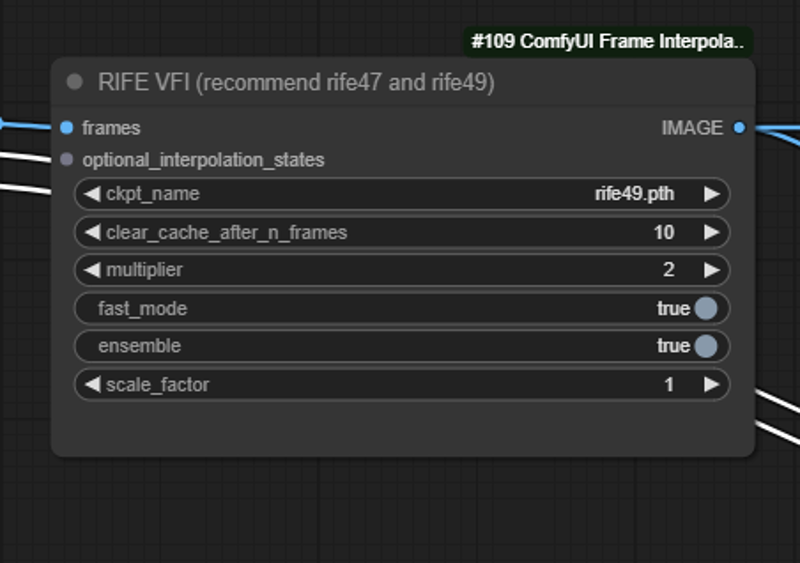

Rife49.pth (comfyui frame interpolation)

ip-adapter_sdxl_vit-h.safetensors (comfyui ipadapter plus)

CLIP-VIT-H-14-laion2B-s32B-b79k.safetensors (CLIP Vision)

mm_sdxl_v10_beta.ckpt (animateDiff evolved)

check the screenshots below!

At 1024, expect ~22GB VRAM.

At 768, expect ~12GB VRAM.

Reduce the dimensions to lower VRAM use.
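
As a rough sanity check on those numbers: going from 1024 to 768 per side cuts the pixels per frame to about 56%, which is in line with the drop from ~22GB to ~12GB. The sketch below only compares pixel counts for square frames. It is not a VRAM predictor, and `pixel_ratio` is a hypothetical helper, not part of the workflow.

```python
def pixel_ratio(new_side: int, old_side: int = 1024) -> float:
    """Ratio of pixels per frame between two square resolutions."""
    return (new_side * new_side) / (old_side * old_side)


# 768x768 has 56.25% of the pixels of 1024x1024
print(f"{pixel_ratio(768):.2f}")  # 0.56
```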

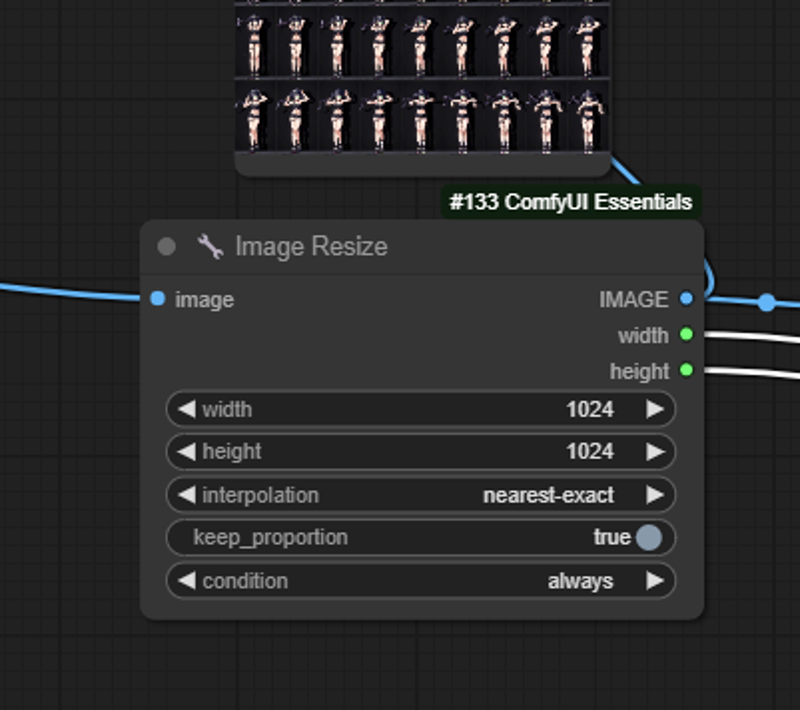

Be careful with your video size! This example is 1024x1024 (large).

Pick the video input and set the frame range

Check the maximum size for the longest side (clamp)

Check the frame interpolation multiplier (2 = double the input frames)

Select the ControlNet (OpenPoseXL2 shown)

Check IP Adapter settings and load style image

Set final resolution here (use 1024 for no upscale)

Set the final framerate

Load the LoRA and check the AnimateDiff settings (should be the same)

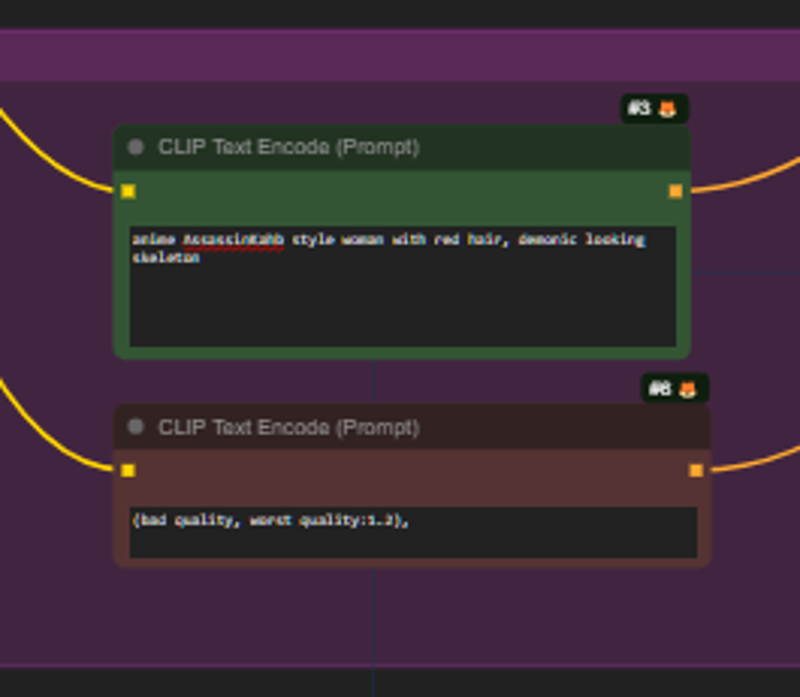

Finally, set your prompt and start the generation.
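
To get a feel for the clamp and interpolation settings before queuing a run, the arithmetic can be sketched in a few lines of Python. This is a minimal illustration, not the code the workflow nodes actually run. The helper names are hypothetical, rounding down to a multiple of 8 is an assumption, and the frame count simply follows the "2 = double input frames" rule stated above.

```python
def clamp_longest_side(width: int, height: int, max_side: int,
                       multiple: int = 8) -> tuple[int, int]:
    """Scale down so the longest side is <= max_side, keeping aspect ratio.

    Assumption: dimensions are rounded down to a multiple of 8.
    """
    longest = max(width, height)
    if longest > max_side:
        # Integer arithmetic avoids floating-point rounding surprises
        width = width * max_side // longest
        height = height * max_side // longest
    width = max(multiple, width // multiple * multiple)
    height = max(multiple, height // multiple * multiple)
    return width, height


def interpolated_frames(input_frames: int, multiplier: int) -> int:
    """Frame count after interpolation (2 = double the input frames)."""
    return input_frames * multiplier


def clip_seconds(frames: int, fps: float) -> float:
    """Playback length at the chosen final framerate."""
    return frames / fps


print(clamp_longest_side(1920, 1080, 1024))  # (1024, 576)
frames = interpolated_frames(48, 2)
print(frames, clip_seconds(frames, 24))      # 96 4.0
```

For example, a 1920x1080 source clamped to 1024 is processed at 1024x576, and 48 input frames with a multiplier of 2 played back at 24 fps give a 4 second clip.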