RobMix CosXL Edit got an upgrade.

I've been really pleased with the results I'm getting with RobMix Zenith, so I wanted to see how it would mesh with CosXL Edit. This one has performed admirably as well, so I'm releasing it in case it helps you, too.

My previous version was a quick-and-dirty simple merge. For this one, I spent more time tuning the mix, adjusting weights block by block to draw the best image quality and prompt adherence out of the image model while keeping the best instruction following from the Edit model.

Under the hood, this version adds all of the good stuff that came with Zenith.

Try it with my style prompt library.

Most of the sample images were made using my style prompts and character prompts verbatim as the positive prompt, with Text CFG set to 3 to 4 and Image CFG set to 1.

Prompts are optimized for RobMix Zenith.

This is a CosXL Edit checkpoint. Read before you download.

Download the ComfyUI example workflow here.

From the Stability AI CosXL page on Hugging Face:

"Cos Stable Diffusion XL 1.0 Base is tuned to use a Cosine-Continuous EDM VPred schedule. The most notable feature of this schedule change is its capacity to produce the full color range from pitch black to pure white, alongside more subtle improvements to the model's rate-of-change to images across each step."

"Edit Stable Diffusion XL 1.0 Base is tuned to use a Cosine-Continuous EDM VPred schedule, and then upgraded to perform instructed image editing. This model takes a source image as input alongside a prompt, and interprets the prompt as an instruction for how to alter the image."

In my early tests, the CosXL Edit base model was already fantastic, but I wanted to merge in some of my recent SDXL checkpoint merges to give it a bit more je ne sais quoi.

Pros and Cons

Pros:

Unparalleled contrast

Super sharp

Seems to have more interesting compositions and diversity than SDXL

Cons:

Super touchy with CFG. If you go beyond 4.0, expect artifacts, even with CFG rescale

Limited subject knowledge

How to use CosXL checkpoints

As of this posting, ComfyUI and StableSwarmUI support CosXL out of the box. I haven't heard of support in any other web UIs.

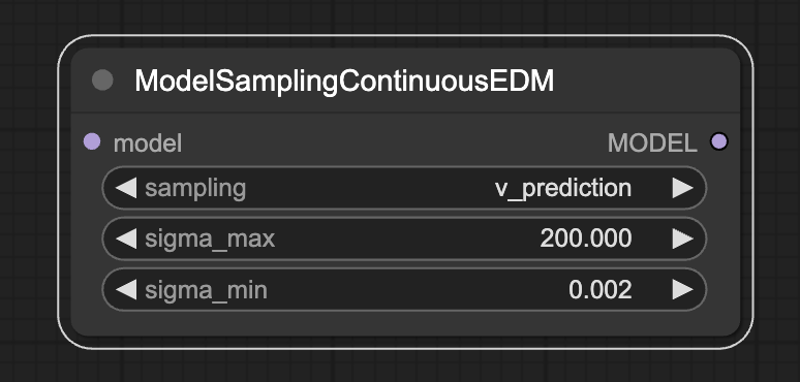

For finer control of the contrast and detail, add a ModelSamplingContinuousEDM node to the model pipeline and adjust your sigma_max and sigma_min settings.

Don't quote me on this, but as I understand it, those set the upper and lower bounds of the noise schedule: sigma_max is the noise level sampling starts from, and sigma_min is the lowest level it steps down to before finishing.

Raising sigma_max adds more contrast to the image, but overdoing it tends to burn the image a bit. 120 seems to be the standard value; I like slightly higher values.

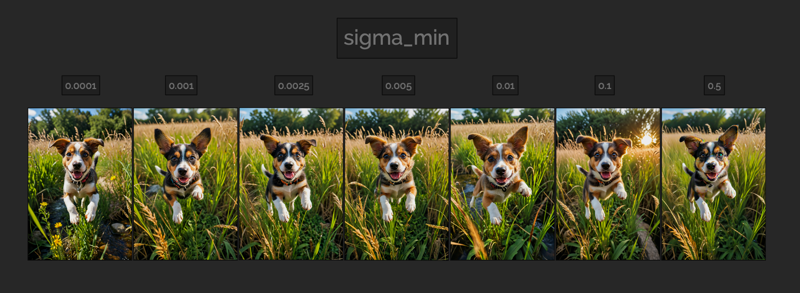

You can lower sigma_min to absurdly low values, but I find that anything below 0.0001 or above 0.1 results in obvious artifacts.
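If it helps to see what those two numbers do, here's a rough Python sketch of the Karras noise schedule (the formula from Karras et al. 2022, as used by k-diffusion's Karras scheduler) behind the "DPM++ 3M SDE Karras" setting in the recommended settings. It assumes ComfyUI feeds the sigma_max and sigma_min from ModelSamplingContinuousEDM straight into this schedule. The function name is hypothetical, and the 0.002 sigma_min is an assumed baseline for illustration, not a recommendation.

```python
def karras_sigmas(n_steps, sigma_min, sigma_max, rho=7.0):
    """Karras et al. (2022) schedule: n_steps noise levels from sigma_max
    down to sigma_min, plus a final 0.0 (fully denoised)."""
    if n_steps < 2:
        raise ValueError("need at least 2 steps")
    # Interpolate linearly in sigma**(1/rho) space, then map back.
    max_inv = sigma_max ** (1.0 / rho)
    min_inv = sigma_min ** (1.0 / rho)
    sigmas = []
    for i in range(n_steps):
        t = i / (n_steps - 1)  # 0.0 at the first step, 1.0 at the last
        sigmas.append((max_inv + t * (min_inv - max_inv)) ** rho)
    sigmas.append(0.0)
    return sigmas


# Compare the first few noise levels for the stock value and a higher sigma_max.
stock = karras_sigmas(40, sigma_min=0.002, sigma_max=120.0)
raised = karras_sigmas(40, sigma_min=0.002, sigma_max=150.0)
print([round(s, 2) for s in stock[:3]])
print([round(s, 2) for s in raised[:3]])
```

Raising sigma_max pushes the early steps to higher noise levels, while sigma_min only moves the tail end. rho = 7 is the usual default, and it bunches more steps toward the low-noise end where fine detail gets resolved.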

Prompting CosXL Edit

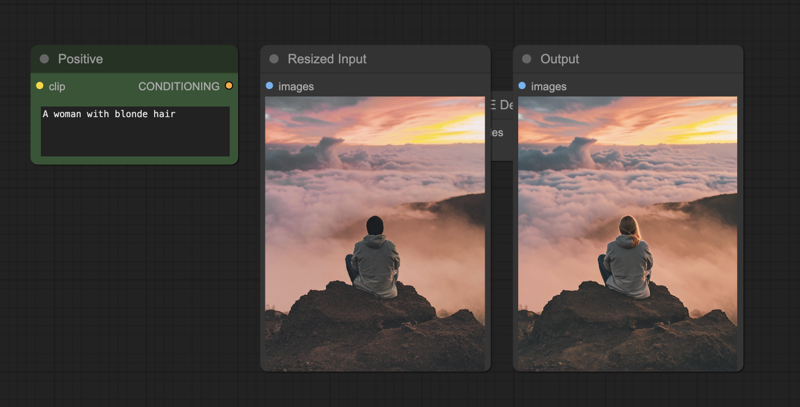

Prompting is straightforward. Just type what you want to change. E.g., if your image shows a guy sitting on a mountaintop and you want a blonde woman there instead, you just need to prompt "a woman with blonde hair."

Recommended Settings

Download the example ComfyUI workflow here.

These models seem super sensitive to CFG, so keep your values low. Adjust the ratio of cfg_text to cfg_image to weight the contribution of each to the final image. High CFG values will burn the image pretty quickly.
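Here's a minimal sketch of how the two scales can combine, assuming CosXL Edit uses InstructPix2Pix-style dual guidance (Brooks et al., 2023), which is what the separate cfg_text and cfg_image knobs suggest. The three inputs stand in for the model's noise predictions with no conditioning, with only the source image, and with both the source image and the text. The function name and toy numbers are hypothetical; the real guider works on full tensors.

```python
def dual_cfg(eps_uncond, eps_image, eps_full, cfg_text, cfg_image):
    """InstructPix2Pix-style guidance over three noise predictions.

    eps_uncond: prediction with neither the source image nor the text
    eps_image:  prediction with the source image only
    eps_full:   prediction with both the source image and the text
    """
    return [
        # cfg_image scales the pull toward the source image,
        # cfg_text scales the pull toward the text instruction.
        u + cfg_image * (i - u) + cfg_text * (f - i)
        for u, i, f in zip(eps_uncond, eps_image, eps_full)
    ]


# Toy two-value "predictions" with the first-pass settings below.
print(dual_cfg([0.0, 0.0], [1.0, 0.5], [2.0, 0.5], cfg_text=2.5, cfg_image=1.5))
```

Both scales multiply differences between predictions, which fits with high values burning the image quickly. With both set to 1, the result is just the fully conditioned prediction.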

First Pass

40 steps

cfg_text: 2.5

cfg_image: 1.5

DPM++ 3M SDE Karras

As an added bonus, you can add the following:

FreeU

B1: 1.05

B2: 1.08

S1: 0.95

S2: 0.88

Self-Attention Guidance

scale: 0.5-0.8

blur_sigma: 2.0-4.0

CFG Rescale: ~0.3
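For the CFG Rescale value, here's a simplified sketch of the rescale trick from Lin et al. (2023), assuming the ~0.3 maps to its blend strength phi (I believe that's the multiplier input on ComfyUI's RescaleCFG node). For readability it works on a single flattened list and plain single-scale CFG; the real node operates on full tensors, so treat this as an illustration, not ComfyUI's code. The function name is hypothetical.

```python
import statistics


def rescaled_cfg(cond, uncond, cfg, phi):
    """Plain CFG followed by the std-matching rescale from Lin et al. (2023).

    cond, uncond: flat lists of model predictions for one image
    cfg: guidance scale
    phi: rescale strength (0 = plain CFG, 1 = fully rescaled)
    """
    guided = [u + cfg * (c - u) for c, u in zip(cond, uncond)]
    guided_std = statistics.pstdev(guided)
    if guided_std == 0:
        return guided  # nothing to rescale
    # Shrink the guided prediction's spread back toward the conditional one's.
    factor = statistics.pstdev(cond) / guided_std
    # Blend between plain CFG (phi = 0) and fully rescaled CFG (phi = 1).
    factor = phi * factor + (1.0 - phi)
    return [g * factor for g in guided]


print(rescaled_cfg([1.0, -1.0], [0.0, 0.0], cfg=4.0, phi=0.3))
```

At phi around 0.3, the output stays mostly plain CFG with a partial pull back toward the conditional prediction's spread, which tames some of the burn without flattening the image.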