LOOPED MOTION V2

with EasyAnimateV3

thanks kijai!

Just add your images and start the Queue. Included are fast upscaling, RIFE or FILM frame interpolation, and examples for ABBA and ABCDA animation, which require 2 and 4 images respectively. The animation can be guided with a general prompt.
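One way to read the ABBA and ABCDA patterns: each letter stands for one of your input images, so the number of distinct letters is the number of images you need, and each pattern ends on the letter it started with, which closes the loop. The minimal Python sketch below only illustrates that bookkeeping under this reading. `loop_images` is a hypothetical helper, not a node or function from the workflow, and it says nothing about how EasyAnimate generates the frames between keyframes.

```python
def loop_images(pattern: str, images: list[str]) -> list[str]:
    """Map a loop pattern such as "ABBA" or "ABCDA" onto input images.

    Each distinct letter is assigned the next image in order of first
    appearance, so "ABBA" needs 2 images and "ABCDA" needs 4.
    """
    letters = list(dict.fromkeys(pattern))  # distinct letters, first-seen order
    if len(images) != len(letters):
        raise ValueError(
            f"{pattern!r} needs {len(letters)} images, got {len(images)}"
        )
    lookup = dict(zip(letters, images))
    sequence = [lookup[ch] for ch in pattern]
    # A seamless loop ends on the same keyframe it starts from.
    assert sequence[0] == sequence[-1], "pattern does not close the loop"
    return sequence


if __name__ == "__main__":
    print(loop_images("ABBA", ["a.png", "b.png"]))
    print(loop_images("ABCDA", ["a.png", "b.png", "c.png", "d.png"]))
```

For example, `loop_images("ABCDA", ["a.png", "b.png", "c.png", "d.png"])` gives the keyframe order a, b, c, d and back to a.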

Also included is a bonus workflow, LoopedMotionV2-auto, which uses the One Button Prompt and auto negative custom nodes to provide simple prompt enrichment, reducing the workload when using many images.

The pack includes some demo images to get started, plus the contents of the previous V1 pack.

V1: the ABCDA animation loop took up to 2400 seconds to complete at 30 FPS.

V2: the ABCDA animation loop took up to 2000 seconds to complete at 60 FPS.
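A quick way to read the two timings: V2 takes about one sixth less time while delivering twice the frame rate. The sketch below works that out, assuming (the notes don't say so) that both runs render a clip of the same length, so the output frame count scales with FPS. Part of the 60 FPS may come from RIFE or FILM interpolation rather than generated frames, so treat the throughput figure as a rough comparison only.

```python
# Reported worst-case render times for an ABCDA loop (from the notes above).
V1_SECONDS, V1_FPS = 2400, 30
V2_SECONDS, V2_FPS = 2000, 60


def time_saving(old_s: float, new_s: float) -> float:
    """Fraction of wall-clock time saved going from old_s to new_s."""
    return 1 - new_s / old_s


def throughput_gain(old_s: float, old_fps: float, new_s: float, new_fps: float) -> float:
    """Output frames per second of render time, new relative to old.

    ASSUMPTION: both runs render a clip of the same duration, so the
    number of output frames is proportional to the output FPS.
    """
    return (new_fps / new_s) / (old_fps / old_s)


saving = time_saving(V1_SECONDS, V2_SECONDS)
gain = throughput_gain(V1_SECONDS, V1_FPS, V2_SECONDS, V2_FPS)
print(f"V2 saves {saving:.1%} of the time and delivers {gain:.1f}x the frames per render-second")
```

With the reported numbers this works out to about 16.7% less time and roughly 2.4x the output frames per second of rendering.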

enjoy!

You will need:

Install the custom nodes via Git URL if you cannot use ComfyUI Manager:

https://github.com/kijai/ComfyUI-EasyAnimateWrapper/tree/main
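If you go the Git route, here is a minimal sketch, assuming a standard ComfyUI install where custom node packs live in `ComfyUI/custom_nodes/`. The `/tree/main` suffix in the link above belongs to the GitHub web view, so it is dropped for cloning. The helper names are hypothetical, and by default the script only prints the command instead of running it.

```python
import subprocess
from pathlib import Path

# Clone URL derived from the link above, without the "/tree/main" web-view suffix.
REPO_URL = "https://github.com/kijai/ComfyUI-EasyAnimateWrapper"


def clone_command(comfyui_root: str) -> list[str]:
    """Build the git command that clones the node pack into custom_nodes."""
    target = Path(comfyui_root) / "custom_nodes" / "ComfyUI-EasyAnimateWrapper"
    return ["git", "clone", REPO_URL, str(target)]


def install(comfyui_root: str, dry_run: bool = True) -> list[str]:
    """Print the command (dry run) or actually run git clone."""
    cmd = clone_command(comfyui_root)
    if dry_run:
        print(" ".join(cmd))
    else:
        subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
    return cmd


if __name__ == "__main__":
    # ASSUMPTION: ComfyUI lives in ./ComfyUI; adjust to your install path.
    install("ComfyUI")
```

Restart ComfyUI afterwards so the new nodes are loaded. If the repository ships a `requirements.txt`, install it into the same Python environment ComfyUI uses.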

Models Required:

https://huggingface.co/alibaba-pai/EasyAnimateV3-XL-2-InP-768x768/tree/main

Place everything from this repository inside /models/easyanimate/common/ (except the /transformer/ folder).

https://huggingface.co/Kijai/EasyAnimate-bf16/tree/main

Place at least the 768x768 version inside /models/easyanimate/transformer/.

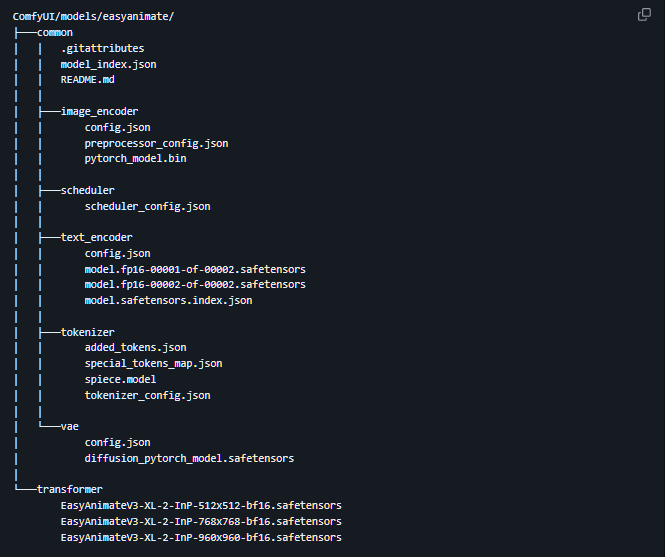

the file structure of /models/easyanimate/ should look like this:
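A minimal sketch for sanity-checking this layout, assuming it boils down to two folders: `common/`, holding the contents of the EasyAnimateV3 repository minus its `transformer/` folder, and `transformer/`, holding the bf16 weights. It also assumes the bf16 transformer files are `.safetensors`, so adjust the glob if yours differ. `check_easyanimate_layout` is a hypothetical helper, not part of the wrapper.

```python
from pathlib import Path


def check_easyanimate_layout(models_dir: str) -> list[str]:
    """Return a list of problems found under <models_dir>/easyanimate."""
    root = Path(models_dir) / "easyanimate"
    common = root / "common"
    transformer = root / "transformer"
    problems = []

    # common/ must exist and contain the repository files.
    if not common.is_dir() or not any(common.iterdir()):
        problems.append(f"missing or empty: {common}")
    elif (common / "transformer").exists():
        # The repository's own transformer/ folder is meant to be left out.
        problems.append(f"remove {common / 'transformer'}; it is not needed in common/")

    # ASSUMPTION: the bf16 transformer weights are .safetensors files.
    if not transformer.is_dir() or not any(transformer.glob("*.safetensors")):
        problems.append(f"no .safetensors weights in: {transformer}")

    return problems


if __name__ == "__main__":
    # ASSUMPTION: run from the folder that contains ComfyUI/.
    for problem in check_easyanimate_layout("ComfyUI/models") or ["layout looks OK"]:
        print(problem)
```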

V1 Pack video (SD1.5 with AnimateDiff method)

Looped Motion is a workflow with slight modifications to take advantage of training the same dataset on both SDXL and SD1.5. This allows you to load both and gain more accuracy from your trained models.

Added DJZ-LonelyDriversPack

(a collection of driver clips for QR code animation with the AnimateDiff/LoopedMotion workflows)

(all in 12 FPS .MOV format, which plays best with IPAdapter and ControlNet)
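If you want to add your own driver clips alongside the pack, here is a minimal sketch for converting them to the same 12 FPS .MOV format, assuming `ffmpeg` is installed and on your PATH. It uses ffmpeg's `fps` video filter to resample to a constant frame rate. The helper and file names are hypothetical, and by default the script only prints the command.

```python
import shutil
import subprocess


def driver_command(src: str, dst: str, fps: int = 12) -> list[str]:
    """Build an ffmpeg command that resamples a clip to `fps` and writes a .mov file."""
    if not dst.lower().endswith(".mov"):
        raise ValueError(f"expected a .mov destination, got {dst!r}")
    # -vf fps=N drops or duplicates frames to reach a constant N frames per second;
    # -y overwrites an existing output file without asking.
    return ["ffmpeg", "-y", "-i", src, "-vf", f"fps={fps}", dst]


def convert(src: str, dst: str, dry_run: bool = True) -> list[str]:
    """Print the command (dry run) or run ffmpeg on it."""
    cmd = driver_command(src, dst)
    if dry_run:
        print(" ".join(cmd))
    elif shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg was not found on PATH")
    else:
        subprocess.run(cmd, check=True)
    return cmd


if __name__ == "__main__":
    # Hypothetical file names; replace with your own clip.
    convert("my_driver.mp4", "my_driver_12fps.mov")
```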

The V1 Workflow pack contains:

LoopedMotion-2Lora

Uses a matching SDXL & SD1.5 LoRA pair: one drives the initial image generation (SDXL), and the other drives the animation (SD1.5 AnimateDiff).

Example of a matching LoRA pair: https://civitai.com/models/401458

LoopedMotion-1Lora

With the SD1.5 LoRA disabled, you can use any SD1.5 model for other effects. You can also change the workflow by adding your favourite SD1.5 generation in place of the disabled SDXL generation sections.

LoopedMotion-4Image

Setting the image input switch boolean to false lets you select 4 images to use as guidance; however, the animation will be a variation of your images rather than an exact reproduction. This is why a small LoRA helps: it reinforces the model and increases accuracy.

You Need These Models!

place these inside ComfyUI\models\animatediff_models\

v3_sd15_mm.ckpt (1.67 GB)

v3_sd15_adapter.ckpt (102 MB)

place these inside ComfyUI\models\animatediff_motion_lora

place these inside ComfyUI\models\controlnet

v3_sd15_sparsectrl_rgb.ckpt (1.99 GB)

control_v11u_sd15_tile_fp16.safetensors

control_v1p_sd15_qrcode_monster.safetensors

place these inside ComfyUI\models\ipadapter

ip-adapter-plus_sd15.safetensors

place these inside ComfyUI\models\loras

AnimateLCM_sd15_t2v_lora.safetensors

place these inside ComfyUI\models\clip_vision

Download and rename to "CLIP-ViT-H-14-laion2B-s32B-b79K.safetensors"

Download and rename to "CLIP-ViT-bigG-14-laion2B-39B-b160k.safetensors"
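A minimal sketch for checking that the files listed above are in place, assuming your ComfyUI folder is `./ComfyUI`. It only checks the file names given in this list (using the renamed CLIP vision names), so the `animatediff_motion_lora` folder, which has no files named here, is skipped. `missing_models` is a hypothetical helper.

```python
from pathlib import Path

# File names from the list above, keyed by their folder under ComfyUI/models.
# animatediff_motion_lora is omitted because no files are named for it.
REQUIRED = {
    "animatediff_models": ["v3_sd15_mm.ckpt", "v3_sd15_adapter.ckpt"],
    "controlnet": [
        "v3_sd15_sparsectrl_rgb.ckpt",
        "control_v11u_sd15_tile_fp16.safetensors",
        "control_v1p_sd15_qrcode_monster.safetensors",
    ],
    "ipadapter": ["ip-adapter-plus_sd15.safetensors"],
    "loras": ["AnimateLCM_sd15_t2v_lora.safetensors"],
    "clip_vision": [
        "CLIP-ViT-H-14-laion2B-s32B-b79K.safetensors",
        "CLIP-ViT-bigG-14-laion2B-39B-b160k.safetensors",
    ],
}


def missing_models(comfyui_root: str) -> list[str]:
    """Return folder/file paths (relative to models/) that are not present."""
    models = Path(comfyui_root) / "models"
    return [
        str(Path(folder) / name)
        for folder, names in REQUIRED.items()
        for name in names
        if not (models / folder / name).is_file()
    ]


if __name__ == "__main__":
    # ASSUMPTION: run from the folder that contains ComfyUI/.
    for path in missing_models("ComfyUI"):
        print("missing:", path)
```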

Guidance Animation URL:

This workflow was originally built by Abe

Thanks for your effort!!