Updated: Jul 17, 2024

ac·cre·tion disk /əˈkrēSHən disk/

(noun) a rotating disk of matter formed by accretion around a massive body (such as a black hole) under the influence of gravitation.

Introduction

This is kind of a wonky model. In all honesty, I feel like MIX-GEM-QromEW (check MyMix-J/GEM in the recommended models tab, or my profile page if you're viewing on Yodayo or Tensor.art) has better composition sense and does a better job of rendering darker blacks and color contrast. However, this model does a better job of affecting the painterly style than QromEW and is somewhat better at proportions, so it does have its own merits. My disappointment might just be that I'm setting my standards too high. Who knows?

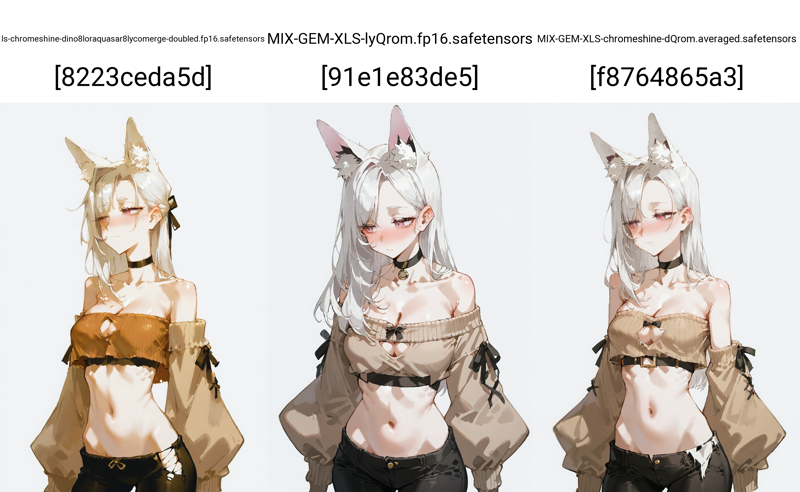

Anyway, this model was created through a 50/50 mix of two different models: MIX-GEM-lyQrom (which is essentially a slight rework of QromEW) and another model with the working name of ls-chromeshine-dino8loraquasar8lycomerge-doubled. I may upload the lyQrom model later since it's usable, but ls-chromeshine-dino8loraquasar8lycomerge-doubled is somewhat difficult to work with. The former is a simple lora + lycoris merge on top of LS Equos V1; the latter is a lora + lycoris merge on top of trained LS Equos. On some outputs ls-chromeshine-dino8loraquasar8lycomerge-doubled actually did better, but overall it ended up too unstable, which is why lyQrom was merged in. This averaged out the highs and lows, which made it way more usable as a model. These are some cherry-picked gens that represent ls-chromeshine-dino8loraquasar8lycomerge-doubled at its best and illustrate the effect merging it with lyQrom had. I want to preface this display by stating that this is definitely not representative of the average; otherwise I would have just uploaded ls-chromeshine-dino8loraquasar8lycomerge-doubled.

As you can see, the merge successfully carried some of the style effect onto the much more stable lyQrom. Additionally, some other flaws of ls-chromeshine-dino8loraquasar8lycomerge-doubled, namely too much sepia and hair that would end up too "spikey", for lack of another word, were corrected by the merge. However, the retention is a bit iffy; if I revisit this at some point, the goal would be to create a version that retains even more of ls-chromeshine-dino8loraquasar8lycomerge-doubled's unique qualities while adopting the composition strength of QromEW, potentially through block merging.
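For anyone curious what a 50/50 mix amounts to, here is a minimal sketch, assuming the merge is a plain weighted sum of matching parameters. The actual tool and settings used for this model are not stated here, so treat `merge_weights`, the parameter names, and the toy numbers as hypothetical stand-ins rather than the real recipe.

```python
def merge_weights(model_a, model_b, alpha=0.5):
    """Weighted sum of two checkpoints: (1 - alpha) * A + alpha * B.

    Each model is a dict mapping a parameter name to a flat list of floats.
    alpha=0.5 gives an even 50/50 mix.
    """
    if model_a.keys() != model_b.keys():
        raise ValueError("both models must share the same parameter names")
    merged = {}
    for name, weights_a in model_a.items():
        weights_b = model_b[name]
        if len(weights_a) != len(weights_b):
            raise ValueError(f"size mismatch for {name}")
        merged[name] = [
            (1 - alpha) * a + alpha * b for a, b in zip(weights_a, weights_b)
        ]
    return merged


# Toy stand-ins for the two parent models (hypothetical names and values).
stable_parent = {"block0.weight": [0.0, 2.0], "block1.weight": [4.0, -2.0]}
unstable_parent = {"block0.weight": [1.0, 0.0], "block1.weight": [0.0, 2.0]}

merged = merge_weights(stable_parent, unstable_parent, alpha=0.5)
print(merged)
```

Block merging, as generally understood, would use a different `alpha` per group of parameters instead of one global value, which is how a later version might keep more of one parent's style while borrowing composition from another.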

Prompting

This is a tag-based model, which means you should try to utilize tags primarily, and natural language secondarily, if at all. If you are unfamiliar with the sort of tags the model responds to, most training data for anime style models is pulled from either Danbooru or e621. Both websites contain helpful tag-wikis, which should aid you as a reference.

In any case do not use subjective terms when it comes to AI. This is an observation I often have, but when it comes to tags like best quality, high quality, very aesthetic or score_9, score_8, score_7_up these are not concepts that AI naturally understands, but qualifier tags that are trained into the model (usually based on user score metrics because individually determining the quality of millions of pieces of artwork is impossible for us puny humans). The golden rule of AI is that it only knows what you feed it. (This also means tags like beautiful woman or perfect face do not have an effect unless they were tagged during training, which is very unlikely given source/autotaggers.)

Anyway, for negatives, it's up to you. The best solution, of course, is to slowly modify each negative for every generation over multiple successive prompt modifications on the same seed, but if you don't have an eternity, some helpful tags for negatives are low quality, extra digits, artistic error, watermark, artist name, signature. e621_p_low serves as an inbuilt general-purpose negative quality tag that uses fewer tokens than score_6, score_5, score_4. If you don't trust it, you can always opt for the full quality tag chain instead, but IMO it is a better substitute. The preview images serve as a helpful example, but you are, of course, free to modify your own negatives as you see fit.

Sampling and Other Parameters

As is the case with all diffusion models, negatives will have more of an effect the higher the Classifier-Free Guidance Scale (CFG) is. While prompts are what the text encoder conditions the latents with, the CFG modulates the strength. It would take a lot of words to explain how the prompts actually guide the latents, but a quick summary is that unconditional_conditioning (negatives) inhibit certain vectors from being applied to latent space, and the higher the CFG, the stronger the inhibition is (and the stronger the conditioning (positives) also is). Of course, excessively high CFG has a tendency to burn the image by inducing too strong an effect on the de-noising process. My recommendation is to either use Perturbed Attention Guidance (PAG) to enhance the guidance scale without increasing CFG or use Dynamic Thresholding CFG to clamp CFG during the early steps.
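To make the role of CFG concrete, here is a minimal sketch of the standard classifier-free guidance combination applied at each de-noising step. The function name `apply_cfg` and the toy numbers are illustrative assumptions; real implementations operate on full noise-prediction tensors, and the negative prompt is assumed to take the place of the unconditional prediction, as most UIs do.

```python
def apply_cfg(uncond_pred, cond_pred, cfg_scale):
    """Classifier-free guidance: start from the prediction for the negative
    prompt and push away from it, toward the prediction for the positive
    prompt, by cfg_scale times their difference."""
    return [u + cfg_scale * (c - u) for u, c in zip(uncond_pred, cond_pred)]


# Toy noise predictions (illustrative values only).
negative_pred = [0.0, 1.0]
positive_pred = [1.0, 3.0]

print(apply_cfg(negative_pred, positive_pred, 1.0))  # the positive prediction
print(apply_cfg(negative_pred, positive_pred, 7.0))  # the gap scaled 7 times
```

Because the scale multiplies the gap between the two predictions, raising it strengthens the positives and the negatives at once, which is also why very high values overshoot and burn the image.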

My recommended sampler is Euler a with whatever scheduler you like the most. I've found SGMUniform to work the best (and fastest) for me, but others report liking the AYS (Align Your Steps) scheduler. My personal experience with AYS is that it's generally more accurate to the prompt most of the time, but it also magnifies some of the less desirable qualities that the model learned (due to insufficient data cleaning, mostly) and will occasionally inject things like text or watermarks. If you're willing to try more esoteric samplers, I've found that the Euler dy Negative sampler is especially clean. Subjectively it is 'less ambitious' than Euler a; however, it is very good at making simple, clear-cut, clean generations.

I recommend a step count of 25-35. My default is 28. Frankly speaking, you should not venture far beyond that range. Increasing the step count on non-converging samplers (stochastic and ancestral samplers are the two types that immediately come to mind) will dramatically change your image, and the returns on doing so with converging samplers are exceedingly minimal once the step count exceeds 35. You're just wasting a bunch of compute on inference for no reason. A better solution would be to adjust your other parameters (probably the prompt) instead of assuming more steps will fix whatever flaw you're encountering.

The model performs best at 832x1216 or 768x1344.
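As a quick reference, the recommendations in this section can be collected into a small settings object. This is only a sketch: the class, field names, and warning text are hypothetical and do not correspond to any particular UI or library, and the resolutions are assumed to be width x height (portrait).

```python
from dataclasses import dataclass

RECOMMENDED_STEPS = range(25, 36)               # 25-35 inclusive
RECOMMENDED_SIZES = {(832, 1216), (768, 1344)}  # assumed width x height


@dataclass(frozen=True)
class GenerationSettings:
    sampler: str = "Euler a"
    scheduler: str = "SGMUniform"
    steps: int = 28
    width: int = 832
    height: int = 1216

    def warnings(self) -> list[str]:
        """Return a note for each value outside the recommended ranges."""
        notes = []
        if self.steps not in RECOMMENDED_STEPS:
            notes.append(f"steps={self.steps} is outside the 25-35 range")
        if (self.width, self.height) not in RECOMMENDED_SIZES:
            notes.append(f"{self.width}x{self.height} is not a recommended size")
        return notes


defaults = GenerationSettings()
print(defaults, defaults.warnings())
print(GenerationSettings(steps=60, width=1024, height=1024).warnings())
```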