V0.4:

Pre-Training Phase:

I have a few steps to complete first;

Finish setting up the collab jupyter notebook and run some collab testing with smaller semi-SFW training data.

I'm not interested in actually introducing sex or sex related acts UNTIL the system is actually posing correctly with clothes and nudity.

The entire pose tagset is intertwined with both NUDE and CLOTHED images, the dataset differentiation will be a unique tag specific to this model. This particular upcoming training based on collab will be ONLY the semi-SFW (clothed) elements. I don't want google to ban me while I'm testing computational setups and systems using their collab system due to me having obvious sexual NSFW elements in my image sets.

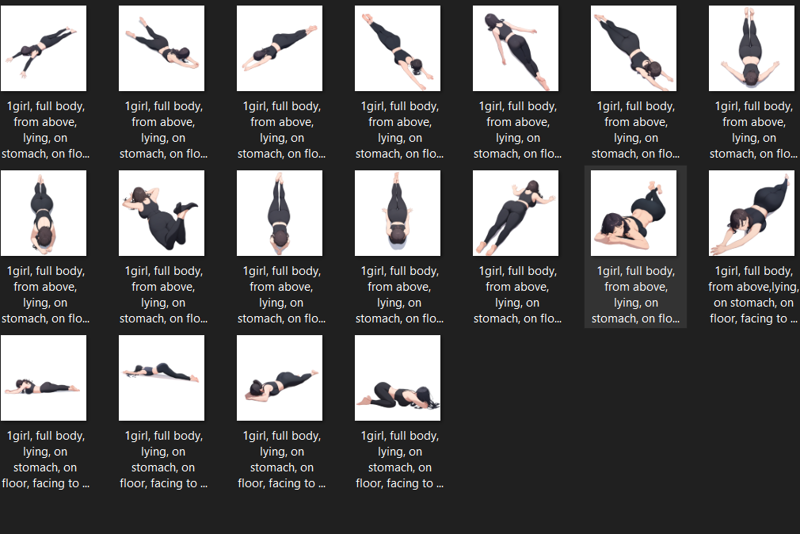

Pick primary pose images: 1215 ~pose images,

Perspective; 70 images each object deviation

target torso angles; 3 * 5

from front

from side

from behind

origin angles; 3 * 5

front view

above view

below view

target head angles: 3 * 5

facing viewer, facing forward

facing to the side

facing away

eye angles: 5 * 5

looking at viewer

looking to the side

looking ahead

looking away

looking down

Human form associations:

torso:

upright (vertical upper):

sitting: <- always assumes sitting on butt

Plane association:

sitting on surface

sitting on table

sitting on chair

sitting on couch

sitting on floor

sitting on bed

lying: (horizontal upper):

lying on front, lying on stomach

lying on back, lying on rear

lying on side, on side

Plane association:

lying on floor

lying on table

lying on chair

lying on bed

lying on couch

lying on grass <<<<<<<<

Arm association:

upper arm

lower arm

hand

Hand associations:

waving

v

Leg association (thigh, ankle, feet):

legs together (full legs):

thighs together

knees together

feet together

legs apart (full legs):

thighs apart

knees apart

feet apart

feet together:

crossed legs (partially obstructed thighs):

crossed ankles (partially obstructed ankles):

legs up (full legs):

legs down (full legs):

spread legs (full legs):

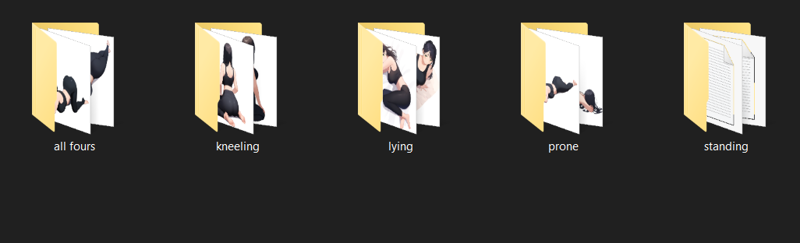

form poses: tag object association bound to all of them based on utility and need.

lying

sitting

squatting

kneeling

all fours

The outcome should be;

A front view of an upright woman sitting on a couch with her legs crossed eating potato chips.

These 1k best quality pose images will be the basis for the full output images. Each pose will be burned into a gradient of 5 qualities and superimposed on unique non-uniform backgrounds.

gradient, multi-colored, multi-shape, individual shape, panel, and a few danbooru image effect backgrounds.

Each background will introduce shadow elements and room shapes randomly, one background each quality thanks to NAI's director tool recoloring and defry capability.

This should differentiate the backgrounds and introduce more "grab points" for the base model's training to grab onto what is already inside SD3. This SHOULD allow for a more consistent negative prompting when properly tagged.

Situation and Context anchors: ~1000 images direct raw output inference using euler

Each anchor is based on linking what I want the model to know, with what it already knows. This will associate what IS THERE with what I WANT TO BE THERE, without needing a substantially larger amount of images. The outcome will suffer unless I produce another batch of these images each versioning generation.

I'm going to form a smaller subset of images, I don't know exactly how many I'll need currently, but I'll need to manually generate them using the outcome of inference, so I should be able to generate them fairly rapidly.

Roughly 10 images per context should be fine. Multi-context should be a bit trickier, but it'll do. 100 single tag contexts with nothing additional should be more than enough to associate everything new I want into the current SD3.

V0.3:

Release:

TLDR: steps: 50, size: 1024x1024, sampler: euler, cfg rescale: 0.4-0.8, config str: 9,

lora str: 0.5-0.8

Introduction:

The outcome was substantially more accurate, sharp, and powerful than v0.2. The training and progression successes have forced my hand here. Even with just the 150~ anime consistency image set, training on SuperTuner using my 4090 is too slow. The image counts for the larger model would take exponentially longer to be trained on my PC, so I set up a jupyter notebook yesterday with a series of experiments and will be running SuperTuner on a more powerful cloud service using A100s and H100s to hopefully complete the full 45k pose training images + the core burn trait 1500 images within a decent amount of time. Upon release of the true version 1.0, I'll release the full image dataset and the tagging. I anticipate needing more than a few retrains to get it right, so I assume it'll cost a pretty penny but I think it'll be worth it based on the smaller outcomes here.

Findings:

SD3 has very clear censor points. The censor points show volatility in tags, rather than complete omission. Volatile tags must be completely burned and retrained if any consistency is to be achieved. DESTROY THOSE TAGS FOR ANY LARGE MODEL TRAINING. They must be burned properly and retrained. You CANNOT fully FORGET BEHAVIORS without a FULL RETRAINING on those behaviors. They used a similar lora weighting system as I used to create my bird lora, and it absolutely destroyed the image trainings and details. I warned everyone about this, and here's the clear-cut evidence in sd3.

Tagging is very very crucial here. There's a substantial importance to pose, angle, offset, and camera especially. Cowboy Shot being corrupted by multiple other tags, portrait bled with other tags, and so on. There's a substantial series of problems here that need addressing, but it should be fully doable in a short amount of time with a bit of elbow grease.

It requires a considerable amount of more image data, more image information, and a low powered training sequence. The route, pattern, and specific configuration is crucial to training this without destroying the core model.

It seems to treat nudity as though it's clothes. As though everything is simply another layer to be worn over the skin, so it needs to learn what skin is.

Hands are absolutely atrocious. I can't understand why they're so nasty by default, but they are absolutely atrocious. The lora finetuning actually repairs considerable amount of hand problems and introduces new hand problems. Not a good look for a base model to be honest. SOMEONE didn't use HAGRID.

Negative prompting seems to have little to no effect at times with sd3, lora or no lora. The outcome is often the same, completely ignores negative prompting, or negative prompting counterweights the positive prompting in unexpected and highly damaging ways.

WEIGHTS ARE NOT WEIGHTED CORRECTLY. There's a great deal of incompatible weights trained into the default responses, which cause things like overlaying images, destroyed textures, superimposed problems, and things like obviously censored and destroyed shapes. I need to figure out how to resolve this. It might need a full model weight rescale, since the entire core model literally needs a shift of 3-4x for some reason. I'm honestly not sure what it means currently, but I'll be researching this topic and following more information based on the paper and followup papers.

Successes:

Style application. I'm over 80% certain that the entire anime style can be superimposed over any concept with the proper pose training.

Low strength does considerably less damage than anticipated. This is a pretty small lora size, so the experiment being successful at this small lora size is arguably one of the most interesting aspects to me. The larger loras were a common system, kohya commonly releasing large loras. The larger loras may be necessary, when you have substantially more image information than I trained with, but not with the 110 subset I used.

SOME NSFW elements were introduced, and yet they still do not cut through when provoked. The outcome is promising enough to include a full nsfw subset for the female form, which will likely push the consistency core image set requirement to about 1800 or more.

MULTIPLE<<< pose elements were introduced into the tag pool, allowing the simplistic and sometimes in-depth control of poses, even without a large amount of image data training.

Failures:

Body deformities. The body will contort, deform, and become disturbing with almost no provocation still. It's a bit more difficult to contort it, but it definitely will for now until I introduce the full pose set into the mix. The toggles when set correctly will produce a fair output, and when they aren't, well, play with it.

Color incorrectness. The white background is pretty burned in, but it can be negatived, just not with the comfyui I released for v0.2. As much as that comfy was interesting as an experiment, the outcome didn't conform to the goal, so I converted to a single prompt in with good results.

Blotting will likely not be possible until I establish a better bottom line. NovelAI introduced a new color swap system that will help alleviate this problem for creating SD3 training data, so I think this should be sufficient for now. Latents aren't exactly compliant at times with SD3 for now, but they can be harnessed.

The higher the strength, the more the model suffers, probably meaning even LESS training strength required and more time for detailed element finetuning, and MORE training strength with less time for the more important burn masking and layering.

Conclusions:

The 80% goal has not been met yet.

Some goals were met for this basic system but it must be scaled up to tackle the 80% ratio.

The testing and outcome shows that the consistency suffers less than the 25% ratio for many tags, and 100% successful for some tags that weren't in the system at all beforehand.

A full pose system, ratio system, screen anchor locking-point system, depth system, rotation system, and associative camera access points using the standard danbooru/gelbooru/sankaku/e621 camera ratio tags must be applied to create a proper anime system.

Obviously Corrupted and Censored Tags:

EACH OF THESE TAGS were completely butchered by the exact same lora censoring system I warned these people about ahead of time. They flat ignored my opinions and did it anyway, completely ruining their model. Nobody ever listens to the person without the degree in AI.

lying

lying on back, lying on side, lying on bed, etc all appear to be post-training censored, and in the most brutal fashion I've ever seen. They used a censoring lora system and just shunted the outcome in there. Like an 18 wheel semi truck speeding down a small road with low hanging powerlines, it completely ripped apart the whole system and destroyed all the lower hanging power lines that got caught in the crossfire.

squatting

Surprisingly less damaged than the others, but it definitely has some substantial damage. Anything related to this is very very hit or miss, clearly.

legs

Completely disjointing and disturbing legs often appear when attempting to control them, leg positioning must be trained with pose. Most often extra legs, disjointed legs, extra legs, and missing legs.

More often you'll need to define clothes rather than actual legs, making the legs tag disturbing when used. Like a kind of parody, even though the legs tag should be the anchorpoint between all legs. You can tell they did this one on a whim.

arms

More often you get extra arms, rather than where you want your arm to go. This needs substantial data.

Sometimes you can create more consistent outcome using gauntlets, gloves, etc.

They censored arms to obviously prevent people from using full arm control here, or they simply didn't finetune enough to prepare the arms. Either way I don't really mind, since it'll be a smaller effort to fix these than the legs.

Pre-Release News:

Currently brewing a full retrain of the initial system with a much lower training rate. This should increase quality for generations at 100 epochs. It'll be ready in a few hours for testing. I'm not a big fan of how the comfy turned out, so I'll be making something simpler later.

V0.2:

I left this thing baking overnight and the chef's choice is now not too bad. The reliability of this seems to be a lot higher, but it's still not fully trained yet. It's definitely getting closer. There is no black magic here, just cherry picking. It's not fully ready yet, but it's definitely showing promise towards that goal.

0.5-1.0 recommended strength, after 0.6 I suggest using a prompt to nullify the white background and providing a more detailed scene for your character.

Prompt:

Describe your scene,

features, for, a, female, using, danbooru, tags, (not literally this, use the actual tags from consistency).

Negative Prompt:

simple background, white background, white whatever

V0.1:

I recommend running this on very low strength;

0.1-0.4 is what seemed to work best.

LCM sampler works very well for this one.

Try Euler, DPM2, HEUNPP2, UniPC, and more.

DO NOT use EULER A, DPM2A, or the Ancestral samplers.

ComfyUI is the testing grounds, so be aware and have a lora loader ready to play with.

This is using the exact same released training data images as: Consistency v1.1 LOHA SDXL

HOWEVER/// I ran the tags through the ringer quite a bit. Considering the (booru) tags either don't exist or are very loosely associated with SD3, I predicted the outcome of using JUST the simple tags would have been damaging rather than helpful. So far, the outcome even with the increase in tags and the simple sentences still didn't end up very effective, so to facilitate this need for increased information, I plan to introduce the full 1500 image Consistency v2 base including a regulation image set of about 45000 pose based images.

This is my first attempt at a SD3 LORA and the first training of one. I spent a good portion of the afternoon making SimpleTuner function on windows docker. This wasn't an easy feat due to the lack of documentation for SimpleTuner and the complexity of the system, but the outcome allows me to train on my 4090 so I have no complaints. It also provides a more flexible training environment, if you can put up with the C++ grade level errors the thing throws at you as you try to make it work.

FINDINGS AND OUTCOME:

My findings were... not too good really. I'm surprised it worked at all, let alone actually made anything cohesive. It can be a bit fun to play with sure, and it has some NSFW elements, but they are definitely not as pronounced as the Autism version. Some of them are even less pronounced than the base SD3 so that was an interesting outcome.

The LORA is very small in size, and the text refiner isn't necessary unless you want to actually make text apparently.

There is a considerable amount of flaw here. The bodies often don't generate limbs. The hands don't always show up. The legs often deform or twist when using some angles. The arms often warp or contort, but somehow less than normal.

It fixes a lot of flawed body positioning, and introduces new flaws! I'll work out a full list of successes and failures tomorrow.

SUCCESSES:

So far it seems to be able to handle low powered pose control to some degree. It repairs broken limbs, smashed torsos, and even introduces NEW forms of broken limbs and smashed torsos, but lesser in a lot of ways.

FAILURES:

I sincerely doubt this will bench above the 80% success point.

The goal of consistency is to hit that point, so I plan to at least breach that point within the realm of SD3, before I consider this experiment a success. It's by far inferior to NAI, and can't even compare to what PDXL and Consistency v1.1 can put out. It needs a lot more steps and a lot more training.