The FLUX.1 [dev] Model is licensed by Black Forest Labs, Inc. under the FLUX.1 [dev] Non-Commercial License. Copyright Black Forest Labs, Inc.

IN NO EVENT SHALL BLACK FOREST LABS, INC. BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH USE OF THIS MODEL.

Rendering is over 60% faster with enable_sequential_cpu_offload set to false.

For >= 24 GB VRAM.
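As a rough sketch of the rule above, this small helper decides whether enable_sequential_cpu_offload should stay on for a given amount of VRAM. The 24 GB threshold comes from the note above; the function names and the optional PyTorch query are assumptions for illustration, not part of the workflow itself.

```python
from typing import Optional

VRAM_THRESHOLD_GB = 24  # threshold taken from the note above


def use_sequential_cpu_offload(total_vram_gb: float) -> bool:
    """Return True when offload should stay enabled (less than 24 GB VRAM)."""
    return total_vram_gb < VRAM_THRESHOLD_GB


def detect_vram_gb() -> Optional[int]:
    """Best-effort VRAM query; returns None if PyTorch or CUDA is unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    total_bytes = torch.cuda.get_device_properties(0).total_memory
    # Cards usually report slightly less than their nominal size,
    # so round to the nearest whole GiB before comparing.
    return round(total_bytes / 1024**3)


if __name__ == "__main__":
    vram = detect_vram_gb()
    if vram is None:
        print("Could not detect VRAM; leave offload enabled to be safe.")
    else:
        print(f"{vram} GB VRAM -> offload enabled: {use_sequential_cpu_offload(vram)}")
```

The result only tells you which way to flip the toggle; the setting itself is still changed by hand in the workflow.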

You can now prepare your image with my Outpainting FLUX/SDXL for CogVideo

Animate from a still image using CogVideoX-5b-I2V.

Make sure all 3 parts of the .safetensors model are downloaded to models/CogVideo/CogVideoX-5b-I2V/transformer:

diffusion_pytorch_model-00001-of-00003 4.64 GB

diffusion_pytorch_model-00002-of-00003 4.64 GB

diffusion_pytorch_model-00003-of-00003 1.18 GB

https://huggingface.co/THUDM/CogVideoX-5b-I2V/tree/main/transformer
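If you want to double-check the download, a minimal sketch like the one below lists any shard that is missing or empty. The shard names are taken from the list above; the ".safetensors" extension and the helper name missing_shards are assumptions, and the path is relative to your ComfyUI folder.

```python
from pathlib import Path

# Shard names from the list above; the ".safetensors" extension is assumed.
EXPECTED_SHARDS = [
    f"diffusion_pytorch_model-{i:05d}-of-00003.safetensors" for i in (1, 2, 3)
]


def missing_shards(transformer_dir):
    """Return the expected shard file names that are absent or empty."""
    folder = Path(transformer_dir)
    missing = []
    for name in EXPECTED_SHARDS:
        path = folder / name
        if not path.is_file() or path.stat().st_size == 0:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # Run this from the ComfyUI folder so the relative path resolves.
    missing = missing_shards("models/CogVideo/CogVideoX-5b-I2V/transformer")
    print("All 3 shards present" if not missing else f"Missing: {missing}")
```

This only checks that the files exist and are not empty. It does not verify that a download finished completely, so compare the file sizes with the ones listed above as well.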

I found this on the GitHub page; it looks like the error some people are having:

https://github.com/kijai/ComfyUI-CogVideoXWrapper/issues/55

If rendering takes a long time: in the CogVideo Sampler, try lowering "steps" from 50 to something like 20 or 25. You may get very little motion, but it might work.

It looks like "num_frames" only accepts 49.
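To make the two sampler notes concrete, here is a small sketch that checks a pair of settings against them. The values 50, 20 and 49 come from the notes above; the helper names are hypothetical, and the idea that render time scales roughly linearly with "steps" is an assumption, not something measured here.

```python
from typing import List

DEFAULT_STEPS = 50        # default "steps" value mentioned above
LOW_STEPS = 20            # lower end of the suggested range
REQUIRED_NUM_FRAMES = 49  # the only "num_frames" value that seemed to work


def check_sampler_settings(steps: int, num_frames: int) -> List[str]:
    """Return human-readable notes about settings that may cause trouble."""
    notes = []
    if num_frames != REQUIRED_NUM_FRAMES:
        notes.append(f"num_frames is {num_frames}; it appears only 49 is accepted")
    if steps < LOW_STEPS:
        notes.append(f"steps is {steps}; below 20 you may get very little motion")
    return notes


def relative_render_time(steps: int, baseline: int = DEFAULT_STEPS) -> float:
    """Rough estimate, assuming render time is proportional to steps."""
    return steps / baseline


if __name__ == "__main__":
    print(check_sampler_settings(steps=25, num_frames=49))
    print(f"25 steps is about {relative_render_time(25):.0%} of the 50-step time")
```

Under that linear assumption, dropping from 50 to 25 steps would cut sampling time to about half, at the cost of less motion.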

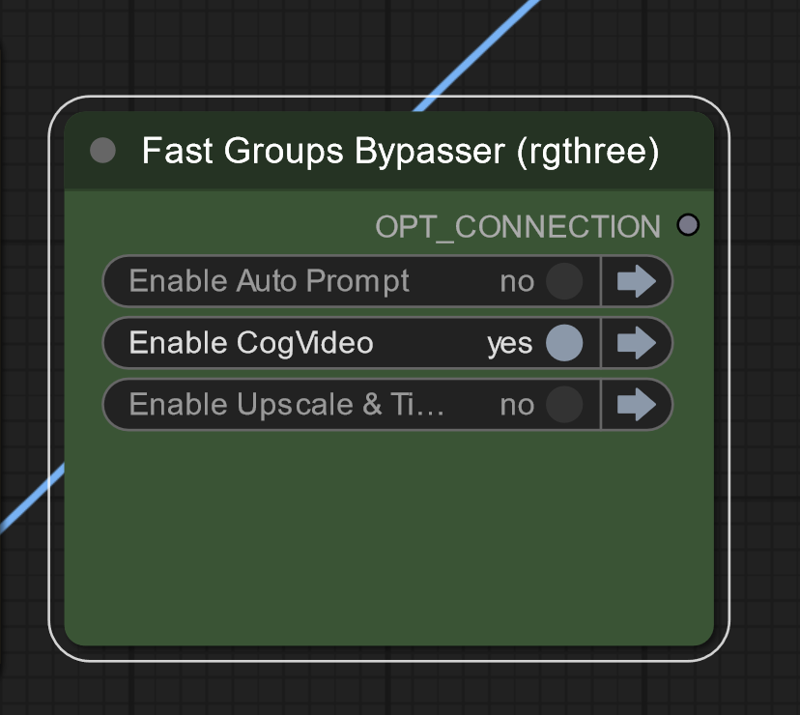

On lower-VRAM systems, run the groups separately.
