The FLUX.1 [dev] Model is licensed by Black Forest Labs, Inc. under the FLUX.1 [dev] Non-Commercial License. Copyright Black Forest Labs, Inc.

IN NO EVENT SHALL BLACK FOREST LABS, INC. BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH USE OF THIS MODEL.

Want to generate anime-style images but aren't a prompt wizard? AnimEasy only needs a couple of words to generate decent images (sometimes even one is enough). It can generate both semi-realistic and anime-style images, and it handles text reasonably well. See the show reel for some examples.

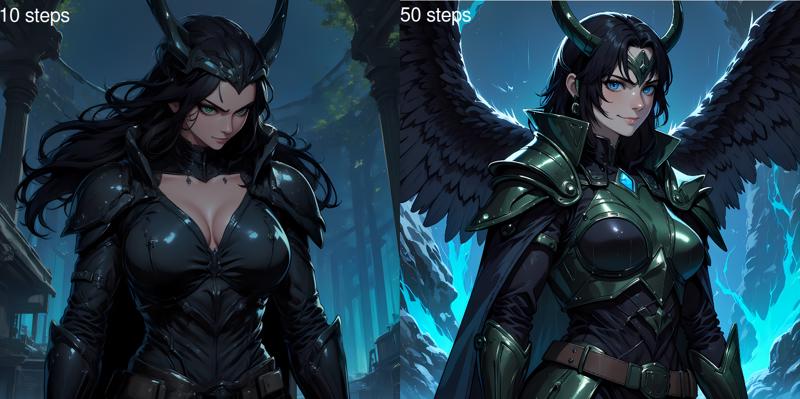

The DDIM sampler with a guidance factor of 3.5 and 10 steps works well enough. If you find some images are a bit half-baked, increase the number of steps and let them cook a bit more (more steps rarely hurt the image, and even at 50 steps there's still an improvement). To improve performance, I recommend the FLUX version of the TAESD VAE. All examples were generated in ComfyUI with the fp16 version of this checkpoint and the stock FLUX CLIP models.

If you don't explicitly use a keyword like "anime", "manga", or "cartoon", you may find some images take on a more... illustration-y look. One way to counter this without changing the prompt is to increase the number of steps. This tends to sharpen the line art and flatten the colours a bit without any major stylistic or compositional changes (although the image will take proportionally longer to finish cooking).

N.B. Even the fp8 checkpoint with fp8 CLIP models needs a good 13-14GB of VRAM to run. If you want to run everything natively (fp16/bf16), you'll need a good 23-24GB of VRAM. FLUX is also a very compute-intensive model: as a point of reference, each fp16 step takes ~1.2s on an RTX 3090.
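If you'd rather check the VRAM figures above in code than by eye, here's a minimal sketch. The function name and the cut-offs are my own assumptions: it uses the upper end of each quoted range (24GB and 14GB) as a conservative threshold, and real usage will vary with resolution and whatever else is sitting on the GPU.

```python
# Rough precision picker based on the VRAM figures quoted above.
# The thresholds are assumptions: the upper end of each quoted range.
FP16_VRAM_GB = 24.0  # "a good 23-24GB" for native fp16/bf16
FP8_VRAM_GB = 14.0   # "a good 13-14GB" for fp8 checkpoint + fp8 CLIP


def pick_precision(vram_gb: float) -> str:
    """Return the heaviest variant that should fit in the given VRAM."""
    if vram_gb >= FP16_VRAM_GB:
        return "fp16"
    if vram_gb >= FP8_VRAM_GB:
        return "fp8"
    # Below the fp8 figure; nothing quoted above is expected to fit.
    return "below_fp8"


if __name__ == "__main__":
    for gb in (24, 16, 8):
        print(f"{gb}GB -> {pick_precision(gb)}")
```

Treat the result as a starting point, not a guarantee that the model will load.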

If you don't have the VRAM to run the fp16 model natively, I'd recommend getting the GGUF loader (if you use ComfyUI). The Q8 model seems a lot closer to the original than the fp8 model, and the loader also works with Q4 and Q2 quantized models. The only advantage fp8 has is native support in a lot more programs, whereas GGUF sometimes needs third-party plugins to work. Just be aware that any quantization carries a bit of a compute penalty: I saw a ~30% increase in step time on an RTX 3090 regardless of whether I was using Q8, Q4 or Q2.
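To get a feel for how long an image will take to cook, here's a back-of-the-envelope sketch using the two numbers quoted above (~1.2s per fp16 step and a ~30% penalty for quantized models, both measured on an RTX 3090). The function name is made up for illustration, and the estimate only covers sampling steps; it assumes step time scales linearly and ignores model loading, text encoding and VAE decoding.

```python
# Back-of-the-envelope sampling time, based on the RTX 3090 figures above.
FP16_STEP_SECONDS = 1.2  # ~1.2s per fp16 step (quoted above)
QUANT_PENALTY = 0.30     # ~30% slower per step for Q8/Q4/Q2 (quoted above)


def estimate_sampling_seconds(steps: int, quantized: bool = False) -> float:
    """Estimate sampling time in seconds for a given number of steps."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    per_step = FP16_STEP_SECONDS
    if quantized:
        per_step *= 1.0 + QUANT_PENALTY
    return steps * per_step


if __name__ == "__main__":
    for steps in (10, 50):
        native = estimate_sampling_seconds(steps)
        quant = estimate_sampling_seconds(steps, quantized=True)
        print(f"{steps} steps: ~{native:.0f}s native, ~{quant:.0f}s quantized")
```

With these figures, 10 steps comes out at roughly 12s and 50 steps at roughly 60s for fp16, or about 78s for 50 steps with a quantized model. Other GPUs will differ.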

"backyard anime picnic" - steps:50, cfg:3.5, seed:918081932971977

"backyard anime picnic" - steps:50, cfg:3.5, seed:918081932971977