L.O.F.I: Limitless Originality Free from Interference

[update:240515]

🚀 LOFI V5 - Final

This is the last version in the LOFI series of models. There likely won't be any more updates to the LOFI model in the future (unless an SD3.0 is released, in which case, a LOFI version for 3.0 might be trained).

Version 5 is quite special, as it even produces an effect somewhat similar to using "LCM". You must use a very low CFG to utilize it. This model can also be effectively combined with LCM or HyperSD for enhanced performance.

Additionally, this model is extremely sensitive to "prompt word attention". From my tests, no prompt word weight should exceed 1.2, and oftentimes, removing all prompt weight leads to better results, indicating that the model has a precise understanding of the importance and relevance of prompts.

🛠️ Recommended settings:

Sampler: DPM++ (series) / Restart

Steps: 15-55 (35 recommended)

CFG: 2-5 (4 recommended)

> The lower the CFG, the more creative the generated image may be.

📸 nobody issue:

This model has a strong preference for a photographic "subject". It could prove difficult to generate images lacking a definite subject. If you do wish to generate landscape pictures with this model, the use of controlnet-depth is recommended to manage the output.

💡 PS: In fact, this model was completed quite some time ago, but I believed that the 1.5 model community might not remain active following the release of SDXL, so I held back the release. It now appears that the 1.5 model is still very much in use, often employed as a refiner for SDXL by many, including myself.

💡PPS: In order to show the true ability of the model, all showcases try not to use lora and post-processing. If reasonable lora and post-processing are used, better results should be achieved.

🆕 and, a new distillation model has been released:

- CaseH (lofi_v4 fork)

[update:230822]

LOFI v4 released

What updated

More training and fine-tuning

Inject sdxl1.0 knowledge (about Portraits and Machinery)

Fix some screen composition bug (bug in v3 version: often generate broken cloth and scenes)

Advices

The v4 does not require any prompt to improve quality. Try it without (best quality, masterpiece...).

The v4 does not require strong prompt weight adjustments. Try removing all prompt weights.

The v4 does not require Hi-RES. Try big steps (>50steps) and use a DPM++ series sampler.

The v4 still contains a little bit of Asian-style, if you don't want it, please follow this model 👉 EPIC-v1

---

V4 vs V3

提示词精准不漂移,彻底移除默认人脸风格

Precise application of prompt words, completely remove the default face style

---

[update:230720]

一个新的模型,使用 LOFIv3 + SDXL0.9 微调而来: RawXs

a new model, fine-tuned using LOFIv3 + SDXL0.9: RawXs

LOFI will focus on portrait generation, RawXs will be more realistic

[update:230624] LOFI V3

re-compose various types of LOFI-sub lora, fix overfitting

for V3 sugestions:

sampler: DPM adaptive / DPM++ / DDIM

feature to control camera is still available

extensions sugestions:

Stable Diffusion Dynamic Thresholding (CFG Scale Fix)

https://github.com/mcmonkeyprojects/sd-dynamic-thresholding/tree/master

my configuration:

Dynamic thresholding enabled: True, Mimic scale: 4, Threshold percentile: 100, Mimic mode: Linear Up, Mimic scale minimum: 0, CFG mode: Linear Up, CFG scale minimum: 0,ControlNet

https://github.com/Mikubill/sd-webui-controlnet

FaceEditor (better than restore-face)

https://github.com/ototadana/sd-face-editor

(It is suitable for image generation with a relatively small proportion of faces. If it is a close-up photo, there is no need to use it)

---

v3 inpaint

---

huggingface

https://huggingface.co/lenML/LOFI-v3/tree/main

[update:230602] LOFI V2.2

know more fake, know more real better

For version V22, here are some suggestions:

Negative prompts have a significant effect and can greatly improve quality. This model has a strong understanding of what is considered "bad-thing".

The camera control is not as strong as v21, but it also includes fine-tuning models (about 30% of the camera control capability of v21).

This training used a large amount of Asian images (~5GB), so drawing other races may require different Lora or Ti models.

[Strongly recommended] Use ControlNet. This version works perfectly with ControlNet, restricting certain parts of the image will make the model output infinitely closer to reality.

Use DPM++ series sampler, the higher the step, the more detailed the output, and cfg can also be increased.

enjoy AI world.

--

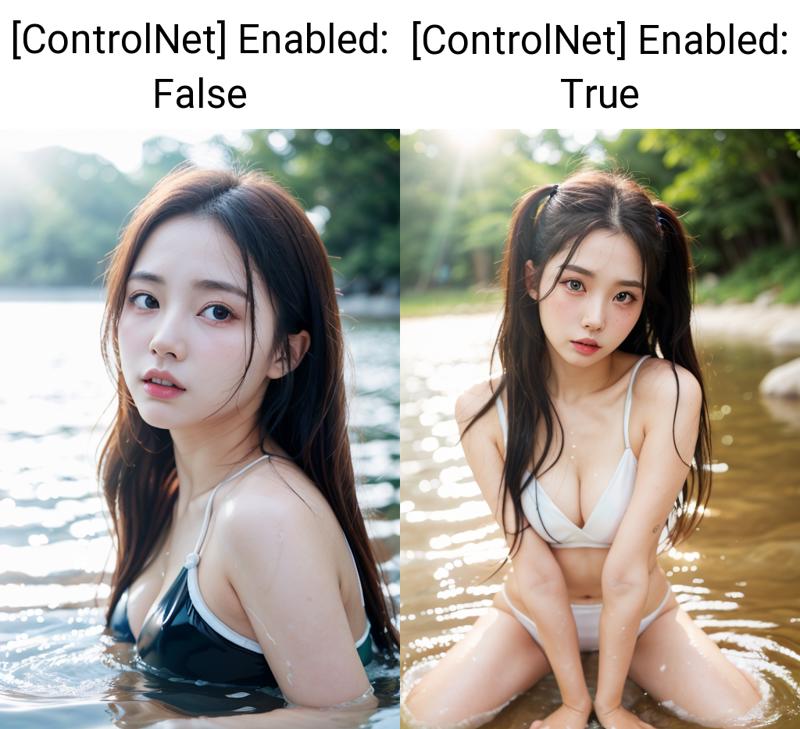

work good with ControlNet:

👈Left one, the composition is correct, but the hairstyle, expression, pose, and lighting are all inconsistent with the prompt.

👉Right one, controlnet enabled, layout of the scene been contorled, and all prompts are correctly reflected in the generated image! awsome!!!

--

LOFI V22 inpainting model released~showcase here:

--

now, my models backup on huggingface:

https://huggingface.co/lenML/LOFI-v2_2/tree/main

https://huggingface.co/lenML/LOFI-V2_2-inpainting/tree/main

https://huggingface.co/lenML/LOFI-v2pre/tree/main

--

[update:230414] LOFI V2.1

🧙more realistic details from more iterative training (200epochs)

🩹a little bit facial effect rollback (to LOFI V2pre)

🖌️add inpainting model (base on LOFI V2.1)

tips (about inpainting model):

You need to ensure that your inpaint model filename is in a format supported by the webui, such as LOFI_V21.inpainting.safetensors

(This is likely a bug with Civitai, resulting in the downloaded model file having a different name than what was uploaded... so you need to manually modify it to ensure the webui can load the model correctly.)

--

Note: because I used some of the optimization of torch2.1, it is possible that you could not generate exactly same image with same parameters (but quality was good)

[update:230325] LOFI V2

Finally, v2 is released

Based on the LOFI-v1 model, finetuned 80,000 steps / 300 epochs

📷more camera concept

🎨exact palette

[update:230224] LOFI V2pre

version 2 pre-release. This is a pre-release version, not just the merge model, it also includes some model parts that I have fine-tuned through training, including the previously released TAF and some other train models that improve image quality

But it may cause the model to focus on “portrait of characters”, so this is only a pre-release version and needs to be updated. LOFI model aims to be general and high-quality.

Prompt suggestions

Since the text-encoder used is enough trained version, do not use a very high attention to control weight, which will cause some wrong drawing, it is recommended that all attention weights be no higher than 1.2

If there is no special composition requirement, there is no need for a lot of negative prompts, such as "missing hands", which may affect human body drawing, and DeepNegative is enough for negative prompts

It is strongly recommended to use hires.fix to generate, Recommended parameters:

(final output 512*768)

Steps: 20, Sampler: Euler a, CFG scale: 7, Size: 256x384, Denoising strength: 0.75, Hires upscale: 2, Hires steps: 40, Hires upscaler: Latent (bicubic antialiased)

Most of the sample images are generated with hires.fix

Note: that if you use hires.fix, you may not be able to reproduce the image with the same set of parameters in webui, because hires.fix introduces double randomness