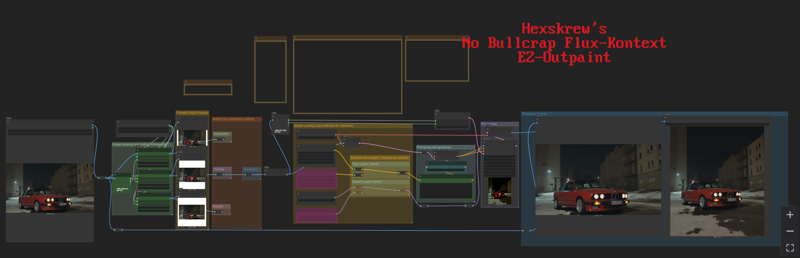

Flux-Kontext No Crap GGUF compatible Outpainting Workflow. Easy, no extra junk.

**Click the top image from this post of just the BMW, then click the download button on the upper right for the actual workflow.**

Super easy workflow for outpainting. Besides the latest Comfy Core nodes, the only other thing required is the gguf plugin. Pull the image from the bottom of this post into Comfy to load the workflow.

[tutorial for standard flux kontext, I haven't looked much at it](http://docs.comfy.org/tutorials/flux/flux-1-kontext-dev) | [tutorial (Chinese)](http://docs.comfy.org/zh-CN/tutorials/flux/flux-1-kontext-dev)

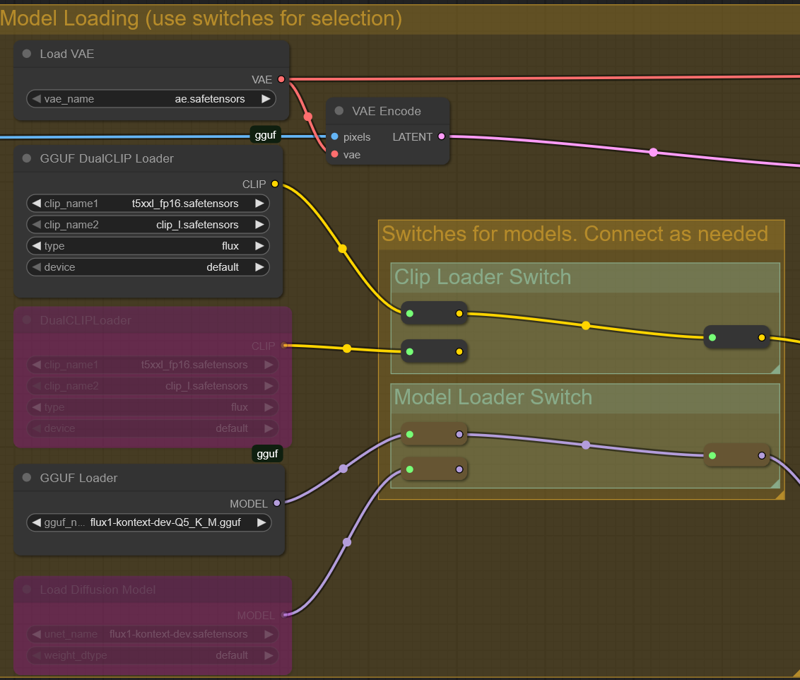

Diffusion models (Use the node 'Switches for models' to connect either the gguf nodes or diffusion and clip nodes to their end points):

GGUFs for consumer grade video cards (only suggestions; a higher quant may work for you, but pick the one that corresponds to how much VRAM you have):

- [6gb VRAM - ex. 3050, 2060](https://huggingface.co/QuantStack/FLUX.1-Kontext-dev-GGUF/resolve/main/flux1-kontext-dev-Q2_K.gguf?download=true)

- [8gb VRAM - ex. 2070, 2080, 3060, 3070, 4060/ti, 5060](https://huggingface.co/QuantStack/FLUX.1-Kontext-dev-GGUF/resolve/main/flux1-kontext-dev-Q3_K_M.gguf?download=true)

- [10gb VRAM - ex. 2080ti, 3080 10gb](https://huggingface.co/QuantStack/FLUX.1-Kontext-dev-GGUF/resolve/main/flux1-kontext-dev-Q4_K_M.gguf?download=true)

- [12gb VRAM - ex. 3060 12gb, 3080 12gb/ti, 4070/ti/Super, 5070](https://huggingface.co/QuantStack/FLUX.1-Kontext-dev-GGUF/resolve/main/flux1-kontext-dev-Q5_K_S.gguf?download=true)

- [16gb VRAM - ex. 4060ti 16gb/ti Super, 4070ti Super, 5060ti, 5070ti, 5080](https://huggingface.co/QuantStack/FLUX.1-Kontext-dev-GGUF/resolve/main/flux1-kontext-dev-Q6_K.gguf?download=true)

Model for workstation class video cards (i.e. 90 series, A6000 and higher):

- [Workstation class or higher (90 series)](https://huggingface.co/black-forest-labs/FLUX.1-Kontext-dev/resolve/main/flux1-kontext-dev.safetensors?download=true)
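
If you'd rather not eyeball the list, here's a rough sketch of the same picks as a lookup. The file names come straight from the download links above; the `suggest_model` helper and the 24GB cutoff for the full model are my own illustration (the cutoff is taken from the "24GB+" note in the storage layout), not part of the workflow.

```python
# VRAM floor in GB -> file name, copied from the download links in this post.
# Ordered from largest to smallest so the first match is the biggest model that fits.
MODEL_BY_VRAM = [
    (24, "flux1-kontext-dev.safetensors"),   # full model, workstation class cards
    (16, "flux1-kontext-dev-Q6_K.gguf"),
    (12, "flux1-kontext-dev-Q5_K_S.gguf"),
    (10, "flux1-kontext-dev-Q4_K_M.gguf"),
    (8, "flux1-kontext-dev-Q3_K_M.gguf"),
    (6, "flux1-kontext-dev-Q2_K.gguf"),
]


def suggest_model(vram_gb: float) -> str:
    """Return the suggested model file for a card with vram_gb of VRAM.

    Hypothetical helper: it only mirrors the suggestions in the list above.
    """
    for min_vram, filename in MODEL_BY_VRAM:
        if vram_gb >= min_vram:
            return filename
    raise ValueError(f"No suggestion in this post for {vram_gb}GB of VRAM")


if __name__ == "__main__":
    print(suggest_model(8))
    print(suggest_model(11))
```

Cards that fall between tiers get the next size down, which matches the "only suggestions" spirit: try a higher quant if you have headroom.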

VAE

- [ae.safetensors](https://huggingface.co/Comfy-Org/Lumina_Image_2.0_Repackaged/blob/main/split_files/vae/ae.safetensors)

Text encoders

- [clip_l.safetensors](https://huggingface.co/comfyanonymous/flux_text_encoders/blob/main/clip_l.safetensors)

- [t5xxl_fp16.safetensors](https://huggingface.co/comfyanonymous/flux_text_encoders/resolve/main/t5xxl_fp16.safetensors) or [t5xxl_fp8_e4m3fn_scaled.safetensors](https://huggingface.co/comfyanonymous/flux_text_encoders/resolve/main/t5xxl_fp8_e4m3fn_scaled.safetensors)

Model Storage Location

```
📂 ComfyUI/
├── 📂 models/
│   ├── 📂 diffusion_models/
│   │   └── flux1-kontext-dev-Qx_x.gguf (GGUF file) OR flux1-kontext-dev.safetensors (24GB+ video cards)
│   ├── 📂 vae/
│   │   └── ae.safetensors
│   └── 📂 text_encoders/
│       ├── clip_l.safetensors
│       └── t5xxl_fp16.safetensors OR t5xxl_fp8_e4m3fn_scaled.safetensors
```
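
If the loaders come up empty, a quick check like this can tell you which file is in the wrong place. It's only a sketch: the `missing_models` helper is hypothetical, and it assumes the folder layout shown above with the file names from the download links.

```python
from pathlib import Path


def missing_models(comfy_root, diffusion_file, t5_file="t5xxl_fp16.safetensors"):
    """Return the expected model paths that do not exist under comfy_root.

    Hypothetical helper; the folder names follow the layout in this post.
    """
    models = Path(comfy_root) / "models"
    expected = [
        models / "diffusion_models" / diffusion_file,
        models / "vae" / "ae.safetensors",
        models / "text_encoders" / "clip_l.safetensors",
        models / "text_encoders" / t5_file,
    ]
    return [path for path in expected if not path.is_file()]


if __name__ == "__main__":
    # Adjust the root and the file name to match what you downloaded.
    for path in missing_models("ComfyUI", "flux1-kontext-dev-Q4_K_M.gguf"):
        print("missing:", path)
```

Pass `t5_file="t5xxl_fp8_e4m3fn_scaled.safetensors"` if you grabbed the fp8 text encoder instead.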

Reference Links:

[FLUX.1 Kontext dev by Black Forest Labs](https://huggingface.co/black-forest-labs/FLUX.1-Kontext-dev)

[FLUX.1 Kontext GGUFs by QuantStack](https://huggingface.co/QuantStack/FLUX.1-Kontext-dev-GGUF)

Pick your diffusion model type (gguf or regular) and the matching clip loader (gguf or regular) by dragging the link from one reroute to the other. Outside of that, you download the models with the handy shortcuts above for whichever VRAM size you have, put them in place, shove your ugly mug in the load image node and hit run. Super simple, no fiddling with crap, no 'anywhere' nodes, just simplicity.

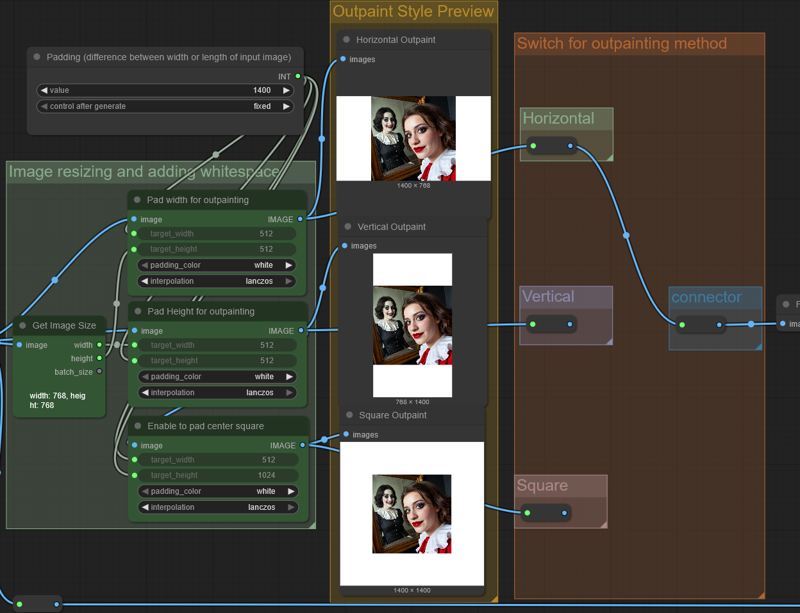

This workflow does not use image stitching. Instead, you adjust the amount of padding you want to add to your image and connect the type of outpainting you want: vertical, horizontal, or square. Be aware that square is fiddly. It's easier to do horizontal, then vertical.
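
To get a feel for how much canvas a given padding adds, here's a minimal sketch of the arithmetic. It rests on assumptions of mine that the workflow itself doesn't spell out: that the padding is added equally on both sides of the chosen axis, and that "square" means padding all four sides. The `padded_size` helper is hypothetical, so check the numbers against the padding node in the workflow.

```python
def padded_size(width, height, padding, mode):
    """Return (new_width, new_height) after outpainting padding.

    Assumptions (not confirmed by the workflow): padding is applied to both
    sides of the axis, and "square" pads left, right, top and bottom.
    """
    if mode == "horizontal":
        return width + 2 * padding, height
    if mode == "vertical":
        return width, height + 2 * padding
    if mode == "square":
        return width + 2 * padding, height + 2 * padding
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    # Horizontal first, then vertical, as suggested above.
    w, h = padded_size(1024, 768, 128, "horizontal")
    print(padded_size(w, h, 128, "vertical"))
```

Under these assumptions, doing horizontal and then vertical with the same padding lands on the same canvas size as one square pass, just in two easier steps.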

I believe in simplicity in workflows. Many times over, someone posts 'check out my workflow, it's super easy and it does amazing things', just for my eyes to bleed profusely at the amount of random pointless custom nodes in the workflow and the endless... truly endless amounts of wires, groups, group pickers, image previews, etc. Crap that would take days to digest and actually try to understand.

People learn easier when you show them exactly what is going on. That is what I strive for. No hidden nodes, no compacted nodes, no pointless groups, and no multi-functional workflows. Just simply the matter at hand.

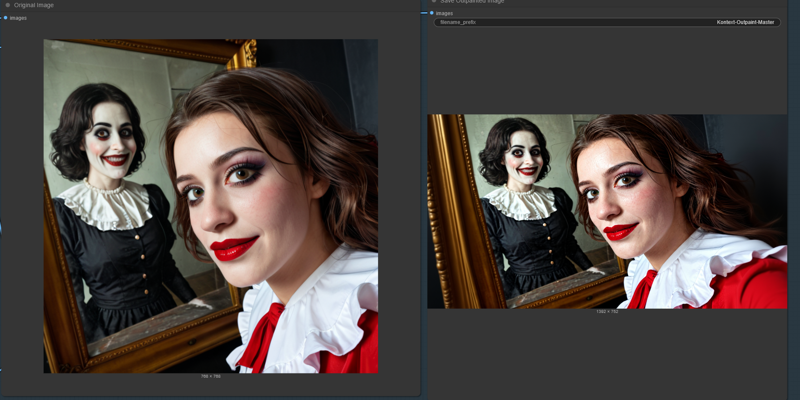

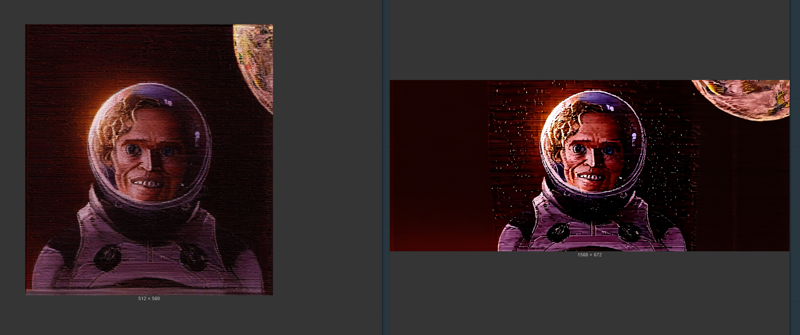

Examples: