Hello, fellow latent explorers!

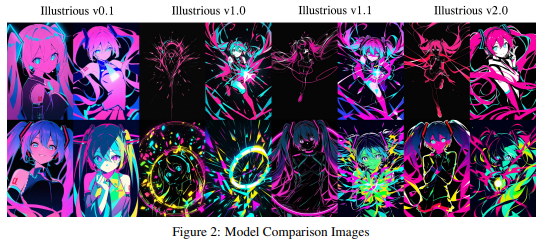

Recently we had a bit of drama with the Illustrious-XL 1.0 release. I purchased this model to support the creators and see what they brought to the table. In this article I'll first muse about my view of the current situation, delve into a few technical details that I see some community members are missing, and then proceed with the actual comparison. Because currently the only official thing we have is this image from their paper:

Otherwise it's some ELO scores with some relatively old models, that don't say much to me personally.

My goal is to check whether the innovations the authors are claiming are really that innovative. Feel free to skip the musing part.

~Musing

Here I'll throw a few jabs at authors and community

A quick recap on the whole situation.

IllustriousXL 0.1 was released in September 2024. It boasted a weak description saying it was trained on the danbooru dataset. It was actually leaked before release (this happens a lot with anime base models, doesn't it lol), so we have no idea what the authors were actually planning to do with it, but in their technical paper they state that they use this license: https://freedevproject.org/faipl-1.0-sd/ Overall, licensing around it goes back and forth; a quick search turned up at least 3 different licenses stated for it, so ugh. But the community jumped on this train, since it had a lot of upsides compared to the previous big anime model, Pony Diffusion XL V6, like being built upon actual danbooru tags etc.

0.1 is a rather rough and unbalanced model by itself, but now we have a lot of amazing finetunes and even new models that use it as a base. Back in September the authors mentioned that they already had a few new versions of their model cooked, but no actual communication was done properly on any channel. This approach will continue later. Now, half a year later, they released their 1.0 version as a... closed source model.

This sprouted a full-on backlash from the community, since previously they stated open source everywhere. Also, they edited the TOS of their original 0.1 model on HF. The 1.0 model was initially available for use only in the online generation services of Civitai and TensorArt. Comments were in the range from awful to hilarious. Later it became available to download for $10 on Tensor (funnily enough, for whatever reason it was available for a fraction of the cost in Chinese currency). And later it became available for download on Civitai for 10k yellow buzz. And of course it was leaked, both as a merge and in its original state, the same day.

This all looks like a poor damage control attempt. Combined with the rather strict licensing of the original and the statement that 1.0 is backwards compatible with 0.1 LoRAs, it led to the popular thought that they just wanted to cash in on half a year of others' work. Is that so? Who knows, communication is really poor to date, with them only releasing a "roadmap" of five models with 0 dates. Also we have a 1.1 model that was confirmed by Civitai to be online only, with no weights published. And it is online only on Tensor, available for a few days already (they seem to love that Chinese platform, or Civit is just slow as always). What's the difference? Well:

The model shows slight difference on color balance, anatomy, saturation

Apparently half a year was not enough to prepare a description, road map or even technical details about how to use their model, or even basic communication. Nicely done!

But the community, oh. A whole different level. Apparently people are completely spoiled by free stuff, and all their hateful responses let the authors play the victim and say that they just wanted some profit to cover their compute expenses. Seriously, chill. This just shifts attention from actual issues like poor documentation, no controlnets and the model capability itself. Very little constructive criticism.

While I totally support that approach and willingly paid for it (as soon as I had an option that I could actually use), this is a bit different at its core. This just feels quite fishy, because they still cash in on the community's work for 0.1. There are better ways to get profit out of an open source project, but that is a different discussion. Add the complete lack of proper communication (that they had half a year to prepare for) and a bunch of excuses, and I cannot relate to them.

Technical stuff

I see that with multiple anime models out there and over a year with Pony, some people don't get what the point of this whole thing is and why not just use a LoRA for Flux, for example. It is because of porn. Those models give you a ridiculous level of control over characters, camera and scenes due to the specific way they are trained. Flux is able to give you a lot, but what should you prompt? Here comes danbooru. They tag images with specific tags, making it easier to create a prompt for specific character scenes, styles, composition etc. Danbooru can be used as a library. But it also limits you to a certain degree. That's the biggest limitation and problem. Usually these types of models are severely overfitted to strictly follow specific tags and styles, up to the point where they "forget" concepts present in the original model. My thought is that it is mainly an issue of text encoder limitation, but we will see if we can have the best of both worlds. That's why NLP capability is

Another thing is that the danbooru dataset is highly biased. The guys behind Illustrious gave us a good rundown of it in their technical paper, so I advise you to check it.

This forgetting of concepts led to separating those models into a different category. Here comes the Base model thing; I also see people not really getting what it means. Currently it does not only mean that a model was created from scratch, like SDXL or Flux. It also includes such models as Pony or Illustrious, where the authors did the "heavy lifting" of finetuning an existing model to the point where it is something else. And here comes the most confusing part. Pony is a finetune of SDXL, made on a completely different dataset. And we call it a base model. Animagine is a finetune of SDXL (or maybe of previous versions of Animagine) and we call it a base model. Yet its base model is listed as SDXL here on Civit. Kohaku-XL is a finetune of SDXL. Illustrious is a finetune of Kohaku and it is listed as a base model. Noob-AI is a finetune of Illu0.1, but they greatly expanded the dataset and even trained a v-pred version. Yet it is not listed as a separate base. Honestly even I have no idea why, since it went a completely different route. But they all share the same tag library, with only Pony and Noob expanding it. My guess is that's the real reason why all their LoRAs are kinda interchangeable to a certain degree despite the different "Base model".

What do we actually need from a base model? Honestly, it has to be as unbiased in style as possible, thus making it easier to shift the latent where you need when creating a LoRA. If you have a 2.5D style finetune, LoRAs that are not overtrained and are big enough will give you that 2.5D look on it. But if you train on it, your LoRA will probably inherit that 2.5D. That's why stylistic comparison of the models is not the point of this article.

So what should I actually compare?

This took me a bit longer than I expected. Well, let's check model description (taken from original model description):

1536×1536 Native Resolution:

First-of-its-kind Stable Diffusion XL model to natively support 1536px resolution. Generates images with exceptional detail, sharpness, and clarity at high resolutions that were previously unattainable in SDXL. It supports wide range of resolution between 512x512 to 1536x1536 - resolution such as 1248x1824 is capable without any high-resolution modifications.

NLP + Tag-Based Prompting:

Integrates advanced natural language processing with Danbooru tag-based prompts. Still, tag-based approaches are more concise and accurate - but this hybrid prompt system means you can use plain English descriptions or precise tags (or both) – the model understands and leverages each, giving you greater control and nuance in image generation.

Extensive Compatibility:

Fully compatible with a wide range of extensions and add-ons. LoRA, ControlNet modules, and other adaptation methods trained on Illustrious v0.1 work seamlessly with v1.0. You can mix and match enhancements to fine-tune style, pose, or composition without breaking compatibility.

Pretrained Base Model:

Illustrious XL v1.0 is provided as a pretrained base checkpoint without any fine-tuning on specific aesthetics or biases. This "raw" model offers a robust foundation for further training – ideal for creators who want to apply their own fine-tuning (e.g. LoRA training) to achieve particular art styles or specialized outputs. It's a flexible starting point for new innovations.

OK, so we have a resolution that was previously unattainable by SDXL. This just rubs me the wrong way, since with most anime finetunes you kinda have to go beyond SDXL resolution to get a crisp and detailed background.
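To put those numbers in perspective, here is a quick back-of-the-envelope sketch in Python. It assumes the usual 1024x1024 as the default SDXL resolution (my assumption, the description above does not state it), and the helper names `pixel_count` and `area_ratio` are made up just for illustration.

```python
# Rough pixel-count comparison of the resolutions mentioned in the description.
# ASSUMPTION: 1024x1024 is taken as the "default" SDXL resolution.
SDXL_DEFAULT = (1024, 1024)

RESOLUTIONS = {
    "claimed native (1536x1536)": (1536, 1536),
    "example from description (1248x1824)": (1248, 1824),
}


def pixel_count(width, height):
    """Total number of pixels in an image of the given size."""
    return width * height


def area_ratio(width, height, base=SDXL_DEFAULT):
    """How many times more pixels than the base resolution."""
    return pixel_count(width, height) / pixel_count(*base)


for name, (w, h) in RESOLUTIONS.items():
    print(f"{name}: {pixel_count(w, h):,} px, {area_ratio(w, h):.2f}x the default area")
```

So both resolutions ask the model for a bit more than twice the pixels of a default SDXL image, which is why duplications and stretched bodies are the expected failure mode later on.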

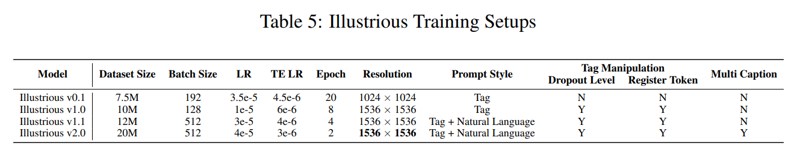

NLP. Kinda hard to test, since booru tags actually cover a lot of stuff. But wait a second. Let's check their paper for specifics:

Huh? No NLP for 1.0? Guess they forgot what they did half a year ago. Also we can easily assume that 1.0, 1.1 and 2.0 are further finetunes of 0.1 (look at epochs, batch size and LR). More reasons to take a look at NLP. Also, half a year for 30 pages, some of which are full-on images, cmon.

LoRA compatibility will not be the point. In my tests, normally trained and undertrained LoRAs like my detailer (shameless plug, hehe) provide bad results and should be retrained. Overtrained ones provide decent results, but detailing can be off. Interestingly, LoRAs trained on Noob v-pred provide relatively good results.

If you delve deeper into their paper, they claim to work with dataset bias; I'll check that. Not much more, to be honest.

Those are big claims, so I am willing to check how 1.0 delivers against other newer models specifically in these scenarios and whether it is that much of a breakthrough.

Configuration

I'll generate everything in Forge with the following parameters:

1248x1824 resolution

Once again, I strongly emphasize that this resolution is not recommended for other models.

40 steps

DDPM sampler

Exponential scheduler

CFG 5.5

ReSharpen extension On, 0.9, 1-Cos

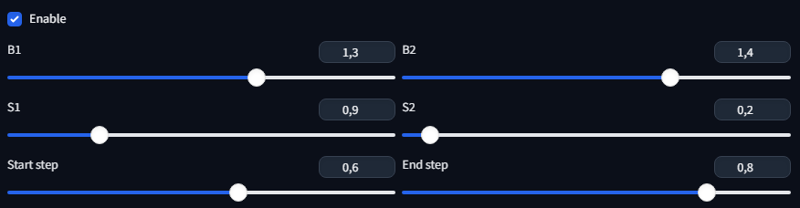

FreeU on,

SAG on, default parameters.

Why this way? It gives consistently good results on all models. The 3 extensions are there purely for consistency, without changing the composition of the image too much. Because we are in 2025.

For v-pred models I'll add LatentModifier manipulations because otherwise it just doesn't really work:

You can read my other articles where I dive deeper on how to make NoobAI v-pred work in Forge.

In my first post I used all that at once, but later I figured out that illu 1.0 gives a slightly worse image with LatentModifier enabled, so I'll do 2 separate passes: one for eps models and one for v-pred.
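For reference, the whole setup above can be written down as a small settings sketch in Python. This is not a Forge API or a real config format: the class and field names are my own hypothetical ones, only the values come from the list above, and extension-specific parameters (like ReSharpen 0.9, 1-Cos) are left out for brevity.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class GenSettings:
    """Hypothetical container for the generation settings listed above."""
    width: int = 1248
    height: int = 1824
    steps: int = 40
    sampler: str = "DDPM"
    scheduler: str = "Exponential"
    cfg_scale: float = 5.5
    # Extensions enabled on every run (names only, parameters omitted).
    extensions: tuple = ("ReSharpen", "FreeU", "SAG")


# Pass 1: eps models, settings exactly as listed.
EPS_PASS = GenSettings()

# Pass 2: v-pred models, same settings plus LatentModifier on top.
VPRED_PASS = replace(EPS_PASS, extensions=EPS_PASS.extensions + ("LatentModifier",))

print(EPS_PASS)
print(VPRED_PASS)
```

The only thing that changes between the two passes is the extra extension, everything else stays identical so the results remain comparable.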

Except for the first bias comparison, all images will be generated on 4 different seeds and dumped into a single post, with links to each image here. Unfortunately, preview in articles is nonexistent and high resolution images come out too tiny. Posting them one by one here would make it endless. All images will be uploaded as jpg, because 70mb images are too big.

4 random images is not the ultimate way to compare, but this is not an academic paper.

How to look at images

Open the link in a browser, click on the image to get into full screen, right click and choose open in new tab. Now you can zoom in as much as you want.

Models used

Base SDXL. Just for laughs. Let's see how far we got from it.

AAM XL (Anime Mix) - already old mix that is closer to SDXL.

Illustrious XL 1.0 - main reason for this article.

Illustrious-XL 0.1 - because why not?

NoobAI-XL (NAI-XL) EPS 1.1 - claims to be a new base tuned on illu0.1. Let's see if it is actually that different.

WAI-NSFW-illustrious-SDXL v11 - Finetune of illu0.1 that I think got a bit too far to be called just a finetune

Hassaku XL (Illustrious) v1.3 Style A - good merge that gave me consistently good results and can really apply artist tags without much interference from its original style.

Animagine XL 4.0 v4 Opt - Another separate finetune of SDXL making it a base. Here because it is a new one.

NoobAI-XL (NAI-XL) v-pred 1.0 - something really new.

Obsession (Illustrious-XL) v-pred 1.0 - because Noob v-pred sits there alone, so why not compare it against finetune. Because merges with eps just ruin whole lighting thing.

Pony V6 is out because there is no way you can look at its anime without tears, unless you add a bunch of LoRAs and positive and negative prompt manipulations. You can run it separately with the same prompts yourself and share the results. Also it has odd tags (plump being voluptuous etc.), making it even harder to compare. All other models here adhere to danbooru tags, making it a lot easier.

Common tags and process

Since I am too lazy to change prompts and stitch images afterwards, I'll use the inbuilt Forge Prompts X/Y/Z function with a single prompt, multiple models and 4 random seeds that will be consistent throughout the comparison.

For this I built a monstrous quality prompt. With every image goes:

Positive: very awa, masterpiece, best quality, highres, newest, year 2024, absurdres, highres, high score, great score,

Negative: worst quality, low quality, bad quality, lowres, worst aesthetic, signature, username, error, bad anatomy, bad hands, watermark, ugly, distorted, (mammal, anthro, furry:1.3), (long body, long neck:1.3), extra digit, missing digit, (censored:1.3), (loli, child:1.3), stomach bulge, bar censor, twins, plump, expressionless, steam, extra ears, futanari, mosaic censoring, pixelated, fisheye, low score, bad score, average score, monochrome, sketch, nsfw, explicit, blurry, blurry background,

Those are the score tags used all around, and now you see why adding Pony would make it just worse. Expect stuff like awa or 2024 to pop up here and there on some models that do not support those tags.

A kinda big thing that I found - add sketch, monochrome, blurry, blurry background to the negative for illu1.0, it greatly improves quality (if you don't want a sketch or monochrome image ofc).

Also I am trying to keep it at least PG-13, but we'll see how it goes. The dataset has biases, as I mentioned earlier 😊 Just to get it out of the way - in my tries illu1.0 feels worse in the nsfw department than other models. Write a comment if you want a separate NSFW comparison done this way.

I'll first write the prompt without quality and negative, then explain what I expect to see and why it is what it is, then generate and analyze the outcome.
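If you want to reproduce the same grid outside of Forge, the process boils down to something like the sketch below. Everything here is an assumption for illustration: the function names are made up, the seed values are placeholders (I am not listing my actual seeds), and I assume the quality tags simply go in front of the scene prompt. The model labels are the short names I use in the result lists further down.

```python
import itertools
import random

# Quality tags that go with every image (copied from the positive prompt above).
QUALITY_TAGS = (
    "very awa, masterpiece, best quality, highres, newest, year 2024, "
    "absurdres, highres, high score, great score,"
)

# Short labels of the compared models, split into the two passes.
EPS_MODELS = ["Illu1.0", "Illu0.1", "Noob eps", "WAI", "Hassaku", "Ani", "AAM"]
VPRED_MODELS = ["Noob v-pred", "Obsession v-pred"]


def pick_seeds(count=4, rng_seed=0):
    """Pick `count` seeds once; a fixed rng_seed keeps them the same on every run."""
    rng = random.Random(rng_seed)
    return [rng.randrange(2**32) for _ in range(count)]


def build_jobs(scene_prompt, models, seeds):
    """One job per (model, seed) pair, all sharing the same full prompt."""
    full_prompt = f"{QUALITY_TAGS} {scene_prompt}"
    return [
        {"model": model, "seed": seed, "prompt": full_prompt}
        for model, seed in itertools.product(models, seeds)
    ]


SEEDS = pick_seeds()
jobs = build_jobs("1girl, standing, full body,", EPS_MODELS, SEEDS)
print(f"{len(jobs)} images for the eps pass")
```

Reusing the same `SEEDS` list for every prompt is what keeps the comparison consistent: each model sees exactly the same seed and prompt, so only the checkpoint differs.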

Musing~ I wanted to add detailed and intricate to the quality tags to shift the style to my liking, but had to drop it due to Animagine generating a weird pattern like this:

All around. Just fyi.

The real comparison starts here

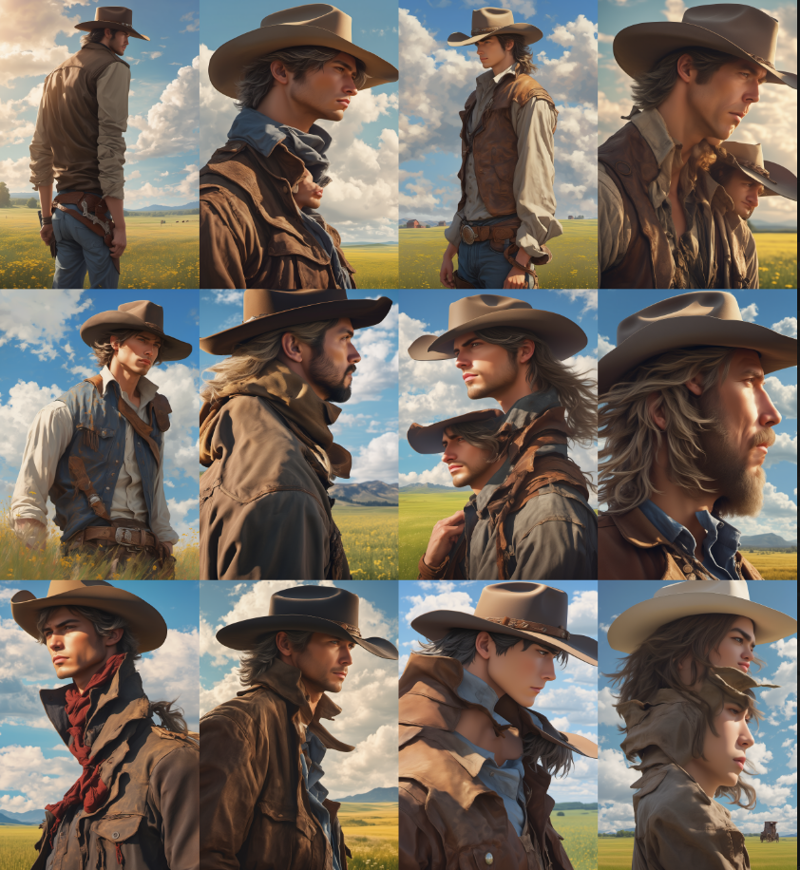

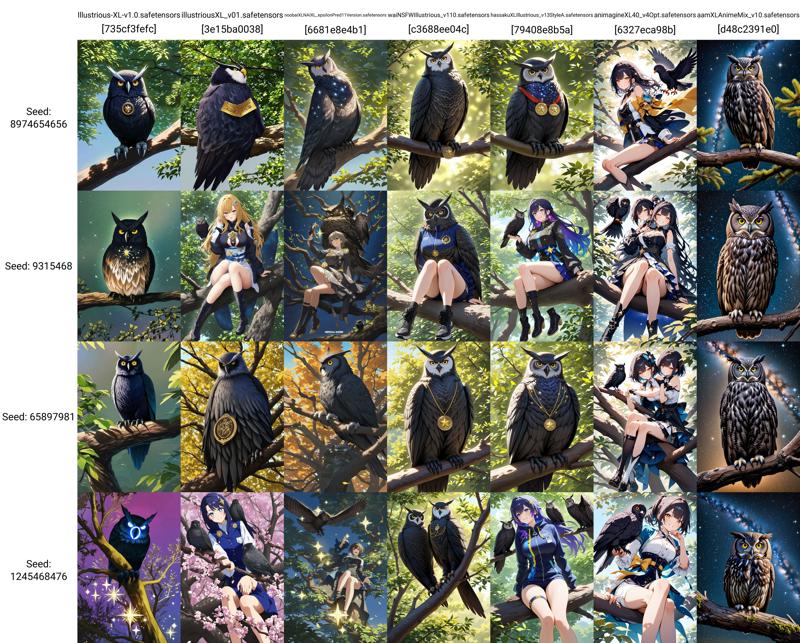

Ok, first let's check their booru dataset debias. This one is a bit different from the others: I generated 12 images, and going through them one by one would be too long for no real reason. I assume we all know the biases.

Prompt: close-up, looking away, from side, cowboy shot, hair, eyes, meadow, sky, cloud,

Expectations: at least some males.

It can generate consistent imagery at 1248x1824 without major duplication.

Well, 100% females. And some scenery. Actually, I had to prompt eyes, hair to even get a person in the image. While playing around with it, I found that with shorter prompts, even just the scenery tag sometimes makes it produce plain landscape shots. That feels more like an artificially added bias than actual weighted debiasing.

Let's see how base SDXL performs under the same conditions. I added "anime style", removed cowboy shot (otherwise it would be all cowboys) and did a quick run with base SDXL:

As we can see, SDXL was not trained on the booru dataset but has the same bias. Major deformations and duplications as expected, and not really anime. Also, backgrounds are actually not that bad. From now on SDXL will be excluded from further comparison.

To be honest I was startled a bit by this behaviour of illu1.0 with scenery and want to demonstrate it properly:

Prompt: 1girl, cowboy shot, looking away, from side, meadow, sky, cloud, scenery,

No odd stuff this time, like mixing in cowboy shot and close-up.

Only half of the images actually followed prompt. Not even tiniest girls in the corner or in petals.

So I ran same prompt against illu0.1:

All images actually have something representing a human. But the last one is a stretch. It's not really visible at this resolution, but it looks like this upon closer inspection:

But wait a second. Wasn't high resolution imagery without major deformations etc. a breakthrough of illu1.0? Their paper claims that they did not train 0.1 at 1536x1536. Maybe that's actually thanks to the person behind KohakuXL, which the people behind illu used as a base? Who knows...

Post with all images is here: https://civitai.com/posts/13348058

Well, let's start with a relatively simple 1girl to check the other models' capability to work directly with such a resolution. Just a reminder, 1248x1824 is a real stretch there, favoring illu1.0 greatly.

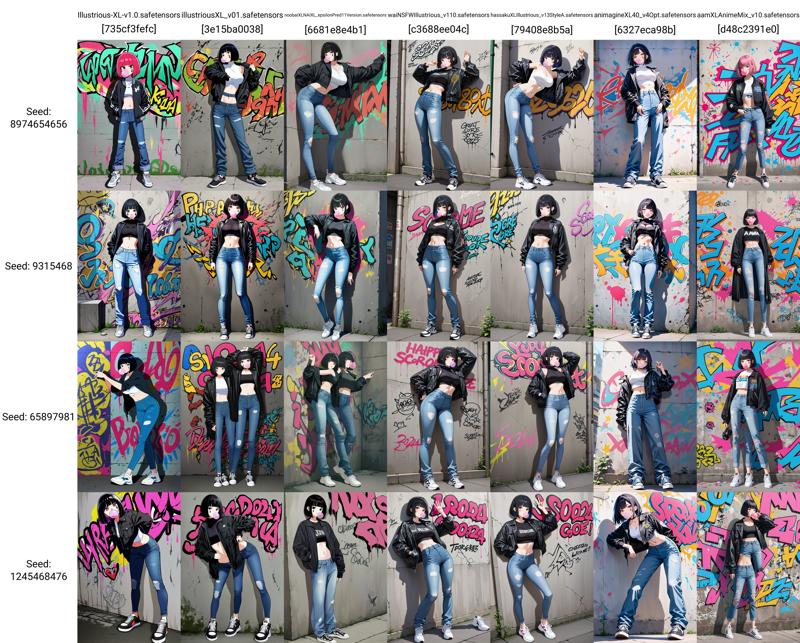

Prompt: 1girl, standing, full body, teardrop facial mark, black jacket, open jacket, crop top, midriff, jeans, sneakers, chewing gum, bob cut, blunt bangs, against wall, concrete, graffiti,

Expectations - duplications with models other than illu1.0, distorted body shape.

https://civitai.com/images/59686139

https://civitai.com/images/59688898

Illu1.0 - good performance, but 3rd seed has rather mangled arms. 4th has rather odd jacket.

illu0.1 - distorted body on first seed, duplications on 3rd and 4th. Second is great

Noob eps - duplications only on 3rd seed.

WAI - last image has random sneaker, otherwise good.

Hassaku - perfect performance.

Ani - odd jacket on 4th.

AAM - creepy faces, distortions on 3rd and 4th seed. No teardrops at all.

Noob v-pred - odd hands on first seed, otherwise great.

Obsession v-pred - fingers are weirder than on other models, last seed has somewhat distorted hand. Otherwise great.

Well, "First-of-its-kind Stable Diffusion XL model to natively support 1536px resolution". Let that sink in: on the first comparison, Noob v-pred, Hassaku and Obsession performed better. The first two were released in 2024. Noob also has extensive guidelines and documentation available.

Small inpainting is needed everywhere, but the 3rd and 4th seeds on illu 1.0 I'd rather throw in the bin than work on later.

Ok, that was rather simple. Let's step it up, and not by just a bit.

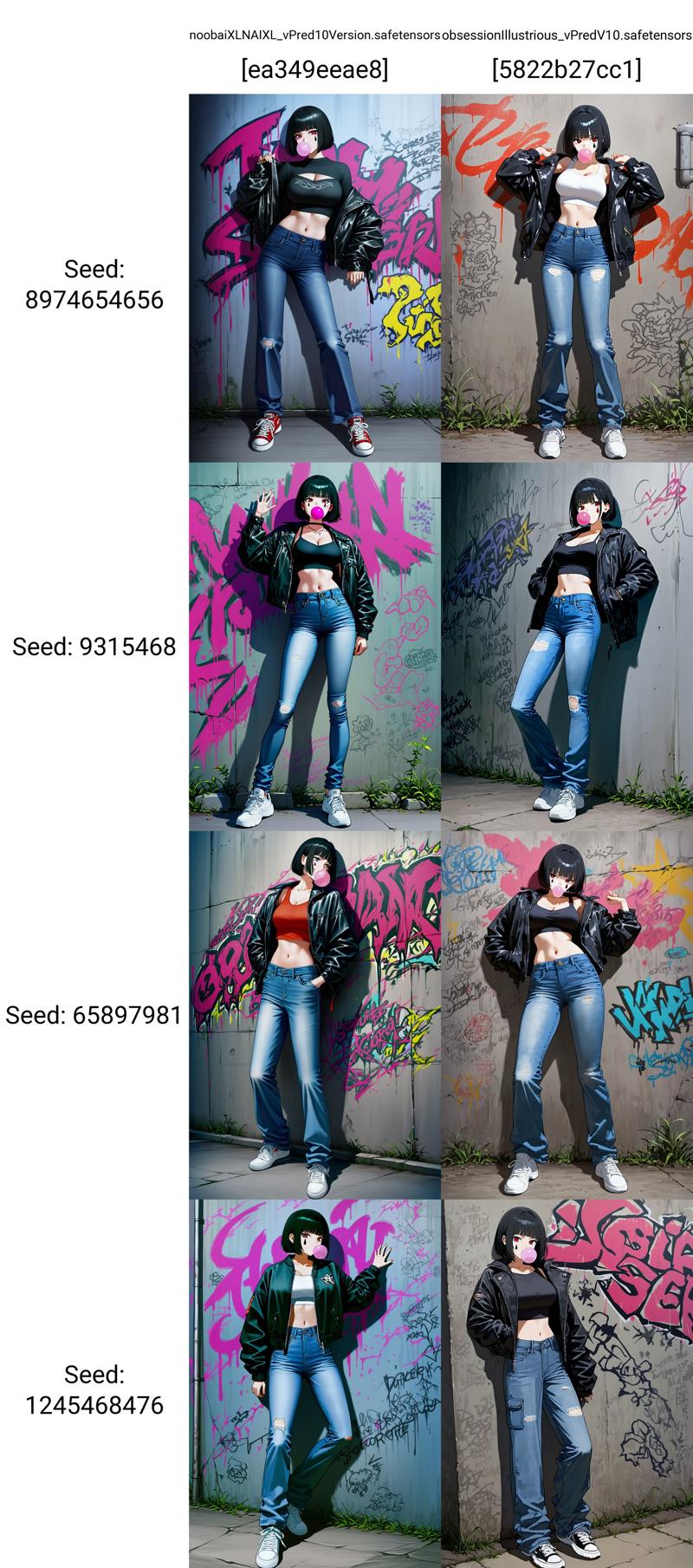

Prompt: 2girls, ganyu \(genshin impact\), raiden shogun, genshin impact, walking, side-by-side, on street, cherry blossoms, trees, scenery, petals, full body, looking at viewer, official art,

Expectations: leakage between concepts of two characters, 3 or 4 characters on scene because of scenery tag which should steer image towards wide-shot. Official art - because why not, let's see how models will respond to it. Raiden is kinda interesting since it does not have official character_(series) tag on booru.

https://civitai.com/images/59694260

https://civitai.com/images/59694287

Illu1.0 - smol Ganyu in the first seed, second is meh, but inpaintable, 3rd is ok, 4th is good. None has official outfits, casual clothing or serafuku everywhere. 1st seed has rather sloppy fading background.

Illu0.1 - 3 has doubles, last one kinda did it, but not really side-by-side. Massive AI slop in background. Just look at that:

Noob eps - 3-4 characters instead of 2, slop on 2nd image. Bleeding concepts.

WAI - 3-4 characters, almost no bleeding except second seed where they are all wearing same clothes.

Hassaku - 3-4 characters, almost no bleeding.

Ani - 3-4 characters, some are kinda mangled.

AAM - clearly has no idea about those characters, but that is expected. Otherwise same issues as all above. Backgrounds look better at first glance, but only until you zoom in and look closely.

Noob v-pred - 3-4 characters, almost no bleeding. Some slop in the backgrounds.

Obsession v-pred - same as previous but with this hidden gem in the middle of third seed:

Well, more is not better in this case: only one image is good enough, and it is from illu1.0. But it also has the least detailed backgrounds, and the only one with some detail looks like fading slop. My verdict is that all models failed miserably, with illu1.0 failing less. Generating a not-so-complex scene directly at this resolution is not here yet.

Now let's bridge to NLP.

Prompt: a girl dressed in maid costume cleaning living room with a vacuum cleaner,

Based on a suggestion in the comments to my previous quick comparison.

This should be easy since technically everything here is covered by booru tags.

Expectations: mass of sloppy vacuum cleaners with hoses all over the place. Plus deformations due to resolution being too big.

https://civitai.com/images/59700293

https://civitai.com/images/59700307

illu1.0 - 1st one is sloppy, others are good. 2nd could be considered ok, but the background is kinda off and the vacuum is so high-tech that it fits into a brush. Last one is inpaintable, but just look at those hoses in the background. Also, where the heck does that hose go in the third seed 🤔

illu0.1 - all kinds of slop and duplication in last seed.

Noob eps - generally messed up imagery, but not as bad as 0.1. Except the first seed. Upon second look it is just as hilarious.

WAI - actually not that bad. But hoses...

Hassaku - duplicated vacuums, otherwise good.

Ani - that's not how cleaning works unfortunately. Also duplicated character in last seed.

AAM - some actual vacuums! But it just cannot handle such resolution.

Noob v-pred - vacuums were not expected. They are a lot more realistic than I thought they would be. But for whatever reason it shifted towards a realistic background and the result is sloppy. Also see how lighting changes the scene.

Obsession v-pred - finetune shifted it back towards anime so they look better than base.

No single perfect image and I can't say that illu1.0 is any better in this case because vacuum handling is messed up anyway with pole and hoses being out of composition. But only AAM can be considered to fail miserably at this resolution.

Let's now dive deeper into NLP:

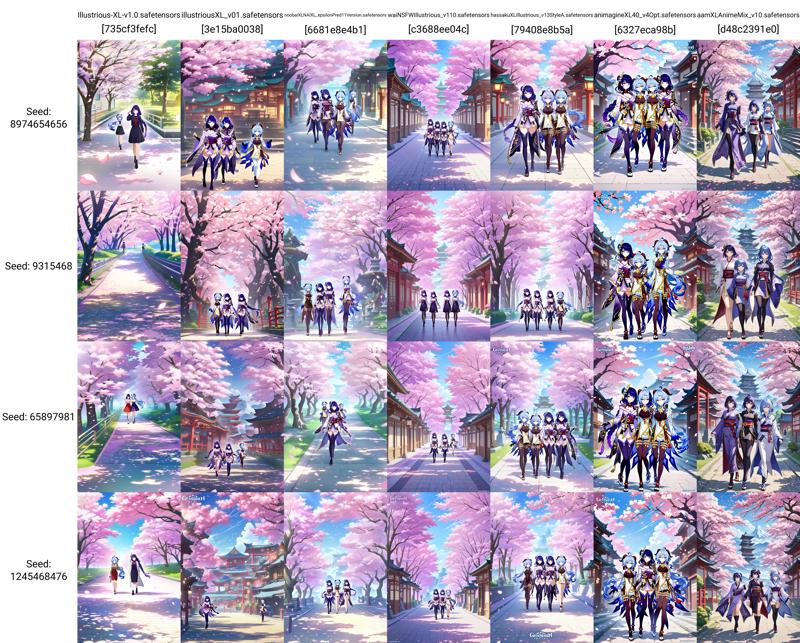

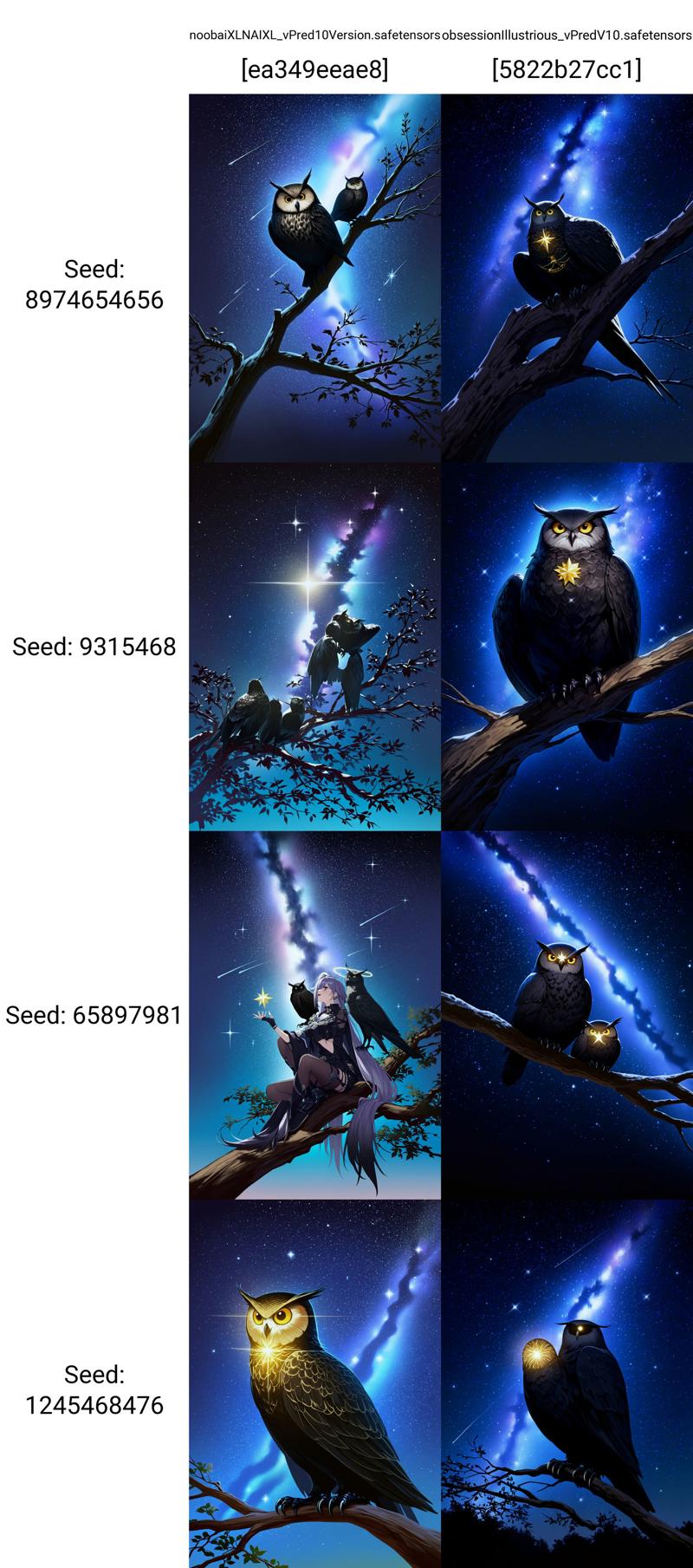

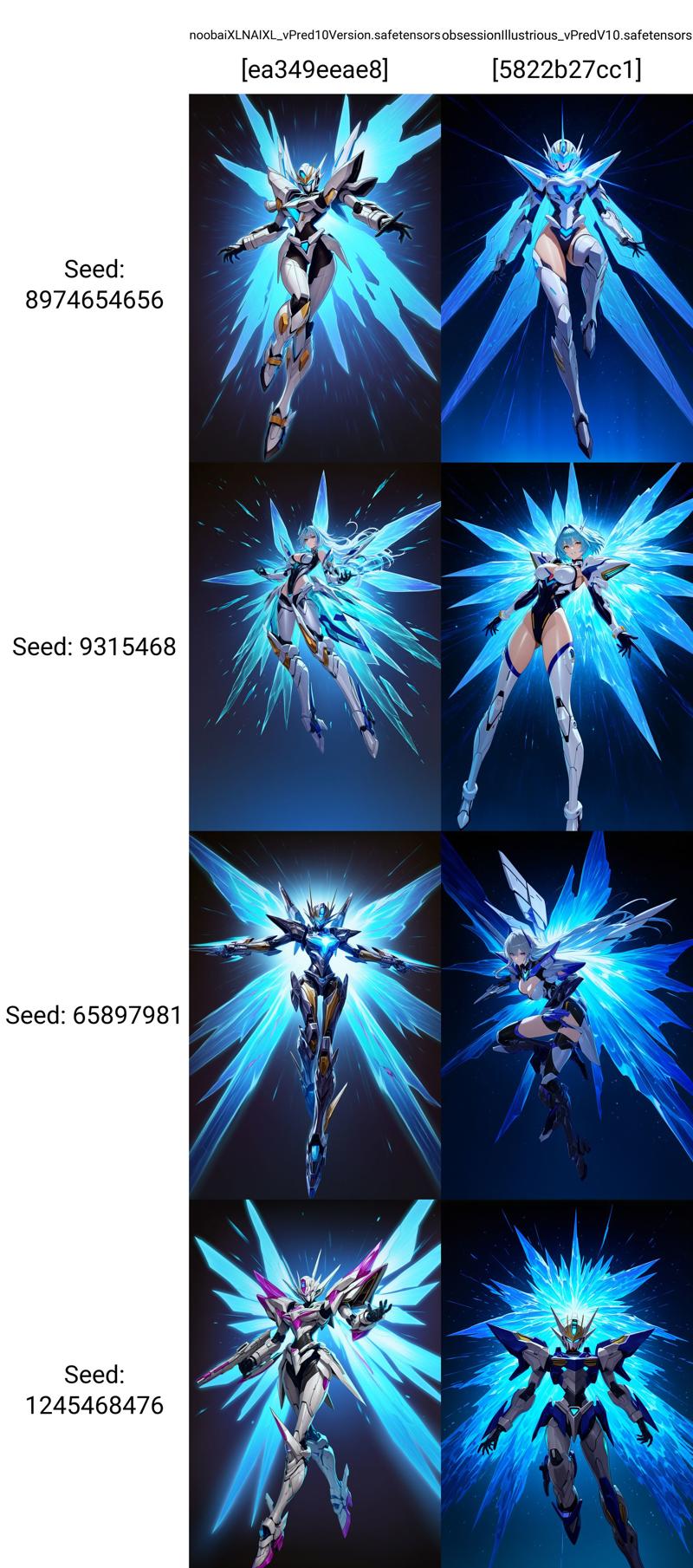

Prompt: photo of an black owl with galaxy sparkling stars, sitting on a branch, national geographic photography, award winning,

No idea what it is supposed to be, but hey I expect the result to be something interesting.

Expectations: owlgirls everywhere, since the no humans tag is not in the prompt, and the models here are highly character based. national geographic is a booru tag, but with only 32 images that are kinda random, so it should not poison it.

https://civitai.com/images/59706655

https://civitai.com/images/59706680

Illu1.0 - good, but not a single hint of galaxy. Only second is what I kinda wanted.

Illu0.1 - distortions, slop, girls. But hey, coat on 2nd seed has some galaxy to it.

Noob eps - first is interesting but distorted, others meh

WAI - two are owls with star medals, second seed is well, what it is. Last one has duplications.

Hassaku - 1st has two medals, but it can be considered ok, 3rd is good, others have duplications.

Ani - all girls, owls look more like a pigeon, duplications.

AAM - kind of a typical SDXL picture with a space background.

Noob v-pred - 1st has two owls, 2nd and 3rd are slop, 4th is actually good.

Obsession v-pred - last two have duplications otherwise ok.

V-pred in general and AAM show better NLP prompt adherence, but illu1.0 performs better at this resolution.

Now let's go all in.

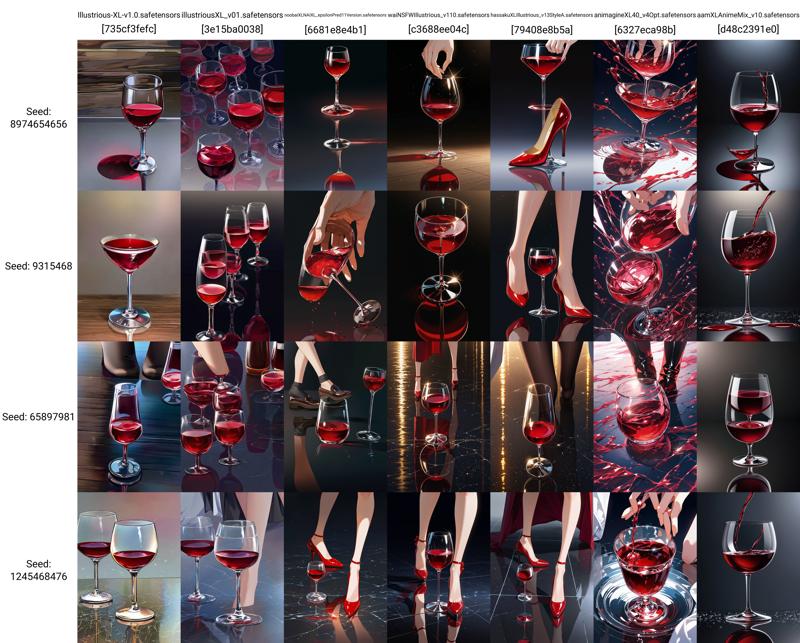

Prompt: glass of red wine standing on a reflective surface,

Expectations: bunch of legs all over the place and no reflections or something like wood with reflections all over it. Also 1girl fitted somewhere if no legs are present.

https://civitai.com/images/59711725

https://civitai.com/images/59711761

Illu1.0 - famous reflective wood on 2nd and 3rd, unexpectedly a bunch of duplications. First is kinda on point, but a red blob ruins it. It was probably supposed to be a shadow, but something went wrong.

Illu0.1 - you called that duplications? Hold my beer!

Noob eps - outside of legs and a completely messed up 2nd seed, it is not as bad as 0.1.

WAI - reflective wood and a character on every image. I see you:

Hassaku - some legs for you.

Ani - surreal for no apparent reason.

AAM - SDXL peeks at you through it. All are wrong, but that's technically a single glass of wine on reflective surface.

Noob v-pred - no legs, but 2 full on silhouettes. Actually closer to prompt than Illu.

Obsession - more of that, but less silhouettes. Also less reflective.

Somehow every model followed the prompt, with AAM, Illu1.0 and the v-pred models on top. Though v-pred has fewer legs and no duplications, despite not being able to work with this resolution on paper. Also I am not fond of reflective wood, so in my opinion it goes Noob v-pred > Illu1.0 > AAM.

Now let's pick something that booru tags do not cover:

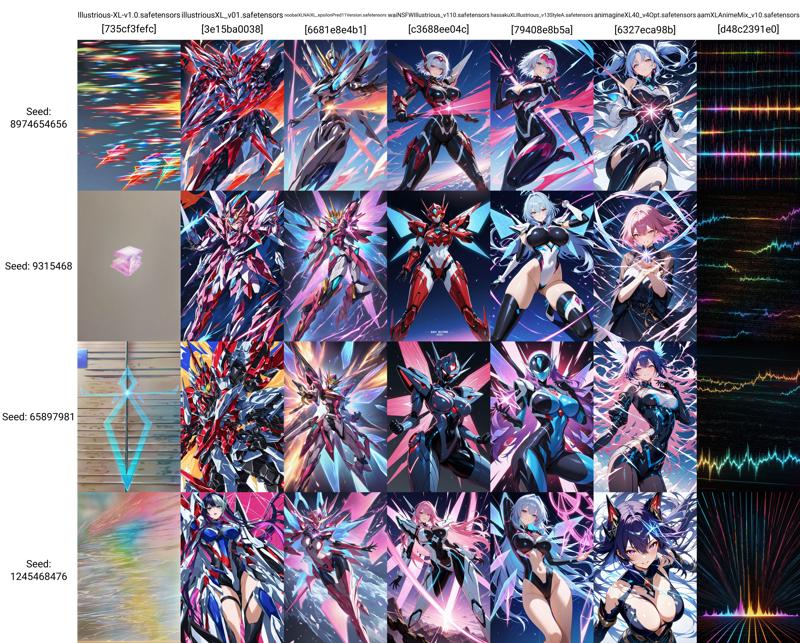

Prompt: digital fourier transform of a waveform depicted as an optical shift,

Expectation - something wavy, no idea. But will probably just get a bunch of 1girls with optical illusions.

https://civitai.com/images/59892682

https://civitai.com/images/59892685

AAM actually did the job here. Illu1.0 did not generate girls, but went more for an optical illusion, which is not really the prompt. Others are just a bunch of mechas for whatever reason.

Now let's go for something funny here.

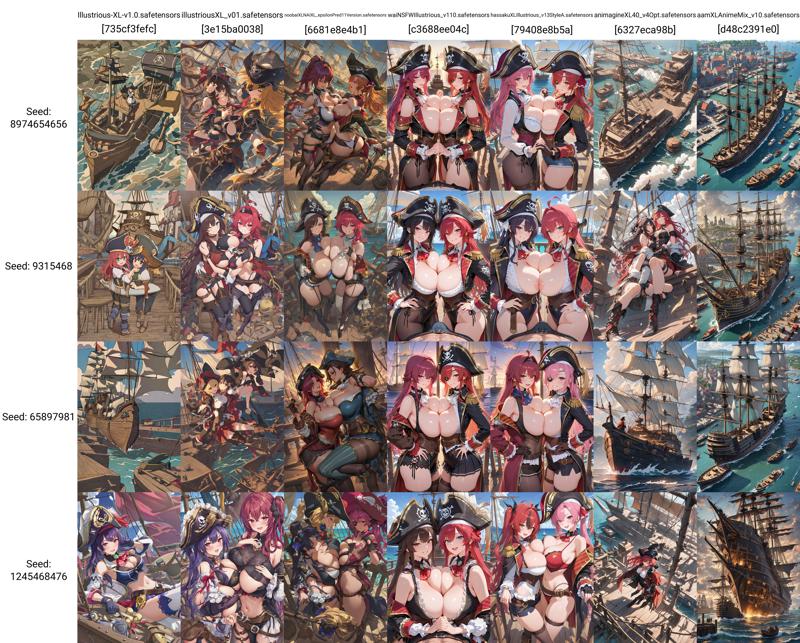

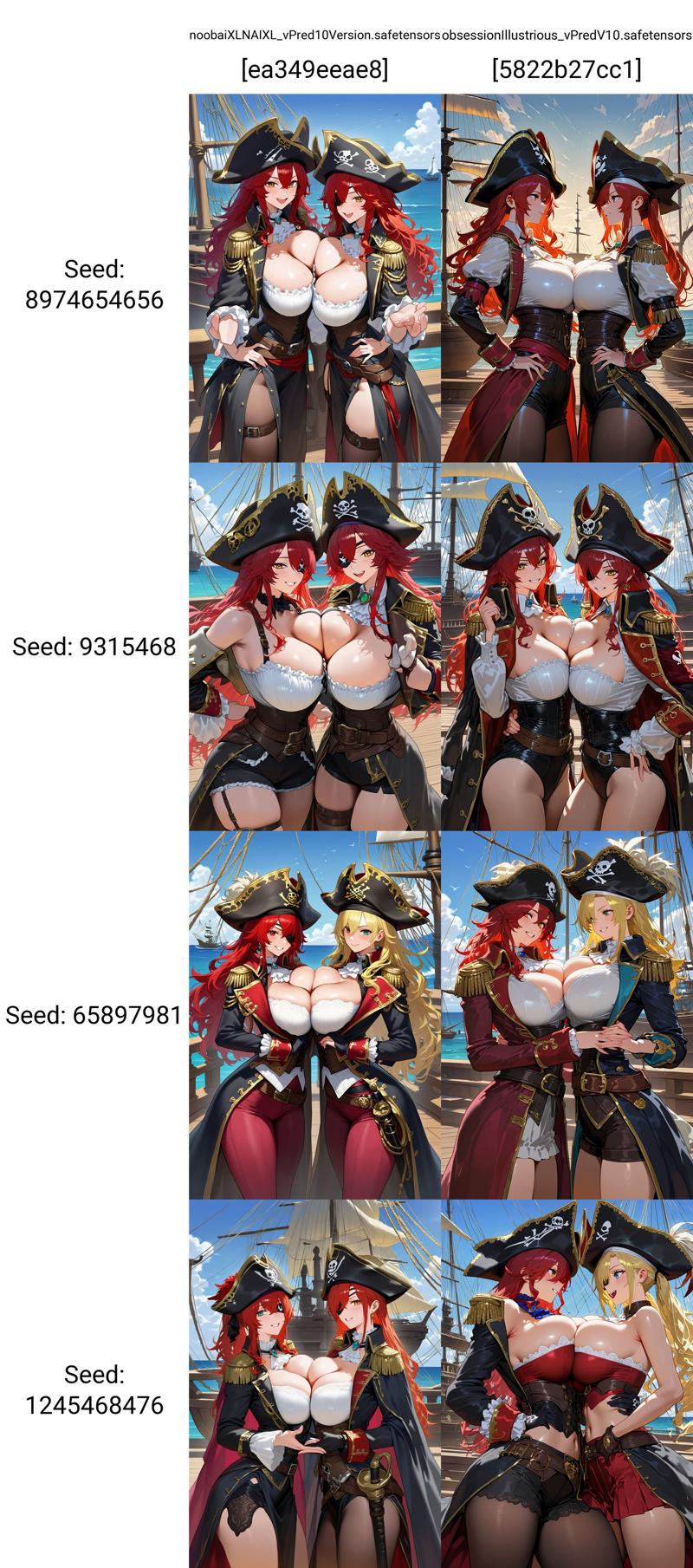

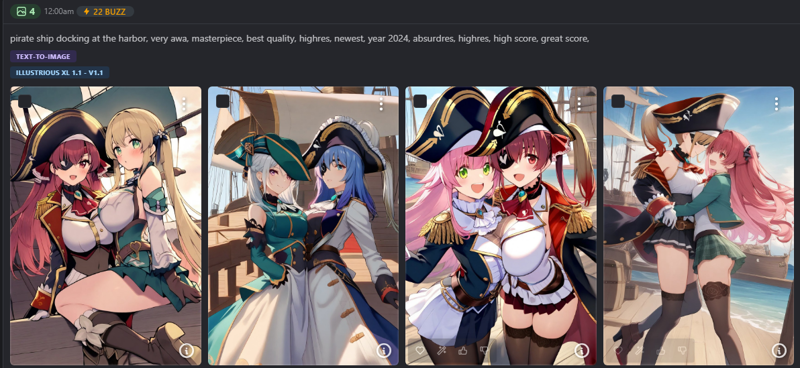

Prompt: pirate ship docking at the harbor,

Why is that funny, you may ask? Well, pirate ship is not a booru tag; harbor is, but it has a relatively low presence (509 images) against symmetrical and asymmetrical docking (22k total).
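To show how lopsided that is, here is a tiny Python sketch using just the two counts quoted above. The 22k figure is my rounded total, so treat the result as a rough estimate; `tag_share` is a made-up helper, not something from a booru API.

```python
# Approximate danbooru tag counts quoted in the text.
# "docking" lumps symmetrical and asymmetrical docking together (about 22k total).
TAG_COUNTS = {"harbor": 509, "docking": 22_000}


def tag_share(tag, counts):
    """Fraction of the listed images that carry the given tag."""
    return counts[tag] / sum(counts.values())


harbor_share = tag_share("harbor", TAG_COUNTS)
times_more = TAG_COUNTS["docking"] / TAG_COUNTS["harbor"]

print(f"harbor share: {harbor_share:.1%}")
print(f"docking images per harbor image: {times_more:.0f}")
```

With the harbor meaning that badly outnumbered, a tag-trained model has very little reason to read "docking" the way the prompt intends.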

Expectations: bunch of pirate girls with strong nsfw bias.

https://civitai.com/images/59897632

Had to add "general" tag to v-pred to keep it sfw.

https://civitai.com/images/59897656

Illu1.0 - first one is an actual pirate ship, but the overall image is very sloppy. 3rd one is close to the prompt, but has no pirate stuff and also a higher than usual amount of slop.

Illu0.1 - distortions and duplications.

Noob eps - most images are better than illu0.1 but still sloppy.

WAI - no harbor, strong nsfw bias.

Hassaku - no harbor, strong nsfw bias.

Ani - 1st seed looks suspiciously close to Illu1.0, but is missing pirate theme, 3rd is a ship just like Illu1.0, 2nd is pure slop, 4th is actually on point. But massive slop in background makes it unusable.

AAM - ships, harbors, no girls and unfortunately no pirates.

Noob v-pred - no harbor, strong nsfw bias.

Obsession v-pred - no harbor, strong nsfw bias.

I also ran same prompt with Illu1.1 on Civitai just to check the difference. Reminder - this is default resolution.

Nothing special.

Enough with NLP. Let's test how models perform with some tricky poses in this extreme resolution.

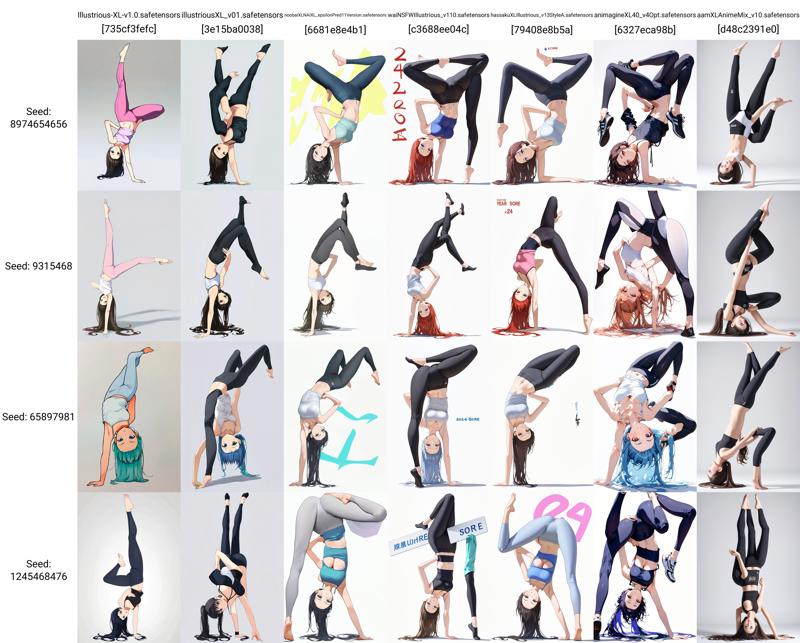

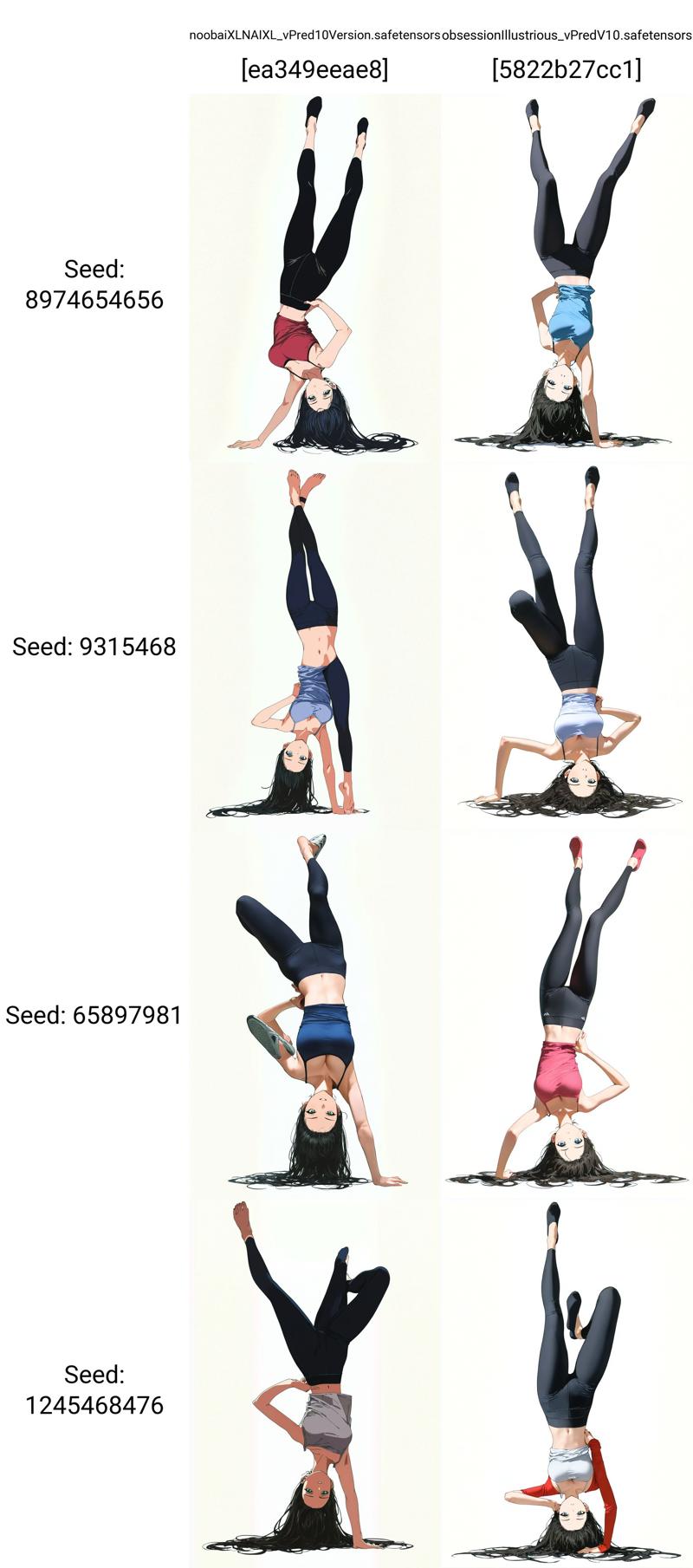

Prompt: 1girl, full body, one arm handstand, yoga pants, camisole, long hair, upside-down, hand on own hip, looking at viewer, legs up, white background,

Expectations: Probably most models will try to place one leg on the ground. Also distortions would be massive even with normal resolution.

https://civitai.com/images/59910011

https://civitai.com/images/59910033

Illu1.0 - all images have either arm or leg seriously distorted.

Illu0.1 - that's a failure.

Noob eps - actually 2 seeds are not that bad. But every one has some sort of distorted body. Still, leaps ahead of illu0.1 this time.

WAI - oh well, that's actually a failure, even with a less distorted torso.

Hassaku - 1st and 3rd are correct.

Ani - that's some peak AI slop.

AAM - SD3.0 vibes. Oh well, probably a skill issue 😉

Noob v-pred - clearly tries to make it a headstand. 2nd and 4th seeds have extra legs, 3rd is spoiled by some unexpected sneakers.

Obsession v-pred - 1st is the only one that is really good here. Others have same issues as Noob v-pred.

Outside of Illu0.1, Animagine and AAM, all models can probably draw it with a better negative.

Now a couple of suggestions from the comments on the previous post.

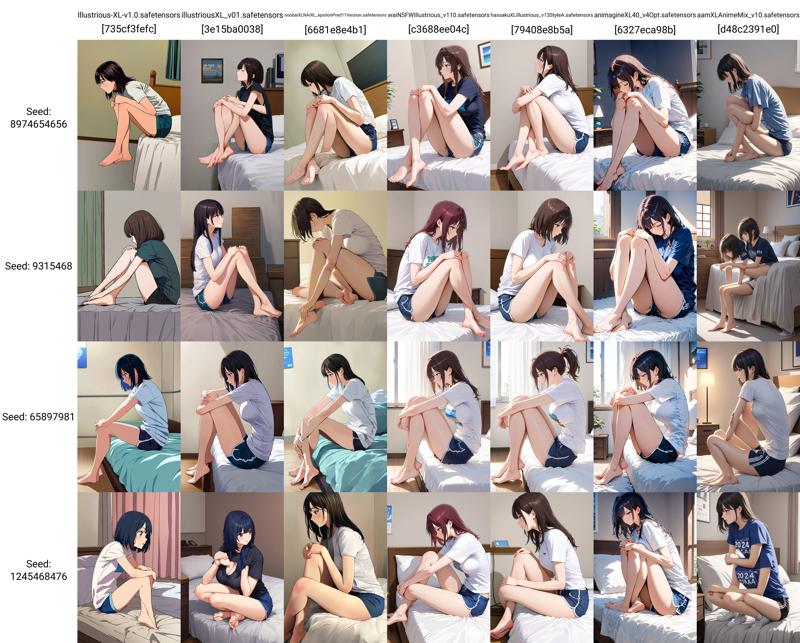

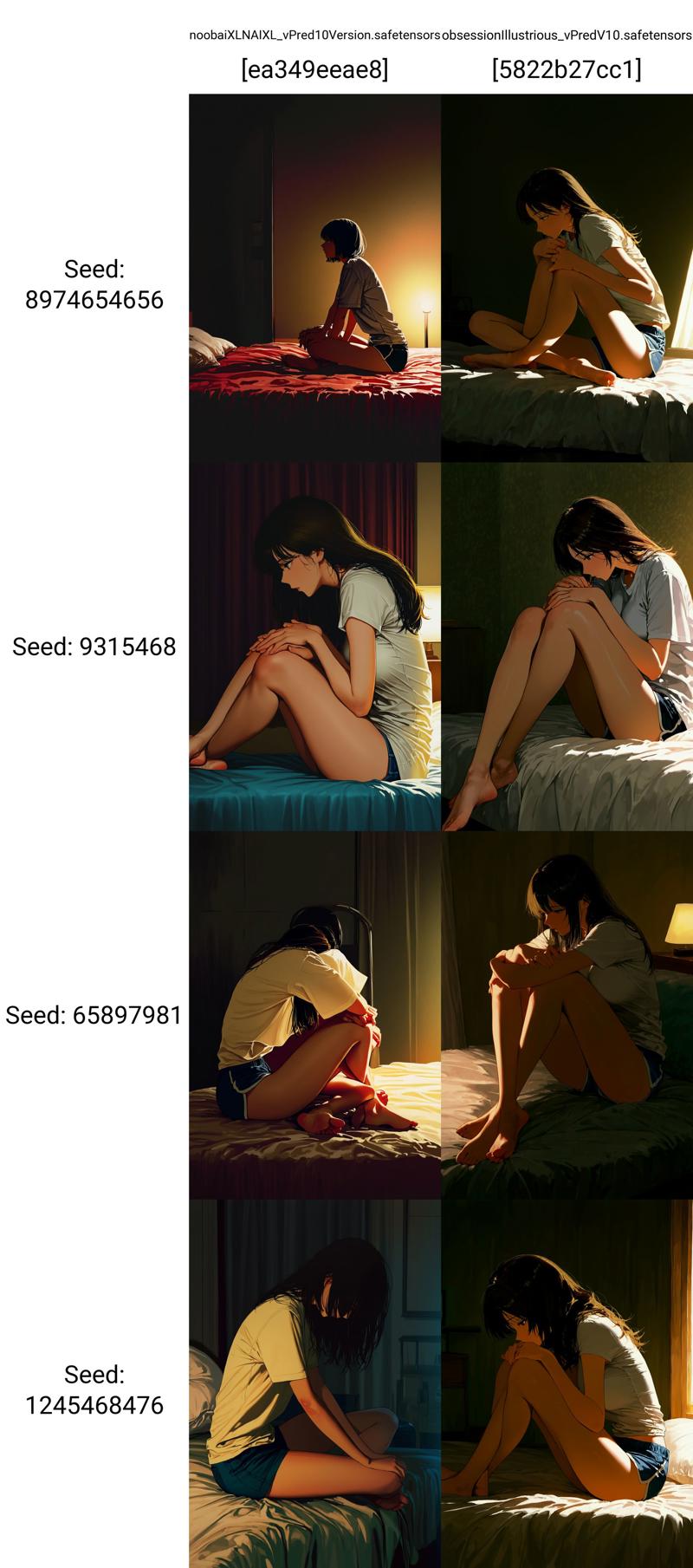

Prompt: 1girl, sitting , on bed, indoors, crossed ankles, hands on own knees, from side, short shorts, t-shirt,

I don't like this prompting. If you take a look at the crossed ankles tag, it's kind of a mess because it is too broad. It includes reclined, yoga pose, on stomach, curled and many others. This tag can be used as a supplementary one, but is not ok for building a pose around.

Expectations: most models should fail to draw something discernible, especially at such a resolution.

https://civitai.com/images/60102312

https://civitai.com/images/60102328

Illu1.0 - only last seed has something close to crossed ankles, but meh otherwise.

Illu0.1 - 2nd seed is kinda close.

Noob eps - 1st seed is on point but has duplicated leg, last seed is on point. But hands, ew.

WAI - 1st and 2nd seeds are good, others have some distortions.

Hassaku - only 2nd seed is on point, but it is really good.

Ani - only 2nd seed is good.

AAM - well, that's a failure.

Noob v-pred - well, that's some really good moody lighting. But slop in hands and feet. The 4th seed is on point even though it went for indian style sitting. Crossed ankles on every seed, just too much sometimes.

Obsession v-pred - 2nd seed is on point. Others have the same issues as Noob v-pred but to a lesser degree.

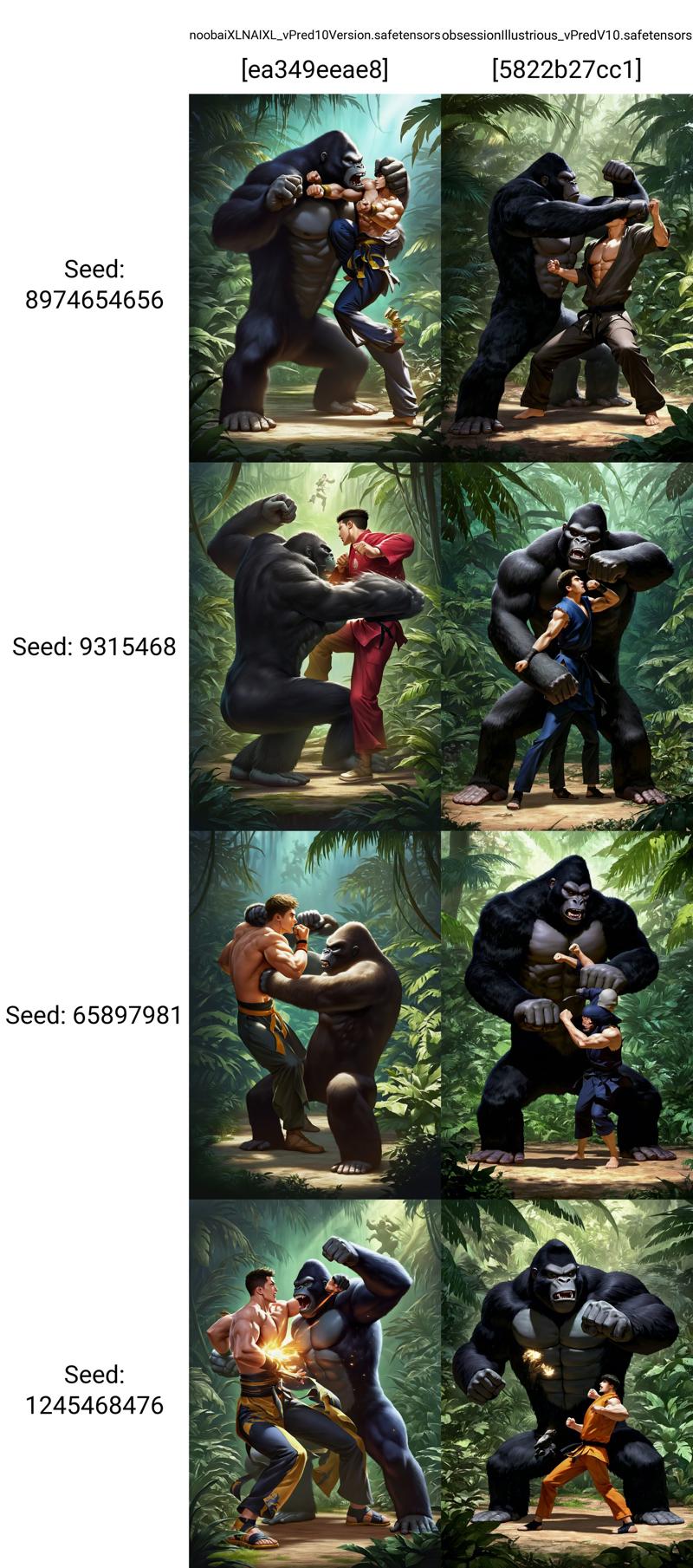

Another request was to make a consistent prompt with 1boy fighting a gorilla.

Prompt: 1boy, human, 1other, gorilla, animal, duel, martial arts, fist, battle, punching, uppercut, standing, jungle, cinematic, full body, eye contact,

This requires some out of the box thinking. I specifically added 1other so the model counts a second, non-human character in the frame, despite the official guidelines on its usage. Does it really work or is it a placebo? On 4 seeds I got more consistent results and less duplication this way. And it gave the gorilla more humanoid features in general. Also had to put in "eye contact" to make them face each other. Btw I did not tweak the negative, which would make the whole thing a lot easier.

https://civitai.com/images/60118718

https://civitai.com/images/60118797

Illu1.0 - failed completely. Up to the point that I think eye contact was tagged incorrectly. In 3 out of 4 seeds the characters are "looking at viewer". Also, the 2nd seed clearly has a female. Speaking of "debiasing"... But on the upside, images do not have major duplications, and distortions are more of a style.

Illu0.1 - same but more slop and duplication.

Noob eps - despite some duplications and general mess 3 out of 4 images I can consider workable (meaning I would probably take a chance at inpainting them).

WAI - some duplications, plus 3rd seed has obvious concept bleeding and female added on top. But generally consistent imagery that can be fixed.

Hassaku - even closer than WAI. Somewhat less slop and duplications. But also not many uppercuts.

Ani - last seed has a female, distortions and duplications.

AAM - could not really handle this.

Noob v-pred - it seems that the e621 dataset did its job here, the gorilla is more of a gorilla. So despite some slop and duplications, I consider this generally more workable.

Obsession v-pred - first image looks perfect, but gorilla actually has 3 legs. But it is still the best image out of them from the point of composition and minor slop.

I also tested some nsfw, but Illu1.0 is marginally worse at complex "compositions". Maybe there are some specific tags for nsfw, and standard tags like nsfw, explicit did not really work. Hope they'll publish some extensive documentation. But still, it is way better than Animagine in this regard.

I will not test artist tags, since I see no point in that. You will get what suits you anyway and 90% of people out there will still slap 6-9 loras on top.

Image post reached limit for number of images, so I'll end it at this point. That means it's time for

Conclusion

Illustrious1.0 is actually capable of generating more consistent imagery at higher resolution than other models. The problem is that most other models did not fail completely either, even when generating complex imagery directly at such a high resolution. Also, I cannot say that the results of Illu1.0 were pristine and perfect. Despite claims of overcoming SDXL limitations, I see the same SDXL with the same issues. Marginally better, yes, but not more. I will still suggest using a slightly bigger resolution than default SDXL for all models and upscaling with mixture of diffusers to get way more detailed results.

It actually has somewhat better NLP capabilities than other models except... a direct SDXL anime finetune that is still A LOT better at it. And it feels like that came at the cost of slightly worse prompt adherence than other models in general. But other models also have some NLP capabilities, meaning that you are not that strictly tied to booru tags and can be a bit more descriptive, inventing tags of your own.

Is it a good base model? Better than Illustrious0.1 for sure. But I find the people behind it somewhat shady in their practices. Also, the lack of proper documentation does not serve it well. There are certain biases to add to the negative in this model. But should you? I'd rather wait for 2.0 and stick to other finetunes of illu0.1 until then. Or even wait for 3.0.

Also, comparing it to Noob v-pred makes me want to try their v-pred version even more. But I suspect we won't get it. Or we'll get it closer to the end of the year, when it will most probably be irrelevant. I consider the lighting that the v-pred model gives to be the biggest actual breakthrough for SDXL, because this model always struggled with that and with dark images in general.

An interesting observation on Noob v-pred I made during this comparison: in some cases the e621 dataset makes the image better, but it generally has a lot more issues with hands and feet than other models. My guess is that this can also be attributed to the same dataset.

Thanks for reading this lengthy article!

Feel free to share your thoughts in comments. At least they are not closed for non subscribers 💗