Objective: Testing the new model WAN2.1

On the 26th of February, ComfyUI released an update to support the Wan2.1 model.

Wan2.1 is a series of 4 video generation models, including:

Text-to-video 14B: Supports both 480P and 720P

Image-to-video 14B 720P: Supports 720P

Image-to-video 14B 480P: Supports 480P

Text-to-video 1.3B: Supports 480P

You can find all the files and the workflow to download at this link: https://blog.comfy.org/p/wan21-video-model-native-support

Both Image-to-video 14B models take more than 32 GB of disk space each.

After downloading all the files, put them in the right folders:

Choose one of the diffusion models → Place in ComfyUI/models/diffusion_models

umt5_xxl_fp8_e4m3fn_scaled.safetensors → Place in ComfyUI/models/text_encoders

clip_vision_h.safetensors → Place in ComfyUI/models/clip_vision

wan_2.1_vae.safetensors → Place in ComfyUI/models/vae
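If you want to double-check the placement before starting ComfyUI, a small script can do it. This is only a sketch: the function name `missing_model_files` is my own, and it assumes the diffusion model you picked is a `.safetensors` file.

```python
from pathlib import Path

# Folder -> file expected there, taken from the list above.
# The diffusion model is handled separately because you choose one of several files.
EXPECTED_FILES = {
    "text_encoders": "umt5_xxl_fp8_e4m3fn_scaled.safetensors",
    "clip_vision": "clip_vision_h.safetensors",
    "vae": "wan_2.1_vae.safetensors",
}


def missing_model_files(comfy_root):
    """Return the expected model paths that do not exist under comfy_root."""
    models_dir = Path(comfy_root) / "models"
    missing = []
    for folder, filename in EXPECTED_FILES.items():
        path = models_dir / folder / filename
        if not path.is_file():
            missing.append(path)
    # Assumption: any .safetensors file in diffusion_models counts as "one model chosen".
    diffusion_dir = models_dir / "diffusion_models"
    if not diffusion_dir.is_dir() or not any(diffusion_dir.glob("*.safetensors")):
        missing.append(diffusion_dir / "<one diffusion model>.safetensors")
    return missing
```

Calling `missing_model_files("ComfyUI")` from the folder that contains your ComfyUI install returns an empty list when everything is in place.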

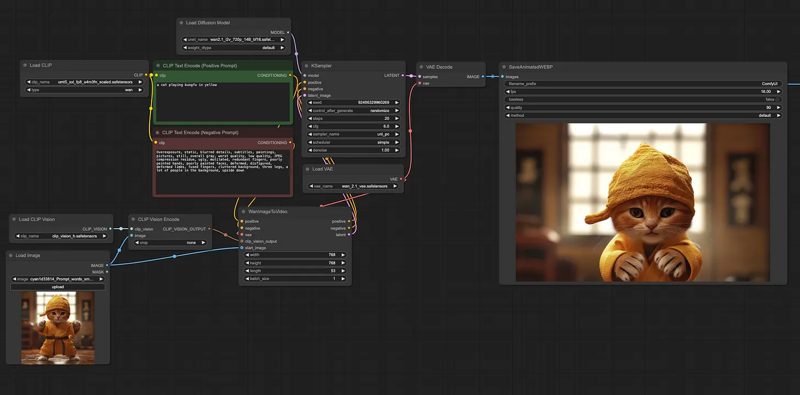

I tested the workflow from the site with an image.

With my Nvidia 4080 it took about 5 minutes to generate a 2-second video.

The quality is great, and hands in particular work well now.

Faces are not perfect, so at the end of the process I use a workflow to upscale the video 2x and improve the face.

Here are a couple of videos posted on Civitai: https://civitai.com/posts/13600566

I decided to use this procedure to make a YouTube Short from a folder of images:

I am happy with the quality, but it really takes too long with my 16 GB of VRAM.

So, searching on Civitai, I found this:

https://civitai.com/models/1301129/wan-video-fastest-native-gguf-workflow-i2vandt2v

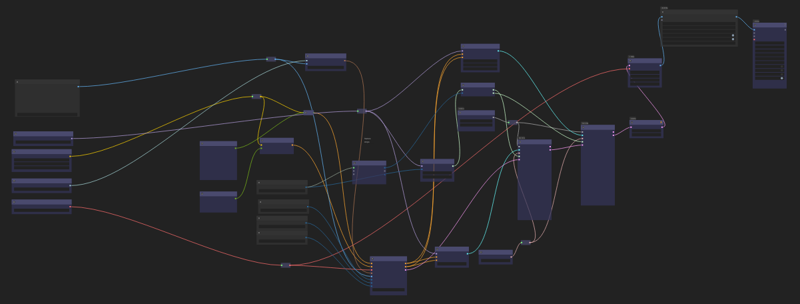

Many thanks to the author, https://civitai.com/user/Flow2 : the workflow worked perfectly.

The workflow was set to 368x656 with 49 frames. It took less than 2 minutes to generate a video.

The only problem was generating an API workflow: exporting it sometimes gave an error.

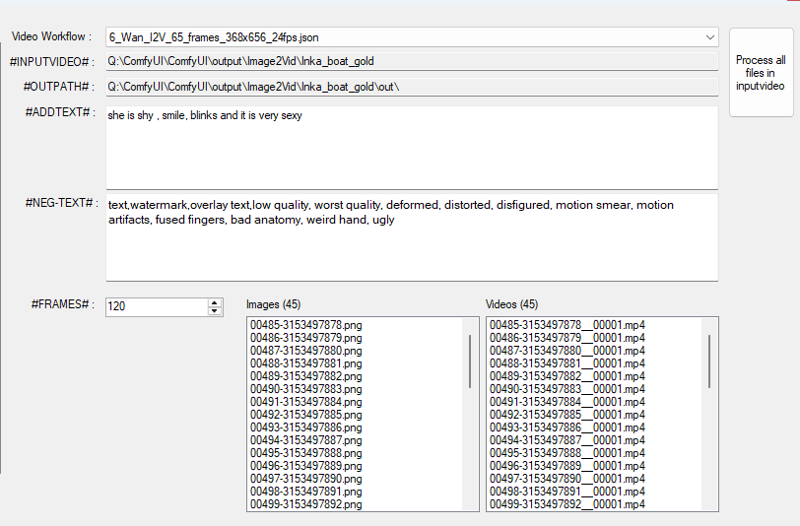

So I decided to change it a little (my version is attached: Wan_I2V_65frames_368x656_24fps.json).

My idea was to create a workflow containing some special words that I can replace before calling the API.

I have a "personal tool" (I will release it in the future on my itch profile https://misterm.itch.io/ but for now it is still a work in progress).

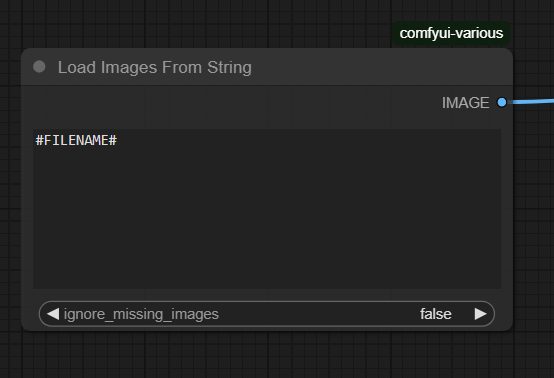

I hate the standard "Load Image" node because you need to add your image to the input folder.

Instead I use "Load Images from String", where you can write the filename with its full path.

In my case I wrote "#FILENAME#" as the value, so that I can replace it later.
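The replacement itself can be done in a few lines. The sketch below is not my tool, just one way to do it: it loads the workflow as JSON first and replaces the placeholder inside the string values, so a Windows path with backslashes does not break the JSON. The function name `fill_placeholders`, the node id, the class name and the input name are all made up for the example.

```python
import json


def fill_placeholders(node, values):
    """Return a copy of a parsed workflow with placeholder text replaced in every string."""
    if isinstance(node, dict):
        return {key: fill_placeholders(val, values) for key, val in node.items()}
    if isinstance(node, list):
        return [fill_placeholders(item, values) for item in node]
    if isinstance(node, str):
        for placeholder, replacement in values.items():
            node = node.replace(placeholder, replacement)
    return node


# Hypothetical fragment of a workflow: the node id, class_type and input name
# are illustrative and not copied from the real file.
workflow = {
    "12": {"class_type": "ExampleLoadImagesFromString", "inputs": {"images": "#FILENAME#"}}
}

filled = fill_placeholders(workflow, {"#FILENAME#": r"C:\images\photo_01.png"})
print(json.dumps(filled, indent=2))
```

The original `workflow` is left untouched, so the same template can be filled again for the next image.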

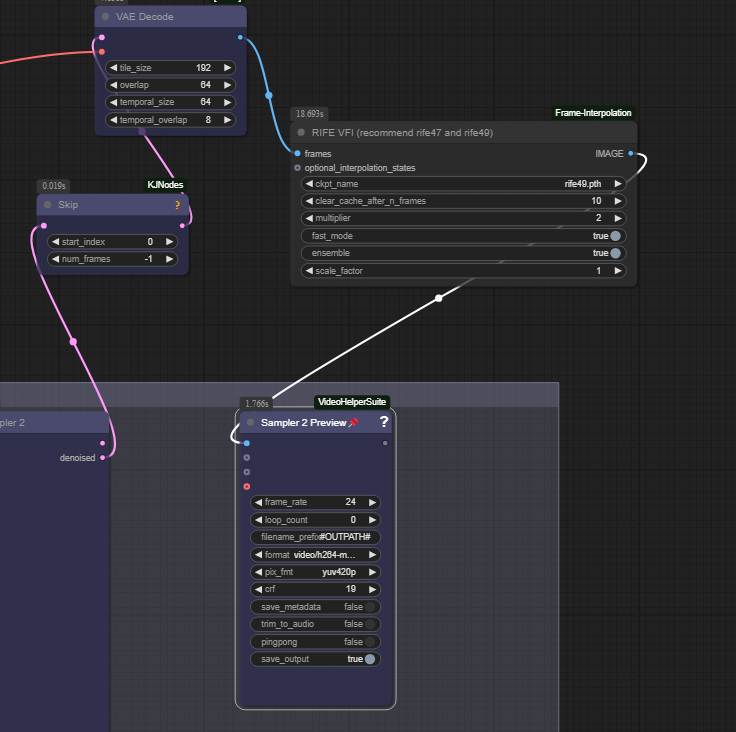

At the end of the workflow I added a RIFE VFI node to double the number of frames, and I use Video Combine to save the result at 24 fps in an #OUTPUTPATH# (which I also replace through the API).

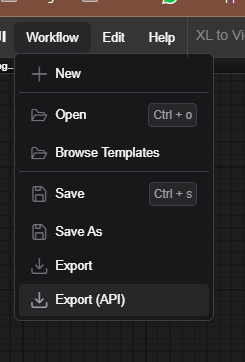

I exported my workflow in API format.

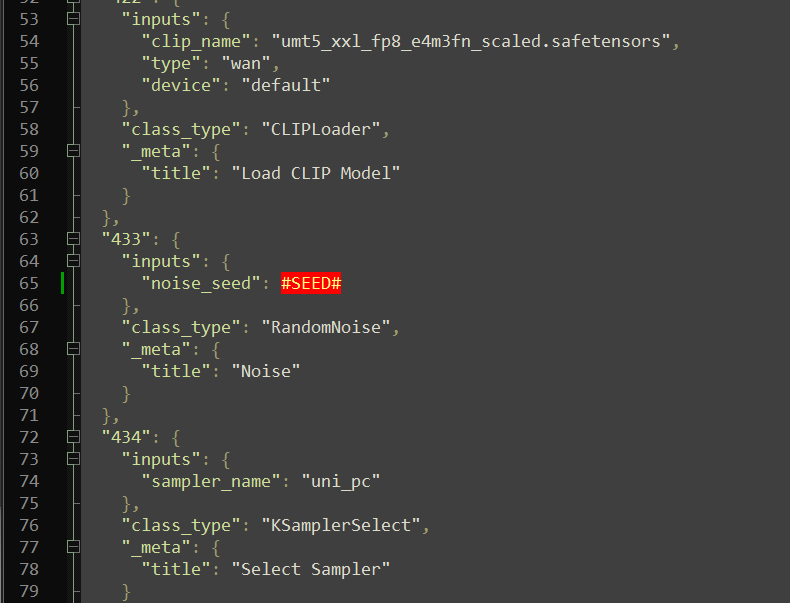

To avoid always generating with the same seed, I changed the seed value to #SEED#, which my software replaces on each run.
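As a rough idea of how the seed replacement can work, here is a minimal sketch. It assumes the exported file maps node ids to a dict with an `inputs` entry, and that the sampler wants the seed as an integer, so the placeholder string is swapped for a number and not for text. The name `with_random_seed` and the 32-bit range are my choices for the example.

```python
import random


def with_random_seed(workflow, placeholder="#SEED#", rng=random):
    """Replace every input equal to the placeholder with one fresh integer seed.

    The workflow is modified in place and the chosen seed is returned,
    which is handy if you want to log it next to the output file.
    """
    seed = rng.randrange(2**32)  # assumption: a 32-bit seed is accepted by the sampler
    for node in workflow.values():
        inputs = node.get("inputs", {})
        for name, value in inputs.items():
            if value == placeholder:
                inputs[name] = seed
    return seed


# Hypothetical sampler node, only for illustration.
workflow = {"3": {"class_type": "KSampler", "inputs": {"seed": "#SEED#", "steps": 20}}}
used_seed = with_random_seed(workflow)
print(used_seed, workflow["3"]["inputs"]["seed"])
```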

You can run the images manually one by one, but my simple tool processes a whole folder through the API from a list. I add POSITIVE and NEGATIVE prompts, then I run it to process all my files.
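My tool is not released yet, but the batch idea can be sketched with the Python standard library only. This is not my tool's code: it assumes ComfyUI is running locally on its default address and accepts a workflow posted to `/prompt` as `{"prompt": ...}`. The names `list_images`, `queue_prompt` and `process_folder` are made up for the example, and `prepare` is whatever function you use to fill the placeholders for one image.

```python
import json
import urllib.request
from pathlib import Path

COMFY_URL = "http://127.0.0.1:8188/prompt"  # assumption: default local ComfyUI address
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def list_images(folder):
    """Return the image files in a folder, sorted by name."""
    return sorted(
        p for p in Path(folder).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def queue_prompt(workflow, url=COMFY_URL):
    """POST one API-format workflow to ComfyUI and return its JSON reply."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())


def process_folder(folder, prepare, send=queue_prompt):
    """Queue one job per image; prepare(path) must return a filled-in workflow."""
    return [send(prepare(image)) for image in list_images(folder)]
```

Keeping `send` as a parameter means you can pass a dummy function to test the folder logic without ComfyUI running.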

Technically it works quite fast: it takes an average of 180 s (3 minutes) to generate a 5-second video at 368x656 and 24 fps.

The output is OK but still not perfect, especially the faces.

When the process finishes, I check the videos and remove the ugly ones.
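To see where the 5 seconds come from, here is the arithmetic as a tiny script. It takes the 65 frames from my workflow's filename and assumes the RIFE step exactly doubles the frame count (the real node may produce a frame more or less).

```python
def clip_seconds(generated_frames, interpolation_factor, fps):
    """Length of the saved clip, assuming interpolation multiplies the frame count."""
    return generated_frames * interpolation_factor / fps


seconds = clip_seconds(65, 2, 24)
print(round(seconds, 2))        # 5.42 seconds of video
print(round(180 / seconds, 1))  # 33.2 seconds of generation per second of video
print(300 / 2)                  # 150.0 for the first test (5 minutes for 2 seconds)
```

So the second workflow is roughly 4 to 5 times faster per second of video than my first test, although the two runs do not necessarily use the same resolution.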

Then I do a second run on all the outputs with a workflow that upscales the video and changes the face:

Check out this article about it: https://civitai.com/articles/8291/comfyui-face-swap-using-reactor

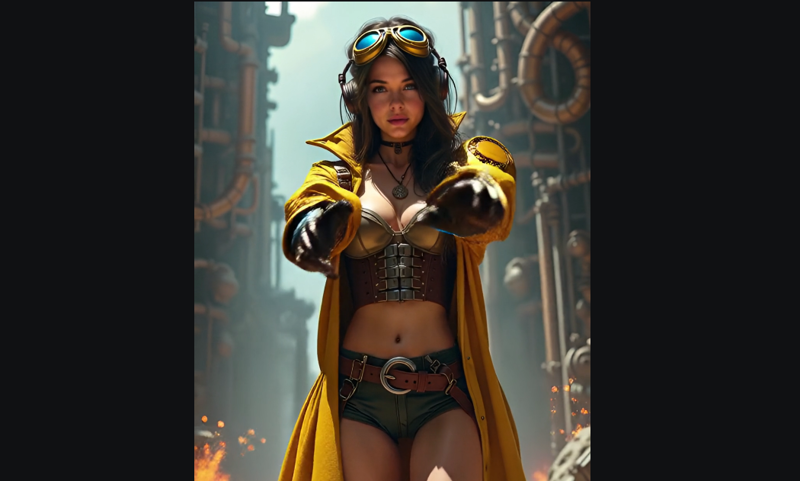

Here is an example: upscaled by 2, with the face changed to keep the same model in all the videos.

Check out my toons channel for examples using this upscale technique (in the video below I used LTX instead of WAN2.1). LTX is great but has some issues with hands and often puts annoying text and watermarks on the screen.

Conclusion:

I am really happy with the results of WAN2.1 using the GGUF checkpoint: the quality of hands and details is much better than with LTX. LTX is much faster and makes longer videos, but you have to check them all: often more than 30% of the videos produced are bad, and in others you have to cut out some parts.