Epoch 4 complete

The SD35-M epoch 4 training is complete, and with that my break from big trains begins. It's time to focus solely on building training tools and setting up smaller trains.

EPOCH 1 - Did very little; showed very little change.

EPOCH 2 - Broke SD35 and turned Flux into an object spawner.

EPOCH 3 - Restored much of the models' functionality, and training normalized.

EPOCH 4 - Untested.

A big reason I'm shunting a single tokenizer around like this is that ComfyUI requires a tokenizer attached to the CLIP pipeline. It's an annoying conversion quirk: different tokenizer objects are created by something like 30 different classes. So in this case, I'm treating the tokenizer as a single shared object for convenience.

I have a whole array of convenience utility functions built specifically for a lot of this, so I'm going to consolidate all of them into proper utilities.
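A minimal sketch of the "one shared tokenizer" idea, assuming a per-path cache is enough. `Tokenizer` and `get_tokenizer` are hypothetical names for illustration, not ComfyUI classes; the point is only that every caller receives the same object instead of each wrapper class building its own.

```python
from functools import lru_cache


class Tokenizer:
    """Hypothetical stand-in for a real tokenizer object (e.g. one built from tokenizer.json)."""

    def __init__(self, path: str):
        self.path = path


@lru_cache(maxsize=None)
def get_tokenizer(path: str) -> Tokenizer:
    # Built once per path; every later call with the same path returns the same object.
    return Tokenizer(path)


a = get_tokenizer("t5xxl-unchained/tokenizer.json")
b = get_tokenizer("t5xxl-unchained/tokenizer.json")
print(a is b)  # True: one shared object instead of one per wrapper class
```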

Epoch 3 Lora release

There is a lora now. Just load it up, and ComfyUI will recognize it. Make sure you've set up your ComfyUI with t5xxl-unchained compatibility; otherwise the results will be unpredictable.

Run the clip pipeline through your lora and you're good. The lora doesn't contain UNET model weights, but ComfyUI will probably complain if you don't pass the model through it anyway.

Run Flux inference using RES4LYF. The bongsampler is a good place to start: nice and simple, yet highly effective.

Disable Flux1D's guidance and run with RES4LYF's native system instead. It's far superior to the built-in Flux guidance in many ways, but I'm unfamiliar with how it works, so I'll need to investigate further.

20 steps

1 cfg

res_2s_sde is good

res_2m is faster

dei is good

Everything is good.

Unchained won't simply unlock new behavior on its own, though. You'll need to train a lora with it as your T5. You likely won't want to train T5xxl-unchained itself further; instead, train the lora using T5xxl-unchained as your T5 text encoder.

In any case, the clip-suite is building toward this. If you're interested in following its development, I have an article about developing the clip-suite.

https://civitai.com/articles/13548/preparing-the-comfyui-clip-suite

The showcase image there was edited using NovelAI's V4 to add the text; here's the original.

Still, that's actually real SD3.5.

SD35-M might not be bad. It generates fast and it still shows some pretty powerful traits.

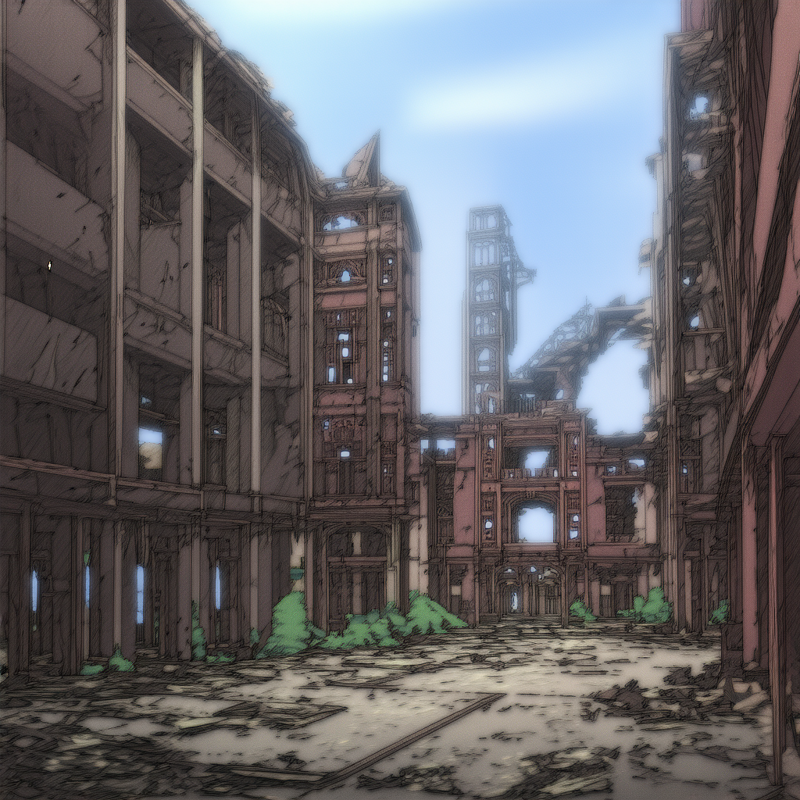

This one below was generated within SD35 unchained - text and all.

I don't even have much creepy shit in the data; the coloration does all the work. This is something SDXL failed at, primarily due to epsilon noise. NoobXL V-PRED seemed to be the only one capable of it, and even my own models failed.
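For readers unfamiliar with the epsilon vs. v-prediction distinction, here is a minimal scalar sketch of the two training targets, assuming the standard variance-preserving convention x_t = alpha * x0 + sigma * eps with alpha^2 + sigma^2 = 1 and v = alpha * eps - sigma * x0. It only shows what each objective asks the network to predict. It does not model the zero-terminal-SNR schedules that are usually credited for v-pred's deeper contrast, and it is not any specific trainer's code.

```python
import math


def training_targets(x0: float, eps: float, alpha_t: float, sigma_t: float):
    """Return (noisy sample, epsilon target, v target) for one scalar element."""
    x_t = alpha_t * x0 + sigma_t * eps
    v = alpha_t * eps - sigma_t * x0
    return x_t, eps, v


# At a very noisy timestep (alpha near 0), an epsilon prediction says little
# about x0, because recovering x0 means dividing by the tiny alpha. The v target
# is dominated by -x0, so the network is pushed to predict the signal itself.
alpha = 0.05
sigma = math.sqrt(1 - alpha ** 2)
x_t, eps_target, v_target = training_targets(x0=0.8, eps=-0.3, alpha_t=alpha, sigma_t=sigma)

# With alpha**2 + sigma**2 == 1, x0 is recovered from a v prediction as:
x0_from_v = alpha * x_t - sigma * v_target
print(round(x0_from_v, 6))  # 0.8
```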

Flux was capable of this, somewhat, but not to this extent. This is another level, due to the Unchained T5 and SD35's dual blocks both being tuned to the unchained training data.

Deep, dark, contrasting colors... Damn. Looks good.

There's some sort of bf16 conversion error with Kohya

Once the T5 hits a certain depth in bf16, it shits itself, but since I converted it to fp16 it's had no issues and has run smoothly.
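This doesn't diagnose the Kohya error itself; it's a small stdlib-only sketch of the precision/range trade-off between the two formats. bf16 keeps float32's exponent range but only 7 stored mantissa bits, while fp16 keeps 10 mantissa bits but overflows past 65504. The bf16 rounding below is a hand-rolled round-to-nearest-even on the float32 bit pattern, so NaN and overflow edge cases are ignored.

```python
import struct


def to_bf16(x: float) -> float:
    """Round to bfloat16 (nearest, ties to even) and return it as a Python float.

    NaN/overflow edge cases are ignored in this sketch.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    bits += 0x7FFF + ((bits >> 16) & 1)  # round to nearest, ties to even
    bits &= 0xFFFF0000                    # drop the low 16 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def to_fp16(x: float) -> float:
    """Round to IEEE half precision (5 exponent bits, 10 mantissa bits)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


small_step = 1 + 2 ** -9
print(to_fp16(small_step))  # 1.001953125 -> fp16 keeps the small update
print(to_bf16(small_step))  # 1.0         -> bf16 rounds it away

print(to_bf16(70000.0))     # 70144.0     -> bf16 has the range, but coarse steps
try:
    to_fp16(70000.0)
except OverflowError:
    print("fp16 overflow")  # fp16 tops out at 65504
```

So fp16 isn't universally "safer": it trades range for precision, which is worth remembering if activations in a deep T5 ever grow large.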

a liminal landscape full of complex and difficult to understand deep hallways, five story shattered and destroyed building interior, massive building,

We are baking liminal clean into the T5 now.

5people, 5girls, lineup, real, realistic,

grid_c1 person black hair business suit woman,

grid_c2 person red hair business suit woman,

grid_c3 person blonde hair business suit woman,

grid_c4 person pink hair business suit woman,

grid_c5 person orange hair business suit woman,

SD3 looks kinda shit, but it's getting there. The T5 has most definitely manifested new behavior.

Made it to about epoch 1.5 and then it shit itself.

sd35-sim-v1-t5-refit-v2-step00016250.safetensors

This is where I'm continuing from. Exact parity is impossible now, so I'm switching seeds. Let's pray it doesn't shit itself again in the next day or two.

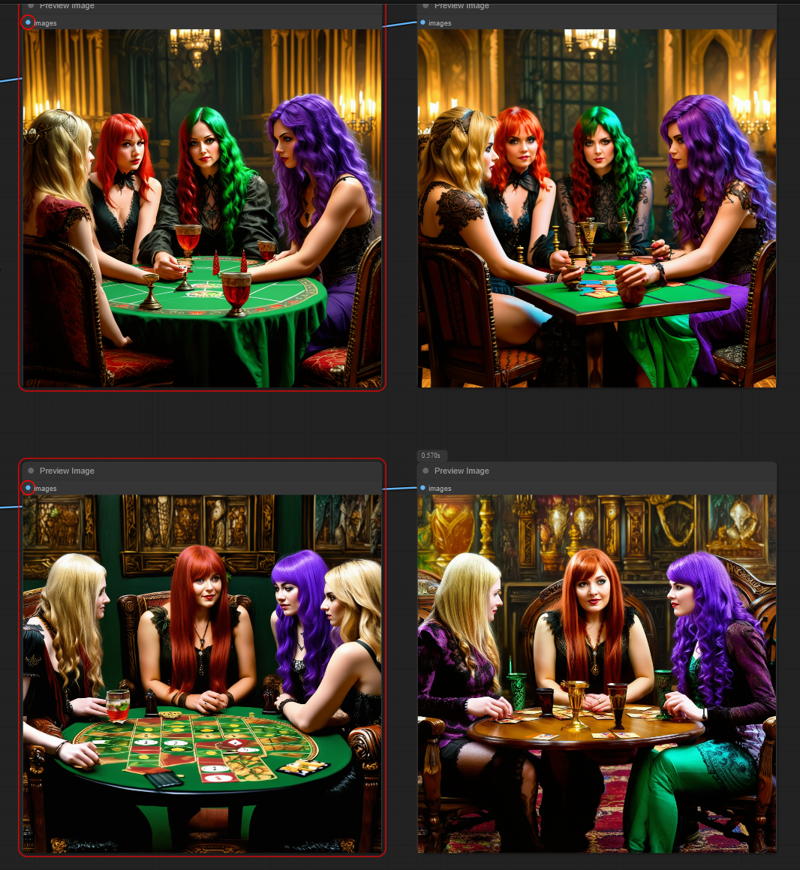

Houston, we have Unchained.

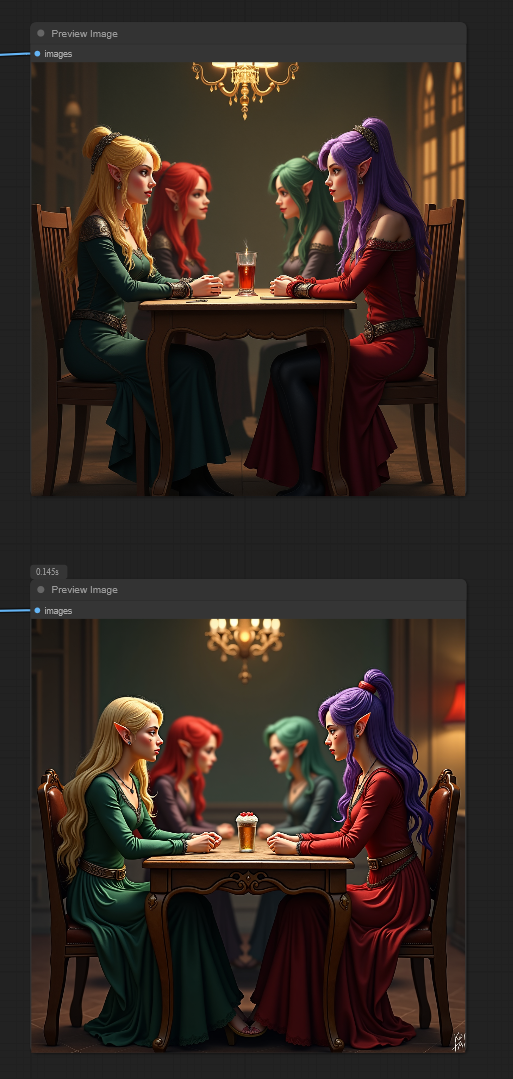

Left: Unchained fp16. Right: Unchained step 5750 fp16.

women sitting and playing dungeons and dragons, final fantasy,

(4people, 4girls,:1.55)

gothic,

grid_c1 person blonde hair,

grid_c2 person red hair,

grid_c3 person green hair,

grid_c4 person purple hair,

grid_c5 table.

grid_e5 chair,

As per usual, SD3.5 M looks like shit; but...

There are changes. Very obvious changes.

HOWEVER, in the Flux realm we see something powerful manifesting. The true power is brewing, the goal.

Shuttle Schnell;

Flux fp8 1D unchained base;

Flux fp8 1D unchained 5750;

This is clearly having a massive impact. I haven't touched Flux1D with this training; that's just base 1D fp8. The outcomes show huge differences in solidity.

Still censored; not strong enough yet. It'll likely need at least 10 epochs even with this data, which means 10 days of training and over $1000.

Pray it works in the next few days, because if it doesn't, it's being halted and moved over to my local devices for slow cooking. Beatrix isn't going to like that.

This is why I wanted to shock it into shape instead of a slow train. The math didn't line up though.

https://huggingface.co/AbstractPhil/SD35-SIM-V1/tree/main/REFIT-V1

samples = steps x 12 x 5, i.e. a batch size of 12 on each of 5 L40s per optimizer update, aka a STEP!

Aiming for... around... oh... 1 epoch should be plenty to get a good starting point.

So our target step count is 12,160.6; aka 915,000 samples learned.
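A quick arithmetic check of the step formula above, assuming no gradient accumulation (the helper names are illustrative). Taken literally, the two quoted figures don't quite agree: 915,000 samples works out to 15,250 steps, while 12,160.6 steps covers roughly 729,636 samples, so one of them likely reflects an adjustment not spelled out here.

```python
BATCH_PER_GPU = 12
NUM_GPUS = 5
SAMPLES_PER_STEP = BATCH_PER_GPU * NUM_GPUS  # 60 samples per optimizer step


def steps_for(samples: int) -> float:
    return samples / SAMPLES_PER_STEP


def samples_for(steps: float) -> float:
    return steps * SAMPLES_PER_STEP


print(steps_for(915_000))            # 15250.0
print(round(samples_for(12_160.6)))  # 729636
```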

The amount of learning SD3.5 will do will create an entirely new core model. This is 100% going to work, and SD3 WILL survive this train.

Text still works, kind of.

1250

1000

Weekend Plans;

Starting on something I'm calling the clip test suite.

Clip loader that can load any type of clip at common sizes;

configuration pipeline in and out

fp8, fp16, fp32, fp64, bf16, q4, q8, and so on. Everything needed for this is streamlined and integrated in torch or similar systems.
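Loading "at common sizes" mostly comes down to bytes per parameter. A minimal sketch of a weight-only footprint estimate, assuming the q8/q4 entries ignore the per-block scale overhead real quantization formats add; the 4.76B figure is an approximate T5-XXL encoder parameter count used only for illustration.

```python
# Approximate storage cost per parameter. q8/q4 ignore per-block scale/offset overhead.
BYTES_PER_PARAM = {
    "fp64": 8,
    "fp32": 4,
    "fp16": 2,
    "bf16": 2,
    "fp8": 1,
    "q8": 1,
    "q4": 0.5,
}


def estimate_gib(num_params: int, precision: str) -> float:
    """Rough weight-only footprint in GiB (no activations, no optimizer state)."""
    return num_params * BYTES_PER_PARAM[precision] / 2 ** 30


T5XXL_ENCODER_PARAMS = 4_760_000_000  # approximate, for illustration
for p in ("fp32", "fp16", "fp8", "q4"):
    print(p, round(estimate_gib(T5XXL_ENCODER_PARAMS, p), 2))
```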

huggingface direct link for grabbing certain things.

OpenClip loader

Presents a list of OpenClip models capable of functioning in a similar fashion as any of the ones you're used to.

Will connect directly to the Clip Standardizer to convert into a usable form if possible. Many models are capable of this and many are not. That's for you to decide, not for me.

Configuration nodes

configuration file location

manual configuration editing after loading

configuration saving

Full Clip pipeline

A pipeline dedicated to housing all of the clip models you want to move from point A to B.

Has no limitations on what goes in or what comes out, it's on the user to decide.

Keep snapping them to it. It will open passages on both ends the more you snap; as long as they are considered clip-suite models or pipelines.

Clip splitter

splits off certain clips if desired with presets for models like

sd3, sd2, sd1.5, flux, and so on.

ensures pipelines aren't sent with excess clips

Clip joiner

attaches clips loaded individually or manually at runtime

fully configured clips and LLMs can be applied to this joiner, and they join the pipeline with the others
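The splitter and joiner can be sketched together, assuming the pipeline is a plain {name: encoder} mapping. The preset contents reflect how these families are commonly packaged (SD1.5 uses CLIP-L, SD2 an OpenCLIP ViT-H text encoder, SDXL CLIP-L plus CLIP-G, SD3 adds T5-XXL, Flux uses CLIP-L plus T5-XXL); the key names and both functions are hypothetical, not ComfyUI identifiers.

```python
# Which text encoders each model family expects (hypothetical key names).
PRESETS = {
    "sd1.5": {"clip_l"},
    "sd2": {"clip_h"},  # OpenCLIP ViT-H/14 text encoder
    "sdxl": {"clip_l", "clip_g"},
    "sd3": {"clip_l", "clip_g", "t5xxl"},
    "flux": {"clip_l", "t5xxl"},
}


def split_clips(pipeline: dict, preset: str):
    """Split a {name: encoder} pipeline into (kept, excess) for one model family."""
    wanted = PRESETS[preset]
    kept = {k: v for k, v in pipeline.items() if k in wanted}
    excess = {k: v for k, v in pipeline.items() if k not in wanted}
    missing = wanted - set(kept)
    if missing:
        raise KeyError(f"{preset} needs {sorted(missing)}")
    return kept, excess


def join_clips(pipeline: dict, **extra):
    """Attach individually loaded encoders; later entries replace earlier ones."""
    return {**pipeline, **extra}


pipe = join_clips({"clip_l": "L", "clip_g": "G"}, t5xxl="T5-unchained")
kept, excess = split_clips(pipe, "flux")
print(sorted(kept), sorted(excess))  # ['clip_l', 't5xxl'] ['clip_g']
```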

Clip Standardizer

converts the clips from the suite form to the standard comfyui form for utilization.

will have multiple presets or simply join together or split off clips as necessary.

will create a standard clip output for utilization

Clip vision adapter

attaches a chosen version of ViT to a model arbitrarily. It will not care what you try to use; it will simply attach with the presented FP and BF counts.

An automatic rescale option, which will allow clips to conform to the vision model with automatic adjustment.

Will produce the standard clip_vision output that conforms to standardized vision systems such as IPAdapter.

Clip interrogator

Talk to your clips and figure out if the picture you see is actually what you think it will be, or if the flavors say different.

Flavor file location selector, git target, or hf hub targets available here.

Takes in a string alongside the prompt to allow for templates.

Instruct node available for LLMs, which provides a string formatted for easy instruct use with common models.

String out for utilization with standard prompt mechanisms.
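A minimal sketch of the interrogation step in the style of CLIP-interrogator tools: rank flavor phrases by cosine similarity between the image embedding and each flavor's text embedding, then fill a template. The 3-d vectors are toy stand-ins for real CLIP embeddings, and `interrogate` is a hypothetical name, not the suite's API.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def interrogate(image_emb, flavor_embs: dict, prompt: str, template: str, top_k: int = 2) -> str:
    """Rank flavors by similarity to the image embedding and fill the template."""
    ranked = sorted(flavor_embs, key=lambda f: cosine(image_emb, flavor_embs[f]), reverse=True)
    return template.format(prompt=prompt, flavors=", ".join(ranked[:top_k]))


# Toy vectors standing in for real CLIP image/text embeddings.
flavors = {
    "liminal": [0.9, 0.1, 0.0],
    "gothic": [0.1, 0.9, 0.1],
    "neon": [0.0, 0.2, 0.9],
}
out = interrogate([1.0, 0.3, 0.0], flavors, "empty hallway", "{prompt}, {flavors}")
print(out)  # empty hallway, liminal, gothic
```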

Layer strength sliders

Provides direct strength control of your model's layers on a slider.

Upon connecting, the system will detect which layers are connected and assign a toggle and a slider to each of them.

Slider is a conversion scalar that multiplies the layer before passing it through to the next point.
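A minimal sketch of the slider behavior, assuming the scalar is applied to each layer's output before it moves on (the text could also mean scaling weights). Plain functions stand in for layers here; in PyTorch the same idea would usually be a forward hook that returns `output * strength`. All names are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Layer = Tuple[str, Callable[[float], float]]


def run_with_strengths(layers: List[Layer], x: float, strengths: Dict[str, float]) -> float:
    """Run layers in order, scaling each layer's output by its slider value (default 1.0)."""
    for name, fn in layers:
        x = fn(x) * strengths.get(name, 1.0)
    return x


layers = [("block_0", lambda v: v + 1.0), ("block_1", lambda v: v * 2.0)]
print(run_with_strengths(layers, 1.0, {}))                # 4.0
print(run_with_strengths(layers, 1.0, {"block_0": 0.5}))  # 2.0
```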

Dedicated LLM pipeline

Specifically for LLMs; will present common configurations, as well as access to the configuration node for utilization.

Will be capable of converting to standard clip for inference in the future; if your AI model requires this utility.

The output of this is entirely based on a different kind of pipe that can be merged into the standard pipe at any given time if desired.

Usage Requirements;

Download the T5xxl-Unchained fp16

Manually swap the tokenizer.json in ComfyUI or Forge.

Manually swap the config.json in ComfyUI or Forge.

Replace the CLIPSave class in "ComfyUI\comfy_extras\nodes_model_merging.py" with this code:

```python
# Drop-in replacement for CLIPSave in ComfyUI\comfy_extras\nodes_model_merging.py.
# Relies on the imports already at the top of that file: json, os, folder_paths,
# comfy.model_management, comfy.utils, and args (from comfy.cli_args).
class CLIPSave:
    def __init__(self):
        self.output_dir = folder_paths.get_output_directory()

    @classmethod
    def INPUT_TYPES(s):
        return {"required": {"clip": ("CLIP",),
                             "filename_prefix": ("STRING", {"default": "clip/ComfyUI"}),},
                "hidden": {"prompt": "PROMPT", "extra_pnginfo": "EXTRA_PNGINFO"},}

    RETURN_TYPES = ()
    FUNCTION = "save"
    OUTPUT_NODE = True

    CATEGORY = "advanced/model_merging"

    def save(self, clip, filename_prefix, prompt=None, extra_pnginfo=None):
        prompt_info = ""
        if prompt is not None:
            prompt_info = json.dumps(prompt)

        metadata = {}
        if not args.disable_metadata:
            metadata["format"] = "pt"
            metadata["prompt"] = prompt_info
            if extra_pnginfo is not None:
                for x in extra_pnginfo:
                    metadata[x] = json.dumps(extra_pnginfo[x])

        comfy.model_management.load_models_gpu([clip.load_model()], force_patch_weights=True)
        clip_sd = clip.get_sd()

        # Pull out CLIP_L, then CLIP_G, then whatever remains (the T5), one file each.
        for prefix in ["clip_l.", "clip_g.", ""]:
            k = list(filter(lambda a: a.startswith(prefix), clip_sd.keys()))
            current_clip_sd = {}
            for x in k:
                current_clip_sd[x] = clip_sd.pop(x)
            if len(current_clip_sd) == 0:
                continue

            p = prefix[:-1]
            replace_prefix = {}
            filename_prefix_ = filename_prefix
            if len(p) > 0:
                filename_prefix_ = "{}_{}".format(filename_prefix_, p)
                replace_prefix[prefix] = ""

            full_output_folder, filename, counter, subfolder, filename_prefix_ = folder_paths.get_save_image_path(filename_prefix_, self.output_dir)
            output_checkpoint = f"{filename}_{counter:05}_.safetensors"
            output_checkpoint = os.path.join(full_output_folder, output_checkpoint)

            if prefix == "":
                # Assume the remainder is the T5, so rename its key prefixes properly.
                prefix_assign = "t5xxl.transformer."
                swap_prefix = ""
                prepared_clip_sd = {}
                for key in current_clip_sd.keys():
                    if key.startswith(prefix_assign):
                        prepared_clip_sd[key.replace(prefix_assign, swap_prefix)] = current_clip_sd[key]
                    else:
                        prepared_clip_sd[key] = current_clip_sd[key]
                del current_clip_sd
                current_clip_sd = prepared_clip_sd
            else:
                # Other clip models are saved normally.
                current_clip_sd = comfy.utils.state_dict_prefix_replace(current_clip_sd, replace_prefix)

            comfy.utils.save_torch_file(current_clip_sd, output_checkpoint, metadata=metadata)

        return {}
```

This saves the T5xxl with the correct key pattern, completely separated from the CLIP_L and CLIP_G data, which is important.
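The key renaming in the T5 branch of that class can be checked in isolation. A minimal sketch on a plain dict, with illustrative key names: keys starting with "t5xxl.transformer." lose that prefix, everything else passes through untouched. It slices off only the leading prefix, which matches the `str.replace` version as long as the prefix doesn't also appear mid-key.

```python
def strip_prefix(state_dict: dict, prefix: str = "t5xxl.transformer.") -> dict:
    """Drop `prefix` from keys that start with it; keep all other keys as-is."""
    return {
        (key[len(prefix):] if key.startswith(prefix) else key): value
        for key, value in state_dict.items()
    }


# Illustrative keys only.
sd = {
    "t5xxl.transformer.encoder.block.0.layer.0.SelfAttention.q.weight": "q",
    "t5xxl.transformer.shared.weight": "emb",
    "other.key": "untouched",
}
print(list(strip_prefix(sd)))
# ['encoder.block.0.layer.0.SelfAttention.q.weight', 'shared.weight', 'other.key']
```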

Load CLIP_L, CLIP_G, and the T5xxl-Unchained FP16 in SD3 or Flux format.

For Flux format, load only CLIP_L and the T5xxl-Unchained FP16.

Link it to ComfyUI's save clip node.

Generate an image to confirm the system works correctly: images should generate, and no errors should appear in the console.

Save your clips after passing them through the lora. This will save CLIP_L, CLIP_G, and the T5; the T5 file will be essentially unlabeled, but larger.

Refresh ComfyUI, and load up your brand new T5xxl.

Yeah this will be streamlined, but for those who are closely following, this is a simple starting point.

I'll prepare a diffusers version soon, which will allow for proper use, but for now just jam that shit straight into the square and pray.

It's a damn miracle.

https://huggingface.co/AbstractPhil/SD35-SIM-V1/tree/main/REFIT-V1

You are no kraken, beast.

I've tamed stronger than you and you are next.

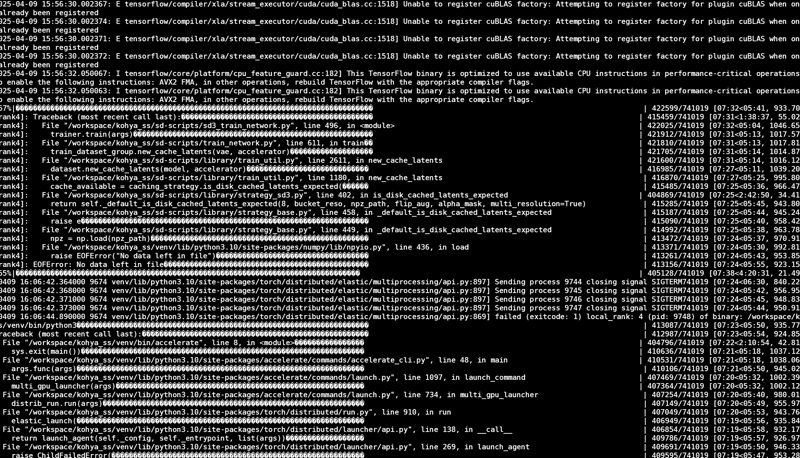

Attempt 312874; past the worst

Hopefully.

Errors like this happen after you run out of drive space. The system hits multiple failpoints for a long time until the latents catch up, and since it hard crashed, there's no way to determine WHICH latents are at fault.

My version has most of the assertions removed, making it much slower at caching and determining validity, but also much less crash-prone during prep. Assertions are fast, but terrible for production use if you don't carefully control their fail points. In systems like this you don't want assertions for much of the work, as the checks are often entirely outside the scope of the substructure.

In base sd-scripts, the assertions are everywhere. It's hard to curate the outcome from them without wrapping them in exception handlers, which slows the entire system down even more than carefully curating the output.
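A minimal sketch of the "curate instead of assert" approach: every latent is checked, failures are collected with a reason, and nothing raises mid-run. Latents are represented here as (shape, flat values) pairs to stay stdlib-only; real code would load arrays from the cache files. This is not sd-scripts code.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple


def check_latent(shape: Tuple[int, ...], values: Sequence[float],
                 expected_shape: Tuple[int, ...]) -> Optional[str]:
    """Return a reason string if the latent looks bad, else None. Never raises."""
    if shape != expected_shape:
        return f"shape {shape} != {expected_shape}"
    if any(not math.isfinite(v) for v in values):
        return "non-finite values"
    return None


def scan(latents: Dict[str, Tuple[Tuple[int, ...], List[float]]],
         expected_shape: Tuple[int, ...]) -> Dict[str, str]:
    """Check every entry and collect failures instead of stopping at the first one."""
    bad = {}
    for name, (shape, values) in latents.items():
        reason = check_latent(shape, values, expected_shape)
        if reason is not None:
            bad[name] = reason
    return bad


latents = {
    "a.npz": ((16, 2, 2), [0.1] * 64),
    "b.npz": ((16, 4, 4), [0.1] * 256),          # wrong size
    "c.npz": ((16, 2, 2), [float("nan")] * 64),  # corrupt
}
print(scan(latents, expected_shape=(16, 2, 2)))
```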

THE BIGGEST PROBLEM isn't that it doesn't work. It works fine if you're just slapping in a few thousand images; it fires through them and prepares the train almost instantly.

The problems come when you get into the 500k+ range, where you start seeing some nasty errors: Python version errors, math differences between versions, numpy RAM errors, variable overflow and bleed-over from space to space, unexplained crashes with only minimal logging, unexplained missing-latent errors that hard crash, unexplained overlapping data and size differences, and so on.

I lost some sleep on this one. I had to get up regularly to check that it was still caching, only to see it wasn't. I'm going to set up a sound notification for failures and so on. This is getting painful.
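The notification doesn't exist yet; a minimal sketch, assuming the caching job can be launched as a subprocess and that a terminal bell (or any callable you swap in, such as a push-notification call) is enough. `run_and_notify` is a hypothetical helper.

```python
import subprocess
import sys
from typing import Callable, List


def run_and_notify(cmd: List[str], notify: Callable[[str], None]) -> int:
    """Run a long job and call `notify` if it exits with a non-zero status."""
    result = subprocess.run(cmd)
    if result.returncode != 0:
        notify(f"{cmd[0]} failed with exit code {result.returncode}")
    return result.returncode


def terminal_bell(message: str) -> None:
    # "\a" rings the bell on most terminals; replace with any sound or push service.
    print("\a" + message, flush=True)


# Simulated failing job: a Python process that exits with code 3.
messages = []
code = run_and_notify([sys.executable, "-c", "raise SystemExit(3)"], messages.append)
print(code, len(messages))  # 3 1
```

In practice the notifier would be `terminal_bell` (or something louder) and the command would be the actual caching launch.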

My SDXL bucketing worked slightly differently, so the system required different forms of buckets. Base sd-scripts does not behave the same way, and it appears that it's faster. A lot faster.

However, it hits deep error points: nasty wastes of time that can run upward of 2 hours, reminding me of my fp32 sd-scripts version that I basically mothballed.

There are TOO MANY deep points that can fail in these scripts for them to be fully reliable. I need an iterative caching system that works better, similar to how I trained Beatrix's interpolator, but that's a long journey and a lot of failures to get something like that prepared.
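One common shape for an iterative, crash-tolerant cache: process items one at a time, write each output to a temp file and atomically rename it into place, and append the item to a manifest only after it's safely on disk. After a hard crash, a rerun skips everything already listed and never sees a half-written file. A minimal sketch; `encode` is a hypothetical placeholder for the real VAE-encode step, and none of this is sd-scripts' API.

```python
import os
import tempfile
from typing import Callable, Iterable


def cache_resumable(items: Iterable[str], encode: Callable[[str], bytes],
                    out_dir: str, manifest_path: str) -> int:
    """Encode items not yet in the manifest; return how many were written this run."""
    done = set()
    if os.path.exists(manifest_path):
        with open(manifest_path) as f:
            done = {line.strip() for line in f if line.strip()}

    written = 0
    with open(manifest_path, "a") as manifest:
        for item in items:
            if item in done:
                continue
            data = encode(item)
            final = os.path.join(out_dir, item + ".bin")
            fd, tmp = tempfile.mkstemp(dir=out_dir)
            with os.fdopen(fd, "wb") as f:
                f.write(data)
            os.replace(tmp, final)  # atomic rename on the same filesystem
            manifest.write(item + "\n")
            manifest.flush()        # record progress immediately
            written += 1
    return written
```

Calling it a second time with the same item list writes nothing; adding new items writes only those.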

I haven't explored much of sd-scripts, so the expansions I added often break other pieces without my knowing.

Patching my version might be the correct route at this point, depending on how well it responds. This shit needs to be on GitHub too; I'm tired of version controlling on my RunPod.

A little number reminder before I go to sleep; the infinity isn't always the correct infinity.

Corrupt latents found; well, at least there's a good reason it failed.

Roughly 55,000 of them. Damn. That's nasty.

Never uncheck this until your data is fully verified.

The last one didn't actually fail. A multitude of corrupt latents had somehow bypassed the checks. I ran some tests and found that some latents were substantially larger than they were supposed to be.

I manually updated my bmaltais Kohya scripts and their dependencies in the past hour or so. The new version is currently caching latents on a newer version of the dev branch. So far it's going smoothly.

I've stashed my v-pred Kohya away for now. For now, let's work out SD3.

Attempt 2; big mama train; 128 dims 128 alpha.

angry grumbles

I really didn't want to do this, because it's akin to leaving it up to the universe to decide rather than just hitting it with determinism.

The learning rate of my other attempt was overzealous, which caused SD3.5 to suffer its catastrophic death by step 500 and the T5 to follow suit by step 1000.

Instead, I plan to use settings very similar to my SDXL trains. Training the T5's text encoders to SD3.5 might be a bad idea, but if it tunes correctly we shouldn't need 3.5.

1024x1024 this time, no room for shattering 3.5 there.

MinSNR 7, bucketing canvas size scale bound to 1 resulting in a solid MinSNR 7. Nothing special there.
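For reference, the standard Min-SNR-gamma weighting with gamma = 7, as used in the Min-SNR paper and diffusers' training scripts: epsilon prediction weights each timestep's loss by min(SNR, gamma) / SNR, and v-prediction by min(SNR, gamma) / (SNR + 1). This sketch shows only that formula; how (or whether) sd-scripts applies it to SD3.5's flow-matching objective is not covered here.

```python
def min_snr_weight(snr: float, gamma: float = 7.0, v_prediction: bool = False) -> float:
    """Min-SNR-gamma loss weight for one timestep.

    epsilon prediction: min(SNR, gamma) / SNR
    v prediction:       min(SNR, gamma) / (SNR + 1)
    """
    clipped = min(snr, gamma)
    return clipped / (snr + 1) if v_prediction else clipped / snr


print(min_snr_weight(14.0))  # 0.5 -> easy, low-noise steps are down-weighted
print(min_snr_weight(3.0))   # 1.0 -> noisy steps keep full weight
```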

Gamma 0.1 for regularization.

Train 2 begins; it'll be up and operational in about 30 minutes, hopefully.

It won't make the T5 conform, though. It'll just nudge it, which should be a good showcase, but not what I wanted. I wanted full conformity, but alpha behaves differently than it's typically described, so I had to dive into the theory, which I don't want to do after work on any day.

Essentially, the math is wrong, and often touted as correct.
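Under the common LoRA convention (which, as far as I can tell, is what kohya's LoRA module uses), the low-rank update is multiplied by alpha / rank. That puts the two attempts' scale factors side by side: 737 alpha over 81 dims multiplies every update by about 9.1, versus exactly 1.0 for 128/128. With Adam-style optimizers the per-step change in the weight delta grows roughly linearly with that factor, so the first attempt behaves like a much higher learning rate. The rank-stabilized variant (rsLoRA) divides by sqrt(rank) instead and is included for comparison.

```python
import math


def lora_scale(alpha: float, rank: int, rank_stabilized: bool = False) -> float:
    """Multiplier on the low-rank update: delta_W = scale * (B @ A)."""
    return alpha / math.sqrt(rank) if rank_stabilized else alpha / rank


print(round(lora_scale(737, 81), 3))  # 9.099 -> first attempt
print(lora_scale(128, 128))           # 1.0   -> attempt 2
```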

First attempt; 737 alpha 81 dims failure.

The T5 suffered catastrophic death by step 500.

Catastrophic failure; training restart with much lower values.

Day 2; Training begins finally

Only 500 steps in and the thing is already shattered like a dropped vase.

SD3.5 is as topical as topical can be it seems. Training it may not be possible, I may need to just flat train flux.

I'll let it run for a few thousand and then test it against Flux.

Day 2; Latents are too big.

Much larger than their SDXL counterparts. It's resulted in me deleting all of the latents and spare models from the 4 TB RunPod network drive, only for the outcome to still be too large to cache properly to the drive.
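The size gap is mostly channel count: the SDXL VAE produces 4 latent channels, while the SD3.x VAE produces 16, both at 1/8 resolution. A back-of-the-envelope sketch, assuming float32 caching and 1024x1024 buckets; fp16 would halve it, smaller buckets shrink it, and extras such as flipped copies or file-format overhead add to it.

```python
def latent_bytes(height: int, width: int, channels: int, bytes_per_value: int = 4,
                 downscale: int = 8) -> int:
    """Raw size of one cached VAE latent (no file-format overhead)."""
    return channels * (height // downscale) * (width // downscale) * bytes_per_value


sdxl = latent_bytes(1024, 1024, channels=4)   # SDXL VAE: 4 latent channels
sd35 = latent_bytes(1024, 1024, channels=16)  # SD3.x VAE: 16 latent channels
print(sdxl, sd35, sd35 // sdxl)               # 262144 1048576 4

# One million 1024x1024 latents at float32, before any extras:
print(round(1_000_000 * sd35 / 1e12, 2), "TB")  # 1.05 TB
```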

I've omitted nearly 100,000 images that shouldn't be in there for this train and shoved them into my bigdata local drive; only to see them taking up too much space even there. 350 gigs of data isn't exactly a small amount after all.

Adding additional levels of compression on top of the data is exacerbating the issue, causing the latent prep to take that much longer.

Even after backing up all of my trained models to my local RAID 10, there still isn't enough space on the 4 TB drive. I suspect something is erroring on RunPod's end, so I'll run a couple of disk-space checks and go from there. As it stands, most of the bulk in the workspace is in the dataset folder, so a few size checks should show whether their system is failing or my drive is just too damn full.
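A minimal sketch of those size checks using only the standard library: `shutil.disk_usage` reports what the filesystem says is used and free, and a walk over the dataset folder totals what the files actually occupy. If the drive reports far more used space than the folders account for, that points at the drive rather than the data. Note that on network volumes `disk_usage` may reflect the backing filesystem rather than your quota.

```python
import os
import shutil


def folder_size(path: str) -> int:
    """Total bytes of regular files under `path` (symlinks are not followed)."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            if not os.path.islink(full):
                total += os.path.getsize(full)
    return total


def report(workspace: str, subfolder: str) -> None:
    usage = shutil.disk_usage(workspace)
    dataset = folder_size(os.path.join(workspace, subfolder))
    gib = 2 ** 30
    print(f"drive: {usage.used / gib:.1f} GiB used of {usage.total / gib:.1f} GiB")
    print(f"{subfolder}: {dataset / gib:.1f} GiB")
```

On a RunPod pod this would be something like `report("/workspace", "dataset")`, assuming the network volume is mounted at `/workspace` and the folder is named `dataset`.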

Instead of getting a simple solution, I may need to modify sd-scripts again, likely breaking something in the process, or train in batches of about 500k instead of 1 million. Leaving something like this open-ended is kind of a pain in the ass, as it tends to cause cascade failures with bucketing if you simply drop a bunch of images in, train on them, and discard them.

Bucketing is essentially a form of random selection on large data, and it will be affected no matter what I do here due to the sheer size of the dataset and the 4 tb limit on the runpod network drive. One option would be to train everything small first and then head up the chain, rather than having it select random buckets. Kind of give it a chance to catch up with the BlueLeaf positional embeddings, but there's no guarantee that will work either.
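The "train everything small first" option can be sketched as a bucket order: group images by bucket resolution, shuffle within each bucket, then emit buckets from smallest pixel area to largest instead of picking buckets at random. A hypothetical helper under those assumptions, not how sd-scripts schedules buckets.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Resolution = Tuple[int, int]


def small_first_batches(images: Dict[str, Resolution], batch_size: int,
                        seed: int = 0) -> List[List[str]]:
    """Group images by bucket, shuffle inside each bucket, and emit batches
    from the smallest bucket area to the largest."""
    rng = random.Random(seed)
    buckets = defaultdict(list)
    for name, res in images.items():
        buckets[res].append(name)

    batches = []
    for res in sorted(buckets, key=lambda r: r[0] * r[1]):
        names = buckets[res]
        rng.shuffle(names)
        batches.extend(names[i:i + batch_size] for i in range(0, len(names), batch_size))
    return batches


images = {"a": (1024, 1024), "b": (512, 512), "c": (512, 512), "d": (768, 1344)}
batches = small_first_batches(images, batch_size=2)
print(batches)  # the 512x512 pair first, then 768x1344, then 1024x1024
```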

Another option is to fully refit to the Parquet style, which would likely take me at least 3-4 days of headaches, pain, and a pissed-off producer wondering where my game dev code is, so I won't be doing that this week.

SD3.5 M is doomed to shatter either way, but the T5 must not get caught in the crossfire, and I'd like to at least have a toy to play with later if possible, other than the forged-in-fire Unchained.

Day 1; Preparing

It's been a long road, but at the end we see our goal.

The true unchained Flux.

Our CLIP_L Omega is ready. Our CLIP_G Omega is ready.

It's time to begin the final stages of preparation.

Oh, also: all mentions of SD3 mean we're using SD3.5, not SD3 base.

Step 0; Clip Preparation complete.

The plan here is simple, and the math is not.

SD3.5 has specific levels of dimensions that conform exactly to our T5 needs, and it was trained using the same text encoder parameters with CLIP_L.
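The dimensional fit is concrete: SD3's joint text stream is 4096 wide, the same as T5-XXL's hidden size, so T5 outputs go in as-is while CLIP-L (768) and CLIP-G (1280) features are concatenated per token and zero-padded up to 4096. The two CLIP pooled vectors are concatenated into a 2048-wide pooled input. A shape-only sketch; the T5 token count depends on the frontend (77 and 256 are both common), so it's a parameter here.

```python
def sd3_text_shapes(clip_tokens: int = 77, t5_tokens: int = 77):
    """Shapes of SD3's text conditioning built from CLIP-L, CLIP-G and T5-XXL."""
    clip_l, clip_g, t5 = 768, 1280, 4096  # hidden widths of each encoder
    clip_width = clip_l + clip_g          # 2048: CLIP-L and CLIP-G concatenated per token
    pad = t5 - clip_width                 # zero-pad CLIP features up to T5's 4096
    context = (clip_tokens + t5_tokens, clip_width + pad)  # CLIP tokens, then T5 tokens
    pooled = clip_l + clip_g              # pooled CLIP vectors, concatenated
    return context, pooled


print(sd3_text_shapes())               # ((154, 4096), 2048)
print(sd3_text_shapes(t5_tokens=256))  # ((333, 4096), 2048)
```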

We... have our target. We are going to completely burn away SD3.5.

CLIP_L and CLIP_G are conformed and perfect for the task. They are seasoned war chiefs on a battlefield of everything omitted from the SDXL and Flux realm. The gladiators of the depraved. The altruistic demons of the underworld.

The masters of identifying everything they shouldn't be able to.

Someone had to do it...

After interrogating them across many attempts, I found that attaching the vision portions to them required little, if any, vision finetuning for plain captions. I just want the vision portion to correctly reflect the desired tokens, so that's going to be a fun task in the future.

In any case, we have our masters; not just experts, but masters of the depraved. Forged from the Illustrious Zer0Int CLIPs, fused with ViT-L-14's base text_encoder, then with the NoobXL clips, and finally with the Pony clips, they usher in a powerful new era in clip architecture. They work. They work very well together when simply merged. There is no sorcery in the clip merges; they just work... and these have seen some shit.
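A "simple merge" is usually a weighted average of matching tensors across checkpoints. A minimal sketch with tensors flattened to lists so it stays stdlib-only; the weights and key names are illustrative, not the ratios used for these clips. Chaining pairwise merges, as in the fusion order above, is not the same as one averaged merge, since earlier models get diluted at each step.

```python
from typing import Dict, List

StateDict = Dict[str, List[float]]


def simple_merge(models: List[StateDict], weights: List[float]) -> StateDict:
    """Weighted average of matching tensors (flattened to lists) across state dicts."""
    if len(models) != len(weights):
        raise ValueError("one weight per model")
    total = sum(weights)
    merged = {}
    for key in models[0]:
        if not all(key in m for m in models):
            continue  # skip keys not shared by every model
        columns = zip(*(m[key] for m in models))
        merged[key] = [sum(w * v for w, v in zip(weights, col)) / total for col in columns]
    return merged


a = {"text_projection": [1.0, 2.0]}
b = {"text_projection": [3.0, 6.0]}
print(simple_merge([a, b], [0.5, 0.5]))  # {'text_projection': [2.0, 4.0]}
```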

To say the least. CLIP_G already saw LAION-2B, so it really just needed a nudge in the goal direction. CLIP_L, however, was more stubborn, as it had gone through many different stages and integrations, but it has conformed correctly to the goal given enough samples: roughly 150 million or so.

These clips have been fused and prepared for this. The finetunes aren't expensive. The outcomes are very good when curated.

I can say this with the utmost confidence: when BeatriXL releases, the outcome will speak for itself. BeatriXL's clips are the strongest clips I've made and the basis for this training. The masters of the depraved and the experts at spotting the universe itself.

Stage 1; Destroying SD3 to train the T5.

This is hosted on HuggingFace and is fully public.

https://huggingface.co/AbstractPhil/SD35-SIM-V1/

This is going to be very controversial when I say it; I guarantee it. The desire to keep order in these machines is... high, especially since the baseline training is so important.

What we have here though... is an opportunity to prove a hypothesis.

I believe, that the T5xxL will withstand the storm like that old oak.

The math shows it. The methods of training show it. The outcomes from using it show it.

The T5 will endure, and the SD3 will not; mathematically.

So... let me put this simply: I can't fully finetune the T5-Unchained. As Felldude puts it, you need a datacenter and more data than fits on Civitai to train the T5 effectively. For a full finetune, I agree this is fairly accurate. I simply cannot afford that.

So I got to thinking... and then I did some baseline math with LoRA training.

How can we train this... using cost-effective methods, that don't completely obliterate my wallet, and also will give us an approximation of the desired outcome with minimal time?
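The baseline math can be made concrete with T5 v1.1 XXL encoder dimensions (d_model 4096, gated feed-forward width 10240, 24 blocks). This sketch assumes LoRA on every attention projection (q, k, v, o) and every feed-forward linear (wi_0, wi_1, wo) in all 24 blocks, with embeddings and norms excluded; the actual target modules in a given sd-scripts run may differ. At rank 81, the adapters come to about 3.2% of the adapted weights.

```python
D_MODEL, D_FF, LAYERS = 4096, 10240, 24  # T5 v1.1 XXL encoder dimensions


def linear_shapes():
    """(in, out) shapes of the linear layers in one T5 v1.1 encoder block."""
    attn = [(D_MODEL, D_MODEL)] * 4                           # q, k, v, o
    ff = [(D_MODEL, D_FF), (D_MODEL, D_FF), (D_FF, D_MODEL)]  # wi_0, wi_1, wo (gated FF)
    return attn + ff


def full_params() -> int:
    return LAYERS * sum(i * o for i, o in linear_shapes())


def lora_params(rank: int) -> int:
    # Each adapted linear gets A (rank x in) and B (out x rank).
    return LAYERS * sum(rank * (i + o) for i, o in linear_shapes())


full, lora = full_params(), lora_params(81)
print(full, lora, f"{lora / full:.2%}")  # 4630511616 147308544 3.18%
```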

You can see the first prototype here;

https://huggingface.co/AbstractPhil/SD35-SIM-V1/tree/main/REFIT-V1

I actually expected it to be bigger. I ran 500 steps of 10,000 images, and the outcome shifted the entire core of SD35 with almost no updates, yet the T5 is just fine. It endured, actually: it shows new behavior in the UNET that wasn't there, after almost no steps, and doesn't even really need the UNET from the lora.

It uses 81 dimensions and 737 alpha.

cosine_with_restarts, 9 repeats

min_snr 7 will help a little but it'll still shatter like glass soon.

No matter what I set this model to, the alpha will burn the core away in no time; the effective learning rate becomes too high.
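A minimal sketch of the learning-rate multiplier under a cosine-with-restarts schedule, assuming "9 repeats" means nine restart cycles. It mirrors the hard-restarts cosine schedule found in Hugging Face transformers and diffusers: optional linear warmup, then each cycle decays from the full LR toward zero and jumps back up. The multiplication happens before the division so cycle boundaries land exactly on whole numbers.

```python
import math


def cosine_with_restarts(step: int, total_steps: int, num_cycles: int,
                         warmup_steps: int = 0) -> float:
    """LR multiplier in [0, 1]: linear warmup, then `num_cycles` cosine decays,
    each restarting from the full learning rate."""
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    span = max(1, total_steps - warmup_steps)
    if step - warmup_steps >= span:
        return 0.0
    cycle_pos = (num_cycles * (step - warmup_steps)) / span % 1.0
    return 0.5 * (1.0 + math.cos(math.pi * cycle_pos))


for s in (0, 50, 99, 100, 899):
    print(s, round(cosine_with_restarts(s, total_steps=900, num_cycles=9), 3))
```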

This will completely destroy SD3 given one epoch of my 1 million images. There will be nothing left of what it was. However... I'm setting up canary prompts here: a multitude of high-depth, high-complexity prompts meant to expose every little potential failpoint in the model as it learns.

Those won't matter though, the real outcome is data.

Stage 2; After SD3 is destroyed the first time, I will pick the T5 from the ashes.

My frozen CLIP_L and CLIP_G stand steadfast, ready for another battle.

Our new T5 is going to be the blueprint.

Test 1; difference test

I'll compare this T5 with the original Unchained and test each layer's differences systematically and programmatically.

If the values fit within the intended goal percentage we'll be golden. If not, it'll need to be burned again.
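One way to make "fit within the intended goal percentage" measurable is the relative change of each tensor, ||new - old|| / ||old||, flagged against a threshold. A minimal sketch with tensors flattened to lists; the metric choice, key names, and the 5% limit are placeholders, not the author's actual goal values.

```python
import math
from typing import Dict, List

StateDict = Dict[str, List[float]]


def relative_change(old: List[float], new: List[float]) -> float:
    """||new - old|| / ||old|| for one flattened tensor."""
    diff = math.sqrt(sum((n - o) ** 2 for o, n in zip(old, new)))
    base = math.sqrt(sum(o * o for o in old))
    if base == 0:
        return 0.0 if diff == 0 else float("inf")
    return diff / base


def layer_report(original: StateDict, trained: StateDict, limit: float) -> Dict[str, float]:
    """Return the layers whose relative change exceeds `limit` (e.g. 0.05 for 5%)."""
    out = {}
    for key, old in original.items():
        if key in trained:
            change = relative_change(old, trained[key])
            if change > limit:
                out[key] = change
    return out


orig = {"block.0.q": [1.0, 0.0], "block.1.q": [3.0, 4.0]}
new = {"block.0.q": [1.0, 0.02], "block.1.q": [3.0, 5.0]}
print(layer_report(orig, new, limit=0.05))  # {'block.1.q': 0.2}
```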

Next, I'll take the encoders' inference outputs and compare them across a couple hundred thousand checks.

If my assessment is correct, we will have forged a new T5 that still works very similarly to the old one, with our steady companions CLIP_L and CLIP_G keeping SD3 together as long as possible while the T5 learns its trade from a new perspective. A new dynamic.

Test 2; Reinfusing original T5-Unchained layers to supplement damage.

I would be shocked if there weren't tons of burnt ends; however, these can be identified programmatically, and the original T5 layers can be re-merged with the new layers depending on the damage dealt to each one.
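A minimal sketch of one possible re-merge policy, assuming each layer already has a damage score (for example a relative-change measurement). Layers at or under the limit are left alone; layers past it get original weights blended back in, with the restore fraction growing the further past the limit they are. The blend rule and names are hypothetical, not the planned procedure.

```python
from typing import Dict, List

StateDict = Dict[str, List[float]]


def repair(original: StateDict, trained: StateDict, damage: Dict[str, float],
           limit: float, max_restore: float = 1.0) -> StateDict:
    """Blend original weights back into layers whose damage score exceeds `limit`.

    The restore fraction is 0 at the limit and approaches 1 far past it,
    capped at `max_restore` (1.0 means a full revert is allowed).
    """
    repaired = {}
    for key, new in trained.items():
        score = damage.get(key, 0.0)
        if score <= limit or key not in original:
            repaired[key] = list(new)
            continue
        t = min(max_restore, (score - limit) / score)
        repaired[key] = [(1 - t) * n + t * o for o, n in zip(original[key], new)]
    return repaired


out = repair({"a": [3.0, 4.0], "b": [1.0]},
             {"a": [3.0, 5.0], "b": [2.0]},
             damage={"a": 0.2, "b": 0.01}, limit=0.1)
print(out)  # {'a': [3.0, 4.5], 'b': [2.0]}
```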

This is a programmatic process and will likely happen next weekend after the first destruction.