I wanted to write this short article about standalone embeddings, aka Textual Inversion, to clarify what they are and how they work. I'll keep it short and to the point, but it may be useful to read my article about how SDXL works first.

In any case, I'll do a quick recap:

SDXL is made of three parts:

the CLIP that converts the prompt into a "guide" for what kind of image you want

the UNET that "makes" the picture in a latent form

the VAE that converts the latent picture into a real picture

A LoRA is a patch that is applied to the CLIP and UNET:

new concepts in the prompt can be used thanks to the CLIP patch

the UNET patch allows generating new concepts or altering existing ones

Textual Inversion (TI) acts as a part of the prompt but WITHOUT going through the CLIP's own word-to-vector lookup: its vectors are supplied ready-made.

There are two main ways to create a new TI:

train it based on images

generate it based on words

When building my own embeddings, I generate them from a set of words using an extension for A1111. But what is an embedding, really?

A bit of math

In AI/ML, concepts are often represented as a vector, "an arrow" pointing to a specific direction in space and those arrows are not distributed randomly:

All similar concepts are in the same vicinity in this space

Adding and subtracting them normally makes it possible to find other concepts, like "cat - adult + child = kitten"

Those arrows are what are called embeddings in LLMs and diffusion models.
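To make the "arrow arithmetic" concrete, here is a toy sketch in plain Python. The 3-dimensional vectors and their values are invented purely for illustration (real embeddings have hundreds of dimensions and are learned, not hand-written); it only shows how addition, subtraction and a similarity measure operate on such arrows.

```python
import math

# Hypothetical 3-D "embeddings". Axes loosely mean (feline, canine, age),
# with age = +1 for grown-up and -1 for young. Values are made up.
toy = {
    "cat":    [1.0, 0.0, 1.0],
    "kitten": [1.0, 0.0, -1.0],
    "dog":    [0.0, 1.0, 1.0],
    "puppy":  [0.0, 1.0, -1.0],
    "adult":  [0.0, 0.0, 1.0],
    "child":  [0.0, 0.0, -1.0],
}

def add(a, b):
    return [x + y for x, y in zip(a, b)]

def sub(a, b):
    return [x - y for x, y in zip(a, b)]

def cosine(a, b):
    """Cosine similarity: 1.0 means the two arrows point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(vec):
    """Return the toy word whose arrow is most aligned with vec."""
    return max(toy, key=lambda word: cosine(vec, toy[word]))

# "cat - adult + child"
result = add(sub(toy["cat"], toy["adult"]), toy["child"])
print(nearest(result))  # kitten
```

In this toy space the result lands exactly on "kitten"; with real embeddings the result is only close to the expected concept, which is why a similarity measure is used to find the nearest one.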

What a CLIP model does is convert your prompt into several of those arrows, which are then fed to the UNET to generate the picture associated with the concept: that's the "conditioning" of the UNET.

Now, what is a TI? Simple: a Textual Inversion is a set of pre-computed arrows.

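When you add them to the prompt, the values of the arrows do not come from your model's CLIP: they are already in the format of the per-token vectors the CLIP works with and, as such, are inserted as-is where the CLIP would otherwise look up its own vector for each token. The rest of the text encoder then processes them like any other token to build the "conditioning".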

This works differently from a LoRA: when you add a LoRA, the CLIP of your model is altered and the words of your prompt generate slightly different arrows. A LoRA also alters what the UNET can do, whereas a TI only influences the UNET through the conditioning: it does not modify what the UNET can generate.
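The idea of "pre-computed arrows inserted as-is" can be pictured with a toy sketch. Everything here is hypothetical: the names `TOKEN_TABLE`, `LOADED_TI` and `build_sequence`, the 2-dimensional vectors, and the whitespace "tokenizer" are simplifications for illustration and are not how any particular tool is implemented.

```python
# Hypothetical word -> vector table of the currently loaded checkpoint.
TOKEN_TABLE = {
    "woman": [0.02, 0.01],
    "beach": [-0.03, 0.04],
    "dress": [0.05, -0.01],
}

# Hypothetical Textual Inversion files: name -> list of pre-computed vectors.
# An embedding may hold several vectors (here, two).
LOADED_TI = {
    "my-style": [[0.10, 0.20], [0.30, 0.40]],
}

def build_sequence(prompt):
    """Build the per-token vector sequence for a prompt (toy version)."""
    sequence = []
    for word in prompt.split():
        if word in LOADED_TI:
            # The stored vectors are copied as-is, whatever model is loaded.
            sequence.extend(LOADED_TI[word])
        else:
            # Ordinary words use the loaded checkpoint's own table.
            sequence.append(TOKEN_TABLE[word])
    return sequence

vectors = build_sequence("woman my-style beach")
print(len(vectors))  # 4: one for "woman", two from the embedding, one for "beach"
```

Swapping `TOKEN_TABLE` for another checkpoint's table would change the vectors of ordinary words, while the vectors stored in the embedding would stay exactly the same.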

And now, for the fun part:

Some models have very different CLIPs: base SDXL, Pony and Illustrious clearly know different concepts, and some even add special tags, like score_9 and so on in Pony or the Danbooru tags, that base SDXL doesn't know about.

The embedding merge extension mentioned earlier simply generates a TI by parsing a prompt with the CLIP and packaging the resulting embeddings in the right format (it also allows doing some math on the fly with the arrows).

When I generate an embedding, the extension uses the CLIP of my currently loaded model to generate the arrows... which means that when you use one of my embeddings, those pre-packaged words actually come from the CLIP of another model!

Since the CLIPs are normally not too different within the same "family" of models, this should not have too much impact, but it can still make a slight difference.

When I generate embeddings for Illustrious, I generally have one of my AnBan models loaded.

A Textual Inversion does not count as a single word in the prompt: it can contain between 1 and 75 tokens, each represented as an embedding. The shorter the embedding, the better it integrates into your prompt without needing to chunk it (most tools break longer prompts into 75-token chunks that are then concatenated to make the final conditioning).
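As a rough illustration of why embedding length matters, here is a minimal sketch that estimates how many 75-token chunks a prompt needs once an embedding is added. The function name `chunks_needed` is hypothetical, and the calculation is a simplification: real tools may split chunks at other boundaries (for example at commas), so treat the result as an estimate.

```python
import math

CHUNK_SIZE = 75  # tokens per chunk, as described above

def chunks_needed(prompt_tokens, embedding_tokens=0):
    """Estimate the number of 75-token chunks for a prompt plus an embedding."""
    total = prompt_tokens + embedding_tokens
    return max(1, math.ceil(total / CHUNK_SIZE))

print(chunks_needed(60, 10))  # 1: 70 tokens still fit in a single chunk
print(chunks_needed(60, 20))  # 2: 80 tokens spill into a second chunk
```

With a 60-token prompt, a 10-token embedding still fits in one chunk, while a 20-token embedding pushes the prompt into a second one.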

From my tests with the Civitai on-site generator, some larger embeddings are not loaded, and I still haven't understood why.

And that's it! As a final demo, let's dig into an example:

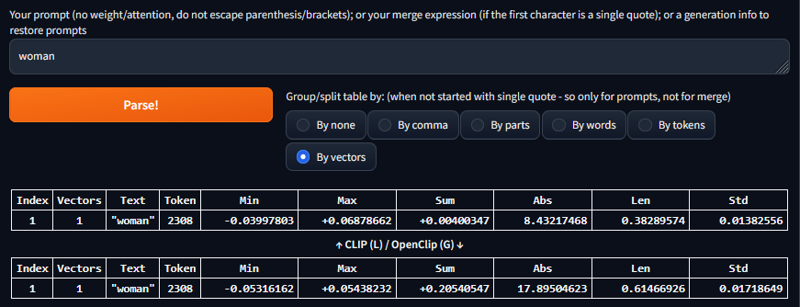

Using the extension, I generated two embeddings for the word "woman", one with Wai 14.0 and one with Illustrious V2.0.

In both cases, this word is a single token in the SDXL vocabulary: token 2308.

Now, let's see what's in there:

(venv) ubuntu@webui:~/webui/embeddings/embedding_merge$ python

Python 3.10.12 (main, Feb 4 2025, 14:57:36) [GCC 11.4.0] on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import torch

>>> from safetensors.torch import load_file

>>> wai = load_file("woman-wai14.safetensors")

>>> ixl = load_file("woman-ixl2.safetensors")

>>> wai.keys()

dict_keys(['clip_g', 'clip_l'])

>>> ixl.keys()

dict_keys(['clip_g', 'clip_l'])

Both contain the CLIP G and CLIP L values for the embeddings (SDXL uses two text encoder models). CLIP L produces 768-dimension arrows while CLIP G produces 1280-dimension arrows.

How many arrows are in there?

>>> wai["clip_l"].size()

torch.Size([1, 768])

>>> wai["clip_g"].size()

torch.Size([1, 1280])

>>> ixl["clip_l"].size()

torch.Size([1, 768])

>>> ixl["clip_g"].size()

torch.Size([1, 1280])

Good, only one arrow since "woman" is a single token (some words are more than one token).

Now, let's compare those:

>>> wai["clip_l"][0][0:10]

tensor([ 0.0212, 0.0053, -0.0010, -0.0252, 0.0013, -0.0053, -0.0105, 0.0195, 0.0023, -0.0204])

>>> ixl["clip_l"][0][0:10]

tensor([ 0.0213, -0.0005, 0.0006, -0.0261, -0.0024, -0.0067, -0.0111, 0.0190, 0.0027, -0.0210])

Even with just the first 10 values of the arrow, there is already a difference! Let's see how much:

>>> torch.mean(torch.abs(wai["clip_l"][0]-ixl["clip_l"][0]))

tensor(0.0011)

>>> torch.mean(torch.abs(wai["clip_g"][0]-ixl["clip_g"][0]))

tensor(0.0010)

With individual values on the order of 0.01 to 0.02, that's not a trivial difference, is it? 😉
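To put that number in perspective, here is a small plain-Python sketch that reuses only the first 10 CLIP L values printed above (not the full 768-value arrows, so the figures differ from the full-vector means). It computes the mean absolute difference, the same difference relative to the typical magnitude of the values, and the cosine similarity between the two partial arrows. The variable and function names are my own choices for illustration.

```python
import math

# First 10 CLIP L values of each embedding, copied from the output above.
wai = [0.0212, 0.0053, -0.0010, -0.0252, 0.0013,
       -0.0053, -0.0105, 0.0195, 0.0023, -0.0204]
ixl = [0.0213, -0.0005, 0.0006, -0.0261, -0.0024,
       -0.0067, -0.0111, 0.0190, 0.0027, -0.0210]

def mean_abs(values):
    return sum(abs(v) for v in values) / len(values)

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Same metric as torch.mean(torch.abs(a - b)), restricted to 10 values.
mad = mean_abs([x - y for x, y in zip(wai, ixl)])
relative = mad / mean_abs(wai)   # difference relative to typical magnitude
similarity = cosine(wai, ixl)    # 1.0 would mean identical direction

print(f"mean abs diff: {mad:.5f}")  # mean abs diff: 0.00156
print(f"relative diff: {relative:.1%}")
print(f"cosine similarity: {similarity:.4f}")
```

On this small sample the two arrows still point in almost the same direction, yet the per-value difference is a noticeable fraction of the values themselves, which fits the "same family, slightly different CLIP" picture.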

Some examples

Now, let's see if this really has an impact. I'll be using Wai 14.0 to generate the same image with three different versions of the embedding: wai14, ixl2 and hoj2, the last one from my own checkpoint.

<embedding for the word woman>, summer dress, beach, from side, looking away

Wai14 (which, by the way, produces the same result as if I just put "woman" instead of the embedding):

Ixl2:

Between those two, the difference is almost invisible: only the hair in front of the forehead and the dress ribbon changed slightly. But what about the one generated with my own checkpoint?

Hoj2:

The eye color changed! Of course, this is because I didn't add any information about it in the prompt, but still, you can now see why a Textual Inversion may produce different results from checkpoint to checkpoint.

Thank you for reading! 💖