Over the past month I've been researching Wan LoRA training, with my computer running training jobs almost 24/7. As someone who started video training completely from scratch, I ran into many pitfalls in data collection, construction, and processing, so I've written this article in the hope of helping anyone who is training for the first time. (The whole article was translated by Gemini 2.0; please forgive any translation issues.)

1. Video Data Processing Tools

(Simple scripts for these tools can be found in the attachments; please download and install the software yourself.)

Video download tools: yt-dlp (for YouTube, TikTok, etc.), IDM

Video cutting and processing: PySceneDetect, ffmpeg/python-ffmpeg, LosslessCut, or other editing software

Video annotation API: Qwen-vl-max/Gemini-2.0/2.5
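As an example of the first tool, here is a minimal sketch of downloading source videos with yt-dlp's Python API (`yt_dlp.YoutubeDL`). The output folder, the 1080p cap, and the helper names `ytdlp_options`/`download` are illustrative assumptions, not part of the attached scripts.

```python
def ytdlp_options(out_dir="raw_videos", max_height=1080):
    """Build yt-dlp options (illustrative defaults, adjust to taste)."""
    return {
        # Best stream up to max_height; fall back to the best single file.
        "format": f"bv*[height<={max_height}]/b[height<={max_height}]/b",
        # Keep the video id in the filename so clips can be traced back to their source.
        "outtmpl": f"{out_dir}/%(title)s [%(id)s].%(ext)s",
        # Download only the single video even if the URL belongs to a playlist.
        "noplaylist": True,
    }


def download(urls, **option_kwargs):
    """Download each URL. yt-dlp is imported lazily so ytdlp_options works without it."""
    import yt_dlp  # pip install yt-dlp

    with yt_dlp.YoutubeDL(ytdlp_options(**option_kwargs)) as ydl:
        return ydl.download(urls)  # yt-dlp's return code (0 on success)
```

Usage would look like `download([url], out_dir="raw_videos")`. Audio is not needed for training, so no audio/video merge is requested here.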

2. Video Training Workflow

2.1 Video Data Acquisition

Before training, you need to decide on the concept of the LoRA you want to train: is it a style LoRA, a character LoRA, or a shot LoRA (a specific camera move or effect)? If you lack inspiration, film-analysis YouTubers are a good source of ideas; for instance, my Dolly Zoom LoRA was inspired by StudioBinder's YouTube videos. Once you've settled on a concept, you need to find data. Compared with image data, video data is far scarcer and less often annotated, which makes acquiring it more challenging. I'll cover two scenarios.

(1) Style Data Acquisition

Style data is the easier case. Style LoRAs are mainly trained on T2V models, which accept both images and videos. However, a LoRA trained purely on images can degrade motion quality, so it's advisable to include video data. The best way to get style data is to find films in the corresponding style, split them automatically with PySceneDetect, and then screen the clips manually. Many Wan LoRA authors mention that 10-20 video clips can already give good results, but more is generally better. I recommend the write-up by the author of the Studio Ghibli/Redline style LoRAs on Civitai (https://civitai.com/models/1404755/studio-ghibli-wan21-t2v-14b). They use a large number of clips (over 100), set many different target frame values, and combine a low constant learning rate (3e-5 to 6e-5) with a high step count. This fits the style very well, at the cost of longer training time.
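The split-then-screen step can be sketched with PySceneDetect's Python API (`detect`, `ContentDetector`, `split_video_ffmpeg`). Pre-filtering to 1-5 second scenes follows the clip lengths recommended later in this article; the helper names and the default detector threshold are assumptions, and manual screening is still needed afterwards.

```python
import os


def keep_by_duration(scenes, min_s=1.0, max_s=5.0, to_seconds=float):
    """Keep (start, end) scenes whose length lies within [min_s, max_s] seconds."""
    return [
        (start, end)
        for start, end in scenes
        if min_s <= to_seconds(end) - to_seconds(start) <= max_s
    ]


def detect_and_split(video_path, out_dir, min_s=1.0, max_s=5.0):
    """Detect cuts, keep short scenes, and write each kept scene to out_dir via ffmpeg."""
    from scenedetect import ContentDetector, detect, split_video_ffmpeg

    os.makedirs(out_dir, exist_ok=True)
    # detect() returns a list of (start, end) FrameTimecode pairs.
    scene_list = detect(video_path, ContentDetector())
    kept = keep_by_duration(scene_list, min_s, max_s, to_seconds=lambda tc: tc.get_seconds())
    # $VIDEO_NAME and $SCENE_NUMBER are PySceneDetect filename template variables.
    split_video_ffmpeg(
        video_path,
        kept,
        output_file_template=f"{out_dir}/$VIDEO_NAME-Scene-$SCENE_NUMBER.mp4",
    )
    return kept
```

Filtering by duration before splitting avoids writing many long or very short clips that would be discarded during screening anyway.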

(2) Action/Special Effects Data

Compared with style data, action/special-effects shots are harder to find, mainly because many such shots in movies are not labeled or catalogued anywhere (invisibility effects, for example). I can only offer some preliminary ideas here. I usually take two approaches: 1. Ask an LLM such as ChatGPT for movies that contain the action or effect; it sometimes gives nonsensical answers, but many of its suggestions are useful. 2. Search YouTube/TikTok; many special-effects shots have tutorial videos, and famous shots such as the Dolly Zoom and Crash Zoom have dedicated film-analysis videos. For example, StudioBinder has a video compiling movies that use the Dolly Zoom, from which you can identify the films and extract the clips.

2.2 Video Data Processing

After acquiring the video data, you first need to cut it. For style data, PySceneDetect is recommended; it typically produces clips of 1-30 seconds, which you should then screen manually. On a 4090 with 24GB VRAM, training is stable at [360,360] resolution with blockswap=28 and max_frame=81 (1+16*5, i.e. 5 seconds at 16 fps), so I keep clips under 5 seconds. In general, choose the cut length based on your VRAM; with more VRAM you can use longer clips. You can also let the latent-caching stage do the cutting by choosing a frame extraction method, such as "head" to take the first N frames or "uniform" with frame_sample to sample frames across the clip. I still recommend cutting in advance, though, because "head" may keep only part of a clip, leaving the caption (which describes the whole clip) inconsistent with the cached latents.
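To make the frame arithmetic in this paragraph concrete, the sketch below computes frame counts at 16 fps and shows which frames simplified "head" and "uniform" extraction would pick. These helpers are hypothetical illustrations of the idea, not musubi-tuner's actual implementation.

```python
WAN_FPS = 16  # frame rate used by Wan, per this article


def frames_for_seconds(seconds, fps=WAN_FPS):
    """Frame count for a clip, following the article's 81 = 1 + 16 * 5."""
    return 1 + int(fps * seconds)


def head_indices(total_frames, max_frames):
    """Simplified 'head': only the first max_frames frames are kept."""
    return list(range(min(total_frames, max_frames)))


def uniform_starts(total_frames, window, count):
    """Simplified 'uniform': start indices of `count` evenly spaced windows of `window` frames."""
    if total_frames < window or count < 1:
        return []
    if count == 1:
        return [0]
    span = total_frames - window
    return [round(i * span / (count - 1)) for i in range(count)]
```

For a 10-second clip at 16 fps (161 frames) with max_frame=81, `head_indices` keeps only frames 0-80, roughly the first half, so a caption written for the whole clip may not match; `uniform_starts(161, 81, 3)` instead spreads three windows starting at frames 0, 40, and 80.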

Besides clip length, pay attention to FPS. Because the data comes from different sources, the clips usually have different frame rates. After cutting, I recommend converting every clip to 16 fps (the frame rate Wan uses) with ffmpeg, so that when you later set target_fps, source_fps, and max_frame you can calculate exactly how much footage is used. If this isn't handled, and high-frame-rate clips are combined with max_frame/target_frame settings that only take the first N frames, only part of each clip is actually used for training. For example, my moonwalk LoRA came out in slow motion (left: LoRA trained on 16 fps data; right: LoRA trained without proper FPS handling). In summary, my processing method is to cut clips to 1-5 seconds and then convert them to 16 fps with ffmpeg. (Attachment: FPScompare.MP4)
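A minimal sketch of the 16 fps conversion, calling the ffmpeg CLI through `subprocess` with its `fps` filter (which drops or duplicates frames to reach a constant rate). The encoder settings (`libx264`, CRF 18, audio dropped) and helper names are assumptions; ffmpeg must be on PATH. The last two helpers show one plausible reading of the slow-motion moonwalk result.

```python
import subprocess
from pathlib import Path

WAN_FPS = 16


def to_16fps_cmd(src, dst, fps=WAN_FPS):
    """ffmpeg command that resamples a clip to a constant `fps` and drops audio."""
    return [
        "ffmpeg", "-y", "-i", str(src),
        "-vf", f"fps={fps}",              # constant output frame rate
        "-c:v", "libx264", "-crf", "18",  # assumed quality settings
        "-an",                            # audio is not needed for training
        str(dst),
    ]


def convert_folder(src_dir, dst_dir):
    """Convert every .mp4 in src_dir to 16 fps, writing results to dst_dir."""
    out = Path(dst_dir)
    out.mkdir(parents=True, exist_ok=True)
    for clip in sorted(Path(src_dir).glob("*.mp4")):
        subprocess.run(to_16fps_cmd(clip, out / clip.name), check=True)


def seconds_covered(max_frames, source_fps):
    """Real-time seconds of motion contained in max_frames of source_fps footage."""
    return max_frames / source_fps


def slowdown_factor(source_fps, assumed_fps=WAN_FPS):
    """How much slower motion looks if source_fps footage is treated as assumed_fps."""
    return source_fps / assumed_fps
```

For example, 81 frames of 60 fps footage contain only 1.35 s of real motion; if the model learns them as 16 fps video they span about 5 s, i.e. 3.75x slower, which is consistent with the slow-motion effect described above.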

2.3 Video Data Captioning

Few large models support video captioning. If you are in Mainland China without a VPN, you can use the Qwen-vl-max API on Alibaba Cloud Bailian; clips of a few seconds are small, so the cost is very low. Note, however, that when a video is sent as base64, Qwen-vl-max does not accept clips shorter than 2 seconds or larger than 10 MB. If you are outside Mainland China or have a VPN, the Gemini 2.0/2.5 API is the better choice, as it's free and highly accurate. Be aware that VPN nodes located in Hong Kong may not work well, and videos have to be sent inline (I couldn't get file uploads to work no matter what I tried). Inline requests have a 20 MB size limit, which 1-5 second clips generally won't exceed.

My Gemini 2.0 script uses structured output covering the scene, the actor's actions, the actor's appearance, lighting, and so on. Putting all of these fields into the caption would make it quite long, so the full result is also saved as JSON. Many caption managers, such as BooruDatasetTagManager, don't currently support videos; my lazy workaround is to generate a thumbnail for each clip and organize the captions through those.
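The sketch below checks a clip against the size and duration limits reported in this paragraph, then sends it inline to Gemini through the `google-genai` SDK (`genai.Client`, `types.Part.from_bytes`, `GenerateContentConfig`) and saves both the full JSON and a shorter text caption. The limits are the article's observations, not official figures; the field names in `short_caption` are hypothetical and depend on your prompt, since no response schema is enforced here. Verify the SDK calls against its current documentation.

```python
import json
from pathlib import Path

# Limits as reported in this article; check current provider docs before relying on them.
QWEN_BASE64_MIN_SECONDS = 2.0
QWEN_BASE64_MAX_BYTES = 10 * 1024 * 1024    # "10 MB", assumed to mean the raw file size
GEMINI_INLINE_MAX_BYTES = 20 * 1024 * 1024  # "20 MB" inline request limit


def usable_backends(duration_s, size_bytes):
    """Return which captioning routes a clip fits, according to the limits above."""
    routes = []
    if size_bytes <= GEMINI_INLINE_MAX_BYTES:
        routes.append("gemini_inline")
    if duration_s >= QWEN_BASE64_MIN_SECONDS and size_bytes <= QWEN_BASE64_MAX_BYTES:
        routes.append("qwen_base64")
    return routes


def short_caption(fields, keys=("scene", "action", "appearance")):
    """Join a few structured fields into a shorter training caption (keys are hypothetical)."""
    return " ".join(str(fields[k]).strip() for k in keys if fields.get(k))


def caption_with_gemini(video_path, prompt, model="gemini-2.0-flash"):
    """Send a clip inline to Gemini, save full JSON and a short .txt caption next to it."""
    from google import genai
    from google.genai import types

    client = genai.Client()  # reads the API key from the environment
    data = Path(video_path).read_bytes()
    response = client.models.generate_content(
        model=model,
        contents=[types.Part.from_bytes(data=data, mime_type="video/mp4"), prompt],
        config=types.GenerateContentConfig(response_mime_type="application/json"),
    )
    fields = json.loads(response.text)  # assumes the prompt asks for a single JSON object
    Path(video_path).with_suffix(".json").write_text(json.dumps(fields, indent=2))
    Path(video_path).with_suffix(".txt").write_text(short_caption(fields))
    return fields
```

Keeping the full JSON means you can regenerate shorter or longer captions later without paying for another API call.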

2.4 Training

A note on the findings below: each comes from a single training run on a single dataset, so there is an element of randomness, and different datasets have different optimal parameters. Treat them only as a starting point for first-time trainers. To repeat: different datasets have different optimal parameter settings.

For the hyperparameter experiments, I used WAN_I2V_14B_720P with lora_dim=32 and lora_alpha=32, and first established a set of settings that produced a good LoRA. Then, keeping the dataset and all other parameters unchanged, I varied ar_bucket, frame_extraction, lr, etc. one at a time, trained, and compared the results to arrive at the findings below.
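The one-factor-at-a-time procedure described here can be sketched as a small run planner: start from a baseline and derive one run per changed setting. The baseline values below are illustrative (the article only states the model, lora_dim, and lora_alpha for the baseline), and the dictionary keys are not musubi-tuner option names.

```python
BASELINE = {
    # Illustrative baseline; only model, lora_dim, and lora_alpha are stated in the article.
    "model": "WAN_I2V_14B_720P",
    "lora_dim": 32,
    "lora_alpha": 32,
    "resolution": (256, 256),
    "frame_extraction": "full",
    "lr": 1e-4,
}

VARIANTS = {
    "resolution": [(360, 360)],
    "frame_extraction": ["head"],
    "lr": [2e-4],
}


def one_at_a_time(baseline, variants):
    """Baseline run plus one run per (parameter, value), changing only that parameter."""
    runs = [("baseline", dict(baseline))]
    for key, values in variants.items():
        for value in values:
            config = dict(baseline)
            config[key] = value
            runs.append((f"{key}={value}", config))
    return runs
```

Because each run differs from the baseline in exactly one setting, any difference in results can be attributed to that setting (up to the run-to-run randomness noted above).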

Most people currently use diffusion-pipe or musubi-tuner for Wan training. I've used both and recommend musubi-tuner: with diffusion-pipe I ran into WSL running out of memory and blockswap errors, and these problems disappeared after switching. I recommend sdbds's installation and training scripts (https://github.com/sdbds/musubi-tuner-scripts). Musubi-tuner exposes more hyperparameters, and it splits latent caching and training into two separate steps, which allows more strategies for converting videos into latents, such as "head" and "uniform" mentioned earlier, as well as "chunk" and "full" with max_frame.

Among the parameters, first is resolution_bucket. I tested with [256,256] and [360,360] as bases and found that [256,256] already gives very good results. Across several different training sets, [360,360] did not noticeably improve the LoRA, but it greatly increased VRAM use and training time. On a 4090 with max_frame=81, [360,360] needs blockswap of about 28, whereas [256,256] needs only about 10. From a cost and efficiency standpoint, I therefore recommend [256,256].
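A rough way to see why [360,360] costs so much more is to estimate the transformer sequence length. This sketch assumes Wan 2.1's VAE compresses 4x in time and 8x in space and that the DiT uses 2x2 spatial patches; it floors sizes that are not multiples of 16 and ignores bucketing details, blockswap, and activation memory, so treat it as an estimate only.

```python
def wan_latent_tokens(frames, height, width, t_stride=4, s_stride=8, patch=2):
    """Approximate DiT sequence length for one clip (strides are assumptions, see above)."""
    latent_frames = (frames - 1) // t_stride + 1
    latent_h = height // (s_stride * patch)
    latent_w = width // (s_stride * patch)
    return latent_frames * latent_h * latent_w


def relative_cost(tokens_a, tokens_b):
    """Token ratio, and a rough attention-cost ratio (attention scales ~quadratically)."""
    ratio = tokens_a / tokens_b
    return ratio, ratio ** 2
```

For 81 frames this gives 5,376 tokens at [256,256] versus 10,164 at [360,360]: about 1.9x the tokens and roughly 3.6x the attention compute, which helps explain the large difference in VRAM and training time.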

Next is the frame_extraction strategy. In musubi-tuner, Wan frame buckets are automatically truncated to 4N+1 frames. Many people set multiple target_frame values, such as target_frame = [1,33,49]. For my own training, however, I prefer full+max_frame: my clips are generally 1-5 seconds long, and with target_frame, longer clips produce more samples than shorter ones, which unbalances the data. In one comparison, I found the results of full+max_frame to be about the same as target_frame, perhaps even slightly better.
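The imbalance argument can be illustrated by counting samples per clip. These are simplified, hypothetical helpers that mirror the behavior described in this paragraph (one sample per target length a clip can fill, versus one capped 4N+1 sample per clip); they are not musubi-tuner's code.

```python
def to_4n_plus_1(frames):
    """Round a frame count down to the nearest 4N+1 (e.g. 50 -> 49, 81 -> 81)."""
    return ((frames - 1) // 4) * 4 + 1


def head_target_samples(total_frames, target_frames):
    """Simplified 'head' + target_frames: one sample per target length the clip can fill."""
    return [t for t in target_frames if t <= total_frames]


def full_max_samples(total_frames, max_frames):
    """Simplified 'full' + max_frame: one sample per clip, capped and truncated to 4N+1."""
    return [to_4n_plus_1(min(total_frames, max_frames))]
```

With target_frame = [1,33,49], a 1-second clip (17 frames at 16 fps) yields one sample while a 5-second clip (81 frames) yields three; with full+max_frame=81, each clip yields exactly one sample.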

Then there are steps and epochs. Musubi-tuner's dataset_config lets you set num_repeat, so when other LoRA authors say a few dozen epochs give good results, that may reflect a large dataset or a high num_repeat; it's better to compare total steps. From my experience with over a dozen LoRAs, most datasets reach good results with batch_size=1, gradient_accumulation_steps=1, and 2000-6000 total steps. This also depends on hyperparameters such as lr, lora_dim, and lora_alpha, as well as on the dataset itself, so treat it only as a reference. For a first training, I recommend setting a higher step count and saving LoRAs at intervals so you can pick the best one. I also feel that T2V converges faster than I2V, but since the two tasks differ I couldn't compare them experimentally; this is purely an impression.
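Converting between epochs and total steps is simple arithmetic; this sketch assumes every sample is used each epoch and a final partial batch still counts as a step (a trainer that drops it would give slightly fewer steps). `num_clips` here means samples after frame extraction, which can exceed the number of video files.

```python
import math


def total_steps(num_clips, num_repeats, epochs, batch_size=1, grad_accum=1):
    """Optimizer steps = epochs * ceil(ceil(clips * repeats / batch_size) / grad_accum)."""
    batches_per_epoch = math.ceil(num_clips * num_repeats / batch_size)
    return epochs * math.ceil(batches_per_epoch / grad_accum)


def epochs_for(target_steps, num_clips, num_repeats, batch_size=1, grad_accum=1):
    """Epochs needed to reach at least target_steps optimizer steps."""
    per_epoch = total_steps(num_clips, num_repeats, 1, batch_size, grad_accum)
    return math.ceil(target_steps / per_epoch)
```

For example, 20 clips with num_repeat=5 for 40 epochs is 4,000 steps, while the same 40 epochs with num_repeat=1 is only 800, which is why epoch counts alone are hard to compare between authors.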

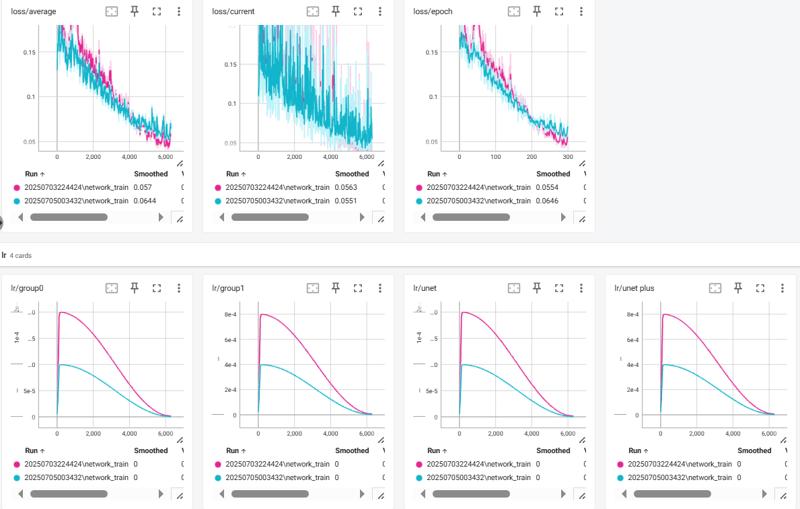

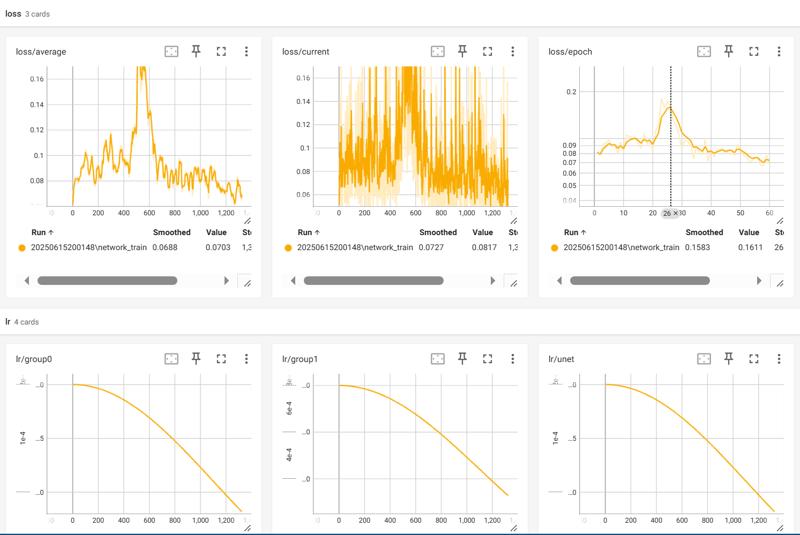

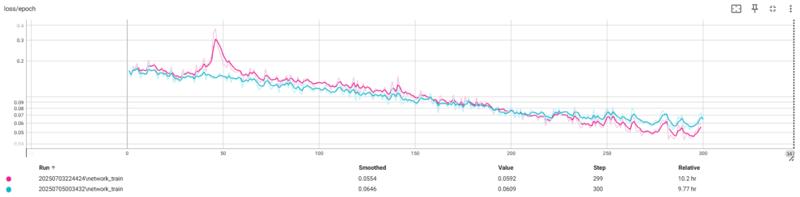

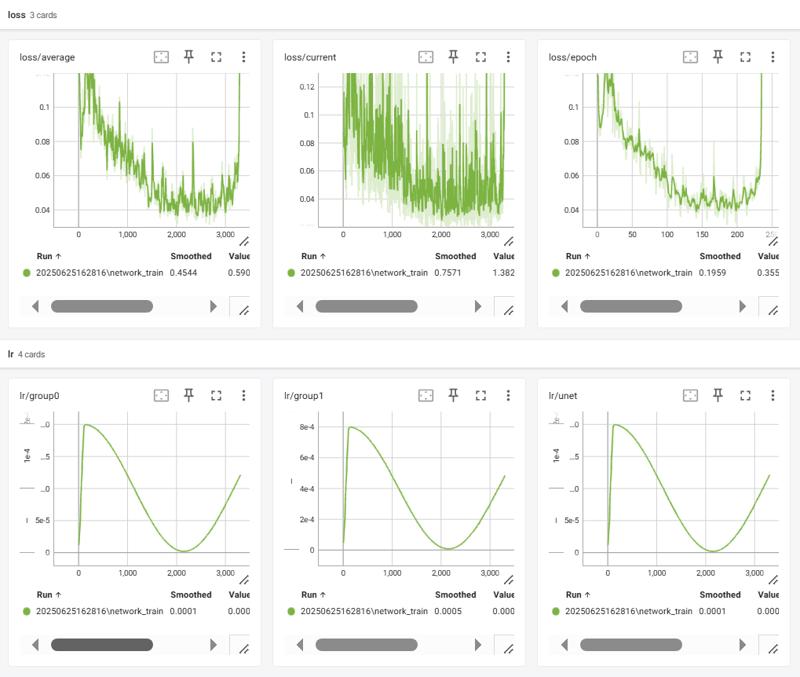

Finally, lr. Again, these experiments used WAN_I2V_14B_720P with lora_dim=32, lora_alpha=32, and adamw8bit. (I'm currently doing some pure-image style training with WAN_T2V_14B and found that 1e-4 may still be too high for image-only training; I still need to test lr for that case.) Many people set lora_alpha to 1, and generally, the smaller alpha is, the higher lr needs to be, so any lr value should be read in the context of its alpha. For example, if alpha is not set in musubi-tuner it defaults to 1, which is why its sample lr is 2e-4. The author of the Civitai Redline/Ghibli style LoRAs used lora_dim=32 and lora_alpha=32 with constant_with_warmup and an lr of 3e-5 to 6e-5.

After training over a dozen LoRAs, I recommend 1e-4 with cosine with min lr 0.01. In most cases, 2e-4 converges much like 1e-4, but 1e-4 gives better results. As an example, here are two runs on my "diving competition" dataset that were identical in every setting except lr (blue: 1e-4, pink: 2e-4; Figure 1). The 1e-4 run ended up significantly better than the 2e-4 run. In addition, training at 2e-4 can produce a loss spike early in training, as shown in Figure 2, and a similar spike is visible in the enlarged loss graph of this experiment (Figure 3). This may be one reason why the final result is not ideal despite convergence. So for a first training, I recommend trying 1e-4 + cosine with min lr 0.01; I've used it on over a dozen LoRAs, and it's fairly versatile, giving both a smooth loss curve and good results.

One more detail: in musubi-tuner, cosine supports restarts. If a high lr such as 2e-4 is combined with an accidentally configured restart, the loss may stop decreasing or even explode late in training, as shown in Figure 4.
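Two pieces of arithmetic behind this section are sketched below: the LoRA scaling factor alpha/dim, and a generic cosine schedule with a floor, assuming "min lr 0.01" means the lr never drops below 1% of the base lr. This is a textbook formula, not musubi-tuner's scheduler code; with Adam-style optimizers the alpha/lr relationship is only a rough intuition, not an exact proportionality.

```python
import math


def lora_scale(alpha, dim):
    """LoRA multiplies its low-rank update by alpha / dim."""
    return alpha / dim


def cosine_with_min_lr(step, total_steps, base_lr, min_lr_ratio=0.01, warmup_steps=0):
    """Linear warmup, then cosine decay from base_lr down to base_lr * min_lr_ratio."""
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    progress = min(1.0, (step - warmup_steps) / max(1, total_steps - warmup_steps))
    min_lr = base_lr * min_lr_ratio
    return min_lr + (base_lr - min_lr) * 0.5 * (1.0 + math.cos(math.pi * progress))
```

With lora_dim=32, alpha=32 gives a scale of 1.0 while alpha=1 gives 1/32, one way to see why alpha=1 setups tend to use a larger lr. With base lr 1e-4, the schedule above starts at 1e-4, passes about 5.05e-5 halfway, and ends at 1e-6.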

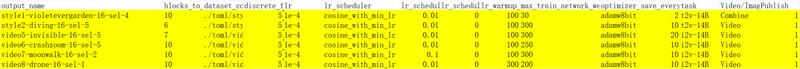

Once again, this is my personal experience from over a dozen LoRAs, so there is an element of randomness, and different datasets will have different optimal parameters. Here is my training record from later musubi-tuner sessions (I didn't think to record training parameters early on, so that data is lost). The highlighted rows are the LoRAs I released, and most of them used an lr of 1e-4 with a min_lr of 0.01. The exception is moonwalk, for which I released the epoch-170 checkpoint because later checkpoints performed poorly; I suspect the relatively high min_lr kept the lr too high late in training.
