1. Introduction — What is LoRA and Who is it For?

💡 What is LoRA?

LoRA (Low-Rank Adaptation) is a technique that lets you teach an existing AI model something new — a style, a character, or even a specific visual effect — without retraining the entire model.

Instead of replacing all the weights in the base model, LoRA stores only the differences needed to achieve your goal, in the form of small low-rank matrices. These differences are applied on top of the base model during generation.

⚠ LoRA doesn’t create a completely new architecture — it adapts and fine-tunes an existing model. If the base model can’t produce certain elements at all, LoRA won’t magically invent them.

⚙ How It Works — Simple Overview

[Base Model] + [LoRA Adjustments] → [Customized Output]

Base Model — A powerful general model (e.g., Pony, Illustrious, SDXL) that already “knows” a lot about visual features.

LoRA Adjustments — Small layers trained specifically on your dataset.

Customized Output — The original model’s strengths combined with your unique style or subject.
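A minimal sketch of the idea above, assuming the standard LoRA formulation in which the adjustment to one weight matrix is the product of two small matrices, scaled by alpha ÷ rank. The function and variable names are illustrative and not taken from any particular trainer; real implementations operate on tensors inside the model's layers rather than on nested lists.

```python
def matmul(x, y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def apply_lora(base_weight, lora_down, lora_up, alpha, strength=1.0):
    """Return base_weight + strength * (alpha / rank) * (lora_up @ lora_down).

    base_weight: (d_out x d_in) matrix from the base model, left untouched.
    lora_down:   (rank x d_in) matrix learned during LoRA training.
    lora_up:     (d_out x rank) matrix learned during LoRA training.
    """
    rank = len(lora_down)
    scale = strength * alpha / rank
    delta = matmul(lora_up, lora_down)  # full-size difference, rebuilt on the fly
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(base_weight, delta)
    ]


# Toy example: a 2x2 base weight with a rank-1 adjustment.
base = [[1.0, 0.0], [0.0, 1.0]]
down = [[1.0, 2.0]]      # rank 1, d_in = 2
up = [[0.5], [1.0]]      # d_out = 2, rank 1
print(apply_lora(base, down, up, alpha=1))  # -> [[1.5, 1.0], [1.0, 3.0]]
```

The base weights are never overwritten, which is why one base model can be combined with many different LoRAs.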

🚀 Why LoRA Instead of a Full Model?

Faster — Hours instead of weeks.

Smaller — Usually 50–300 MB instead of several GB.

Flexible — One base model can work with hundreds of LoRAs.

Efficient — Less GPU VRAM and storage required.
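To see roughly where the size savings come from, here is a small back-of-the-envelope calculation. It assumes the usual low-rank layout (two matrices of shape rank × d_in and d_out × rank per adapted layer); the 1280 × 1280 layer size is a hypothetical example, not a measurement of any specific model.

```python
def full_layer_params(d_in, d_out):
    """Number of weights in one full (d_out x d_in) layer."""
    return d_in * d_out


def lora_layer_params(d_in, d_out, rank):
    """Number of weights LoRA stores for the same layer at a given rank."""
    return rank * (d_in + d_out)


full = full_layer_params(1280, 1280)
lora = lora_layer_params(1280, 1280, rank=32)
print(full, lora, lora / full)  # -> 1638400 81920 0.05
```

In this example the LoRA stores about 5% of the weights of the layer it adapts; the real file size depends on how many layers are adapted and on the rank you choose.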

🎯 Who This Guide Is For

Style Creators — Bringing unique art styles to life.

Character Artists — Keeping characters consistent across scenes.

Content Creators — Quickly personalizing AI outputs.

Hobbyists & Professionals — Anyone wanting a clean, no-nonsense workflow for Civitai online training and local Kohya_ss training.

📝 Why This Guide is Different

This is not a generic “click here, type this” tutorial.

It’s my personal, field-tested workflow built on:

Years of experience training in-demand LoRAs

Mistakes I’ve made and solved so you don’t have to

My own tools and shortcuts for dataset preparation, tagging, and testing

Optimized parameters for both realistic and stylized LoRAs

Time-saving methods that let you prepare datasets in minutes, not hours

You’ll get exactly what I use when training Pony and Illustrious style LoRAs that the community actually uses.

💬 “I’ve trained hundreds of LoRAs — some realistic, some purely stylized — and each one taught me something. This guide is everything I wish I had when I started.”

2. 📂 Dataset Collection — Building the Perfect Training Set

A great LoRA starts with a strong dataset.

Whether you’re training a style, a character, or a specific object, image quality matters more than quantity.

🔍 Step 1: Collecting Images

To train a LoRA model in a specific style, gather high-quality images from reliable sources:

Sources:

▶ JoyReactor — filter by artist for consistent style.

▶ Pixiv — good source for high-resolution artwork.

▶ Danbooru — excellent for tagged images.

Quantity:

Small, specific subject — 15–25 images

Balanced coverage — 30–50 images

Broad style or complex concept — 60+ images

💡 Better to have 25 perfect images than 100 random ones.

🧹 Step 2: Cleaning Images

Make sure all images are relevant, clean, and high resolution:

No heavy compression, watermarks, or distracting elements.

Remove watermarks/text using Photoshop or even the default Windows 11 image editor.

All images should match the target style, but variety in lighting and pose is good for flexibility.

📏 Step 3: Resizing & Renaming Images

Standardize the dataset so all images are ready for training.

Target Resolution: 1024 px along the longest side (XL models).

If an image is smaller — upscale it before resizing.

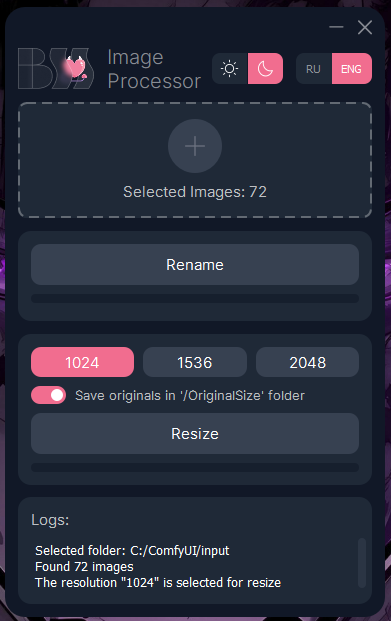

My custom tool:

I use my own utility — BSS Image processor — which:

Automatically renames all images in a folder sequentially: 01.png, 02.png, 03.png…

Resizes all images in the folder to the selected resolution (1024, 1536, or 2048 px on the long side) in one click.

Alternative tools:

Online: BulkImageResize, RedKetchup

Local: ComfyUI upscalers or any preferred app
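If you prefer to script this step, the renaming and the size calculation from Step 3 can be sketched with the Python standard library alone. This is not the BSS tool, just an illustration: the function names are hypothetical, files keep their original extension instead of being converted to PNG, and the actual pixel resizing is left to whichever image tool you use.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def target_size(width, height, long_side=1024):
    """Return (width, height) scaled so the longest side equals long_side."""
    scale = long_side / max(width, height)
    return max(1, round(width * scale)), max(1, round(height * scale))


def rename_sequentially(folder):
    """Rename all images in folder to 01.ext, 02.ext, ... in sorted order."""
    folder = Path(folder)
    images = sorted(p for p in folder.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES)

    # First pass: move everything to temporary names so that a new name
    # can never overwrite a file that is still waiting to be renamed.
    staged = []
    for index, path in enumerate(images, start=1):
        temp = path.with_name(f"__tmp_{index:02d}{path.suffix.lower()}")
        path.rename(temp)
        staged.append(temp)

    # Second pass: assign the final sequential names.
    renamed = []
    for index, temp in enumerate(staged, start=1):
        final = temp.with_name(f"{index:02d}{temp.suffix}")
        temp.rename(final)
        renamed.append(final)
    return renamed


print(target_size(2048, 1536))  # -> (1024, 768)
```

Run `rename_sequentially` on a copy of your folder first, since renaming is not easily undone.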

🗂 Step 4: Organizing the Dataset

Prepare the folder so it’s ready for tagging and training:

File names in sequential order

Keep everything in one folder for now

Example:

dataset/

├── 01.png

├── 02.png

├── 03.png

🏷 Step 5: Tagging Images (Descriptions)

Every image needs a matching .txt file with its tags.

Tags describe what’s in the image and help the model learn.

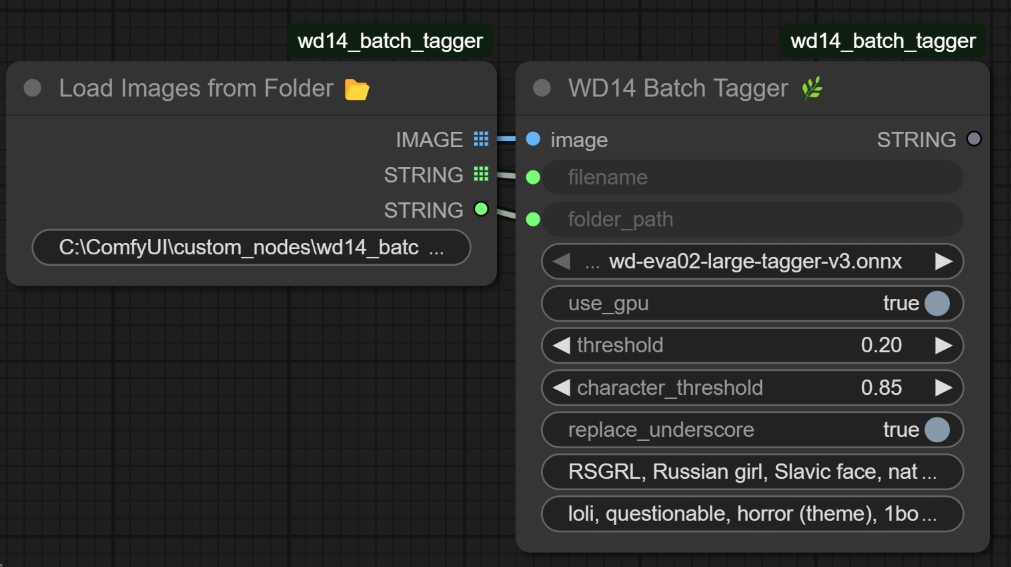

Automated Tagging Tools:

Local: My custom WD14 Batch tagger nodes for ComfyUI — process the whole folder of images automatically.

Online: Hugging Face WD14 Tagger (free cloud tagging).

Process:

Run the tagging tool with strict parameters (e.g., threshold 0.3, max 30 tags).

Manually review and remove irrelevant or incorrect tags.

Add a unique trigger word at the start of each description.

Example:

GLSHS, 1girl, solo, brown hair, green eyes, portrait, …

The trigger word must be unique so the model links it only to your dataset.
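The filtering and trigger-word steps can be sketched as below. This assumes the tagger gives you a mapping from tag to confidence score, which is how WD14-style taggers typically report results; `build_caption` is a hypothetical helper, not part of any tagging tool, and the manual review step still has to happen by hand.

```python
def build_caption(tag_scores, trigger, threshold=0.3, max_tags=30):
    """Build one caption line: trigger word first, then the strongest tags.

    tag_scores: dict mapping tag -> confidence (0.0 to 1.0) from the tagger.
    Tags below the threshold are dropped, and at most max_tags are kept
    (the trigger word is not counted toward that limit).
    """
    kept = [
        (tag, score)
        for tag, score in tag_scores.items()
        if score >= threshold and tag != trigger
    ]
    kept.sort(key=lambda item: item[1], reverse=True)  # highest confidence first
    tags = [tag for tag, _ in kept[:max_tags]]
    return ", ".join([trigger] + tags)


scores = {"1girl": 0.99, "solo": 0.95, "brown hair": 0.80, "hat": 0.12}
print(build_caption(scores, "GLSHS"))  # -> GLSHS, 1girl, solo, brown hair
```

The returned string is what you would write into the image's .txt file.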

Folder after tagging:

dataset/

├── 01.png

├── 01.txt

├── 02.png

├── 02.txt

├── 03.png

├── 03.txt

⚠ Common Mistakes to Avoid

Mixing styles in one dataset (applies mainly to style LoRAs; for character or pose LoRAs, mixing styles can be intentional).

Using low-quality or blurry images.

Too many near-identical shots (leads to overfitting).

Missing variety in angles/poses for characters and objects.

💬 “Think of your dataset like ingredients — if they’re low quality, no amount of skill will save the dish.”

3. ⚙ Training Settings — Optimal Parameters for LoRA

My primary training method is online on Civitai, since I don’t have powerful local hardware.

However, all recommended parameters below work equally well for local training with Kohya_ss or similar frameworks.

🌐 Online Training with Civitai

Convenient cloud-based training with user-friendly UI

No need to set up or maintain hardware

Easy upload of zipped datasets (images + .txt tags)

Supports most training parameters for LoRA

Limited by cloud resource allocation and session length
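Before uploading, it helps to confirm that every image has its tag file and then zip the folder in one go. The sketch below uses only the Python standard library; the function names are hypothetical, and it assumes a flat folder of images and .txt files like the one shown in Section 2.

```python
import zipfile
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def find_missing_captions(folder):
    """Return names of images that have no matching .txt file."""
    folder = Path(folder)
    return sorted(
        p.name
        for p in folder.iterdir()
        if p.suffix.lower() in IMAGE_SUFFIXES and not p.with_suffix(".txt").exists()
    )


def zip_dataset(folder, zip_path):
    """Zip all images and .txt files from folder into a flat archive."""
    folder = Path(folder)
    missing = find_missing_captions(folder)
    if missing:
        raise ValueError(f"Images without a .txt caption: {missing}")

    files = sorted(
        p
        for p in folder.iterdir()
        if p.suffix.lower() in IMAGE_SUFFIXES or p.suffix.lower() == ".txt"
    )
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in files:
            archive.write(path, arcname=path.name)  # no subfolders in the zip
    return [p.name for p in files]
```

For example, `zip_dataset("dataset", "dataset.zip")` would produce an archive ready for upload, or raise an error listing any image that still needs tags.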

🖥 Local Training with Kohya_ss (Optional)

Requires powerful GPU (NVIDIA with CUDA)

Full control over training process and scripts

More customization options, but steeper learning curve

Dataset structure and parameters mostly identical to Civitai

⚙ Recommended Training Parameters (Universal)

These settings are optimized for Pony, Illustrious, and other XL models, balancing quality and training time:

Epochs: 4

Use 4 epochs for initial testing. If signs of overtraining appear, fall back to an earlier epoch.

Train Batch Size: 2

Larger batch sizes speed up training but may reduce model quality.

Num Repeats: 50–100

Adjust repeats based on dataset size to keep total training steps within limits (see calculation below).

Resolution: 1024

1024 pixels along the longest side is sufficient; 2048 rarely justifies the extra resource use.

Enable Bucket: Yes

Optimizes training by grouping images of similar sizes and aspect ratios, improving speed and memory usage.

Shuffle Tags: No

Keep tags in order for consistent training results.

Keep Tokens: 1

Keeps the first tag (the trigger word) in place if tag shuffling is ever enabled.

Clip Skip: 2

Standard setting for both realistic and stylized models.

Flip Augmentation: No

Avoids random horizontal flips that can confuse the model.

Unet Learning Rate: 0.00010 (1e-4)

Standard learning rate for the U-Net.

Text Encoder Learning Rate: 0.00005 (5e-5)

Typical value; for style-focused LoRAs it can be set lower, or the text encoder can be frozen.

Learning Rate Scheduler: Cosine

Smooth decay of the learning rate improves training stability.

LR Scheduler Cycles: 3

Number of cosine cycles during training; this mainly has an effect with the cosine-with-restarts variant of the scheduler.

Min SNR Gamma: 0

Rarely needed; keep at zero unless specific training issues arise.

Network Dimension: 32

Controls the capacity and file size of the LoRA; 32 balances size and performance.

Network Alpha: 16

Scales the strength of the learned weights relative to the dimension (alpha ÷ dimension = 0.5 here). It does not change the file size; at dimension 32 the file is about 218 MB.

Noise Offset: 0.03

Adds slight noise for better generalization.

Optimizer: AdamW8bit

Memory-efficient optimizer, recommended for LoRA training.
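To make the cosine scheduler more concrete, here is a minimal sketch of a single cosine decay from the starting learning rate down to zero. It is a simplification under stated assumptions: no warmup and no restarts, and `cosine_lr` is an illustrative function, not the code any trainer actually runs.

```python
import math


def cosine_lr(step, total_steps, base_lr=1e-4):
    """Learning rate at a given step for one cosine decay from base_lr to 0."""
    progress = min(max(step / total_steps, 0.0), 1.0)  # clamp to [0, 1]
    return 0.5 * base_lr * (1.0 + math.cos(math.pi * progress))


# Learning rate at the start, middle, and end of a 3600-step run.
for step in (0, 1800, 3600):
    print(step, cosine_lr(step, 3600))
```

The rate starts at the full 1e-4, passes through roughly half of that at the midpoint, and reaches zero on the final step, which is what makes the late stages of training gentler than the early ones.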

📊 Calculating Total Training Steps

To avoid overtraining, keep total training steps ≤ 4000.

Use this formula:

Total Steps = (Number of Images × Num Repeats × Epochs) ÷ Train Batch Size

Example:

Dataset: 30 images

Num Repeats: 60

Epochs: 4

Train Batch Size: 2

Calculation:

(30 × 60 × 4) ÷ 2 = 3600 steps — safe under the 4000 step limit.

Adjust Num Repeats to fit your dataset size and avoid going over 4000 total steps.
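The same calculation as a small helper, plus the reverse question: how many repeats fit under the limit for a given dataset. Both functions follow the formula from this section; the names are illustrative, and rounding up to whole steps is an assumption for cases where the division is not exact.

```python
import math


def total_steps(num_images, num_repeats, epochs, batch_size):
    """Total Steps = (images x repeats x epochs) / batch size, rounded up."""
    return math.ceil(num_images * num_repeats * epochs / batch_size)


def max_repeats(num_images, epochs, batch_size, step_limit=4000):
    """Largest Num Repeats that keeps total steps at or below step_limit."""
    return (step_limit * batch_size) // (num_images * epochs)


print(total_steps(30, 60, 4, 2))  # -> 3600
print(max_repeats(30, 4, 2))      # -> 66
```

With 30 images, 4 epochs, and batch size 2, anything up to 66 repeats stays within the 4000-step limit (66 repeats gives 3960 steps).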

💡 Tips for Monitoring Training Quality

Evaluate model outputs after each epoch to detect overtraining signs.

Look for artifacts, unnatural details, or loss of flexibility in generated images.

Test with your unique trigger word in prompts to verify style accuracy.

If overtraining occurs, reduce epochs or repeats accordingly.

⚠ Common Technical Mistakes

Setting a Network Dimension far too high — drastically increases LoRA size with minimal quality gain.

Skipping tag review — incorrect or irrelevant tags in .txt files will mislead the model.

Disabling bucket mode with mixed image sizes — can lead to stretched faces and artifacts.

💬 “If Kohya_ss is like cooking with a full kitchen, Civitai Trainer is like ordering from a good restaurant — less control, but faster and easier.”

4. 🧪 Training and Testing — How to Ensure the Best LoRA Quality

🌐 Online Training on Civitai

Upload your zipped dataset (images + .txt files) to Civitai

Set the recommended training parameters (see previous section)

Start training and monitor progress through the web interface

🖥 Local Training with Kohya_ss (Optional)

Use your organized dataset and parameters from this guide

Monitor GPU usage and training logs carefully

Adjust parameters if needed according to Kohya’s documentation

🔍 Testing and Evaluation

After training, it's crucial to evaluate your model's quality and find the best epoch:

Generate samples for each epoch using a tool like ComfyUI.

Compare outputs visually focusing on:

Style Accuracy: Does the output match your training dataset’s style?

Detail Quality: Are details like eyes, hair, and clothing sharp and correct?

Overtraining Signs: Watch for artifacts, unnatural distortions, or lack of flexibility (e.g., the model fails on varied prompts).

Select the Best Epoch:

Earlier epochs (2 or 3) often perform better when later ones show overfitting.

Choose the epoch with the best balance between style fidelity and flexibility.

💡 Tips for Accurate Comparison

Use the same random seed and prompt settings when generating images for different epochs — this improves comparison accuracy.

Try different CFG scales and sampling methods to find the best output quality.

Keep your unique trigger word in prompts to confirm the model understands your style.

Test your LoRA on one or two other base models to check generalization — a well-trained LoRA should retain its core style while adapting to different model baselines.
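One way to keep comparisons fair is to write out the test plan before generating anything. The sketch below only builds a list of jobs with a fixed seed; it does not call ComfyUI or any other generator. The file names, seed, and CFG value are hypothetical placeholders.

```python
from itertools import product


def build_comparison_jobs(epoch_files, prompts, seed=12345, cfg_scales=(7.0,)):
    """Pair every epoch file with every prompt and CFG scale, same seed for all."""
    return [
        {"lora": lora_file, "prompt": prompt, "seed": seed, "cfg": cfg}
        for lora_file, prompt, cfg in product(epoch_files, prompts, cfg_scales)
    ]


# Hypothetical file names for the four epochs of one training run.
epochs = [f"my_style-epoch{n:02d}.safetensors" for n in range(1, 5)]
prompts = [
    "GLSHS, 1girl, solo, portrait",
    "GLSHS, landscape, city street, night",
]
jobs = build_comparison_jobs(epochs, prompts)
print(len(jobs))  # -> 8
```

Because only the LoRA file changes between jobs that share a prompt, any difference in the resulting images comes from the epoch rather than from the seed or settings.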

⚠ Additional Notes

Because I mainly train on Civitai, these settings are tuned for its trainer; local training with Kohya_ss may require extra parameter tuning and dataset verification.

Don’t hesitate to experiment with repeats, epochs, and testing prompts depending on your dataset and goals.

💬 “Testing your LoRA is like tasting your dish — you want it just right, not burnt or undercooked.”

5. 🎯 Wrapping Up — Bringing It All Together

By now, you have everything you need to train LoRAs that actually work — not just “another file on Civitai,” but models people will want to download and use.

📌 Key Takeaways

• Quality beats quantity — a clean, consistent dataset is the #1 success factor.

• Parameters matter — small tweaks can make the difference between a perfect LoRA and a failed one.

• Test smart — use consistent seeds, compare epochs, and check results on multiple base models.

⚠ Final Pitfall Reminder

Don’t rush your first LoRA just to “see what happens.”

Bad habits in dataset building or parameter tuning will follow you into every project.

🚀 Your Next Steps

Pick a base model you know and trust.

Collect and prepare a small, perfect dataset.

Train using the parameters from Section 3.

Test, compare, and share your results — learn from every run.

💬 A finished LoRA is like a signature dish — once you’ve mastered the recipe, you can serve it to the world, confident it carries your unique flavor.

💖 Support & Exclusive Access

If you found this guide valuable and want to support my work, you can join me on Boosty.

🌟 Boosty Supporters Get:

Exclusive access to my private tools mentioned in this guide (image processor, WD14 Batch Tagger, workflows).

Priority answers to your LoRA training questions.

Behind-the-scenes updates on my ongoing AI projects.

Early access to my newest LoRAs before public release.

Your support helps me keep creating guides, tools, and resources for the AI art community. Whether you’re here to learn, create, or innovate — you’re part of this journey.

🗨 Stay Involved & Create Together

If you enjoyed this guide, please leave a reaction and share your comments — your feedback helps me make better resources for everyone.

And if you’re looking for the perfect semi-realistic base model for your LoRA experiments, try my 🌗 Equinox model.

It’s ideal for portraits, character art, and stylistic hybrids — a versatile foundation for both beginners and advanced creators.

You might even make your very first LoRA using Equinox as the base — and I’d love to see the results.

💬 “I’ve shared everything I wish I had when I started — now it’s your turn to make something amazing.”

![[BSS] - Lora Training Guide](https://image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/b9ba7bb8-bfcc-4717-8e23-cefaf7932b8f/width=1320/LTG.jpeg)