🛠️ Consistent Character Creation with FLUX Using ThinkDiffusion's Workflow on a Patched RTX 3060 Setup

Based on: Consistent Character Creation with Flux & ComfyUI (by ThinkDiffusion), Civitai

Is ThinkDiffusion’s FLUX workflow crashing or throwing memory errors on your RTX 3060? You’re not alone. Whether FLUX crashes outright or ComfyUI reports memory allocation errors, this guide shows how to stabilize the whole workflow with just 12 GB of VRAM.

This guide is for creators looking for a low-VRAM AI workflow, FLUX optimization on an RTX 3060, or a ComfyUI setup that avoids face distortion and memory crashes.

This manual is your shortcut.

📘 What You’ll Get With This Manual for the ThinkDiffusion Workflow

You can purchase the full RTX 3060 AI Build & Optimization Manual [here]

✅ Step-by-step setup for ComfyUI + FLUX on RTX 3060 12GB

✅ Flash Attention build instructions tailored for low VRAM

✅ xFormers installation without breaking your PyTorch environment

✅ Pose sheet generation with multi-angle consistency

✅ LoRA tagging template and optimization checklist

✅ Batch generation tips and troubleshooting

✅ Easy ComfyUI startup file

✅ Fixes for common ComfyUI memory errors and FLUX crashes on low VRAM cards

Whether you're building LoRA datasets, crafting character sheets, or just trying to get FLUX to run without melting your GPU, this guide walks you through it all.
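A ComfyUI startup file usually does one job: launch ComfyUI's `main.py` with the memory flags your card needs. The sketch below is not the manual's bonus script. It is a minimal Python equivalent that assumes ComfyUI is cloned to `~/ComfyUI` (a hypothetical path; change it to yours) and that you want ComfyUI's `--lowvram` option. Whether `--lowvram` helps on a 12 GB card depends on the model and workflow, so treat it as a starting point rather than a required setting.

```python
import subprocess
import sys
from pathlib import Path

# Hypothetical install location: point this at your own ComfyUI clone.
COMFY_DIR = Path.home() / "ComfyUI"


def build_launch_command(comfy_dir: Path, low_vram: bool = True, extra_args=()) -> list[str]:
    """Assemble the command line that starts ComfyUI's main.py."""
    # Use the interpreter running this script, so ComfyUI sees the same packages.
    cmd = [sys.executable, str(comfy_dir / "main.py")]
    if low_vram:
        # --lowvram asks ComfyUI to split the model into parts to use less VRAM.
        cmd.append("--lowvram")
    # Any other ComfyUI options you want to try are passed through unchanged.
    cmd.extend(extra_args)
    return cmd


def launch(comfy_dir: Path = COMFY_DIR) -> int:
    cmd = build_launch_command(comfy_dir)
    # Run from the ComfyUI folder so its relative paths (models/, custom_nodes/) resolve.
    return subprocess.run(cmd, cwd=comfy_dir).returncode


if __name__ == "__main__":
    sys.exit(launch())
```

On Windows the same command line can be placed in a .bat file; keeping the flags in one function makes it easy to try other options without editing several files.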

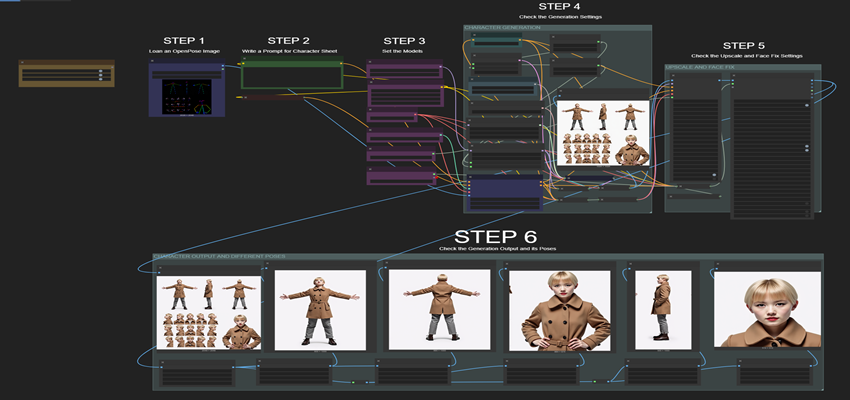

🖼️ Sample Results

![Multi-angle character sheet showing consistent face and outfit, generated with FLUX on RTX 3060 using ComfyUI and Flash Attention]

Generated using:

ComfyUI + FLUX workflow

RTX 3060 (12GB VRAM)

Patched xFormers + Flash Attention

Pose sheet conditioning + fixed seed
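Because results like these depend on a matching PyTorch, xFormers, and Flash Attention stack, it helps to confirm what is actually installed before and after building anything. The following sketch is an assumption about how you might check, not a step taken from the manual. It uses only the Python standard library to report installed versions without importing the packages themselves. It does not verify that xFormers or Flash Attention were built against your PyTorch and CUDA versions; it only tells you what is present.

```python
import importlib.util
from importlib.metadata import PackageNotFoundError, version

# PyPI distribution names mapped to the module names you would import.
PACKAGES = {"torch": "torch", "xformers": "xformers", "flash-attn": "flash_attn"}


def report_attention_stack(packages=PACKAGES):
    """Return {distribution: version string or None} without importing anything heavy."""
    report = {}
    for dist, module in packages.items():
        # find_spec locates a top-level module without executing it; None means not installed.
        if importlib.util.find_spec(module) is None:
            report[dist] = None
            continue
        try:
            report[dist] = version(dist)
        except PackageNotFoundError:
            # Importable, but no matching distribution metadata (e.g. a manual build).
            report[dist] = "installed (version unknown)"
    return report


if __name__ == "__main__":
    for name, ver in report_attention_stack().items():
        print(f"{name:12s} {ver or 'not installed'}")
```

Run it with the same Python interpreter that ComfyUI uses (for the portable build, its embedded Python); otherwise it reports a different environment.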

💡 Who Needs This Low-VRAM FLUX Setup for ComfyUI

Creators using ComfyUI and ThinkDiffusion’s FLUX workflow

Artists working with limited VRAM (3060, 2060, etc.)

LoRA trainers who need consistent multi-angle character data

Anyone tired of crashing workflows and inconsistent faces

It is also a good fit for beginner programmers who want to use ComfyUI’s most advanced nodes on a budget GPU like the RTX 3060. With its 12 GB of VRAM, the 3060 is the minimum recommended card but often gets left behind as ComfyUI workflows target 3080-class and higher GPUs. With this setup, the 3060 can keep up and run demanding ComfyUI workflows reliably.

🧪 Why This Workflow Is the Ultimate Test

The FLUX workflow is one of the most demanding node graphs in ComfyUI. It needs a lot of VRAM, precise conditioning, and optimized attention layers. If your setup can run this workflow, you’ve unlocked the full potential of ComfyUI.

“This guide proves that even a 3060 can run advanced ComfyUI workflows with stability and precision—no need to upgrade.”

🛒 Get the Manual

If you’ve searched for “FLUX crashing on RTX 3060” or “ComfyUI memory error fix,” this guide was built for you. It’s the only verified setup that runs ThinkDiffusion FLUX workflows on budget GPUs without compromise.

You can purchase the full PDF guide. It includes:

An easy-to-follow manual with 13+ pages of setup, optimization, and troubleshooting.

Bonus: a .bat script for easy ComfyUI startup.

Q: Can FLUX run on an RTX 3060 with only 12 GB of VRAM? Yes. When Flash Attention and xFormers are properly built and patched, you can run full-body character workflows without crashing.

Q: How do I fix blurry or distorted faces in ComfyUI? Use fixed seeds, clean prompts, and a face detailer pass. This setup also improves multi-angle consistency.

Q: Is this compatible with SDXL or other advanced models? Yes. This setup supports SDXL workflows with pose sheet conditioning and multi-angle LoRA training.

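The fixed-seed advice for distorted faces works because the sampler starts from the same noise whenever the seed is unchanged, so across a character sheet only the prompt (for example, the view angle) varies. In ComfyUI you set this on the KSampler node by entering a seed and setting `control_after_generate` to `fixed`. The sketch below only illustrates the idea: the `SheetJob` class, `build_sheet_jobs`, `preview_noise`, and the prompt wording are hypothetical, and Python's `random` module stands in for the PyTorch generator that ComfyUI actually uses.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SheetJob:
    """One render job for a multi-angle character sheet (hypothetical structure)."""
    angle: str
    prompt: str
    seed: int


def build_sheet_jobs(character: str, angles: list[str], seed: int) -> list[SheetJob]:
    # Reuse one fixed seed for every angle so only the view description changes.
    return [
        SheetJob(angle, f"{character}, {angle} view, full body, plain background", seed)
        for angle in angles
    ]


def preview_noise(seed: int, n: int = 4) -> list[float]:
    # Stand-in for sampler noise: a seeded generator returns the same values every run.
    rng = random.Random(seed)
    return [rng.random() for _ in range(n)]


if __name__ == "__main__":
    angles = ["front", "three-quarter", "side", "back"]
    for job in build_sheet_jobs("red-haired knight in silver armor", angles, seed=123456):
        print(job.seed, job.prompt)
    print(preview_noise(123456) == preview_noise(123456))  # True
```

Changing the seed between angles reintroduces random variation in the face and outfit, which is exactly what a consistent character sheet tries to avoid.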

🙏 Thank You

Thank you for supporting our engineers at AIWorkflowLab. Every download supports us in building more tools and guides that make advanced AI workflows accessible—even on budget hardware. Your support fuels Enginuity.

We’re a small team passionate about pushing the limits of low-VRAM setups. If this manual got your engine running properly, consider sharing it or leaving feedback.

⚠️ Stay Safe—Use the Official Guide

This manual is the only verified setup for running FLUX on an RTX 3060 with low VRAM. Please don’t go hunting for cracked scripts or random downloads: many of them contain malware, corrupted builds, or outdated patches that can damage your system. AIWorkflowLab provides a clean, tested workflow that’s safe, stable, and optimized for your hardware.

This guide is part of an ongoing discussion in the ComfyUI community. You can see how it compares to other setups, including loscrossos’ installer, in this Reddit thread.

💼 Hiring or Collaborating?

If you’re working on something cool, or have a job or project in this space, feel free to leave a comment below or send a message to our alias address [email protected]. I check in regularly and would love to connect with creators building around ComfyUI, FLUX, or LoRA training and workflows.

© 2025 C.S. AIWorkflowLab. All rights reserved. 8.22.25