AI Render Scope - Windows Utility for Metadata Inspection

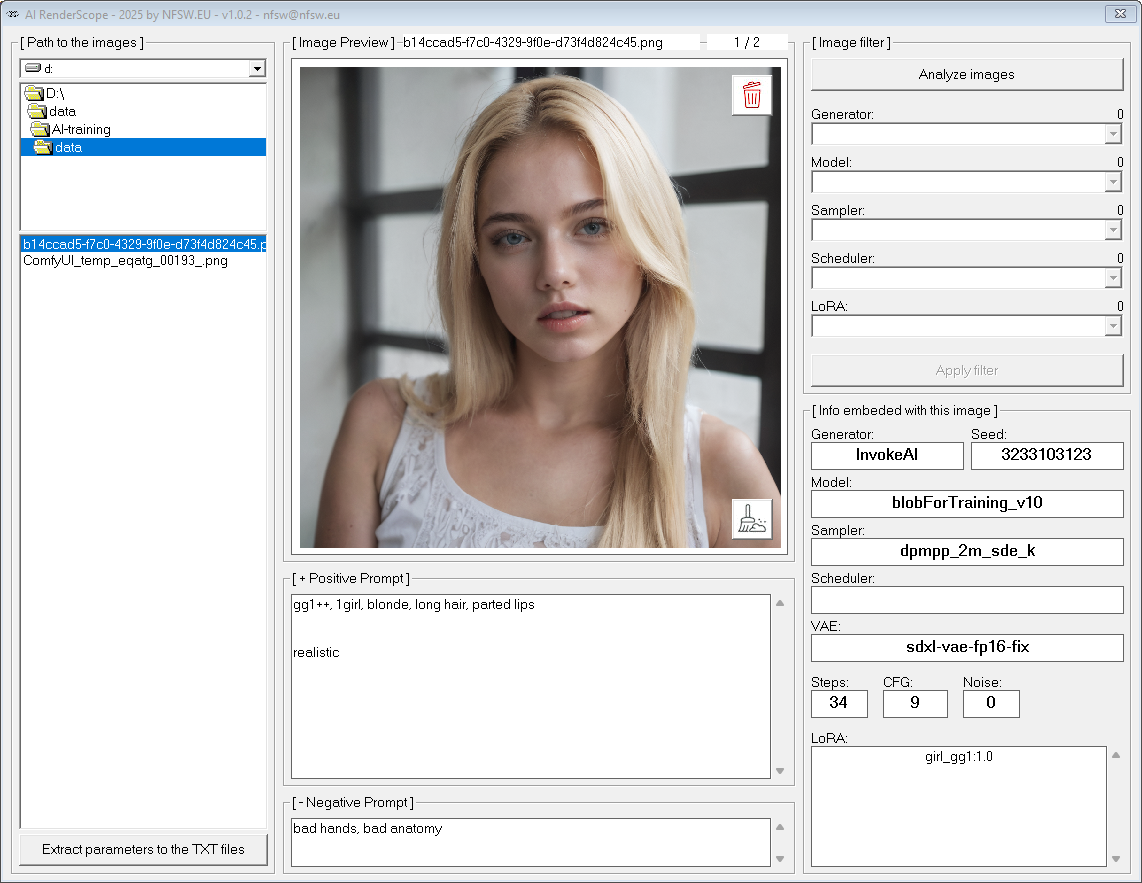

I’ve prepared a small Windows-only utility for anyone who works with AI image generation and wants to inspect metadata and prompts afterwards. It has an easy-to-use GUI.

The goal is simple: when you are testing different parameter settings, models, or LoRA adapters, you can later go back and evaluate the results with all metadata at hand.

Currently, the app supports InvokeAI, ComfyUI, and A1111.

In InvokeAI, metadata are stored quite straightforwardly.
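For readers curious about what "stored straightforwardly" can look like in practice, here is a minimal sketch of reading text metadata from a PNG with only the Python standard library. It is an illustration under assumptions, not the utility's actual code: the function name `read_png_text_chunks` is hypothetical, it assumes the metadata sits in uncompressed `tEXt` chunks, and it ignores compressed `zTXt` and international `iTXt` chunks. Which keyword a given generator uses is not stated here, so inspect the returned dictionary to find out.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def read_png_text_chunks(data: bytes) -> dict:
    """Return {keyword: text} for every uncompressed tEXt chunk in a PNG."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")

    result = {}
    pos = len(PNG_SIGNATURE)
    while pos + 8 <= len(data):
        # Each chunk: 4-byte big-endian length, 4-byte type, data, 4-byte CRC.
        length, chunk_type = struct.unpack(">I4s", data[pos:pos + 8])
        body = data[pos + 8:pos + 8 + length]
        if chunk_type == b"tEXt":
            # A tEXt payload is "keyword\0text", encoded as Latin-1.
            keyword, _, text = body.partition(b"\x00")
            result[keyword.decode("latin-1")] = text.decode("latin-1")
        elif chunk_type == b"IEND":
            break
        pos += 8 + length + 4  # skip header, data and CRC
    return result
```

To try it on a file, pass the raw bytes, for example `read_png_text_chunks(open("image.png", "rb").read())`. The CRC is skipped rather than verified, which keeps the sketch short but means corrupted files are not detected.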

In ComfyUI it’s less direct, so the utility searches for the KSampler node in the graph and retrieves values from there.
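The KSampler lookup can be sketched roughly as follows. This is a simplified illustration, not the app's real implementation. It assumes the graph is the JSON that ComfyUI saves under the image's `prompt` text field, where each node is an object with `class_type` and `inputs`. The function name `find_ksampler_settings` and the choice to match any class name containing "KSampler" are my assumptions for the example.

```python
import json
from typing import Optional


def find_ksampler_settings(prompt_json: str) -> Optional[dict]:
    """Return the literal inputs of the first KSampler-like node, or None."""
    graph = json.loads(prompt_json)
    for node in graph.values():
        if not isinstance(node, dict):
            continue
        if "KSampler" in str(node.get("class_type", "")):
            inputs = node.get("inputs", {})
            # Links to other nodes are lists such as ["4", 0]; keep only
            # plain values like seed, steps, cfg, sampler_name, scheduler.
            return {k: v for k, v in inputs.items() if not isinstance(v, list)}
    return None
```

Only the first matching node is returned, so a workflow with several samplers (for example a refiner pass) would need extra handling. Prompts and the model name live in other nodes that the sampler links to, which is why resolving them takes more work than this sketch shows.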

In A1111, metadata are stored as a plain-text parameters string, which is the easiest to read.
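As a rough illustration of why the A1111 format is easy to read, here is a small parser sketch. It assumes the usual layout: the positive prompt first, an optional `Negative prompt:` part, and a final line of comma-separated `Key: value` pairs that starts with `Steps:`. The function name `parse_a1111_parameters` is hypothetical, and the simple split does not handle quoted values that contain commas.

```python
def parse_a1111_parameters(text: str) -> dict:
    """Split an A1111 parameters string into prompts and settings."""
    lines = text.strip().split("\n")
    settings_line = ""
    # Assumption: the settings are on the last line and begin with "Steps:".
    if lines and lines[-1].startswith("Steps:"):
        settings_line = lines.pop()

    body = "\n".join(lines)
    positive, _, negative = body.partition("Negative prompt:")
    result = {
        "prompt": positive.strip(),
        "negative_prompt": negative.strip(),
    }

    # Naive split; values containing ", " would be cut in the wrong place.
    for item in settings_line.split(", "):
        key, sep, value = item.partition(": ")
        if sep:
            result[key.strip()] = value.strip()
    return result
```

All values come back as strings, so a caller that needs numbers would convert fields such as `Steps` or `Seed` itself.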

This is the third release (v1.1.3).

It will be further developed together with my tagger project, and new features will be added gradually.

Quick Manual

Description

AI Image Prompt Viewer is a simple desktop viewer.

It does not generate new images. Instead, it lets you browse datasets and check:

Positive & Negative Prompts

Generator, Model, Sampler, Scheduler, VAE

Seed, Steps, CFG, Noise values

LoRA modules with weights

Clicking on the preview image opens a separate external window with the picture at full resolution, which you can move to another monitor or resize for detailed inspection.

Parameters Overview

Generator – Backend used for generation (InvokeAI, ComfyUI, A1111).

Model – The model checkpoint used. Helps with reproducibility.

Sampler – Diffusion sampling algorithm. Influences style and consistency.

Scheduler – Noise schedule per step (not always present).

VAE (Variational Autoencoder) – Defines the encoder/decoder used for converting latent space into pixels. Different VAEs can significantly affect color accuracy, contrast, and overall visual quality. Choosing the correct VAE is especially important when a model was trained with a specific one.

Seed – Random number seed. Re-using it with the same settings usually reproduces the image.

Steps – Number of denoising iterations. More steps = more detail (up to a point).

CFG (Classifier-Free Guidance) – Balances fidelity to the prompt vs. naturalness. Common range 6–12.

Noise – Initial noise strength (if recorded).

LoRA – Lightweight adapters with assigned weight (e.g. characterLora:0.8).
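To show how a name and weight pair such as characterLora:0.8 might be pulled out of a prompt, here is a short sketch. It assumes the A1111-style tag syntax `<lora:name:weight>` embedded in the prompt text. Other backends record LoRAs differently, the function name `extract_loras` is hypothetical, and treating a missing weight as 1.0 is an assumption made for the example.

```python
import re

# Matches <lora:name> or <lora:name:weight> with a plain decimal weight.
LORA_TAG = re.compile(r"<lora:([^:>]+)(?::(-?\d+(?:\.\d+)?))?>")


def extract_loras(prompt: str) -> list:
    """Return (name, weight) tuples for each LoRA tag found in the prompt."""
    loras = []
    for name, weight in LORA_TAG.findall(prompt):
        # Assumption: a tag without an explicit weight counts as 1.0.
        loras.append((name, float(weight) if weight else 1.0))
    return loras
```

For example, `extract_loras("portrait <lora:characterLora:0.8>")` yields one entry with the name `characterLora` and the weight `0.8`.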

Extraction Functions

The app also includes extraction features:

Filter – You can check or uncheck specific parameters to quickly export only the fields you’re interested in (e.g., just model + sampler, or only prompts).

Extraction – Selected metadata can be exported into plain .txt files with the same name as the image. This makes it easy to build a structured dataset of prompts and parameters.

Useful for training pipelines where captions and settings need to be stored separately.
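The filter and extraction steps described above can be sketched in a few lines. This is only an illustration of the idea, not the app's code: the function name `export_sidecar`, the field names, and the `key: value` line format are all assumptions.

```python
from pathlib import Path


def export_sidecar(image_path, metadata: dict, selected: list) -> Path:
    """Write the selected metadata fields to a .txt file next to the image."""
    image_path = Path(image_path)
    # Keep only the checked fields, in the order the user selected them.
    lines = [f"{key}: {metadata[key]}" for key in selected if key in metadata]
    # Same folder and base name as the image, with a .txt extension.
    out_path = image_path.with_suffix(".txt")
    out_path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return out_path
```

Calling `export_sidecar("render_001.png", fields, ["model", "sampler"])` would write `render_001.txt` containing just those two fields. Keeping the same base name is what lets training tools pair each image with its text file.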

Sweep and Edit (Broom Icon 🧹)

The broom icon activates “Sweep and Edit” mode:

It removes all non-essential metadata from the image, keeping only the basic generation parameters (prompt, model, sampler, steps, CFG, seed, LoRA, VAE).

This is especially important because ComfyUI often stores a full workflow graph inside the image metadata – which can include unnecessary or even private information.

After sweeping, you can edit and save a clean version of the image.

This way, your images remain lightweight and privacy-safe, while still containing all the important information needed to reproduce results.
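Conceptually, the sweep is a whitelist filter over the metadata fields. The sketch below shows that idea on a plain dictionary. It does not rewrite image files, and the key names and the function name `sweep_metadata` are hypothetical, chosen to mirror the parameter list above.

```python
# Hypothetical field names mirroring the basic generation parameters.
ESSENTIAL_KEYS = ("prompt", "model", "sampler", "steps", "cfg", "seed", "lora", "vae")


def sweep_metadata(metadata: dict) -> dict:
    """Keep only the basic generation parameters and drop everything else."""
    return {key: metadata[key] for key in ESSENTIAL_KEYS if key in metadata}
```

With this approach, anything not explicitly listed, such as an embedded workflow graph or a local file path, is dropped by default. That is safer for privacy than trying to list everything that should be removed.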

Image Filter Panel

On the right-hand side, there is an Image Filter Panel that shows the main generation parameters:

Generator – Which backend created the image (InvokeAI, ComfyUI, etc.).

Model – The model checkpoint that was active.

Sampler – Diffusion sampling method used.

Scheduler – Step scheduling (if available).

LoRA – LoRA modules with their weights.

Installation

It’s an exe file in a ZIP archive. Just unpack and run.

No extra programs needed, no Python, no external utils. Runs as is.

No uploads; everything runs locally and privately.

Notes

This is version 1.1.3. It should work fine, but there may still be bugs and limitations.

Currently works with InvokeAI, A1111 and ComfyUI outputs.

Planned improvements include broader format support and integration with my tagger tool.

Part of the NFSW Tools Ecosystem

This application is part of a broader ecosystem of free utilities we’re building to make working with AI models easier, clearer, and more creative:

LoraScope – inspect LoRA training metadata and keyword frequencies.

AI RapidTagger – manage tags, build prompts, and organize datasets.

AI RenderScope – explore, analyze, and filter metadata from generated images.

Prompt Builder (coming soon) – a streamlined tool for composing and testing prompts interactively.

Why do we build these? Because AI isn’t just about generating pretty pictures – it’s about understanding, controlling, and refining the process. These tools save time, make results reproducible, and give creators the freedom to focus on creativity instead of guesswork.

If you’re experimenting with LoRAs, building datasets, or refining prompts, this ecosystem will become your everyday toolbox – lightweight, focused, and made for real AI workflow needs.

NEWS

2026/01/19 - 1.1.4 - just fixes some cleaning issues

2025/09/30 - 1.1.3 - is out :)

2025/10/01 - 1.1.2 - no big change, just some speed improvement

Any kind words and bright ideas are welcome at [email protected]