Pivotal tuning is the technique of training embeddings and the network (unet and potentially text encoder) at the same time.
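To make the idea concrete, here is a toy sketch in plain Python. It is not a diffusion model: a single frozen weight matrix `W0` stands in for the network, `A` and `B` form a rank-1 LoRA-style delta, and `e` plays the role of the embedding. All names, shapes, and values are assumptions chosen for illustration. The point is only that the embedding and the weight delta receive gradients in the same step while the base weight stays frozen.

```python
def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]


def effective_weight(W0, B, A):
    """Return W0 + B @ A, with B of shape (m, r) and A of shape (r, d)."""
    return [
        [W0[i][j] + sum(B[i][k] * A[k][j] for k in range(len(A)))
         for j in range(len(W0[0]))]
        for i in range(len(W0))
    ]


def loss_and_residual(W0, B, A, e, t):
    """Squared-error loss 0.5 * ||(W0 + B A) e - t||^2 and its residual."""
    out = matvec(effective_weight(W0, B, A), e)
    res = [o - t_i for o, t_i in zip(out, t)]
    return 0.5 * sum(x * x for x in res), res


def train(steps=200, lr=0.1):
    W0 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # frozen base weight (m=2, d=3)
    A = [[0.1, 0.1, 0.1]]                     # low-rank down projection (r=1)
    B = [[0.0], [0.0]]                        # low-rank up projection, zero init
    e = [0.5, 0.5, 0.5]                       # embedding, e.g. copied from an init word
    t = [1.0, -1.0]                           # toy target standing in for the concept
    m, r, d = len(W0), len(A), len(e)
    initial_loss, _ = loss_and_residual(W0, B, A, e, t)
    for _ in range(steps):
        _, res = loss_and_residual(W0, B, A, e, t)
        W = effective_weight(W0, B, A)
        Ae = matvec(A, e)
        # Gradients of the loss with respect to each trainable part.
        grad_e = [sum(W[i][j] * res[i] for i in range(m)) for j in range(d)]
        grad_B = [[res[i] * Ae[k] for k in range(r)] for i in range(m)]
        Bt_res = [sum(B[i][k] * res[i] for i in range(m)) for k in range(r)]
        grad_A = [[Bt_res[k] * e[j] for j in range(d)] for k in range(r)]
        # Joint update: embedding and weight delta move together, W0 is untouched.
        e = [e[j] - lr * grad_e[j] for j in range(d)]
        B = [[B[i][k] - lr * grad_B[i][k] for k in range(r)] for i in range(m)]
        A = [[A[k][j] - lr * grad_A[k][j] for j in range(d)] for k in range(r)]
    final_loss, _ = loss_and_residual(W0, B, A, e, t)
    return initial_loss, final_loss, W0, e, A, B


if __name__ == "__main__":
    initial, final, *_ = train()
    print(f"loss before: {initial:.4f}, loss after: {final:.6f}")
```

In a real trainer the same structure appears as separate parameter groups (embedding vectors, unet delta, optionally text encoder delta), usually with different learning rates.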

It is commonly used in the literature [1,2,3], implemented in cloneofsimo's LoRA repository (the first implementation of LoRA for Stable Diffusion), and has its benefits widely demonstrated.

In spite of this, pivotal tuning is a less common practice among casual LoRA trainers, probably because it is poorly supported on both the training and the generation side. I will dig into this in this post.

Why you should care about pivotal tuning

1. Minimal text encoder corruption

2. Customized naming (and no more headaches choosing a trigger word that avoids inappropriate tokens or token conflicts)

3. Separation between core characteristics (in the embedding) and the rest (in the fine-tuned weight differences)

4. Better transferability (consequence of 3)

5. Possibility of giving the embedding an appropriate initialization word without interfering with how the text encoder interprets this word

---

I will illustrate this with my new gekidol model, which comes with 16 embeddings: 15 for characters and one for style.

To begin, since you are now using embeddings instead of fixed words, it is pretty clear that you are free to rename the embeddings. The LoRA training is tied to the embeddings rather than to fixed trigger words, so you don't need to worry about the problems related to how your chosen trigger word is understood by the text encoder.

Next, let me give a concrete example for the first and third points.

(Higher resolution versions of the second and third images can be found on the model page, unless they get removed by civitai for reasons I don't understand)

In the three images above, the embeddings are used throughout, while the rows (or columns) differ as follows:

1. We use the entire LoRA

2. We only use the unet of the trained LoRA

3. We only use the text encoder of the trained LoRA

4. We do not use the LoRA
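The four settings above can be sketched as prompt variants. This is a minimal sketch that assumes a webui version whose LoRA tag accepts separate `te=` and `unet=` multipliers; the embedding name `chara_name` and the LoRA file name `gekidol_lora` are hypothetical placeholders, not the actual file names of the model.

```python
def lora_tag(name, te=1.0, unet=1.0):
    """Build a LoRA tag with separate text encoder / unet multipliers.

    Assumption: the generation UI understands the `te=` and `unet=` named
    arguments inside `<lora:...>`.
    """
    return f"<lora:{name}:te={te:g}:unet={unet:g}>"


# (text encoder weight, unet weight) for each setting; None means no LoRA tag.
ABLATIONS = {
    "entire LoRA": (1.0, 1.0),
    "unet only": (0.0, 1.0),
    "text encoder only": (1.0, 0.0),
    "no LoRA": None,
}


def build_prompt(embedding, lora_name, setting):
    """Return a prompt that always uses the embedding and varies the LoRA part."""
    weights = ABLATIONS[setting]
    if weights is None:
        return embedding
    te, unet = weights
    return f"{embedding}, {lora_tag(lora_name, te=te, unet=unet)}"


if __name__ == "__main__":
    for setting in ABLATIONS:
        print(f"{setting:>18}: {build_prompt('chara_name', 'gekidol_lora', setting)}")
```

The same helper covers intermediate settings, for instance `lora_tag("gekidol_lora", te=1.0, unet=0.6)` to weaken only the unet part.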

As the images show, we can pretty much get the characters even without the fine-tuned text encoder (even though it was indeed trained). This suggests that the work originally done by the text encoder has shifted to the embeddings. That in turn lowers the risk of corrupting the text encoder, which otherwise has to map whatever input token you chose to the features that describe the character.

* Note that with the same learning setup but without embedding training, disabling the text encoder of the obtained LoRA would cause a severe loss of character similarity.

Furthermore, in the fourth row, even though the LoRA is not used at all, we still get some characteristics of the target character, suggesting a separation of tasks between embedding and network training. This phenomenon is also well documented in the Mix-of-Show paper [3]. More investigation will be needed to find a good balance between embedding training and LoRA training.

Finally, thanks to this separation, the learned concepts can be more easily transferred to other models. The embedding would still work to some extent, and you can lower the unet weight to avoid style conflict. Here are some examples:

In the following image I do not even use the LoRA at all!

Yes, you can transfer a character and get the style from a different base model with standard LoRA training, but as I have shown in A Certain Theory for LoRa Transfer, this generally requires you to use source models rather than downstream models. Surprisingly, the gekidol model is trained on Crosstyan/BPModel, which is more of a downstream model and, according to said post, transfers poorly to other popular models. Yet this problem is partially solved by pivotal tuning, because embeddings are more transferable than weight differences!

(You may have had this experience: you have LoRAs and embeddings trained on top of different models with completely different data, yet combining the two gives you a better result, even though the LoRA is meant to go with some trigger word rather than this embedding. This means that what the LoRA learns in the unet helps once we supply the right embedding.)

Training

As far as I am aware, pivotal tuning is unfortunately still not available in the Kohya trainer at the moment (which a lot of popular colabs and GUIs build on). You still have a few choices, though, and I personally recommend HCP-Diffusion, which is in active development.

Warning: due to a bug in how HCP handles embedding training, you cannot use single-token embedding names for the moment. There are also some tokenizer bugs that make some words both double-token and single-token (e.g. ansel). For the moment, the safer way is to always include _ in the embedding name. We are contacting the developer of HCP to solve this.
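A small guard for the naming rule above can be run before generating training configs. This sketch only checks the underscore heuristic from the warning; it does not query the tokenizer, and the function name and the example embedding names are hypothetical.

```python
def unsafe_embedding_names(names):
    """Return the names that lack an underscore.

    Heuristic only: per the warning above, names without `_` may end up as a
    single token (or ambiguously tokenized) and trigger the bug.
    """
    return [name for name in names if "_" not in name]


if __name__ == "__main__":
    candidates = ["ansel", "gekidol_style", "chara_a"]
    bad = unsafe_embedding_names(candidates)
    if bad:
        print("Rename these embeddings to include '_':", ", ".join(bad))
```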

Beyond pivotal tuning, HCP-Diffusion can also weight reconstruction losses by soft masks and train DreamArtist++ (training both positive and negative LoRAs at the same time). Its great flexibility and highly configurable nature make it probably the trainer of choice for more advanced users.

What's more, LyCORIS support is also coming soon!

At this point, it is worth mentioning that the automatic pipeline of narugo1992 also uses HCP-Diffusion. Pivotal tuning is used in all their models (with only one embedding in their case, as for now they focus on single-character models), with this configuration file. They have not yet fully taken advantage of pivotal tuning because their old data processing strategy does not involve tag pruning, but this will be solved in their updated 1.5 pipeline.

As for my use case, the script to generate corresponding config files for all-in-one training has been incorporated into my dataset construction pipeline: https://github.com/cyber-meow/anime_screenshot_pipeline/blob/main/docs/Start_training.md#training-with-hcp-diffusion

Usage

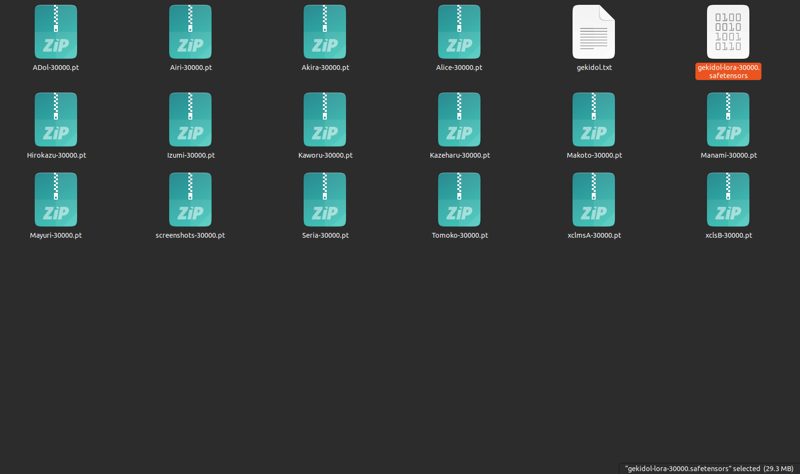

Another hurdle to popularizing pivotal tuning is that a single fine-tune can come with a lot of embeddings. For example, for my gekidol model we have the following

Organizing them can be troublesome. The good news is that we are also providing a solution to this: https://github.com/AUTOMATIC1111/stable-diffusion-webui/pull/13568

Kohaku, the developer of LyCORIS, has implemented a bundle system to save embeddings and LoRA together! (Update: you can now use it in the dev branch of webui)

Now, you just need to download one file, and once this LoRA/LyCORIS is activated, you can also use the accompanying embeddings by entering their names. An example is https://civitai.com/models/173081

For conversion between bundle and lora+embeddings you can use https://github.com/cyber-meow/anime_screenshot_pipeline/blob/main/post_training/batch_bundle_convert.py

With the following extension you can see the embeddings saved in each bundle LoRA by just right-clicking on it: https://github.com/a2569875/lora-prompt-tool

For the moment, there is an issue when using bundles with regional prompter: https://github.com/hako-mikan/sd-webui-regional-prompter/issues/258

---

[1] Kumari, N., Zhang, B., Zhang, R., Shechtman, E., & Zhu, J. Y. (2023). Multi-concept customization of text-to-image diffusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (pp. 1931-1941).

[2] Smith, J. S., Hsu, Y. C., Zhang, L., Hua, T., Kira, Z., Shen, Y., & Jin, H. (2023). Continual diffusion: Continual customization of text-to-image diffusion with c-lora. arXiv preprint arXiv:2304.06027.

[3] Gu, Y., Wang, X., Wu, J. Z., Shi, Y., Chen, Y., Fan, Z., ... & Shou, M. Z. (2023). Mix-of-Show: Decentralized Low-Rank Adaptation for Multi-Concept Customization of Diffusion Models. arXiv preprint arXiv:2305.18292.