I don't know English; the English text below was translated with ChatGPT. Please forgive any shortcomings.

Preface:

Are you, like me, often put off by SD1.5's weak grasp of composition? The prompt clearly says portrait, yet the model returns a close-up or even an upper body shot. Once or twice would be tolerable, but the hit rate is low enough that generating images feels like a lottery, and rerolling over and over is exhausting.

Some may suggest ControlNet. It does work: with a suitable reference image, provided ControlNet extracts the composition correctly and SD does not go wrong somewhere else, the results are good. But that is a lot of manual setup for something that is supposed to be AI.

What I want to explore today is whether there is a simpler way to control composition fairly accurately without ControlNet's overhead, for example with a single LoRA. Here is my case study.

The LoRA link: SDXLrender

That was my motivation for training this LoRA. The key points of the training are below; I hope they are useful to other model trainers.

Here's my approach:

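Data Preparation: I collected the training images by rendering them with a photorealistic SDXL model. I generated over a hundred images in total, covering each composition prompt, and then filtered them. The benefit is twofold: SDXL renders are easier for SD1.5 to learn from, and SD1.5 picks up SDXL's lighting and texture along with the composition.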

Concise Prompting: The simpler, the better. Because this LoRA targets composition only, I captioned each image with just two tags, a subject tag and a composition tag, so that SD's learning stays focused. The example image, for instance, is captioned only "1girl, from above".
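The captioning step can be sketched in a few lines of Python. This is only an illustration under assumptions that are not in the original post: that each composition has its own folder of images, and that the trainer reads a sidecar `.txt` caption next to each image. The function name `write_captions` and the folder path in the usage note are hypothetical.

```python
from pathlib import Path

# Image file types to caption (an assumption; adjust to your dataset).
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def write_captions(folder: Path, subject_tag: str, composition_tag: str) -> int:
    """Write a two-tag caption file next to every image in `folder`.

    Returns the number of caption files written.
    """
    caption = f"{subject_tag}, {composition_tag}"
    count = 0
    # sorted() materializes the listing first, so the .txt files
    # created in the loop are not picked up while iterating.
    for image in sorted(folder.iterdir()):
        if image.suffix.lower() in IMAGE_SUFFIXES:
            image.with_suffix(".txt").write_text(caption, encoding="utf-8")
            count += 1
    return count
```

For example, `write_captions(Path("dataset/from_above"), "1girl", "from above")` would give every image in that (hypothetical) folder the same two-tag caption used for the example image.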

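Training Settings: The setup itself is comparatively simple, but one point is very important: train only the U-Net. That means only the image side is trained and the text encoder is left untouched, which significantly reduces contamination from the captions and keeps SD from learning irrelevant content. SD's CLIP text encoder is not especially strong, but it does understand common prompts such as composition terms; the images SD produces for them are simply far off. (This is my understanding; corrections are welcome.) That mismatch is the problem this LoRA sets out to fix.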

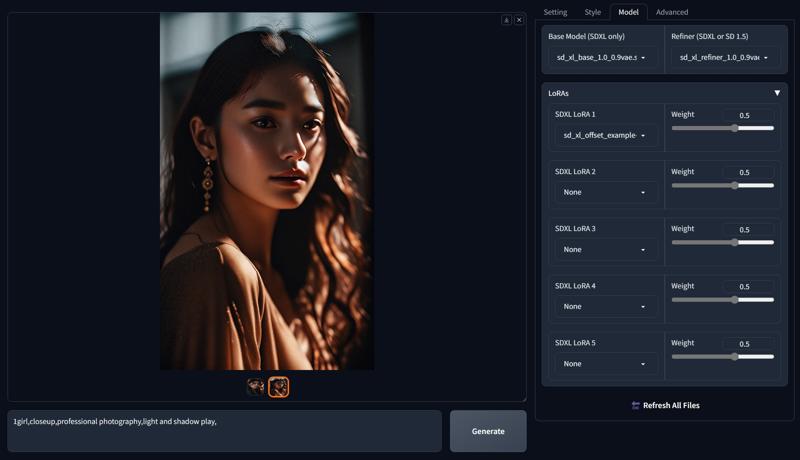
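As a rough illustration of the U-Net-only setting, here is how the option could be passed if the trainer were kohya-ss sd-scripts. That is an assumption: the post does not name the training tool, and all paths are placeholders. Learning rate, rank, and epoch count are left out because the post does not state them.

```python
import shlex


def build_train_command(base_model: str, data_dir: str, output_dir: str) -> list[str]:
    """Build a LoRA training command that trains only the U-Net.

    Assumes kohya-ss sd-scripts (train_network.py); the original post
    does not say which trainer was used.
    """
    return [
        "accelerate", "launch", "train_network.py",
        f"--pretrained_model_name_or_path={base_model}",
        f"--train_data_dir={data_dir}",
        f"--output_dir={output_dir}",
        "--network_module=networks.lora",
        "--caption_extension=.txt",
        # The key setting: leave the text encoder untouched.
        "--network_train_unet_only",
    ]


if __name__ == "__main__":
    # Placeholder paths, for illustration only.
    cmd = build_train_command("sd15.safetensors", "dataset", "output")
    print(shlex.join(cmd))
```

Building the command as a list keeps each option separate, so further hyperparameters can be appended without touching the U-Net-only flag.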

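Here are the results of the training. I tested the nine most commonly used composition prompts. In every case the composition was better than the base model's, and image quality improved as well, which is why I chose SDXL renders as the training set.

The test model is RealisticVision_v5.1, with the LoRA weight set to 1.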
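To run a similar side-by-side test, prompt pairs with and without the LoRA can be generated as below. This is a sketch with assumptions: it uses the `<lora:name:weight>` prompt syntax of the AUTOMATIC1111 web UI, it assumes the LoRA file is named `SDXLrender`, and it lists only the four composition tags mentioned in this post, since the full set of nine is not given here.

```python
# Composition tags mentioned in this post; the full nine-tag test set
# is not listed, so extend this list with your own.
COMPOSITION_TAGS = ["portrait", "close-up", "upper body", "from above"]


def build_prompts(subject, lora_name, weight=1.0, tags=COMPOSITION_TAGS):
    """Return (baseline_prompt, lora_prompt) pairs, one per composition tag."""
    pairs = []
    for tag in tags:
        base = f"{subject}, {tag}"
        # ":g" formatting drops a trailing ".0", so 1.0 is written as 1.
        with_lora = f"{base}, <lora:{lora_name}:{weight:g}>"
        pairs.append((base, with_lora))
    return pairs
```

Rendering both prompts of each pair with the same seed on the same checkpoint makes the effect of the LoRA on composition easier to judge.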

Epilogue

For anyone who wants to continue training or testing, I have also uploaded the dataset; it can be downloaded from the LoRA page.

The LoRA link: SDXLrender

I hope this post helps. Have fun!