Introduction

AnimateDiff for SDXL is a motion module used with SDXL models to create animations. It is made by the same people who made the SD 1.5 motion modules. At the time of writing it is in beta, but I am sure some of you are eager to test it out. Most settings are the same as for HotshotXL, so this guide serves as an appendix to my HotshotXL guide. The big difference between the two is that AnimateDiffXL was trained on 16-frame segments, compared to Hotshot's 8. This comes with some pros and cons.

If this is your first time getting into AI animation, I suggest you start with my AnimateDiff Guide (https://civitai.com/articles/2379), then go over my Hotshot XL guide linked in the paragraph below.

If this is your first time doing SDXL animations, please follow my Hotshot XL guide: https://civitai.com/articles/2601/. It will help you find the extra nodes and ControlNets you need.

**WORKFLOWS ARE ATTACHED TO THIS POST: DOWNLOAD THEM UNDER ATTACHMENTS IN THE TOP RIGHT CORNER**

System Requirements

A Windows computer with an NVIDIA graphics card with at least 12 GB of VRAM. Because the context window is longer than Hotshot XL's, you end up using more VRAM. The resolution it supports is also higher, so a TXT2VID workflow ends up using 11.5 GB of VRAM at 1024x1024.

Unfortunately, unless you have more than 12 GB of VRAM, that leaves no room for ControlNets! However, I have tested lower resolutions (the same as in my Hotshot workflows), and fortunately the motion module does not seem to degrade significantly!

Installing the Dependencies

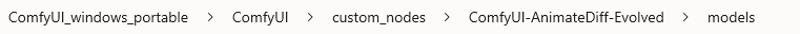

All you need to do is add the motion module! It is available here: (Google Drive/HuggingFace/CivitAI). Download it and place it here:

Hotshot-XL vs. AnimateDiffXL

Given that it is still in beta, I won't do a full comparison for now and will let you decide for yourself. There is certainly a benefit to the native 16-frame context window when it comes to motion; however, a lot of the outputs are not as sharp as Hotshot's. I expect things to change as it gets fine-tuned.

Node Explanations and Settings Guide

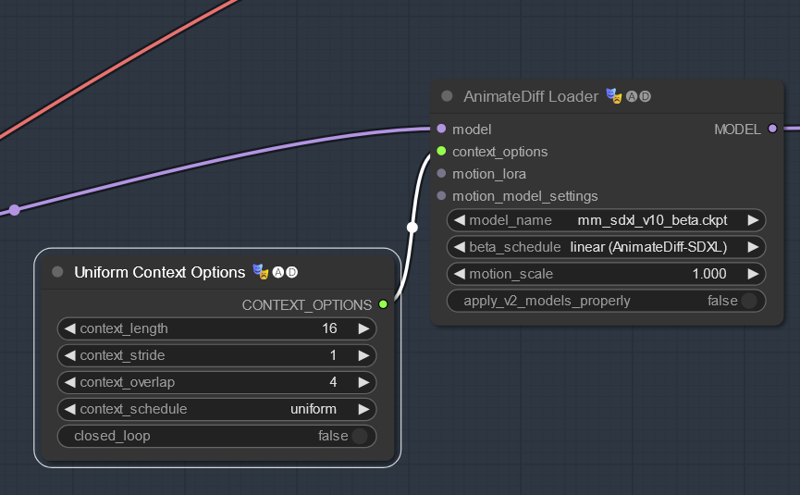

There are no new nodes, just different node settings that make AnimateDiffXL work.

The only things that change are:

model_name: Switch to the AnimateDiffXL Motion module

beta_schedule: Change to the AnimateDiff-SDXL schedule

context_length: Change to 16, as that is the length this motion module was trained on
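To see why context_length matters, here is a rough sketch of how a uniform sliding-window scheme might split a longer animation into overlapping chunks that the motion module processes one at a time. The function name `context_windows` and the default overlap of 4 frames are hypothetical choices for illustration; the context options in the actual AnimateDiff nodes may schedule windows differently.

```python
def context_windows(num_frames: int, context_length: int = 16, overlap: int = 4) -> list[list[int]]:
    """Split frame indices 0..num_frames-1 into overlapping windows of context_length frames.

    Hypothetical, simplified uniform sliding-window scheme for illustration only.
    """
    if context_length <= 0:
        raise ValueError("context_length must be positive")
    if not 0 <= overlap < context_length:
        raise ValueError("overlap must be in [0, context_length)")
    # Short clips fit in a single window.
    if num_frames <= context_length:
        return [list(range(num_frames))]

    stride = context_length - overlap  # how far each new window advances
    windows = []
    start = 0
    while start + context_length < num_frames:
        windows.append(list(range(start, start + context_length)))
        start += stride
    # Anchor the final window to the last frame so every window is full length.
    windows.append(list(range(num_frames - context_length, num_frames)))
    return windows


if __name__ == "__main__":
    # 32 frames: AnimateDiffXL-style length 16 vs Hotshot-style length 8
    print(len(context_windows(32, context_length=16)))  # 3
    print(len(context_windows(32, context_length=8)))   # 7
```

With 32 frames and an overlap of 4, a context length of 16 covers the clip in 3 windows while a Hotshot-style length of 8 needs 7, so each AnimateDiffXL window sees twice as many frames at once. That wider view is what helps motion, and processing more frames per pass is also why it uses more VRAM.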

Making Videos with AnimateDiff-XL

I have attached TXT2VID and VID2VID workflows that work with my 12 GB VRAM card. I had to lower the resolution of the VID2VID workflow a bit to make it fit within that limit. Feel free to adjust the resolution to suit your graphics card.
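If you need to shrink a workflow's resolution to fit your card, a small helper like the one below can scale the width and height down to a pixel budget while keeping the aspect ratio and rounding each side down to a multiple of 8 (the SD/SDXL VAE's downsampling factor). The `fit_resolution` name and the idea of using a pixel budget as a stand-in for VRAM are assumptions for illustration; actual VRAM use also depends on frame count, context length, and any ControlNets you add.

```python
import math


def fit_resolution(width: int, height: int, max_pixels: int, multiple: int = 8) -> tuple[int, int]:
    """Scale (width, height) toward width * height <= max_pixels, keeping the aspect ratio.

    Hypothetical helper: each side is rounded down to a multiple of `multiple`
    (8 matches the VAE's downsampling factor).
    """
    # Only shrink; never upscale an image that already fits the budget.
    scale = min(1.0, math.sqrt(max_pixels / (width * height)))
    new_w = max(multiple, int(width * scale) // multiple * multiple)
    new_h = max(multiple, int(height * scale) // multiple * multiple)
    return new_w, new_h


if __name__ == "__main__":
    # 1024x1024 squeezed into a 768x768 pixel budget
    print(fit_resolution(1024, 1024, 768 * 768))  # (768, 768)
    # 16:9 input squeezed into roughly the same budget
    print(fit_resolution(1920, 1080, 1024 * 576))
```

Rounding down rather than to the nearest multiple keeps the result inside the budget, at the cost of occasionally losing a few pixels on one side.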

Otherwise, I suggest taking my HotshotXL workflows and changing the settings as described above, as they work fine with this motion module (despite the lower resolution). If you wish, you can also add an upscale pass, as in my Everything Bagel workflow in that guide.

Troubleshooting

As this is very new, things are bound to change or break. I will try to update this guide periodically as things change.

In Closing

I hope you enjoyed this tutorial. If you did, please consider subscribing to my YouTube channel (https://www.youtube.com/@Inner-Reflections-AI) or following me on Instagram/TikTok/Twitter (https://linktr.ee/Inner_Reflections).

If you are a commercial entity and want some presets that might work for different style transformations, feel free to contact me on Reddit or on my social accounts (Instagram and Twitter seem to have the best messaging, so I mostly use those).

If you would like to collab on something or have questions, I am happy to connect on Twitter/Instagram or on my other social accounts.

If you’re going deep into AnimateDiff, you’re welcome to join this Discord for people who are building workflows, tinkering with the models, creating art, etc.

(If you come to the Discord with issues, please find the adsupport channel and use it so that the discussion is in the right place.)

Special Thanks

Kosinkadink - for making the nodes that made this all possible

Fizzledorf - for making the prompt travel nodes

The AnimateDiff Discord - for the support and technical knowledge to push this space forward

![[GUIDE] ComfyUI AnimateDiff XL Guide and Workflows - An Inner-Reflections Guide](https://image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/11733b68-7848-4de8-b36a-8193d2ee7873/width=1320/11733b68-7848-4de8-b36a-8193d2ee7873.jpeg)