This is a small workflow guide on how to generate a dataset of images using Microsoft Bing! It's free, but it's a very manual, labour-intensive way of getting a high-quality generated dataset.

This is part of a series on how to generate datasets with: ChatGPT API, ChatGPT, Bing, ComfyUI.

Bing Image Creator

https://www.bing.com/images/create/

Head on over to Bing and let's create a dataset! You can of course generate whatever dataset you'd like, but I will share some tips on how to create the kind of Style / World Morph LoRAs that I usually make.

Now just enter the prompt for your generation, save the images and you're done! Easy.

Okay, let's dive a bit more into it.

Consistency

If you are creating a style LoRA, you are likely going to want a fairly consistent output of images to use for your training data. This is usually achieved by having a fairly long / complex prompt. The prompt should be very descriptive, but still allow the engine some creative freedoms.

To maintain a consistent style, you want to use the same prompt every time and only switch out a few words.

Prompting

When I have an idea for a style, I try to split the prompt up into 3 parts, but it could have more as well.

I set it up like this:

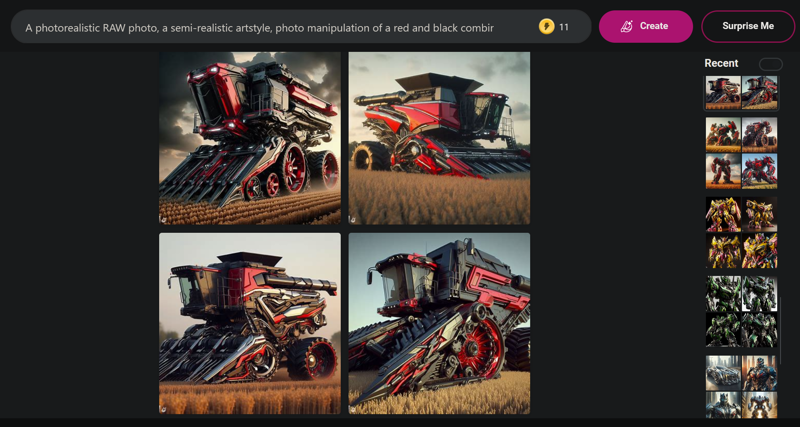

[Style-descriptive prefix with priority details] [SUBJECT] [Style-descriptive suffix with smaller details, some keywords etc.]

Example:

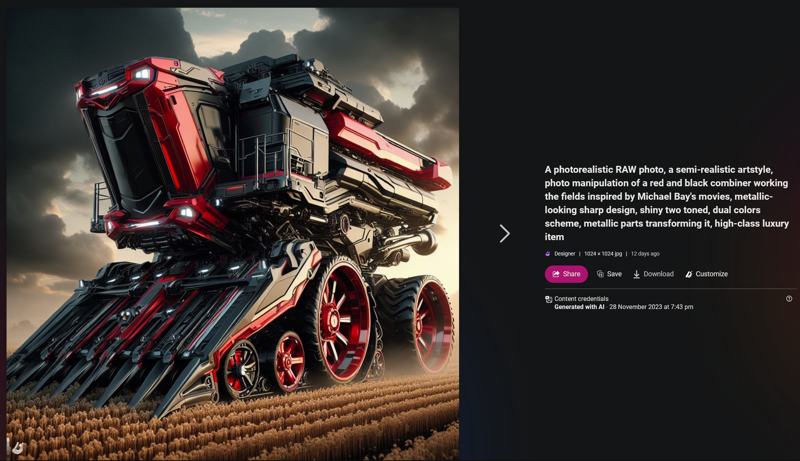

A photorealistic RAW photo, a semi-realistic artstyle, photo manipulation of a red and black combiner working the fields inspired by Michael Bay's movies, metallic-looking sharp design, shiny two toned, dual colors scheme, metallic parts transforming it, high-class luxury item

This is how I prompted for my Transformers-style.

For this model, I used two colors for each concept. This is because I felt like this was important to the design, and I wanted the model to be very color-tweakable. So I made an effort to ensure the dataset was two-colored (and captioned as such).
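If you'd rather not retype the full prompt for every concept, the three-part template can be sketched as a tiny Python helper. This is only an illustration: `PREFIX`, `SUFFIX` and `build_prompt` are names I made up here, and the text is just the Transformers-style example from above cut into its prefix and suffix. You still paste the result into Bing by hand.

```python
# Hypothetical helper: the [prefix] [SUBJECT] [suffix] template as a function.
# PREFIX and SUFFIX are the example prompt from this guide, split around the subject.
PREFIX = "A photorealistic RAW photo, a semi-realistic artstyle, photo manipulation of"
SUFFIX = (
    "inspired by Michael Bay's movies, metallic-looking sharp design, "
    "shiny two toned, dual colors scheme, metallic parts transforming it, "
    "high-class luxury item"
)


def build_prompt(subject: str, colors: tuple[str, str]) -> str:
    """Return the style prefix, a two-colored subject and the style suffix as one prompt."""
    first, second = colors
    return f"{PREFIX} a {first} and {second} {subject} {SUFFIX}"


# Rebuilds the example prompt; swap the subject and colors for other concepts.
prompt = build_prompt("combiner working the fields", ("red", "black"))
print(prompt)
```

Only the subject and the two colors change between calls, so the style-descriptive parts stay identical across the whole dataset.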

Lookdev - Finding your style

It's unlikely that your first prompt nails the style right away. If it does, try it out with a different concept and see if it still works. If it does, you're probably good to go! If not, you'll need to tweak your prompting to produce a more consistent style output.

This is a lot of back and forth. You have to figure out which parts of your prompt are responsible for the things you don't want in there.

Example:

Prompt A

A photorealistic RAW photo, a semi-realistic artstyle, photo manipulation of a red and black combiner working the fields inspired by Michael Bay's Transformers, metallic-looking sharp design, shiny two toned, dual colors scheme, metallic parts transforming it, high-class luxury item

Prompt B

A photorealistic RAW photo, a semi-realistic artstyle, photo manipulation of a red and black combiner working the fields inspired by Michael Bay's movies, metallic-looking sharp design, shiny two toned, dual colors scheme, metallic parts transforming it, high-class luxury item

These 2 prompts look very similar (the only difference is "Michael Bay's Transformers" versus "Michael Bay's movies"), but the results are vastly different. I didn't want to add robots everywhere. I wanted things to feel like they had the same design/style.
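When two long prompts give very different results, it can help to list exactly which words changed between them. Here's a minimal sketch using Python's standard `difflib` module. The `word_diff` helper is a name I made up for this example, and the two strings are Prompt A and Prompt B from above.

```python
import difflib

prompt_a = (
    "A photorealistic RAW photo, a semi-realistic artstyle, photo manipulation of "
    "a red and black combiner working the fields inspired by Michael Bay's Transformers, "
    "metallic-looking sharp design, shiny two toned, dual colors scheme, "
    "metallic parts transforming it, high-class luxury item"
)
prompt_b = prompt_a.replace("Michael Bay's Transformers", "Michael Bay's movies")


def word_diff(a: str, b: str) -> list[tuple[str, str]]:
    """Return (words only in a, words only in b) for every span where the prompts differ."""
    # Compare word by word, ignoring commas attached to the words.
    a_words = [word.strip(",") for word in a.split()]
    b_words = [word.strip(",") for word in b.split()]
    matcher = difflib.SequenceMatcher(a=a_words, b=b_words, autojunk=False)
    changes = []
    for tag, a_start, a_end, b_start, b_end in matcher.get_opcodes():
        if tag != "equal":
            changes.append(
                (" ".join(a_words[a_start:a_end]), " ".join(b_words[b_start:b_end]))
            )
    return changes


for old, new in word_diff(prompt_a, prompt_b):
    print(f"A: {old!r}  ->  B: {new!r}")
```

For these two prompts it reports a single change, "Transformers" versus "movies", which is the one word to experiment with.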

These subtleties in your prompting are going to make the key difference. Try using descriptive phrases, like "inspired by X" or "X-like in appearance". DALL-E 3 is actually very good at understanding subtleties like that. Write around the subject. Instead of saying "Super Mario Style", you could perhaps say "Classic platforming game plumber style". I'm not sure about that one specifically, but hopefully you get the idea: you have to work around the problems until you find a style that is consistent across multiple concepts.

Environmental Prompting

I recommend prompting for different environments in your images. Otherwise DALL-E 3 is going to be lazy and likely just give you a simple single-colored background. I try to include a location in each of my [SUBJECT] prompts: a natural place for the object. For the combine harvester above, for example, I have a location prompt of "working the fields". If you are creating an artstyle, you probably want to caption this environment too, but for the "World Morph" styles I create, I have found that it's not necessary.

Generating and saving all the images

Once you have created your style and tested it on a few different concepts, you should be ready for the next step: repeating this over and over again until you have all your images! Now the fun begins...

Saving each image is a manual process, unless you have some browser extension which could do it automatically.

Click on an image to get it fullscreen. You should now see a "Download"-button to the right, under the prompt. Use this to download the image. Do not right-click and choose "Save Image". This will save your image name as "OIG", which means all images you download will have to be manually renamed. Using the Download-button will instead give it a random hashed name, which is fine. Something like "_35097d00-9d83-48cc-8146-41b8af20cca5".

Download each image that you are happy with, and generate the next set of images.

Speed Tip: Note that you can start generating the next set of images, and while it generates, you can go back to the previous generations and save them.

Warning: Bing only keeps your 20 most recent generations accessible. Anything older will no longer show up in the "Recent" list on the right side (or at the bottom on mobile devices). So don't generate too many images without saving them.

You can, however, bookmark each generation, or store them in a list for future viewing, but they're not guaranteed to last forever. I don't know how long it takes for them to expire.

Thanks J1B for the suggestion.

I usually go with 4-8 images for each concept. If I'm training a model that needs good color control, I may do 4 images each of 2-3 different colors for each concept. I just pick the colors randomly.
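If you want to keep track of which colors to generate for which concept, a small planning sketch like the one below can pick them for you. Everything in it is an assumption for illustration: the `COLORS` palette, the `plan_generations` name and the default of 2 colors with 4 images each are not from any tool, they just mirror the numbers mentioned above.

```python
import random

# Hypothetical palette; use whatever colors suit your style.
COLORS = ["red", "black", "blue", "white", "green", "yellow", "orange", "purple"]


def plan_generations(
    concepts: list[str],
    colors_per_concept: int = 2,
    images_per_color: int = 4,
    seed: int = 0,
) -> dict[str, dict[str, int]]:
    """Pick random colors for each concept and note how many images to save per color."""
    rng = random.Random(seed)  # fixed seed so the plan is the same on every run
    plan = {}
    for concept in concepts:
        chosen = rng.sample(COLORS, colors_per_concept)  # distinct colors per concept
        plan[concept] = {color: images_per_color for color in chosen}
    return plan


plan = plan_generations(["airplane", "combine harvester"])
for concept, colors in plan.items():
    total = sum(colors.values())
    print(f"{concept}: {total} images, colors: {', '.join(colors)}")
```

With the defaults, each concept ends up with 2 colors x 4 images = 8 images, which is the upper end of the 4-8 range.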

File and folder management

It's up to you how you train your styles and maintain your files, but here's how I structure it.

For each concept (more on that below), I have a folder with the name of the concept. For example "airplane" is a concept. And so is "combine harvester" from the examples above.

Move the generated images for each concept into the right subfolder in order to keep a good structure. We can also use this to automatically generate captions for your images based on the folder structure.
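Creating the folders and moving the downloads can be scripted if you like. The sketch below is one possible way to do it with Python's standard library. The function names, the `dataset` and `Downloads` paths in the usage note, and the list of image extensions are all assumptions on my part, so adjust them to your setup.

```python
import shutil
from pathlib import Path

# Assumed image extensions; extend this if your downloads use something else.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".webp"}


def make_concept_folders(root: Path, concepts: list[str]) -> list[Path]:
    """Create one subfolder per concept under root and return the folder paths."""
    folders = []
    for concept in concepts:
        folder = root / concept
        folder.mkdir(parents=True, exist_ok=True)  # safe to run more than once
        folders.append(folder)
    return folders


def move_images(downloads: Path, concept_folder: Path) -> int:
    """Move every image file from downloads into concept_folder, return the count."""
    moved = 0
    for image in sorted(downloads.iterdir()):
        if image.is_file() and image.suffix.lower() in IMAGE_EXTENSIONS:
            shutil.move(str(image), str(concept_folder / image.name))
            moved += 1
    return moved
```

A typical round would be: generate and download the images for one concept, then call something like `move_images(Path.home() / "Downloads", Path("dataset") / "airplane")` before starting on the next concept, so images from different concepts never get mixed up.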

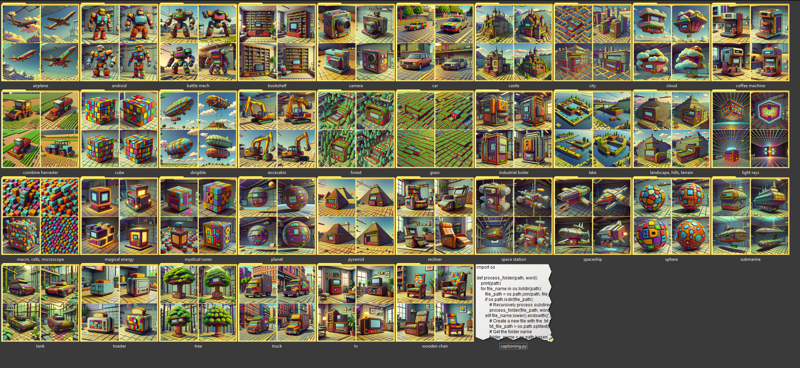

Here's what it can look like after a dataset of generations:

Simple Captioning Script

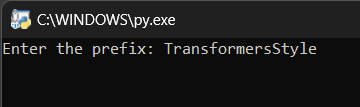

DonMischo wrote a small captioning script. I made a few tweaks to it, and you can download it here.

Place the script in the same folder as your concept folders (remember, one folder per concept). Each folder should be named after its concept, because the script generates the captions for the images inside based on the folder name.

Double-click to run the script, and it will ask you for the "Prefix". This will be at the start of your caption files. If you are going to train with a trigger word, this is what you want to enter here. You can make it whatever you want. This is not a training guide, so I won't cover those topics here.

If everything is successful, you should now have gotten a caption file matching the image name for all images in your folders. See example image here.
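If you can't get hold of the script, the idea is simple enough to sketch yourself. To be clear, this is not DonMischo's script, just a minimal Python approximation of what is described above. The `write_captions` name, the "prefix, folder name" caption format and the list of image extensions are my assumptions, and the prefix is passed as an argument instead of being asked for interactively.

```python
from pathlib import Path

# Assumed image extensions; extend this if your dataset uses something else.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".webp"}


def write_captions(root: Path, prefix: str) -> int:
    """Write one .txt caption per image, based on the name of the folder it is in."""
    written = 0
    for folder in sorted(path for path in root.iterdir() if path.is_dir()):
        for image in sorted(folder.iterdir()):
            if image.suffix.lower() not in IMAGE_EXTENSIONS:
                continue  # skip existing caption files and anything else
            # Assumed format: the prefix (e.g. a trigger word) first, then the concept.
            caption = f"{prefix}, {folder.name}" if prefix else folder.name
            # Same file name as the image, but with a .txt extension.
            image.with_suffix(".txt").write_text(caption, encoding="utf-8")
            written += 1
    return written
```

Calling `write_captions(Path("dataset"), "mystyle")` on a folder layout like the one above would put a file such as `_35097d00-9d83-48cc-8146-41b8af20cca5.txt` containing `mystyle, airplane` next to the matching image in the `airplane` folder. Here `dataset` and `mystyle` are placeholder names.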

You should now be ready to train! Good luck.

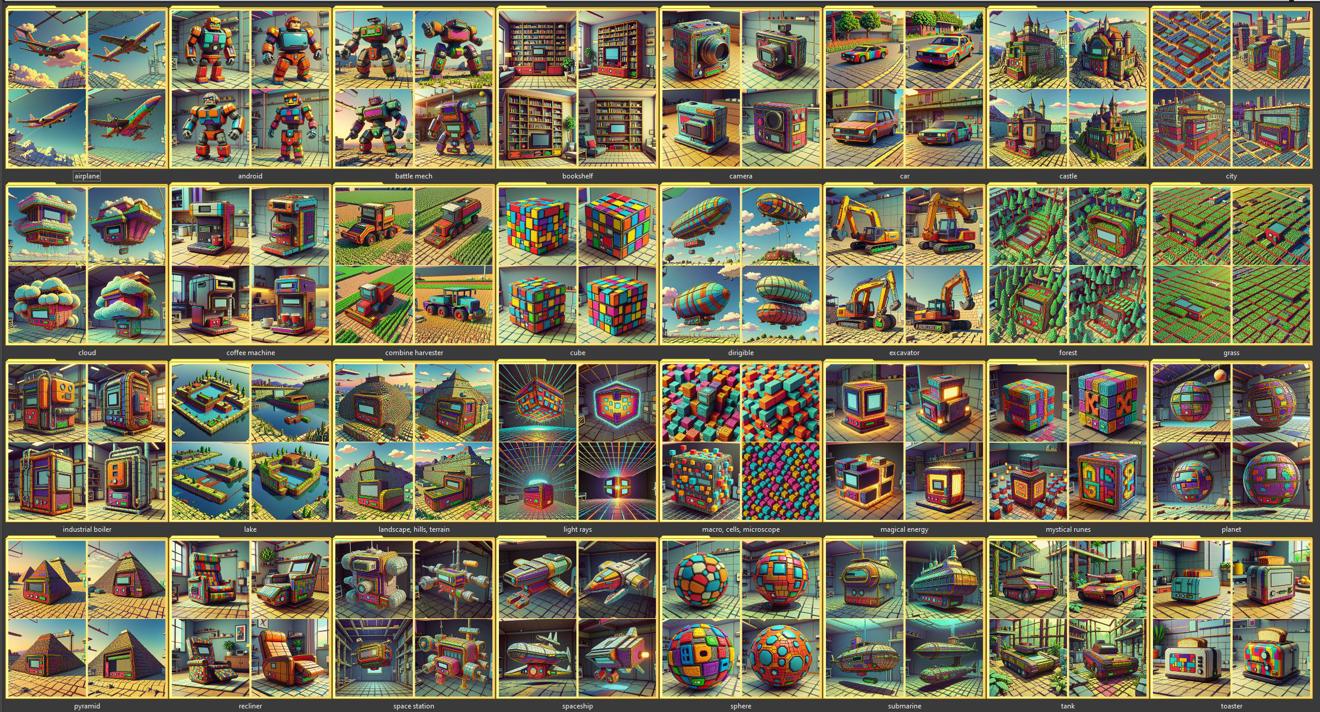

List of Concepts

You should figure out which kinds of concepts work well for your training. But if you want to create something similar to the types of Style / World Morph LoRAs that I have created, here's the list that I use.

Big thanks to Konicony for writing the original guide to this workflow, and to Navimixu for teaching me additional tips and tricks, and for providing the core of the list of concepts below.

Here's the list of generation prompts I use inside of Bing to create the dataset:

majestic airplane soaring through the skies

humanoid mechanical robotic cyborg character inside a spaceship

battle mech on a battlefield

bookshelf in a living room

vintage photo camera on a table in an office

car driving on a road

castle in the mountains

city skyline, closeup daytime

cloud in the skies, fluffy

coffee machine in a kitchen

combine harvester plowing the fields on the farm, planted harvest crops

cube hovering in the air, artifact

dirigible soaring the skies on a sunny day

excavator working the construction site

forest trees, close up of trees, stems and roots, branches in forest

industrial boiler, technical parts, pipes, gauges, in a warehouse

landscape with hills, (terrain:0.7), nature

light rays from a glowing center in space

cells and molecules and macro photography

magical energy swirling magic energies, glowing

mystical runes and magic energies

planet in space, detailed and realistic, stars and cosmos in the background

pyramid in the desert, sand

recliner in a living room

space station in outer space, ISS, hi-tech, starry cosmos background in space

spaceship in outer space, stars and cosmos background

sphere with hi-tech details, alien artifact

submarine deep under water

tank panzer wagon, gun barrel, driving through the jungle and desert, tech

toaster on a kitchen counter in a modern kitchen

lonely single tree, detailed leaves

truck driving in a city

vintage TV in a living room

wooden chair in a living room

And here's the same list, but just the concept names. This is what I use as the caption for the dataset ConceptName CONCEPT

Concepts:

airplane

android

battle mech

bookshelf

camera

car

castle

city

cloud

coffee machine

combine harvester

cube

dirigible

excavator

forest

industrial boiler

landscape, hills, terrain

light rays

macro, cells, microscope

magical energy

mystical runes

planet

pyramid

recliner

space station

spaceship

sphere

submarine

tank

toaster

tree

truck

tv

wooden chair