Introduction

Latent Consistency Models (LCM) have existed for some time within Stable Diffusion. They were designed mainly to reduce the number of sampling steps, and therefore the time it takes to generate an image. LCM has been applied to AI video for some time, but the real breakthrough here is the training of an AnimateDiff motion module using LCM, which substantially improves the quality of the results and makes it possible to use models that previously did not generate good results.

This guide assumes you have installed AnimateDiff. The installation guide is available here:

AnimateDiff: https://civitai.com/articles/2379

WORKFLOWS ARE ATTACHED TO THIS POST. DOWNLOAD THEM FROM ATTACHMENTS IN THE TOP RIGHT CORNER.

System Requirements

A Windows computer with an NVIDIA graphics card with at least 10 GB of VRAM. The system requirements are exactly the same as for using AnimateDiff with SD1.5.

Installing the Dependencies

You need to go to: https://huggingface.co/wangfuyun/AnimateLCM/tree/main

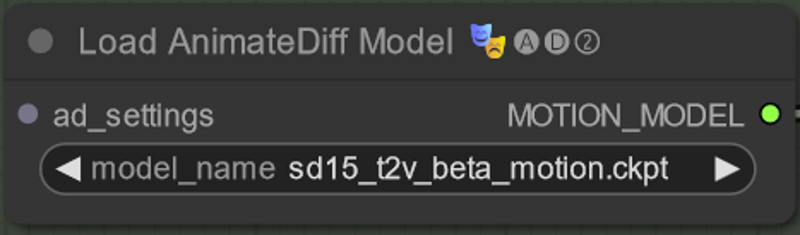

and Download: "sd15_t2v_beta.ckpt"

If you do not have the LCM LORA you also need to download: "sd15_lora_beta.safetensors"
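If you prefer to script the download, here is a minimal Python sketch using only the standard library. It assumes Hugging Face's usual `resolve/main` direct-download URLs and a typical ComfyUI layout where motion modules go in `models/animatediff_models` and LoRAs in `models/loras`. Those folders are assumptions, so check where your install of the AnimateDiff nodes actually looks for motion modules before relying on it.

```python
import urllib.request
from pathlib import Path

REPO_ID = "wangfuyun/AnimateLCM"

# File name -> destination folder relative to the ComfyUI root.
# These folders are assumptions based on a typical AnimateDiff-Evolved setup.
FILES = {
    "sd15_t2v_beta.ckpt": "models/animatediff_models",  # AnimateLCM motion module
    "sd15_lora_beta.safetensors": "models/loras",       # LCM LoRA
}


def resolve_url(repo_id: str, filename: str, revision: str = "main") -> str:
    """Build the direct-download URL for one file in a Hugging Face repo."""
    return f"https://huggingface.co/{repo_id}/resolve/{revision}/{filename}"


def download_all(comfy_root: Path) -> None:
    """Download each file into its folder, skipping files that already exist."""
    for filename, subdir in FILES.items():
        dest_dir = comfy_root / subdir
        dest_dir.mkdir(parents=True, exist_ok=True)
        dest = dest_dir / filename
        if dest.exists():
            print(f"skipping {dest}: already present")
            continue
        url = resolve_url(REPO_ID, filename)
        print(f"downloading {url} -> {dest}")
        urllib.request.urlretrieve(url, dest)
```

Call `download_all(Path("C:/path/to/ComfyUI"))` with your own ComfyUI folder. If you already have the LCM LoRA, remove it from `FILES` first.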

Using AnimateDiff LCM and Settings

I will go through the important settings node by node.

Load the correct motion module!

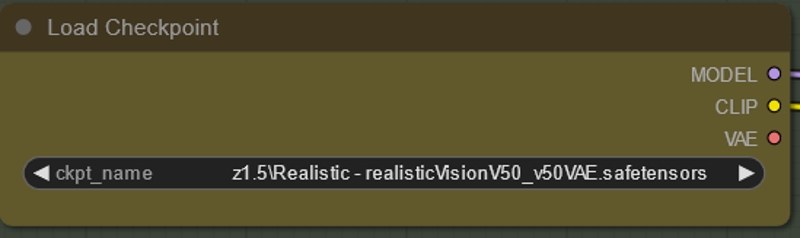

One of the most interesting advantages when it comes to realism is that LCM allows you to use models like RealisticVision, which previously produced only very blurry results with regular AnimateDiff motion modules. So if there is a model that does not play well with the usual AnimateDiff, it is likely to work much better with LCM.

You need to use the LCM LORA together with the motion module. You can definitely experiment with changing its weight for different effects.

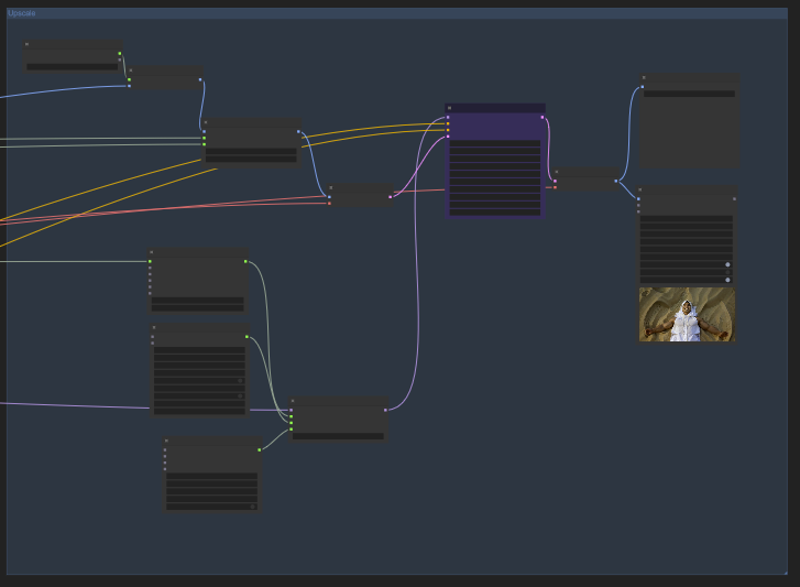

Although this setup may seem a bit overwhelming if you are used to the v1 AnimateDiff nodes, it is really just a standard setup. There are two important settings here. The first is beta_schedule: all of the LCM beta schedules work fine here, and even the AnimateDiff one works too. In my experience, choosing a different schedule may require adjusting your CFG. (Right now I actually like lcm >> sqrt linear the most.)

The other relevant setting is noise_type, which surprisingly has less effect on the result. For this workflow I am using empty noise, which means no noise at all! I think this gives the most stable results, but you can use other noise types (even constant noise, which usually breaks AnimateDiff) to interesting effect.
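When comparing beta schedules it helps to change one thing at a time. The sketch below is only a bookkeeping helper, not a ComfyUI API: it lists every beta_schedule/CFG combination to try, with noise_type and seed held fixed so differences come from the settings alone. The schedule labels, CFG values and fixed seed are my assumptions; use the exact strings from the beta_schedule dropdown in your version of the nodes.

```python
from itertools import product

# Option labels are assumptions: copy the exact strings from the
# beta_schedule dropdown in your version of the AnimateDiff nodes.
BETA_SCHEDULES = ["lcm >> sqrt_linear", "lcm", "sqrt_linear (AnimateDiff)"]
CFG_VALUES = [1.0, 1.5, 2.0]  # placeholder values at the low end


def settings_grid(schedules, cfgs, seed=0, noise_type="empty"):
    """Return one settings dict per (beta_schedule, cfg) pair.

    The seed and noise type stay fixed so that differences between
    runs come from the schedule/CFG pair alone.
    """
    return [
        {"beta_schedule": s, "cfg": c, "noise_type": noise_type, "seed": seed}
        for s, c in product(schedules, cfgs)
    ]
```

Each dict describes one run; set those values on the loader and sampler nodes by hand and compare the outputs side by side.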

I have not gotten good results with anything but the LCM sampler. You can use more or less any scheduler you want.

Deciding on the step count is important here. One of the greatest advantages of the LCM workflow is that a low step count means you can iterate very quickly. However, if you find that you are getting context shift (i.e., things suddenly changing mid-animation), I suggest increasing the step count. Quality does improve a lot with more steps, so I suggest iterating at low steps and rendering the final version at a higher step count, such as 25.
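The iterate-low, finish-high approach can be written down as two presets that differ only in step count and the upscale pass, similar to the 8 step and 25 step workflows attached to this post. This is a hypothetical Python sketch for keeping your settings straight; the class and field names are illustrative, and the CFG value is a placeholder you will need to tune.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RunSettings:
    """Hypothetical bundle of the sampler knobs discussed in this guide."""
    steps: int
    cfg: float
    sampler_name: str = "lcm"  # the LCM sampler; other samplers gave poor results
    upscale: bool = False      # upscale pass muted while iterating


# Draft preset for fast iteration. The cfg value is a placeholder; tune it per prompt.
DRAFT = RunSettings(steps=8, cfg=1.5)


def final_from(draft: RunSettings, steps: int = 25) -> RunSettings:
    """Copy a draft preset, raising only the step count and enabling the upscale."""
    return replace(draft, steps=steps, upscale=True)
```

Because `final_from` copies everything else from the draft, the final render uses exactly the prompt settings you settled on while iterating.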

CFG also behaves very differently with LCM: images burn at higher CFG values, and if it is too high you also get more context shifting in the animation. You can go as low as 1. You might need to increase the weight of parts of your prompt for the model to follow it better. You will have to fine-tune this for your prompt.
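In ComfyUI you raise the weight of part of a prompt with `(text:weight)` syntax, which helps when a low CFG makes the model ignore part of your prompt. The helpers below are a small sketch that builds such a prompt from phrase/weight pairs; the function names are my own, and the weights you need will depend on your prompt and model.

```python
def emphasize(phrase: str, weight: float) -> str:
    """Wrap a phrase in ComfyUI's (text:weight) emphasis syntax."""
    if weight <= 0:
        raise ValueError("weight must be positive")
    return f"({phrase}:{weight:g})"


def build_prompt(parts: list[tuple[str, float]]) -> str:
    """Join (phrase, weight) pairs into one prompt; weight 1.0 is left plain."""
    return ", ".join(
        phrase if weight == 1.0 else emphasize(phrase, weight)
        for phrase, weight in parts
    )
```

For example, `build_prompt([("a woman walking", 1.0), ("red dress", 1.3)])` gives `a woman walking, (red dress:1.3)`.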

The upscale workflow is just one of many possibilities. I would detach or mute it while you are refining your prompt. Be aware that some issues and inconsistencies improve a lot when upscaling to higher resolutions, so once you are happy with a prompt it is worth upscaling as far as your VRAM allows. You can often use a higher CFG in the upscale pass if you wish.
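When you do upscale, it is easiest to raise the scale factor step by step until you run out of VRAM. This small helper is a sketch assuming your upscale pass takes explicit width and height values: it computes a target size and rounds each side down to a multiple of 8, since SD1.5 works on latents at 1/8 of the pixel resolution. Which scale factors you try is up to you.

```python
def upscaled_size(width: int, height: int, scale: float, multiple: int = 8) -> tuple[int, int]:
    """Scale a resolution, rounding each side down to a multiple of `multiple`.

    SD1.5's VAE downsamples by 8, so pixel sizes are kept at multiples of 8.
    """
    def snap(value: float) -> int:
        # Round down to the nearest multiple, never below one multiple.
        return max(multiple, int(value) // multiple * multiple)

    return snap(width * scale), snap(height * scale)
```

For example, a 512x768 animation at a scale of 1.5 becomes 768x1152.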

Workflows

I have uploaded both an 8-step workflow (with the upscale muted for maximum speed) and a 25-step workflow (for better quality) in case you are new to Comfy and don't want to mess around with the settings yourself. They are otherwise identical.

Important Notes/Issues

I will put common issues in this section if they arise.

In Closing

I hope you enjoyed this tutorial. If you did, please consider subscribing to my YouTube channel (https://www.youtube.com/@Inner-Reflections-AI) or following my Instagram/TikTok/Twitter (https://linktr.ee/Inner_Reflections).

If you are a commercial entity and want some presets that might work for different style transformations, feel free to contact me on Reddit or on my social accounts (Instagram and Twitter seem to have the best messaging, so I mostly use those).

If you would like to collab on something or have questions, I am happy to connect on Twitter/Instagram or on my other social accounts.

If you’re going deep into AnimateDiff, you’re welcome to join this Discord for people who are building workflows, tinkering with the models, creating art, etc.

(If you come to the Discord with issues, please find the adsupport channel and use it so that the discussion is in the right place.)

Special Thanks

Kosinkadink - for making the nodes that make AnimateDiff possible (note that he now has a Patreon in case you want to support development of this great tool!)

The AnimateDiff Discord - for the support and technical knowledge to push this space forward

![[GUIDE] ComfyUI AnimateDiff LCM - An Inner-Reflections Guide](https://image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/b773a141-f307-4970-82dc-4301966831a8/width=1320/b773a141-f307-4970-82dc-4301966831a8.jpeg)