tl;dr

Results here: https://civitai.com/images/8125320 (note that there are 3 image grids!)

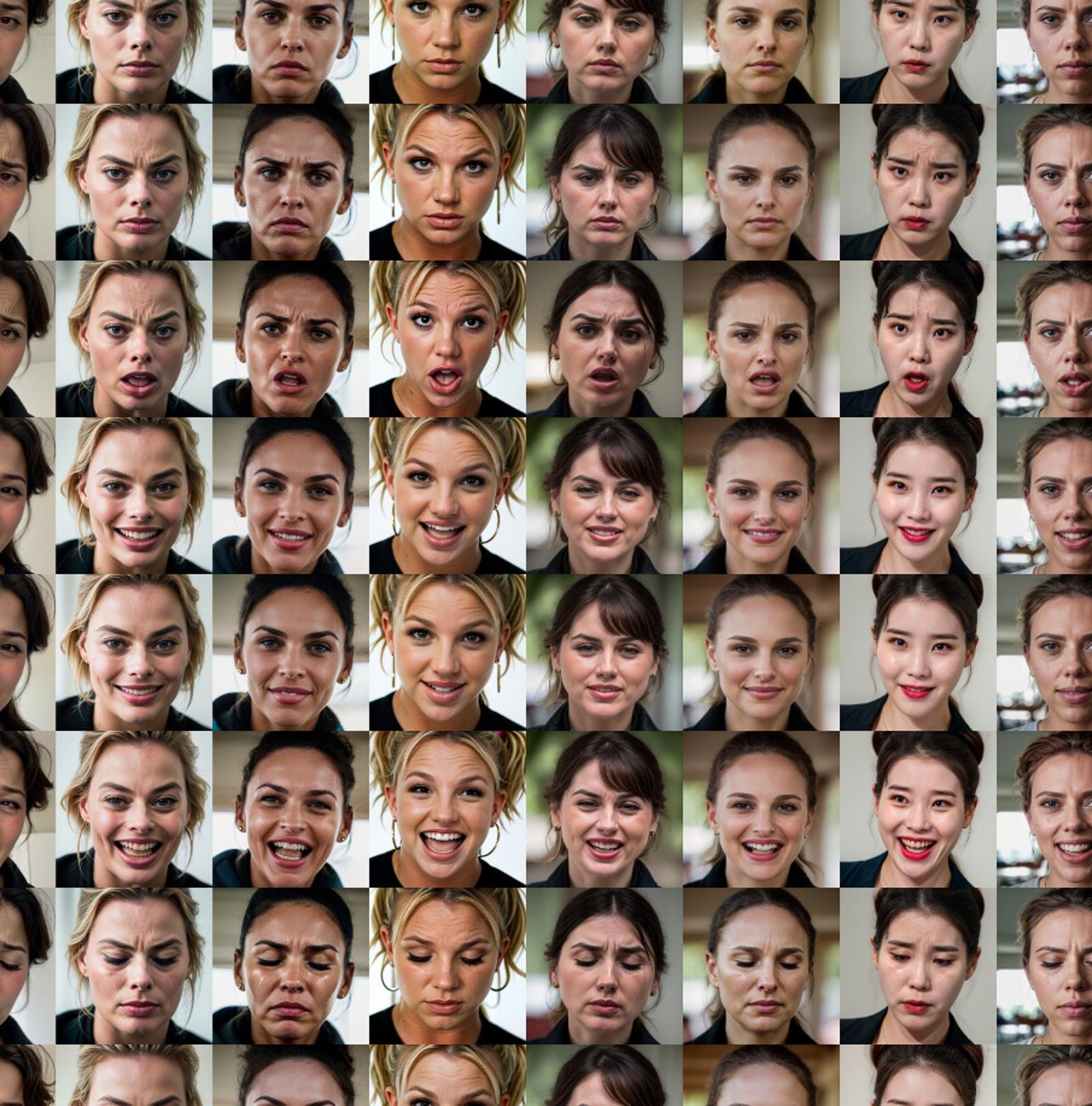

Rows are facial expressions

Columns are character models (LoRAs and embeddings representing celebrities and nobodies)

The first column is a reference (no consistent character, just facial expression). This column demonstrates that the model is capable of generating that expression without any additional tools like LoRAs.

Some character models appear to be more flexible than others with regard to facial expressions

Some facial expressions appear to be more difficult than others to generate, in general

Background

A prerequisite to telling stories with images (e.g. in a game or visual novel) is the ability to generate a consistent character with varying facial expressions. Popular fine-tunes of SD1.5 can generate the most common expressions effortlessly, but anything beyond that is a struggle.

I'm personally tired of seeing AI images with "neutral" or "smiling" expressions. When we look at images of real people, their facial expressions convey personality, depth, nuance, variety, and scale. In comparison, most AI images feel like cloned zombies, contorted to the same generic smile as every other AI generated image.

Considering that it's difficult to achieve interesting facial expressions even for one-off images, it's even more difficult to achieve them for consistent characters. A side effect of training a character embedding or LoRA is that it tends to encapsulate the most common expressions in the dataset (even if the intent of the training was to capture the likeness of the character, not the particular facial expressions used). Since people mostly train their character models on images with neutral or smiling expressions, that's what the character model will be able to portray. Even if the base model could produce a bit more nuance and variety in expressions, it becomes constrained by the character model.

The solution to the problem should be facial expression LoRAs, and indeed, many people have published LoRAs for this specific purpose. Unfortunately these LoRAs seem to either work poorly or not at all (yes, I have tried all of them). For that reason I did not use any facial expression LoRAs in this study. I have recently been collecting datasets with the intent of making some facial expression LoRAs, though I'm not confident I will fare any better than my predecessors.

Research questions

Identify which character models (and by extension, which creators) are more flexible with regard to facial expressions.

Determine if embeddings are more flexible than LoRAs (as people often claim)

Compare the flexibility between celebrity and nobody characters (as celebrity characters are made with images of real people, one might expect them to have more variety and nuance in facial expressions)

Identify which facial expressions might be good candidates for LoRA development (we don't need LoRAs for expressions that are very easy for the base model, and we don't want to expend effort on LoRAs that are too hard for the base model)

Methods

I chose to use Realistic Vision 5.1 as the base model, since it is one of the most popular photorealism models. In the study of facial expressions, photorealism is superior to other styles, because there's only so much nuance you can express with more cartoonish styles.

The prompt

closeup of woman facing viewer, [hair, neck, eyes, lips: neutral face expression: 0.2], RAW photo, 1girl

I used an A1111 script to replace "neutral face expression" in that prompt with different expressions, and "1girl" with different character models.

The idea with this prompt is that we spend the first 20% of steps to force the composition, and then the remaining 80% of steps apply the facial expression. This prompt allows us to have stable columns, where the composition of the image does not change within a column (although it changes a little bit across columns, because the character model is applied to 100% of steps).
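The search-and-replace and the 20% switch described above can be sketched in a few lines of Python. This is only an illustration, not the script that was actually used: the function names, the step count of 20, and the `<lora:example_character:1>` placeholder are assumptions, and the rounding of the switch step in A1111 itself may differ.

```python
def switch_step(total_steps: int, when: float) -> int:
    """Step at which [from:to:when] swaps the 'from' text for the 'to' text.

    Assumption: a fractional `when` below 1 is treated as a share of the
    total sampling steps and truncated to a whole step.
    """
    return int(total_steps * when)


def grid_prompts(template, expr_slot, expressions, char_slot, characters):
    """Build one prompt per (expression, character) cell by plain search-and-replace."""
    grid = []
    for expression in expressions:        # one row per expression
        row = []
        for character in characters:      # one column per character model
            prompt = template.replace(expr_slot, expression)
            prompt = prompt.replace(char_slot, character)
            row.append(prompt)
        grid.append(row)
    return grid


TEMPLATE = (
    "closeup of woman facing viewer, "
    "[hair, neck, eyes, lips: neutral face expression: 0.2], RAW photo, 1girl"
)

# Two expressions from the grids; the LoRA name is a made-up placeholder.
expressions = ["neutral face expression", "laughing hysterically"]
characters = ["1girl", "<lora:example_character:1>"]

grid = grid_prompts(TEMPLATE, "neutral face expression", expressions, "1girl", characters)

print(switch_step(20, 0.2))  # with an assumed 20 steps, the expression takes over at step 4
print(grid[1][1])            # the "laughing hysterically" row, placeholder LoRA column
```

Because only the text after the first colon changes within a column, the composition keywords stay identical for the opening steps of every cell in that column, which is what keeps the columns stable.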

I tried to enforce photorealism with negative prompt "naked, illustration, 3d, drawing, cartoon, anime, 2girls" and also with "RAW photo" in the positive prompt. Although Realistic Vision is very good at photorealism, it was necessary to prompt for photorealism because some of the embeddings were rather cartoonish.

I cherrypicked the seed to produce a good reference for the first column (no consistent character, just facial expressions to demonstrate that the base model can generate those expressions).

How celeb models were chosen

I browsed the most popular celeb embeddings and LoRAs on Civitai

I selected models such that the same celeb was available both as an embedding and as a LoRA (to make better comparisons between LoRAs and embeddings)

I tried to select models from different creators (creators typically use the same methods to produce many models, so a single model from a particular creator will likely work similarly to other models from that creator - therefore, it's more useful to check one model from many creators, rather than checking many models from one creator)

I mostly skipped celebs that I didn't personally recognize (because evaluating character consistency is important here, too, and it's not possible to evaluate if you don't recognize the character)

How nobody models were chosen

I wanted to compare both embeddings and LoRAs, but unfortunately there were so few nobody LoRAs available on Civitai that I decided to look at nobody embeddings only

Again, I selected models from different creators rather than many models from one creator

How expressions were chosen

I experimented with a large variety of expressions

I excluded expressions that the base model was struggling to generate

I excluded or combined some expressions that were too similar in nature (e.g. "frowning" and "disappointed" combined into a single expression)

My selection of expressions is intended to illustrate both what these models are good at, and also what these models are bad at (e.g. the first 3 rows look very similar, I intentionally kept those as an example of bad)

In some cases I combined multiple keywords to produce a specific expression. The most flagrant example of this is the last row, which represents a "flirty" expression with keywords "teasing flirty playful suggestive seductive smirking squinting aroused horny". You can see from the first column reference what it's supposed to look like (and of course, variety would be welcome; it doesn't have to look exactly like this - you know what I mean).

Note that "closed mouth closed smiling" is not a typo. I wanted to hit both "closed mouth" and "mouth closed" with those keywords.
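To make the word-order trick concrete, here is a tiny Python sketch that lists the adjacent word pairs in that keyword string. It only illustrates the phrasing; it makes no claim about how the text encoder actually weighs these tokens, and the helper name is made up for this example.

```python
def adjacent_pairs(keywords: str) -> set:
    """Return every pair of neighbouring words in the keyword string."""
    words = keywords.split()
    return {" ".join(pair) for pair in zip(words, words[1:])}


pairs = adjacent_pairs("closed mouth closed smiling")
print(sorted(pairs))  # ['closed mouth', 'closed smiling', 'mouth closed']
```

Both "closed mouth" and "mouth closed" appear as neighbouring pairs, which is the point of repeating "closed".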

Analysis: Grid 1

Let's begin by looking at the first grid: https://civitai.com/images/8125320

The columns in this grid are celebrity LoRAs. If you look through the first column, which has no character, you will see what the expressions are supposed to look like.

Grid 1 first 3 rows: neutral, bored, frowning

The reference in the first column demonstrates that the visual differences between these expressions are rather subtle. And yet, as humans we have learned to instantly recognize and differentiate between these expressions. Unfortunately, as we look at the celeb LoRAs for the first 3 rows, we can see that the results are almost identical. Basically, these expressions are so close to "neutral" that the neutral expressions from the training data of those models just burn through the nuanced expressions described by our prompts.

Grid 1 row 4: "open mouth confused surprised gasping"

The first column reference image is clearly too exaggerated, too strongly influenced by our keywords. But when used together with LoRAs, the results look surprisingly good. Every LoRA seems to perform well here, except for hannahowo and belle_delphine, which look like they're going for... ahegao look? I'm guessing there are too many ahegao images in the datasets used for those LoRAs, and/or lack of proper captioning.

Grid 1 rows 5-6: smiling with mouth open or closed

This one surprised me, because based on anecdotal experience, it's easy to generate smiling expressions. But it seems that most character LoRAs only know one type of smiling: either they smile with mouth closed, or they smile with mouth open. Both? Not possible it seems. The exceptions here were NataliePortman and Gal_Gadot, which handled both types of smiles perfectly.

Grid 1 row 7: laughing hysterically

Hannahowo looks like it's again failing because of ahegao images in its training set. Margotrobbie fails to produce a distinctive facial expression (looks exactly the same as the open mouth smile on row 5). All the other character LoRAs do a great job laughing hysterically, although some of them start to lose the resemblance to the celeb they are supposed to be (Belle Delphine in particular).

Grid 1 row 8: sad crying eyes closed

This was the only row where I needed to zoom in to tell the successes from the failures. Most LoRAs were able to keep their eyes closed (with the exception of iu and belledelphine, which had eyes partially closed). EmiliaClarke has a sad expression and tears, but the tears don't look natural, more like an artifact. Hannahowo and Margotrobbie are lacking both sad expression and tears. Meganfox does the expression perfectly (although it doesn't really look like Megan Fox if you ask me). Britneyspears, AnaDeArmas and NataliePortman are clear failures. Iu is borderline acceptable. Scarlett Johansson, Belle Delphine, and Gal Gadot look unnatural and weird on this row.

Grid 1 row 9: flirty

I would say all the celeb LoRAs fail here. You can see a little bit of sparkle in the eye here and there, but it's just too subtle. The reference image in the first column is miles ahead.

Analysis: Grid 2

Grid 2: https://civitai.com/images/8125342

The columns in this grid represent celebrity embeddings.

These are the same celebrities as in grid 1. The first thing we notice immediately is that the results are more cartoonish and bear less resemblance compared to the LoRAs in grid 1. LoRAs can simply capture the likeness more accurately, as they can bring new information into the neural network, compared to embeddings which merely activate pieces of existing information in the network.

I'm excluding NataliePortman ("sjc0_jg") from the analysis, because the results don't look like her at all. This issue was related to the prompt; apparently that embedding only works with a very specific prompt.

Grid 2 first 3 rows: neutral, bored, frowning

Rows look almost identical; same problem as with LoRAs

Grid 2 row 4: "open mouth confused surprised gasping"

All embeddings seem to handle this expression nicely. Some skin wrinkle artifacts here and there, maybe CFG was too high for these embeddings? Hannahowo and belledelphine embeddings didn't have the same problem as the corresponding LoRAs (no ahegao related issues visible here). I suspect this is related to the datasets used in training, and not so much related to innate differences between embeddings and LoRAs more generally.

Grid 2 rows 5-6: smiling with mouth open or closed

Looks like these embeddings handle this distinction slightly better than the LoRAs, but the result is still far from perfect. For example, robbie3 seems to only smile with mouth closed (unless it's "laughing hysterically", which looks like it's barely smiling with mouth open).

Grid 2 row 7: laughing hysterically

With the exception of iulje and belledelphine, all embeddings were able to laugh in a manner that is visually distinct from "smiling with mouth open". Nice.

Grid 2 row 8: sad crying eyes closed

Surprisingly, it seems that the LoRAs (in grid1) did a better job of generating sad expressions and tears, compared to the embeddings (in grid2). In my opinion every single embedding failed here.

Grid 2 row 9: flirty

Embeddings don't seem to flirt any better than LoRAs. Again, sparkle in the eye here and there, but too subtle. When comparing the LoRAs in grid1 to the embeddings in grid2, one notable exception is the belledelphine embedding, which appears to lose all resemblance when it's spammed with many keywords like this (I don't think the embedding had much resemblance to Belle Delphine even on the other rows, but whatever resemblance it had, it lost it on the last row).

Analysis: Grid 3

Grid 3: https://civitai.com/images/8125391

The columns in this grid represent nobody embeddings.

I am excluding ca45mv7-100 and MarileeSD15 from the analysis, because they appear to fail with very similar "eyes closed" expressions on almost every row. I have no idea why they broke like that.

Grid 3 first 3 rows: neutral, bored, frowning

All embeddings fail in the same way as the celeb LoRAs and celeb embeddings.

Grid 3 row 4: "open mouth confused surprised gasping"

These nobody results appear to be worse than celeb results. Notable exceptions are z03-001 and evalyn_noexist, which do a good job at this expression.

Grid 3 rows 5-6: smiling with mouth open or closed

Only evalyn_noexist succeeds at smiling both ways.

Grid 3 row 7: laughing hysterically

Only z03-001 succeeds at laughing. The others look like smiling.

Grid 3 row 8: sad crying eyes closed

Tears look very artifact-y. Maybe CFG was too high? All nobody embeddings lack the sad expression.

Grid 3 row 9: flirty

Complete fail for all nobody embeddings, except for z03-001, which does a good job. I would say that KimberlyNobody also looks flirtatious, but then again, it looks just like its neutral expression, so I will count that as a fail.

Conclusions

It's time to connect the dots and answer our research questions as best we can.

Best characters for facial expressions

From the nobody embeddings, evalyn_noexist and z03-001 were clear winners, far surpassing the other embeddings in this test.

Evalyn_noexist is created by galaxytimemachine, who has also published some other nobody embeddings, so maybe those work well with facial expressions as well?

z03-001 is "Zoe" by fspn2000. They have also published more nobody embeddings, so worth a look!
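As a rough cross-check, the Grid 3 notes can be tallied in a few lines of Python. This is a coarse sketch: it only counts the embeddings explicitly named as succeeding on each row in the analysis above, treats every row as equally important, and the variable names are made up for this example.

```python
from collections import Counter

# Nobody embeddings named as succeeding on each Grid 3 row, per the written notes.
# Rows where no embedding was named as a success are left empty.
grid3_successes = {
    "neutral, bored, frowning": [],
    "open mouth confused surprised gasping": ["z03-001", "evalyn_noexist"],
    "smiling with mouth open or closed": ["evalyn_noexist"],
    "laughing hysterically": ["z03-001"],
    "sad crying eyes closed": [],
    "flirty": ["z03-001"],
}

tally = Counter(name for names in grid3_successes.values() for name in names)
print(tally.most_common())  # [('z03-001', 3), ('evalyn_noexist', 2)]
```

No other nobody embedding is named as a success on any row, which matches the "clear winners" reading.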

Are embeddings more flexible than LoRAs? (as people often claim)

No. In the context of facial expressions, the answer is a clear no: the embeddings were not more flexible, and if anything the LoRAs seemed slightly better.

Expression comparison between celebrity and nobody characters

In general, celebrities appeared to work better than nobodies.

On the other hand, two of the nobodies seemed to work better than any of the celeb characters.

Easy facial expression candidates for LoRA development, based on what works with the base model but not in combination with characters

bored apathetic tired

frowning disappointed

open mouth confused surprised gasping

sad crying

teasing flirty playful suggestive seductive smirking squinting aroused horny

licking and sticking out tongue (excluded from grids to avoid sexual imagery)

Difficult facial expression candidates for LoRA development, based on what doesn't even work with the base model

excited (partial success with base model)

looking away (very limited success with base model)

pouting (absolutely no success with base model)

pleading puppy dog eyes (absolutely no success with base model)

embarrassed blushing looking down (weird results from base model, somewhat responsive)

suspicious skeptical raising eyebrow skeptycally (very limited success with base model)

kissing with eyes closed and puckered lips (very limited success with base model)

biting lip (this seems to be the most difficult of all these expressions)