As mentioned in my other article, my current PC specs are very limited: a 3060 with only 12 GB of VRAM.

With this in mind, I tend to avoid using too many LoRAs, as they eat up my VRAM. Even with ComfyUI, I hit a deadlock where models have to be swapped out of VRAM in order to progress, causing everything to lock up.

My preference is to use embeddings (textual inversions). There is a large library of very good embeddings of real or virtual people for SD 1.5; however, these are not compatible with SDXL.

I have also been following Matteo's series of videos on his excellent extension, IPAdapter Plus, in an attempt to match AI generations as closely as possible to the actual facial features of a source subject.

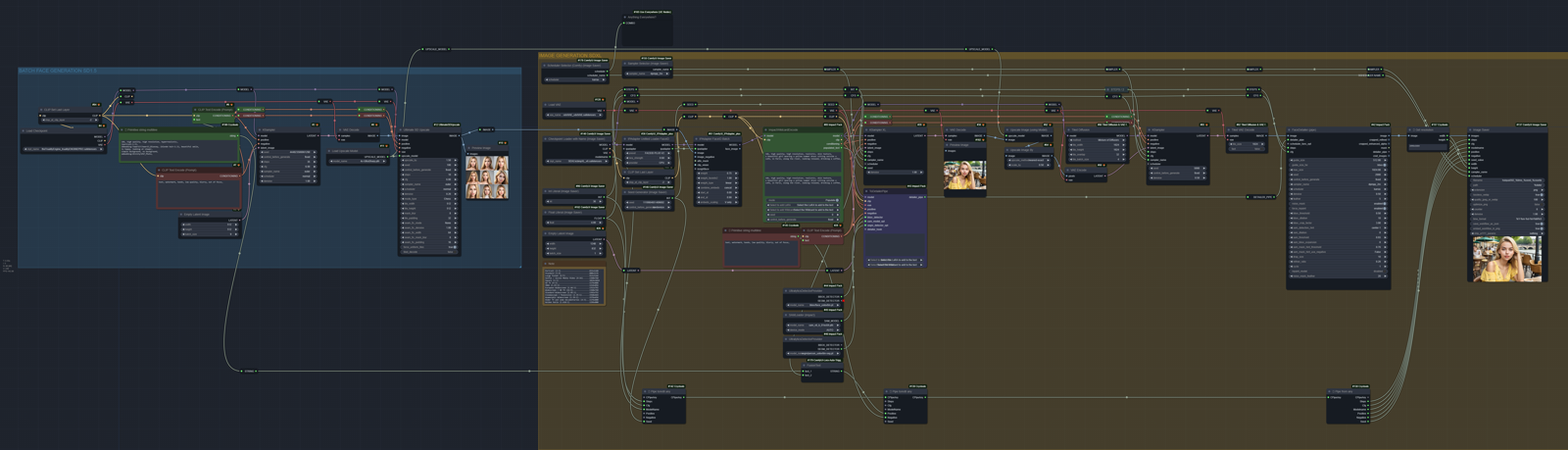

So I decided to create a workflow, basically an extension of my simplified ComfyUI workflow for Civitai, that mashes these concepts together.

The general steps are:

1. Use SD 1.5 with appropriate prompting and an embedding to generate a series of 9 different images, prompted for the face only, and upscale them. Face-only prompting is important because IPAdapter will fail if it doesn't detect a face. There is no need to use a face detailer for this step, as 512x512 should be large enough to generate a fairly detailed face and the upscale step will fix the rest. Example below using the Truality Engine and Tower13 Girls - Bluesey.

2. Run these 9 source images through the batch IPAdapter and hook it up to the SDXL workflow.

3. Use Pony or SDXL to generate the scene image, including an upscaler step and a face detailer. In this example I used ICBINP XL. I concentrate on prompting the scene rather than the character.

From this point to avoid confusion I will refer to the SD1.5 part as "source material" and SDXL as "scene".

Yes, I could have done it in two separate steps, generating a folder of images to use as source material and then loading it into the IPAdapter, but this combined workflow lets me customise the features of the source material and the final scene independently of each other in "real time".

I take advantage of the fact that ComfyUI caches workflow results: as long as I don't change the seed or prompt for the source material, it is not regenerated after the first run, even if I change the scene seed or prompt.
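To make the caching idea concrete, here is a toy Python sketch. It is not ComfyUI's actual implementation; `CachedStage`, `make_source_faces` and `make_scene` are hypothetical stand-ins that return strings instead of images. The point is only that a stage re-runs when its own inputs change, so changing the scene prompt or seed leaves the source faces untouched.

```python
class CachedStage:
    """Toy stand-in for a cached node: re-runs only when its inputs change."""

    def __init__(self, fn):
        self.fn = fn
        self.runs = 0        # how many times the stage actually executed
        self._key = None     # inputs used for the last real execution
        self._out = None     # output of the last real execution

    def __call__(self, *inputs):
        if self.runs == 0 or inputs != self._key:
            self._out = self.fn(*inputs)
            self._key = inputs
            self.runs += 1
        return self._out


def make_source_faces(prompt, seed, count=9):
    # Hypothetical placeholder for "SD 1.5 + embedding + upscale".
    return tuple(f"face[{prompt}|seed={seed + i}]" for i in range(count))


def make_scene(faces, prompt, seed):
    # Hypothetical placeholder for "SDXL + IPAdapter + face detailer".
    return f"scene[{prompt}|seed={seed}|refs={len(faces)}]"


source = CachedStage(make_source_faces)
scene = CachedStage(make_scene)


def run(source_prompt, source_seed, scene_prompt, scene_seed):
    faces = source(source_prompt, source_seed)
    return scene(faces, scene_prompt, scene_seed)


run("portrait, red hair", 1, "astronaut in a spaceship", 100)
run("portrait, red hair", 1, "astronaut on a beach", 101)   # only the scene re-runs
print(source.runs, scene.runs)  # 1 2
run("portrait, blue hair", 1, "astronaut on a beach", 101)  # new faces, so both re-run
print(source.runs, scene.runs)  # 2 3
```

Note that the last call keeps the scene prompt and seed fixed, yet the scene still re-runs because its reference faces changed.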

At the end of the generations, I could then save the set of source faces to be used later on in a workflow that replaces the whole source material step with a batch image loader module.
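Outside ComfyUI, the "save now, batch-load later" idea could look roughly like the sketch below. This is only an illustration using the Python standard library: the helper names, the folder layout and the `face_01.png` naming scheme are my own assumptions, and the faces are assumed to be already-encoded PNG bytes.

```python
from pathlib import Path

# Assumed set of image extensions a batch loader would pick up.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def save_faces(faces: list[bytes], folder: Path, prefix: str = "face") -> list[Path]:
    """Write each encoded image to the folder with a zero-padded index."""
    folder.mkdir(parents=True, exist_ok=True)
    paths = []
    for i, data in enumerate(faces, start=1):
        path = folder / f"{prefix}_{i:02d}.png"
        path.write_bytes(data)
        paths.append(path)
    return paths


def list_faces(folder: Path) -> list[Path]:
    """Return the image files in the folder in a stable, sorted order."""
    return sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
```

Sorting the file list keeps the batch order the same between runs, which helps when comparing scenes generated from the same set of faces.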

If I happen to generate a scene where I need to change certain facial features (e.g. hair or eye color), I don't change the scene prompt. Instead, I set the scene seed to fixed so I don't lose the scene, then go back to the source material and change the prompt there to regenerate 9 different faces.

This has the added benefit of mitigating color bleed. If I want a character with a different eye color, makeup, or maybe even expression, I prompt for it in the source material and not the scene, thus maintaining the overall composition of the scene.

Unfortunately, Civitai does not or cannot at this time recognise that two models/checkpoints can be used in one workflow, so manual or automatic attribution for both models is impossible. In this workflow I have only linked the scene model to the metadata, so that is the one Civitai will pick up automatically.

Anyway, this is all just experimentation and not gospel. Play around with the workflow and the numbers. And as Bob Ross liked to say: "happy little accidents".

The workflow PNG is attached; drag it into ComfyUI to load the workflow.

Below I used SatPony-Niji with the same source material.

Below I used vxpXL Hyper with the scene prompt "female astronaut, wearing a tight latex spacesuit, inside a spaceship, cosmos in the background, dynamic angles, creative angles," while changing the hair color of Truality Campus - Heather in the source material. Note the minimal color bleed: color is not mentioned in the scene prompt, though a few color accents may be coming through. This used a slightly modified workflow.

More examples: https://civitai.com/posts/4602142