I've created a simple captioning script that runs the Joy-Caption script on all files in a folder.

https://github.com/MNeMoNiCuZ/joy-caption-batch

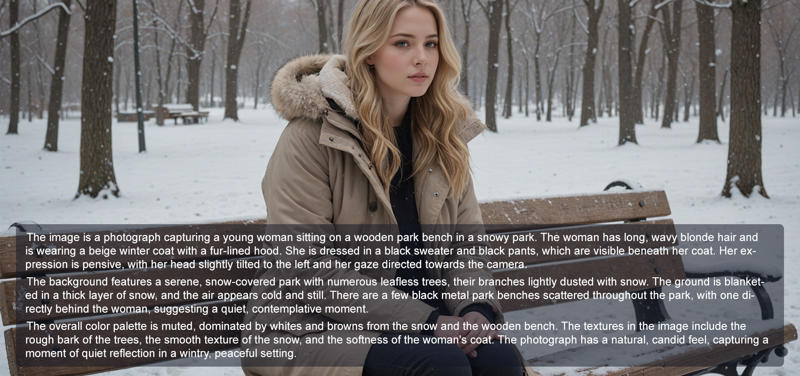

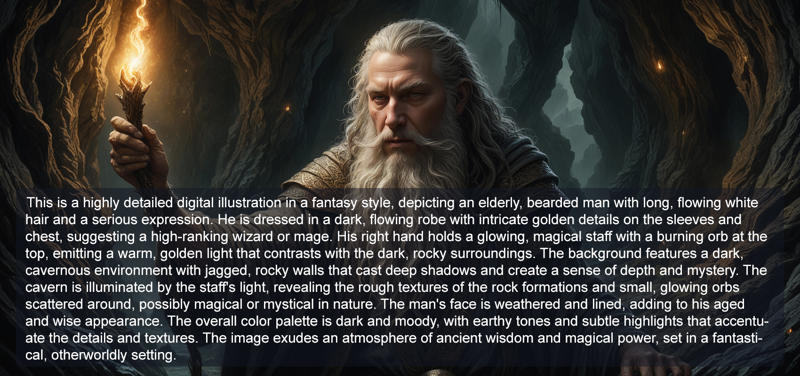

Examples:

Joy-Caption - Batch

This tool uses Joy-Caption (still in pre-alpha) to caption image files in a batch.

Place all images you wish to caption in the /input directory and run py batch.py.
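As a rough illustration of what "caption every image in a folder" involves, here is a minimal sketch that collects image files from an input directory and pairs each one with a same-named .txt caption file. The function names, the extension list, and the .txt output convention are assumptions made for this example and are not taken from batch.py.

```python
from pathlib import Path

# Assumed set of image extensions; the real script may accept a different list.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".webp", ".bmp"}


def find_images(input_dir):
    """Return all image files directly inside input_dir, sorted by path."""
    folder = Path(input_dir)
    return sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    )


def caption_path_for(image_path):
    """Hypothetical convention: the caption sits next to the image as a .txt file."""
    return Path(image_path).with_suffix(".txt")


def pending_images(input_dir):
    """Images in input_dir that do not have a caption file yet."""
    return [p for p in find_images(input_dir) if not caption_path_for(p).exists()]
```

Skipping images that already have a caption file is a design choice in this sketch: it lets an interrupted run be restarted without redoing finished work.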

Setup

1. Git clone this repository: git clone https://github.com/MNeMoNiCuZ/joy-caption-batch/

2. (Optional) Create a virtual environment for your setup. Use Python 3.9 to 3.11. Do not use 3.12. Feel free to use venv_create.bat for a simple Windows setup. Activate your venv.

3. Run pip install -r requirements.txt (this is done automatically with venv_create.bat).

4. Install PyTorch with CUDA support matching your installed CUDA version. Run nvcc --version to find out which CUDA is your default.
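Once setup is done, a quick sanity check can confirm that the interpreter falls in the recommended range and that PyTorch was built with CUDA. This is a minimal sketch and not part of the repository; the helper names python_version_supported and describe_torch are made up for this example.

```python
import sys


def python_version_supported(version_info=None):
    """True for Python 3.9 through 3.11, the range the setup steps recommend."""
    major, minor = (version_info or sys.version_info)[:2]
    return major == 3 and 9 <= minor <= 11


def describe_torch():
    """Report the installed PyTorch build, or note that it is missing."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"
    return (
        f"PyTorch {torch.__version__}, built for CUDA {torch.version.cuda}, "
        f"GPU available: {torch.cuda.is_available()}"
    )


if __name__ == "__main__":
    print("Python version supported:", python_version_supported())
    print(describe_torch())
```

If the CUDA version reported here is None, or differs from what nvcc --version shows, the installed PyTorch build probably does not match your CUDA setup.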

Low VRAM Mode

Low VRAM mode is now supported: set LOW_VRAM_MODE=true in batch.py.

This uses a llama3-8b-bnb-4bit quantized model from unsloth.
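A flag like this usually just switches which language model gets loaded. The sketch below shows that pattern only. Both model identifiers and the function name select_language_model are assumptions for illustration, so check batch.py for the values it actually uses.

```python
# Flag name taken from the text above; everything else here is assumed.
LOW_VRAM_MODE = True

# Assumed Hugging Face model identifiers, not confirmed against batch.py.
FULL_MODEL = "meta-llama/Meta-Llama-3.1-8B"
LOW_VRAM_MODEL = "unsloth/llama-3-8b-bnb-4bit"  # pre-quantized 4-bit weights


def select_language_model(low_vram):
    """Pick the smaller 4-bit model when low VRAM mode is enabled."""
    return LOW_VRAM_MODEL if low_vram else FULL_MODEL


if __name__ == "__main__":
    print("Loading language model:", select_language_model(LOW_VRAM_MODE))
```

A 4-bit quantized model needs much less GPU memory than the full-precision weights, which may come at some cost in caption quality.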

Requirements

Tested on Python 3.10 and 3.11.

Tested on PyTorch with CUDA 12.1.

The original app and source are on Hugging Face.

WARNING:

This is just a captioning tool. This may not be the best way to train Flux models. We don't know the best practices yet. See the conversation in the discussion below.

If you have any information to add to this, please share below <3

![[Tutorial + Tool] Caption files for Flux training [SFW + NSFW]](https://image.civitai.com/xG1nkqKTMzGDvpLrqFT7WA/a93d2601-77a2-4cde-9791-2cb2517f4921/width=1320/Man.jpeg)